Migrate Spark projects from Compose for Data Lakes 6.6 to Qlik Compose 2021.8

May 10, 2022 8:10:39 AM

Mar 17, 2022 8:35:58 AM

This is part 4 of 4 in a series of articles about migrating Spark projects from Compose for Data Lakes 6.6 (C4DL 6.6) to Qlik Compose 2021.8 (Gen2).

Different Migration Modules for customers

- Migration Pre-Requisites

- Databricks to Databricks

- Hive to Hive supported version

Module 4: Migrate Spark projects from C4DL 6.6 to Gen2

There are two migration paths for a Spark project. Choose either one to complete the migration.

Path 1: Clean up the storage database and the Replicate landing database (including the underlying files for attrep_cdc_partition). Then reload the Replicate task and start the Compose tasks as if this were a new project. This path was covered in the first demo.

Path 2: Follow the migration path. All the required steps are explained in this document.

Here are the migration steps (Path 2) if you don’t want to reload the Replicate task. With this path, you completely migrate your project definition and data to Gen2.

- Install Qlik Compose 2021.8 on a dedicated machine or on the same machine where C4DL 6.6 is installed.

- Select a project in C4DL 6.6 and perform the steps below.

- Make sure the external storage tables have been created before you start the migration.

- Run the CDC task one last time before migrating the data, and disable any schedules on the tasks.

- Run the compactor job before migrating.

- Create the deployment package → Suresh_Spark_Hive_deployment_<datetime>.zip

- Create a new Hive project in 2021.8 with project type ODS+HDS. Because the data must also be migrated, create a new storage database with a different name (the database used by the C4DL project cannot be reused) and specify it in the Data Lake connection. Test the connection to make sure the correct drivers for your data lake are installed. You now have an empty Gen2 project with a storage connection.

- Deploy the projects on Qlik Compose 2021.8 and migrate the data

- Migrate the project definitions

- Log in to the Qlik Compose 2021.8 CLI and run the command below to create a compatible package.

ComposeCli.exe adjust_cfdl_project --project Suresh_Spark_Hive --infile "C:\Program Files\Qlik\C4DL66\Suresh_Spark_Hive_deployment_<datetime>.zip"

- The adjusted package is created at C:\Program Files\Qlik\Compose\data\projects\Suresh_Spark_Hive\deployment_package\Suresh_Spark_Hive_<datetime>__QlikComposeDLMigration.zip

- Deploy the adjusted deployment package on the Gen2 project.

- Validate the model and create the storage tables.

- Migrate the data

- Generate the tasks, then execute the data migration SQL script from a notebook.

- Generate the data migration script with the Qlik Compose 2021.8 CLI command below, then run it on the storage database using an external tool.

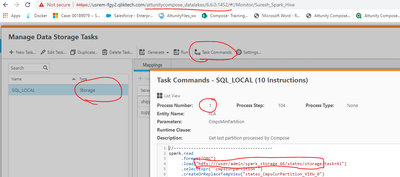

ComposeCli.exe create_cfdl_data_migration_script --project Suresh_Hive_HDS --infile "C:\Program Files\Qlik\C4DL66\Suresh_Hive_HDS_deployment_<datetime>.zip" --states_path "/user/admin/spark_storage_66/states/storage"

You can get the storage path (the value for --states_path) from the C4DL 6.6 CDC task. You do not need to include the task number in the path. Review the command and adapt it to your environment.

- Verify that the data is correct.

NOTE:

- Path 2 requires a new storage database because the "Migrate the data" step runs select * from the C4DL 6.6 storage database and inserts the results into the Qlik Compose 2021.8 storage database.

- If you want, you can run the C4DL project and the Gen2 project in parallel for a few days.
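One simple way to approach the "verify the data" step is to compare row counts per table between the two storage databases. The sketch below is an illustration under stated assumptions, not part of the Compose tooling. The database names `c4dl66_storage` and `gen2_storage`, the table names and the counts are hypothetical. It also assumes that the migration copies rows one-to-one and that table names match in both databases, which may not hold in your project.

```python
from typing import Dict, List


def count_query(database: str, table: str) -> str:
    """Row-count query for one table; backticks quote the identifiers (Hive/Spark SQL style)."""
    return f"SELECT COUNT(*) FROM `{database}`.`{table}`"


def mismatched_tables(old_counts: Dict[str, int], new_counts: Dict[str, int]) -> List[str]:
    """Tables whose row counts differ, or that are missing on one side."""
    tables = sorted(set(old_counts) | set(new_counts))
    return [t for t in tables if old_counts.get(t) != new_counts.get(t)]


# Hypothetical counts, as collected by running count_query() against each database.
old = {"orders": 1200, "customers": 300}
new = {"orders": 1200, "customers": 299}

print(count_query("c4dl66_storage", "orders"))
print(mismatched_tables(old, new))  # ['customers']
```

Matching counts do not prove that the content is identical. Treat this as a first check before comparing sample rows.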

After migration, you can check or run:

- ODS and HDS live views before running CDC

- CDC with updates\inserts\deletes

- CDC with reload event

- schema evolution: run schema evolution and skip all detected changes, to set the context

Also set up:

- workflow

- scheduler

- notification

- work with multiple replicate servers

You can watch the demo video "How to Migrate Spark projects from C4DL66 to Gen2".

Migration Notes & Limitations

General

- Current assumption: C4DL 6.6 and Gen2 use the same cluster.

- Replicate needs to support speed partition mode.

- After the project has been migrated, run the CLI to create the migration script.

- For a Spark project, the user should provide the path to the context, e.g., 'hdfs:///user/admin/spark_storage_66/states/storage'

Limitations:

- Due to a bug in Hive, partitions can’t be created dynamically. Instead, the status table in C4DL 6.6 is read and the script is created with hardcoded partition values. See https://issues.apache.org/jira/browse/HIVE-24163

- Due to a bug in Hive, time zones are not always calculated correctly and there is no stable way to get UTC time. The UTC time is therefore taken on the Compose server and hardcoded into the script.

- Because of the limitations above, the script should be created close to the time of execution. Do not execute old scripts.

- For an ODS table in C4DL 6.6, there is no easy way to determine the header__oper (I\U) for the migration, so 'I' is used.

- In case of multiple mappings for an entity (not supported in Gen2), the first active mapping is taken.
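Because the generated script contains hardcoded partition values and a hardcoded UTC time, a small guard can help avoid running a stale script by accident. The following Python sketch checks the script file's modification time before execution. The 30-minute limit and the function name are assumptions. The article only says that the script should be created close to the time of execution.

```python
import os
import time
from typing import Optional


def script_is_fresh(script_path: str,
                    max_age_minutes: float = 30.0,
                    now: Optional[float] = None) -> bool:
    """Return True if the script file was modified within the last max_age_minutes.

    `now` is a Unix timestamp and defaults to the current time. It exists
    mainly so the check can be tested deterministically.
    """
    current = time.time() if now is None else now
    age_seconds = current - os.path.getmtime(script_path)
    return age_seconds <= max_age_minutes * 60
```

A wrapper could call `script_is_fresh(path)` and regenerate the script with the CLI instead of executing it when the result is `False`.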

Migration flow

Spark (only HDS)

- requires external tables to be created

- run CDC in C4DL 6.6

- run compactor

- create adjusted package

- deploy the adjusted package to Qlik Compose

- create external tables in Qlik Compose

- create migration script

- run migration script in external tool

- verify the data

Hive:

- validate storage and run CDC tasks in C4DL 6.6

- create adjusted package

- deploy the adjusted package to Qlik Compose

- create tables in Qlik Compose

- create migration script

- run migration script in external tool

- verify the data

Databricks:

- Set up a Databricks project with delete records settings and 3 landings. Perform some inserts, updates and deletes. Run full load and CDC.

- create a deployment

- run 'adjust deployment' cli command

- create the storage

- run the 'create data migration script' CLI command, then run the generated script on the database

- verify the data is correct

- view live views with changes. run cdc. run cdc with reload event.

Before the migration, prepare an environment with the following settings:

- non-default settings for dwh_prefix, prefix for Compose columns, from_date\to_date

- model with ods and hds entities

- rename entity\attribute

- mappings with expression and lookup

- no mappings: generating the data migration script fails with an error (No active mapping was found for entity: `region2`). The user should remove the entity first and run the CLI again.

- multiple mappings: more than one mapping per entity is not supported, even if the other mappings are not active. Running the adjust deployment CLI fails with an error (Entity: `products2` has multiple mappings: `Map_products_landing, Map_products2_1`).

- soft\hard delete\do nothing

- exclude TD

- The storage contains I, U, D records

- hds entity: inserts, updates and deleted records will be displayed with I, U and D accordingly

- ods entity: inserts and updates will be displayed with I, deleted with D

- Run schema evolution, including the last CDC task run in C4DL 6.6, if it is enabled.
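The two mapping conditions in the list above can be checked before running the CLI, so that problem entities are known in advance. The sketch below is a hypothetical pre-check written in Python. It does not read a real Compose project: the `model` dictionary, its layout (entity name to a list of mapping name and active flag) and the function name are assumptions. Only the two rules come from the list above.

```python
from typing import Dict, List, Tuple

# Hypothetical model snapshot: entity name -> list of (mapping name, is_active).
Mappings = Dict[str, List[Tuple[str, bool]]]


def check_mappings(model: Mappings) -> Dict[str, List[str]]:
    """Group entities by the mapping problems described in the list above."""
    problems: Dict[str, List[str]] = {"multiple_mappings": [], "no_active_mapping": []}
    for entity, mappings in model.items():
        if len(mappings) > 1:
            # Reported by the adjust deployment CLI, even if only one mapping is active.
            problems["multiple_mappings"].append(entity)
        elif not any(active for _, active in mappings):
            # Reported when generating the data migration script.
            problems["no_active_mapping"].append(entity)
    return problems


model = {
    "orders": [("Map_orders", True)],
    "region2": [],
    "products2": [("Map_products_landing", True), ("Map_products2_1", False)],
}
print(check_mappings(model))
```

In this example, `products2` is listed under `multiple_mappings` and `region2` under `no_active_mapping`, matching the two error messages quoted above.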

After migration, check or run:

- ODS and HDS live views before running CDC (with some updates\inserts\deletes)

- cdc with updates\inserts\deletes

- cdc with reload event

- schema evolution: the user should run schema evolution and skip all detected changes, to set the context

Also set up:

- workflow

- scheduler

- notification

- work with multiple replicate servers

Environment

- From Compose for Data Lakes 6.6

- To Qlik Compose 2021.8

The information in this article is provided as-is and to be used at own discretion. Depending on tool(s) used, customization(s), and/or other factors ongoing support on the solution below may not be provided by Qlik Support.