Unlock a world of possibilities! Login now and discover the exclusive benefits awaiting you.

- Qlik Community

- :

- Forums

- :

- Data Integration

- :

- Qlik

- :

- Qlik Compose for Data Warehouses

- :

- Re: Qlik Compose -- Data Loading Questions

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Qlik Compose -- Data Loading Questions

Hello,

I am new to Qlik Compose and our company just started implementing this and we are in the initial stages. I have below questions and looking for some clarifications on this.

- I see that we can create full load and CDC tasks separately to load from landing tables to DV. We often have a scenario where we do full load for some tables (low volume tables) and incremental load for high volume tables. Is it possible to configure a task to have a mix of both -- some tables in full load mode and some tables in CDC mode (under a single task) ?

- When loading high volume tables first time in the full load mode, are there any design patterns (like parallel processing, partitioning, processing subsets of data in multiple runs etc) to improve the performance ?

- Regarding Multi table ETL/Single table ETL, Is there any difference when they get executed ?

- Once the compose process the data from “_ct” tables, data will be either purged or archived from the “_ct” tables. If I want to reload last 2-3 days of data, Is it possible or is there a way to do this ?

- While doing a CDC load, I can only use “source type” as table whereas in Full load I can use all the three source types. Are there any other limitations/differences when doing CDC vs Full load?

Thanks alot for taking time to read my questions

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @mopidesp - welcome to the forum!

Some great questions concerning Compose understanding. Apologies in advance for the long reply, but hopefully this is helpful!

- Full and CDC tasks.

- You cannot combine CDC and Full load into a single ETL Set today. Instead you would need 2 ETL sets – 1 for full load and 1 for CDC. These could be executed together in a workflow. Note that if you have dependencies between these (ie Category <Full> -> Subcategory (CDC) -> SubSubCategory (Full) ) you do not need to have 3 ETL sets execute. You can simply run the Full then CDC work loads. Compose will infer missing members (i.e. the subcat records in this example) and data will be “whole” after processing.

- Compose ETL sets are executing SET-based SQL so you are getting native parallelism from the underlying data warehouse engine. Having said that, if you have a few transactional type tables with very large records you may want to

- put them in their own ETL Set for initial load purposes.

- Use a table-driven filter and execute the ETL Set multiple times with specific ranges (e.g. date ranges for “transaction” tables or natural key ranges). When I say table driven filter – create a table to hold the data ranges and in the mapping filter use a condition like “TRANASCTION_DATE IN (SELECT DATES_TO_PROCESS FROM FilterTable ) “. You can execute the process and have a Post-Load ETL step to simply insert / set the new set of filters – then execute the process again until no more data to process.

- If you have existing history you want to load (e.g. an existing set of Type 2 data for dimensional / reference data) you should load from beginning of time to current or based on natural key ranges. For this you should map the FROM_DATE (FD by default) in the mapping with the historical data

- If you can run an initial load without having to worry about missing references or executing any data validations there is an option in Compose to “Optimize for Initial Load”. (Highlight the full load ETL Set – click Settings and under Advanced tab - ) Please check the documentation for restrictions when using this option.

- Multi-table v Single-table ETL

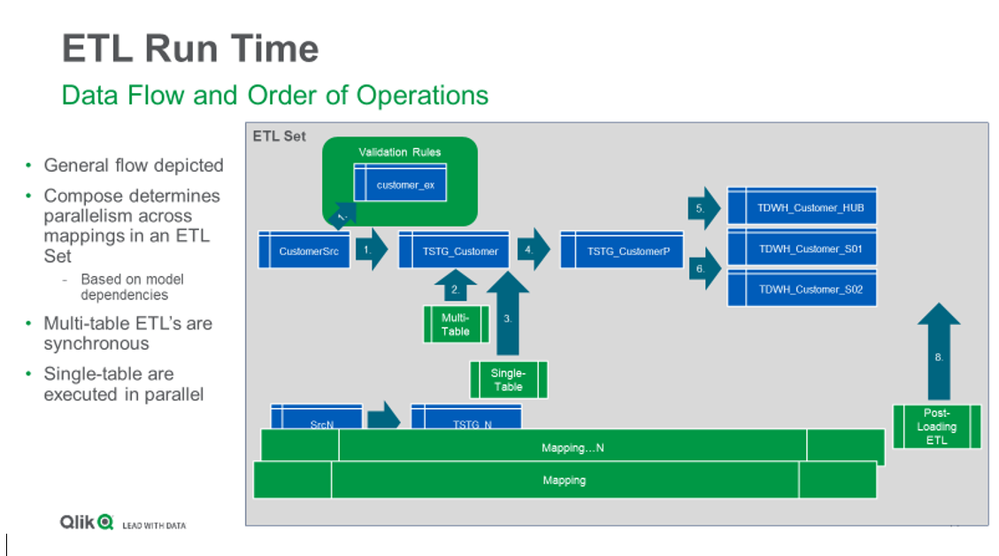

- There is a difference when Multi-table / Single table gets executed and their purpose. First understand the overall ETL flow (see high-level diagram below) –

- Compose performs data validations first – then loads data to a staging table TSTG. If performs this in parallel for all the mappings that are executing in a single ETL Set. Then multitable and single table etls kick in.

- Multi-table ETL’s should be used when you want to use data in multiple TSTG tables. These are serial processes and are executed after the load to TSTG occurs. For example I wish to perform some additional processing for customers have changes to both sales transactions and demographic data.

- Single-table ETL’s should be used when you want to use data in a single TSTG table. Single-table ETLs are executed in parallel (by using that component you signify to Compose that you only care about the specific table assigned to the Single-table ETL.) For example – I wish to update a Type 1 attribute in the customer hub for “last_order_date” based on incoming order changes

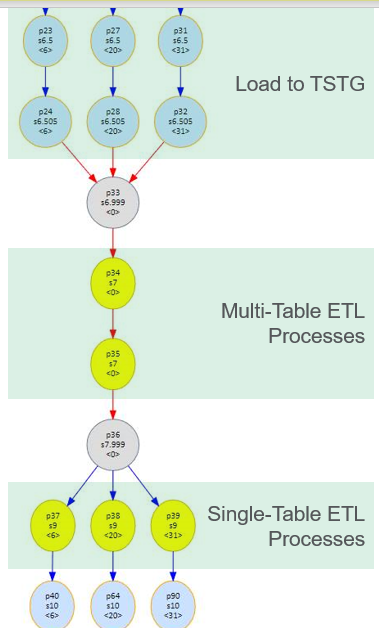

- This graphic depicts the multi-table and single-table steps in the ETL flow

- There is a difference when Multi-table / Single table gets executed and their purpose. First understand the overall ETL flow (see high-level diagram below) –

The first set of blue nodes are the loading to TSTG for 3 mappings – once completed the mult-table steps kick in. Once they are completed Single-table can be executed in parallel. After that Compose completes the processing of the data.

- CT Delete or Archive

- Compose will either simple DELETE or DELETE and ARCHIVE the data from the __Ct tables once it has processed the data.

- Configure Compose to DELETE or to ARCHIVE the data based on the landing zone configuration (see image below).

- If you wish to “replay” the events – you can move them from the archive table back into the __Ct table. A best practice is if you decide to replace a period of time – replay all the data from that point forward for the given natural key. For example,. If I want to replay data from 2 days ago for CustomerID XXXX – then I would move all CT records from 2 days ago to now back into the __CT tables for CustomerID XXXX. (Or all data from 2 days to now if I want to replay the entire data set).

- Note that on some platforms the archive tables are created with a primary key of header__change_seq (Replicate generated value that tells Compose the order of operations) and header__change_oper). If you “replay” these with ARCHIVE configured its possible (depending on DW platform) for the second archival to fail as the data already exists in the __ar (archive suffix) tables. In those cases when replaying it is possible to adjust the header__change_seq as you insert into the CT tables for replay. Just ensure that you maintain the order relative to the header__timestamp

- CDC Sets

- As you said – CDC requires the use of TABLE as a source as Compose will replace the table_name with table_name__ct and add the archival of data.

- The only other “limitation” I’m aware of for CDC is to be aware that any lookups defined in the ETL are NOT driver tables.

- Ie. If I have customers with a lookup to customer_type – a change to customer_type would not get picked up by the CDC process as customer_type is not a driving table.

- It may be better to normalize that model design instead – see this doc for details - Modeling Real-time Data Warehouses in Compose >.

a. Note that even if you have “full load” data sources not fed by Replicate, there are incremental design patterns that can be used if you know for example that the full load data set has sent you only changes. (For example an industry data feed that sends descriptive data for financial market instruments that have changed in the past 2 days).

If you wish for more info on this – please private message me on here. In some cases these design patterns can enable the use of “CDC” ETL sets for both FULL and CDC based data loads by mimicking the CT tables for full data sets. Which would solve your other question of combining CDC and FULL into a single process 😊

FYI – there are some additional white-papers in the documents and videos section of this forum for Compose for DW - https://community.qlik.com/t5/Qlik-Compose-for-Data-Warehouses-Documents/tkb-p/qlik-compose-warehous...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Question: "I see that we can create full load and CDC tasks separately to load from landing tables to DV. We often have a scenario where we do full load for some tables (low volume tables) and incremental load for high volume tables. Is it possible to configure a task to have a mix of both -- some tables in full load mode and some tables in CDC mode (under a single task) ?"

Comment: You can combine full-load and CDC ETL sets via the Compose workflows. Basically, create your full-load ETL set and add those tables that require a full load strategy. Then, create the CDC ETL set and add those large tables in it. Using the Compose workflows, create a new workflow and add both CDC sets. You can schedule this new workflow based on your needs. You will then accomplish your combined ETL sets - full-load and CDC strategy. If your datamart depends on these ETL sets, make sure you add the datamart task in the workflow right after the execution of your ETL sets.

Question: "When loading high volume tables first time in the full load mode, are there any design patterns (like parallel processing, partitioning, processing subsets of data in multiple runs etc) to improve the performance ?"

Comments: Based on the model, Compose will execute the mappings and the generated code in parallel, so you do not need to worry about it. However, you can add some of the following additional performance tuning options: (a) increase the JVM for each ETL set (if required and if you have the resources for it), (b) using the workflows, you can run ETL sets in parallel, (c) using single/multi table, and post loading ETLs, you can add performance tuning steps such as indexes, collection of stats, and other database options to improve the workloads. You also have two options in the datamart layer to perform pre-load and post-load steps, which can also be used to create partitions, collect statistics, and add other database performance-tuning options.

Question: "Regarding Multi table ETL/Single table ETL, Is there any difference when they get executed ?"

Comment: There are some differences between multi-table and single table ETLs:

a. Multi-table ETL sets are executed before single table ETL sets.

b. Multi-table ETL sets are executed sequentially, one at the time.

c. Single-table ETL sets are executed in parallel, and after multi-table ETL sets.

d. Both, single-table and multi-table ETLs are executed after the TSTG tables are created/populated, and typically these ETL sets are written to select/update, or change data in the TSTG tables.

d. Additionally, you can use post-loading ETL sets to select/update/ or change your data ware house tables, TDWH tables, and post-loading ETL sets are executed after the TDWH tables are populated/modified.

Question: "While doing a CDC load, I can only use “source type” as table whereas in Full load I can use all the three source types. Are there any other limitations/differences when doing CDC vs Full load?"

Comment: The main difference between the CDC and Full Load is that the CDC mode uses the change tables (_ct) tables for consuming and synching changes (typically applied by Replicate). The full load uses the base tables of your landing area to perform the initial load. However, even if you use the full load mode to maintain a warehouse table, if the the full load data has changed in the landing base table, Compose can detect the change, and update your data in the warehouse even if you are using a full load ETL set.

Question: "Once the compose process the data from “_ct” tables, data will be either purged or archived from the “_ct” tables. If I want to reload last 2-3 days of data, Is it possible or is there a way to do this ?"

Comment: Compose has a option in the Database connection layer to allow the user to archive the changed-data into another table or database/schema. You can reload data by copying the data from the archived tables into the _CT tables again.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Tim, Thank you so much for quick response. I will go through your responses and get back to you if I have any questions.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi benperezg, Thanks alot for quick response. I will let you know if I have further questions .