Unlock a world of possibilities! Login now and discover the exclusive benefits awaiting you.

- Qlik Community

- :

- Forums

- :

- Data Integration

- :

- Qlik

- :

- Qlik Compose for Data Warehouses

- :

- initial load with historized source tables

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

initial load with historized source tables

Hi,

i've got a source system that already uses the current table + historized table with validity period. is it possible to use that information to perform a SCD2 load for satellites, so that it will recreate a history of validity periods?

thanks, rd

Accepted Solutions

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Hi @pandabrowski -

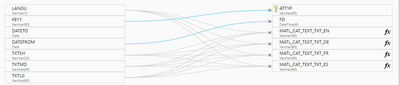

This is easy to accomplish in Compose. All you need to do is map the FROM_DATE / EFFECTIVE_DATE in your Source to the FD / FROM_DATE column in the Compose mapping. See example below where FD has been mapped.

- By default Compose derives the FROM_DATE (FD)

- Bulk load - I believe it uses UTC in ComposeDW.

- CDC load from Replicate __CT tables - Compose uses the header__timestamp from Replicate

In mapping the FD you are simply telling Compose that you have SCD type of data as a source and you want it to use the src systems from date. Compose will auto-detect and calculate the "TO_DATE (TD)" value.

Whenever you map the FD column, ensure you have "HANDLE DUPLICATES" checked for the mapping. As this tells Compose it could see multiple values per Key for the entity and it will then handle the SCD behavior appropriately.

(Note if you leave the column mapped for CDC ETL Sets also - then Compose will continue to use the data from the source and NOT the header__timestamp - which maybe what you want for this use case / requirement).

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @sangam -

In Compose, you can define the attributes in the DWH model as Type 2 or Type 1 attributes. You can also then define a dimensions as Type 2 or Type 1. (Note for a dim to be managed as Type 2, the DWH attributes must be Type 2 also).

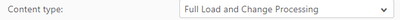

Type 2 management of the DWH model is automated by Compose. With the configuration you mentioned, when setting up your Compose landing zone connection - make sure you define it as having "Full Load and Change Processing" in the Content Type.

When you discover tables - you will get 2 ETL Tasks. 1 will be called "LandingZoneName" - this is a full load. It is not a truncate and load - but rather reads all the table from the Replicate like to like table and compares it with the data in the DWH tables to detect new / changed records (and will process / manage type 2 accordingly).

A second ETL task "LandingZoneName_CDC" will be created also. This can use the same mappings (if they are table based) as the full load task. When the code is generated, Compose will point itself to the __CT tables. The CDC task will then process only changes from the __CT tables, and will use the header_timestamp as the FROM_DATE (FD) for Type 2 processing. Type 2 management / processing is automated by Compose. Under normal scenarios, (as mentioned above), the header__timestamp column is used for the from date. In this scenario, the From Date (FD) will not be mapped in the mapping - Compose will handle it automatically for you.

If your source data comes as type 2 (as the original posters question) - then you should map the source column that represents the From date to the FD field in the mapping.

Hope that helps!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Hi @pandabrowski -

This is easy to accomplish in Compose. All you need to do is map the FROM_DATE / EFFECTIVE_DATE in your Source to the FD / FROM_DATE column in the Compose mapping. See example below where FD has been mapped.

- By default Compose derives the FROM_DATE (FD)

- Bulk load - I believe it uses UTC in ComposeDW.

- CDC load from Replicate __CT tables - Compose uses the header__timestamp from Replicate

In mapping the FD you are simply telling Compose that you have SCD type of data as a source and you want it to use the src systems from date. Compose will auto-detect and calculate the "TO_DATE (TD)" value.

Whenever you map the FD column, ensure you have "HANDLE DUPLICATES" checked for the mapping. As this tells Compose it could see multiple values per Key for the entity and it will then handle the SCD behavior appropriately.

(Note if you leave the column mapped for CDC ETL Sets also - then Compose will continue to use the data from the source and NOT the header__timestamp - which maybe what you want for this use case / requirement).

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello Tim,

I have a similar requirement. I am using Qlik Replicate to replicate the source table data from Oracle database to Snowflake database.

In Qlik Replicate, I have created a Task with Full load, Apply Changes and Store Changes. The task created 2 tables in Snowflake -

- a like to like table

- a change table with __ct as suffix.

I would need to create and populate SCD2 tables using above tables. Can you please elaborate your solution for this.

Thank you,

Sangam.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @sangam -

In Compose, you can define the attributes in the DWH model as Type 2 or Type 1 attributes. You can also then define a dimensions as Type 2 or Type 1. (Note for a dim to be managed as Type 2, the DWH attributes must be Type 2 also).

Type 2 management of the DWH model is automated by Compose. With the configuration you mentioned, when setting up your Compose landing zone connection - make sure you define it as having "Full Load and Change Processing" in the Content Type.

When you discover tables - you will get 2 ETL Tasks. 1 will be called "LandingZoneName" - this is a full load. It is not a truncate and load - but rather reads all the table from the Replicate like to like table and compares it with the data in the DWH tables to detect new / changed records (and will process / manage type 2 accordingly).

A second ETL task "LandingZoneName_CDC" will be created also. This can use the same mappings (if they are table based) as the full load task. When the code is generated, Compose will point itself to the __CT tables. The CDC task will then process only changes from the __CT tables, and will use the header_timestamp as the FROM_DATE (FD) for Type 2 processing. Type 2 management / processing is automated by Compose. Under normal scenarios, (as mentioned above), the header__timestamp column is used for the from date. In this scenario, the From Date (FD) will not be mapped in the mapping - Compose will handle it automatically for you.

If your source data comes as type 2 (as the original posters question) - then you should map the source column that represents the From date to the FD field in the mapping.

Hope that helps!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you so much for your detailed response.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@sangam Did Tims's suggestion work for you? If so, please don't forget to mark a solution that worked for you. This way users can find the answers for a similar situation.

Thanks,

Nanda

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you Tim, it worked for me.