- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

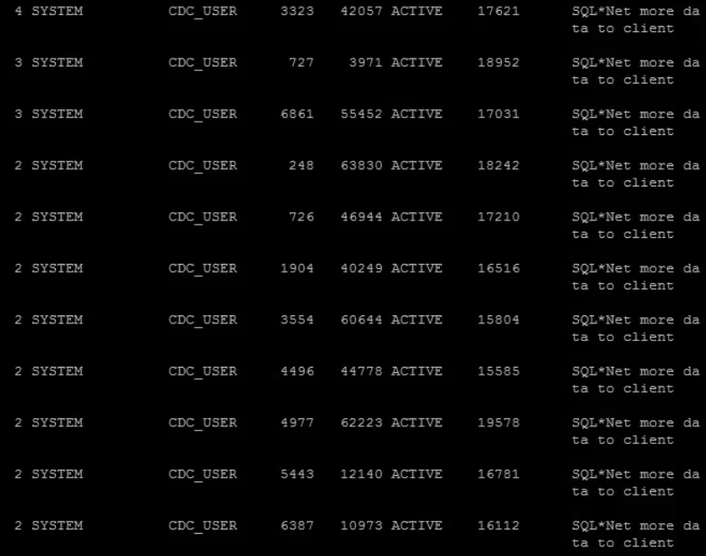

What's the reasons for havening many session created by Qlik replicate at oracle source with SQL *Net More data to client ?

Accepted Solutions

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

below are connection per task:

so during full load you have the below

Full Load – for each table that is being loaded: For each subtask.

1 connection for metadata

1 connection for unloading the data

1 connection for LOB read-- The connection is opened if LOB support is enabled (Limited or Full) although, it is not required in limited LOB mode…

Then CDC: This is when the task is running on Change Capture mode.

1 connection for metadata

1 connection for the merger

1 connection for the reader – One connection per RAC node.

1 connection for LOB read

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

below are connection per task:

so during full load you have the below

Full Load – for each table that is being loaded: For each subtask.

1 connection for metadata

1 connection for unloading the data

1 connection for LOB read-- The connection is opened if LOB support is enabled (Limited or Full) although, it is not required in limited LOB mode…

Then CDC: This is when the task is running on Change Capture mode.

1 connection for metadata

1 connection for the merger

1 connection for the reader – One connection per RAC node.

1 connection for LOB read

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you so much for the response!

Can you please help us understand why do we have session event with “SQL *Net More data to client” ?

Is there any way to limit the no of season or can we optimize the sessions that is being created by replicate?

Thanks

Bhaskar

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

1. not sure about : “SQL *Net More data to client”

2. number of connection, depend on number of task and LOBs.

3. if Oracle have you added enable=broken ?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for providing the information!

Point no 3 - will Have to check.

Best regard

Bhasker

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It would be good to know what the requested processing is when you made your observations.

I suspect it is a full-load possibly with more than the default 5 parallel streams. Partitioning? CDC active? ASM? Please provide some context.

The Oracle documentation is pretty clear about "SQL*Net more data to client (%)" writing:

"The amount of data being sent requires more than one send to the client. The shadow process waits for the client to receive the last send. This happens, for example, in a SQL statement that returns a large amount of data."

During a full-load Replicate essentially requests SELECT <column-list> FROM TABLE <each-table>. This is the easiest, cheapest, query one can ask any DB to do. It will provide large (gigabytes?) of data with very little resources on the DB source server side. Therefor the source server is likely to be waiting for the client to accept the data and ask for more. From the DB server's perspective this is a perfect situation. There is nothing you can do to make it better other then perhaps double-check MTU/TDU SQL*Net communication settings are set big enough. On the client side (Replicate), you may want to check there are enough CPU and Network resources available to do the job as best as one can with the resources available. Slightly overcommitting CPU is ok, but if say the CPU is at 80% with 10 streams, then you can try 12 or 15 but please don't bother with 20 streams in that situation as it will just increase contention and time will be wasted fighting over what to do next.

To further understand what is happening you may want to get in to V$SESSION / V$SQL_TEXT / V$SQL and the likes to map the PID's to actual SQL statements using parse addresses and such as needed.

Hein.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for your response!

we were running only CDC operations, we haven't performed any full load. we have source and target as oracle and have 20 tasks out of which 6 have CDC only the rest 14 are Full load).

As per the clinet their source database system were impacted, one the reasons has been given by them is wait event "SQL *Net More data to client"

Regards,

Bhaskar Kumar

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

>>> As per the clinet their source database system were impacted, one the reasons has been given by them is wait event "SQL *Net More data to client"

A little knowledge is a dangerous thing.

Yes the ""SQL *Net More data to client" will stand out on performance reports perhaps in:

Top 5 Wait Events

But it is NOT directly a resource consumption on the source, it is waiting for the client.

Admittedly that client may have issued in intense operation which did have an impact. For a CDC that would typically be reading the redo log, quit possibly in parallel chunks notably for ASM based redo.

For the default "To access redo log as binary file:" Replicate will essentially perform a DBMS_LOB.OPEN and then sit in a loop to read the whole file with DBMS_LOB.READ until it hits the end. For the active redo it will then keep on re-requesting DBMS_LOB.READ until it rolls.

Your DBA will need to dig deeper and better articulate the concern. Show the queries being executed, perhaps highlighting CPU used for disk reads. CDC is NOT for free, as it will read the redo 'all the time' and toss in data lob reads and metadata lookups as it goes.

Now you indicate " 6 have CDC". Are those to the same source? Then those would perform that redo (and/or Archive) log read. Generally you want only one or perhaps two tasks doing so to avoid excessive overhead. This may be what your DBA is observing.

\Why are there 6 tasks doing CDC? Is there a good business reason or is it just Replicate task design 'convenience' - a creature feature? If they all 6 go to the same source DB then you should really consider a single LOG STREAM staging task to read the redo/archive once only and configure those 6 tasks as "Read changes from log stream staging folder" as the source.

Hein.