Unlock a world of possibilities! Login now and discover the exclusive benefits awaiting you.

- Qlik Community

- :

- All Forums

- :

- QlikView App Dev

- :

- Re: Issues with a Pivot table and memory consumpti...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Issues with a Pivot table and memory consumption

Good morning/afternoon/evening QlikView gurus!

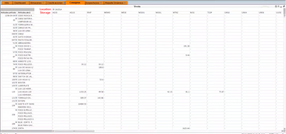

We're currently having an issue with a pivot table in one of our documents. The info shown consists in 4 dimensions and 2 expresions, which are simple Sum(${<Simple set analisys>}Field) ones, nothing fancy or particularly complex. The issue comes with the dimensions. See, we have 6 main locations, and currently about 180+ or so storage locations, from which some overlap with the locations, but some don't. Our final user wants to see every storage in a horizontal manner (dragging the dimension to the top, as pivot tables do), with the location as a dimension, without filtering any of them. The dataset is not big at all, probably less than a million rows, but when the pivot table comes into play all hell breaks loose.

My best guess is that Qlik needs to calculate each one of the 6 locations times the 180+ storages times as many items exist in any storage, even if there's no relation or information asociated, just so it can fill the field with a '-', but at the time you clear all fields or select each of the 6 locations (which is the point of the analisys, that's what the final user wants) the RAM usage skyrockets (the server has 48Gb of RAM), reaching almost 80% usage, or sometimes even crashing the server. I should mention that if you transform the pivot table into a straight table there's no problems of any kind and the table loads incredibly fast, so its pretty obvious the pivoting is the issue.

As you can see, theres multiple nulls shown, because the information might not even belong to said location (For example, the storage AGUS might not even have anything to do with the location CACESA or with the item 1238-04-04, but it gets shown as a null because... I'm guessing that's how pivot tables work)

The information is already as digested as it can be, with most of the calculations being done in script, so I think this is a matter of tying to show a lot of information where it doesn't really belong. I guess Qlik has to show and calculate each one of the 180+ storages even if the location has nothing to do with it just because it needs to be shown thanks to the pivoted dimension. I already tried selecting "supress when value is null" on the dimensions, but it doesn't do anything noticeable, plus trying to segment the pivot table differently trying to set the location on top of the storage doesn't do a lot either, the server still hits 70% usage or more and it takes a solid 3 to 4 minutes for the table to finish calculating. We also tried to prevent the user to select multiple locations at the same time (with a getselectcount) but we were told that's not the funcionality they want, they need to select every location at once (or not to have to select a single one, same thing). Oddly enough, I haven't seen an "Object Out of Memory" message at all, for what it's worth.

Is there anything I can do to limit the memory usage of a pivot table like this? Is this the right way for a pivot table to work? Anything I could try to circumvent the issue other than to make it a straight table and manually make 180+ expressions, one per storage? Any way I can visually trick a straight table to work like a pivot table with a pivoted dimension made a column/header?

Thanks for any advice you can give!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Does really any user work with this table in QlikView? From an analysis point of view such wide and large tables doesn't make much sense because you will frequently lose the focus which data are in which row/column and don't get the wanted overview. Therefore I assume this table is just for an export to excel. Is this the case I suggest to review together with the users the entire process to find out what's their end-targets with the data - maybe there are other and more suitable approaches possible.

If you really want to remain by such large pivot there is probably not much to customize/optimize with the pivot itself because a pivot meant to crosstable stream-data structured records by creating a cartesian dimensionally structure - depending on the set selections and the specified datasets within the set analysis. This is the first step within the calculation and is done in a single-threaded execution. The second step is the (multi-threading) aggregations of the expressions which is usually much faster as the first step (you notice already the differences against a straight-table).

What you could try to optimize is your datamodel - make sure that all relevant fields (dimensions and the fields within the expressions) come from a single table. The more associations exists between the used fields the more efforts are needed to create the virtual tables which are underneath the visible objects within the UI.

- Marcus

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

There is a bug in the treatment of table pivots with Qlik Sense.

Indeed, I have a table pivot containing 10 dimensions which gives in table 22.000 rows

If my measure does not contain a set analysis, the result is immediate. If my measurement contains a simple set analysis, the RAM goes up by more than 10 GB and my result takes more than 3 minutes to be displayed or crashes.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @PBarvaux ,

I am with @marcus_sommer on this one (and also other ones...). If the table is being used just for export purposes, make sure it derives from one table from your datamodel and maybe there are other solutions than just presenting in the UI.

Otherwise, If you insists on having such a large pivot object table in Qlik Sense then I would recommend to use an Calculation Condition on the pivot table or you could use pagination (search in community for solutions).

Regards

Eddie