Unlock a world of possibilities! Login now and discover the exclusive benefits awaiting you.

- Qlik Community

- :

- All Forums

- :

- QlikView App Dev

- :

- Re: Should We Stop Worrying and Love the Synthetic...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Should We Stop Worrying and Love the Synthetic Key?

Synthetic keys have a bad reputation. The consensus seems to be that they cause performance and memory problems, and should usually or even always be removed.

I believe that the consensus is wrong.

My understanding of a synthetic key is that it’s a pretty basic data structure. If you load tables like this:

TableA: FieldA, FieldB, FieldC

TableB: FieldA, FieldB, FieldD

What you’ll actually get is this:

TableA: SyntheticKey, FieldC

TableB: SyntheticKey, FieldD

SyntheticKeyTable: SyntheticKey, FieldA, FieldB

Where neither the synthetic key nor the synthetic key table can be directly referenced, only the original fields.

Well, that doesn’t sound like a bad thing. If I had two tables that I legitimately wanted to connect by two fields, that’s pretty much what I would do manually. As far as I can tell, QlikView is simply saving me the trouble.

Two tables can be connected by one field. That shows up as a connection. Two tables can be connected by two or more fields. That shows up as a synthetic key.

Now, maybe you should NOT connect two particular tables by one field. If so, that’s a data model problem that should be fixed. And while you could say that THAT connection is a problem, you should never say that CONNECTIONS are a problem.

Similarly, maybe you should NOT connect two particular tables by two or more fields. If so, that’s a data model problem that should be fixed. And while you could say that THAT synthetic key is a problem, perhaps you should never say that SYNTHETIC KEYS are a problem.

Synthetic keys should be no more a problem than automatic connections between tables are a problem. Either can cause problems when they result from a bad data model, when there are too many or the wrong connections. But neither should cause problems when they result from a good data model. You should not remove all synthetic keys any more than you should remove all connections between tables. If it is appropriate to connect two tables on two or more fields, I believe it is appropriate to use a synthetic key.

What does the reference manual have to say on the subject?

"When two or more input tables have two or more fields in common, this implies a composite key relationship. QlikView handles this through synthetic keys. These keys are anonymous fields that represent all occurring combinations of the composite key. When the number of composite keys increases, depending on data amounts, table structure and other factors, QlikView may or may not handle them gracefully. QlikView may end up using excessive amount of time and/or memory. Unfortunately the actual limitations are virtually impossible to predict, which leaves only trial and error as a practical method to determine them.

Therefore we recommend an overall analysis of the intended table structure by the application designer. Typical tricks include:

· Forming your own non-composite keys, typically using string concatenation inside an AutoNumber script function.

· Making sure only the necessary fields connect. If you for example use a date as a key, make sure you do not load e.g. year, month or day_of_month from more than one input table."

Yikes! Dire warnings like “may not handle them gracefully” and “may end up using excessive amount of time and/or memory” and “impossible to predict”. No WONDER everyone tries to remove them!

But I suspect that the reference manual is just poorly written. I don’t think these warnings are about having synthetic keys; I think they’re about having a LOT of synthetic keys. Like many of you, I’ve gotten that nasty virtual memory error at the end of a data load as QlikView builds large numbers of synthetic keys. But the only time I’ve ever seen this happen is when I’ve introduced a serious data model problem. I’ve never seen a good data model that resulted in a lot of synthetic keys. Doesn’t mean they don’t exist, of course, but I’ve never seen one.

I’d also like to focus on this particular part, “Typical tricks include: Forming your own non-composite keys”. While I agree that this is a typical trick, I also believe it is useless at best, and typically A BAD IDEA. And THAT is what I’m particularly interested in discussing.

My belief is that there is no or almost no GOOD data model where this trick will actually improve performance and memory usage. I’m suggesting that if you have a synthetic key, and you do a direct one to one replacement with your own composite key table, you will not improve performance or memory usage. In fact, I believe performance and memory usage will typically get marginally worse.

I only have one test of my own to provide, from this thread:

http://community.qlik.com/forums/t/23510.aspx

In the thread, a synthetic key was blamed for some performance and memory problems, and it was stated that when the synthetic key was removed, these problems were solved. I explained that the problem was actually a data modeling problem, where the new version had actually corrected the data model itself in addition to removing the synthetic key. I then demonstrated that if the synthetic key was reintroduced to the corrected data model, script performance was significantly improved, while application performance and memory usage were marginally improved.

load time file KB RAM KB CalcTime

synthetic key removed 14:01 49,507 77,248 46,000 ms

synthetic key left in place 3:27 49,401 77,160 44,797 ms

What I would love to see are COUNTEREXAMPLES to what I’m suggesting. I’m happy to learn something here. I’ve seen plenty of vague suggestions that the synthetic keys have hurt performance and wasted memory, but actual examples seem to be lacking. The few I ran across all looked like they were caused by data model problems rather than by the synthetic keys themselves. Maybe I just have a bad memory, and failed to find good examples when I searched. But I just don’t remember seeing them.

So who has a script that produces a good data model with a composite key table, where removing the composite key table and allowing QlikView to build a synthetic key instead decreases performance or increases memory usage? Who has an actual problem CAUSED by synthetic keys, rather than by an underlying data model problem?

- « Previous Replies

- Next Replies »

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I think most of us have been doing ETL for years with QlikView, and actually found colleagues using other tools moving some developments to Qlik because of its ETL capabilities.

But as you mention below it's been said already, ETL is not the strength of the QlikView script. The functions are there to be used, and you can preaggregate as much as you want, or, rather, as much as you can. For me, the reason of using a function over another or JOINing in Qlik or somewhere else is basically performance.

While conceptually is perfectly fine, given your environment and requirements might not, and you will need to do those, or some of those ETL steps out of QlikView.

Classic examples to use other tools, or use the data source capabilities could be amount of data to be loaded and transformed, schedule to reload that data, hierarchies that need to be flattened, complexity in the front end send to back end (e.g.: preaggregating), and so on.

As for the "QlikView is easy to use", well, after so many years using it as a partner, demonstrating it as employee and now consuming and developing it as customer, all I have to say is: easy thing will be easy, complex things will be difficult. Loading from a spreadsheet and doing a dozen charts with Count() or Sum() -while not straightforward-, it's definitely easy to do and provides lots of additional options for visualization.

It applies to basically any other "user friendly" technology out there. For example, it's easy to use a smartphone to do easy tasks, either it be Android or iOS. It takes a lot of time to be fully aware of all the settings available and make use of them.

Along those lines, data modelling from disparate data sources and writing complex expressions for the measures of the charts is more difficult, as I would expect it to be. It requires more skills, more thought and more time. And at some point, you will most probably find a limitation you cannot overcome with QlikView alone, which is also OK: QlikView cannot do anything (although I could agree that it has been sometimes positioned as one-size-fits-all, when it's not).

The synthetic key tables, for me, is the perfect example. Do they work? Yes. Do they perform? As far as I can test it, they do. Are they a solution to data modelling issues? Definitely not.

For what it's worth, do I use them? I don't, even if it means spending some more time in the script editor. Not that much because they don't do what I want or because they do not perform, rather because I find clearer to follow a composite key and I expect the next developer to understand what I was trying to do as well. That's me and my experience, others may find more usable the opposite.

As for bugs or unexpected results, there are, there will be and the sooner you report them and the easier you make for Qlik Support to reproduce the issue, the more likely you are to get a workaround, patch and eventually a fix.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Everyone may have different ideas. I agree with you most of the views, but I am afraid of any unknown. I will never allow anything that is not clear to appear in my product. You may find this kind of "work style" from all over the world, such as Germany or Japan.

Based on the tests I've done before, if I can't guarantee that my data source (especially the fields that might be identified as connection keys) doesn't have a null value, then I won't allow any nested synthetic keys to be generated -- I'll let my program maximize data compatibility.

It's also because of this conditionality that I personally can't go any further to love the synthetic key - it gives me some concern and makes me feel troubled.

Regards,

T&W

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I feel you. When we started, probably because our more relational background, synthetic key tables where unexpected and took us some time to get accustomed to association vs. relation (even if theoretically is similar).

That's why most of us decided that, to get full control on our data models, big or small, easy or difficult, best was to do ourselves and don't let QlikView do for us, following the coworker example by John.

To address those null values you can find in the data model, there are ways to make sure that, when loaded into QlikView, they have some value that can be later used to create those composite keys. JOINs, ApplyMaps(), If(Len()), etc. depending on the type of null (e.g.: blank or real absence) can help you achieve this.

All in all, I think it's perfectly OK to avoid synthetic keys, and is the de facto approach for most of us. Practically speaking, the trade off is very little, if it exists at all, and will create a better readable code for you and anyone else working with you on those QlikView apps.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

While I've not used this, another way to handle nulls is with NullAsValue. You tell it which fields you want it to apply to, and for those specific fields, nulls will link to nulls as if they were values.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

As you said, when I want to match null values, this is an alternative. But when I don't want null values to match, I can't do this, and I also can't to use synthetic keys.

At the same time, I will also worry that my tests and induction are not enough. I'm just doing a simple exclusion. I'm not sure that there will be no accidents as long as there is no null value in synthetic keys.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

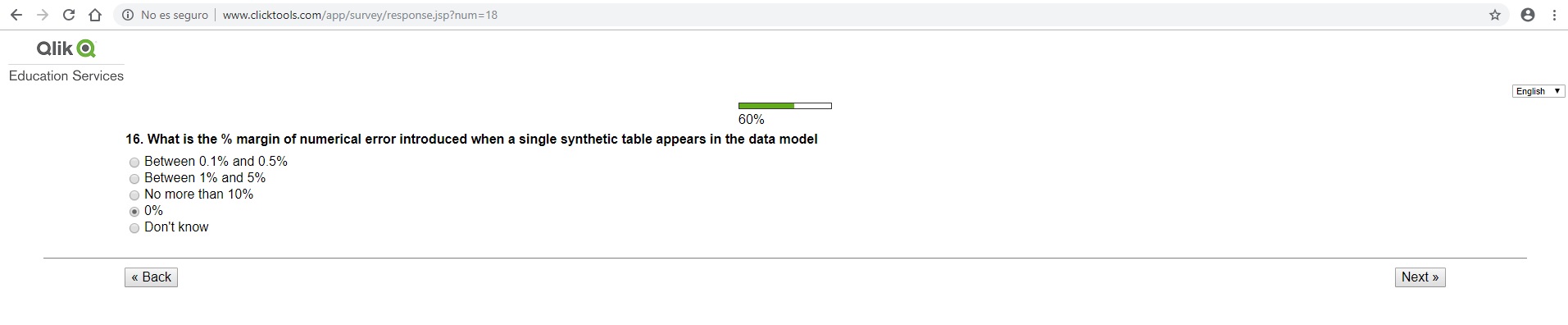

Hi MA:

I'd like to answer the next question for the test, but, I don't know what to answer…

Could you please tell the answer?

Thank you.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I seem to have found some benefits about automatically generated Synthetic Keys. The premise is that we need to give up our love for some of the computer hardware resources.

We often hear complaints that they take up terrible hardware resources and lead to server crashes. In fact, they never "waste" these resources. They have the amount of information that is worth the resources they occupy.

Imagine a scenario where our tables need a combination key to associate, but at the same time our front-end logic refers to each sub-column of the combination key separately.

-------PFA-------

Synthetic Keys not only generates composite keys, but also preserves the complete information of all sub-columns. When your front end references these sub-columns, these information becomes particularly important.

When you really need this information, you will find that your manual modification can easily lead to errors and loss of necessary information. And you'll also find that your clumsy modifications won't save more resources for the computer.

Looking back at the previous complaints, the fundamental reason is that they do not need all the information contained in Synthetic Keys. Their needs may be very simple - they just want to associate those tables correctly.

So I would like to state that the waste of hardware resources is not Synthetic Keys itself, but you are using a dragon sword to kill chickens.

--------------

BTW, I'm still worried about Synthetic Keys' behavior towards null values. This is something I can't let go of, although Synthetic Keys seem to have the above advantages.

I hate the new version of the community, which makes it difficult for me to format text and doesn't allow me to upload QVW files.

- « Previous Replies

- Next Replies »