Qlik Compose Release Notes - May 2021 Initial Release to Service Release 1


Dec 20, 2024 3:28:07 PM

Nov 28, 2022 8:59:53 AM

Table of Contents

- Migration and Upgrade

- Microsoft .NET Framework 4.8 Upgrade/Installation Prerequisite

- Licensing

- Migration from Compose for Data Warehouses

- The Migration Procedure

- Updating the Views in Data Lake Projects

- Compatibility with Related Qlik Products

- What's new in Qlik Compose May 2021

- Data Warehouse Projects

- Data Lake projects

- General

- End of Life/Support and Deprecated Features

- Resolved Defects

- May 2021

- Qlik Compose May 2021 - Patch Release 07 (build 2021.5.0.267)

- PR06 (build 2021.5.0.198)

- PR05 (build 2021.5.0.176)

- PR04 (build 2021.5.0.155)

- PR03 (build 2021.5.0.130)

- PR02 (build 2021.5.0.79)

- PR01 (build 2021.5.0.78)

- Known issues and limitations

The following release notes cover the versions of Qlik Compose released in May 2021.

Migration and Upgrade

This section describes various upgrade scenarios and considerations.

- Migration from Compose for Data Lakes is not currently supported.

- After upgrading, in order to benefit from the latest enhancements to the task ETL scripts:

  - Customers with Data Warehouse projects should regenerate all task ETLs, either by selecting the task and clicking the Generate button in the Manage Tasks and Manage Data Marts windows, or by running the generate_project CLI command described in the following topic: https://help.qlik.com/en-US/compose/February2021/Content/ComposeDWDL/Main/DW/Projects/Generate_projects.htm

  - Customers with Data Lake projects should regenerate all task ETLs by selecting the task and clicking the Generate button in the Manage Storage Tasks window.

Microsoft .NET Framework 4.8 Upgrade/Installation Prerequisite

- Using the Setup Wizard - It is preferable for .NET Framework 4.8 to be installed on the Compose machine before running Setup. If .NET Framework 4.8 is not present on the machine, Setup will prompt you to install it. This may require the machine to be rebooted when the installation completes.

- Silent Installation – The ISS file required for silently installing Compose must be created on a machine that already has .NET 4.8 installed on it.

Licensing

Existing Compose for Data Warehouses customers who want to create and manage Data Warehouse projects only in Qlik Compose can use their existing license. Similarly, existing Compose for Data Lakes customers who want to create and manage Data Lake projects only in Qlik Compose can use their existing license.

Customers migrating from Qlik Compose for Data Warehouses or Qlik Compose for Data Lakes who want to create and manage both Data Warehouse projects and Data Lake projects in Qlik Compose will need to obtain a new license. Customers upgrading from Compose February 2021 can continue using their existing license.

The license is enforced primarily when you try to generate, run, or schedule a task (via the UI or API), although other operations, such as Test Connection, may also fail if you do not have an appropriate license.

Migration from Compose for Data Warehouses

Direct migration is supported from Qlik Compose for Data Warehouses November 2020 only. The migration procedure requires you to uninstall Compose for Data Warehouses first and then install Qlik Compose as described below.

The following table details the migration path from your existing Qlik Compose for Data Warehouses version.

| Existing Compose for Data Warehouses Version | Upgrade Path |

| November 2020 | Upgrade directly to May 2021. |

| April 2020 (6.6) and September 2020 (6.6.1) |  |

| 6.5 |  |

| 6.4 (End-of-life version) |  |

| 6.43 (End-of-life version) |  |

| 3.1 (End-of-life version) | Contact Support. |

The Migration Procedure

We strongly recommend backing up your existing installation, projects and data warehouse before migrating to any new version.

To migrate Qlik Compose for Data Warehouses to Qlik Compose:

- Suspend all scheduled jobs.

- Make a note of where you installed Qlik Compose for Data Warehouses and then uninstall it. After the product is uninstalled, the following folders will remain in the original installation folder:

  <INSTALLATION_DIR>\data - Contains your projects and settings.

  <INSTALLATION_DIR>\java\data - Contains SQLite repositories, the Master User Key, and log files.

- Install Qlik Compose. When the installation completes, stop the Qlik Compose service.

- Replace the <INSTALLATION_DIR>\data folder (default location: C:\Program Files\Qlik\Compose\data) with the <INSTALLATION_DIR>\data folder from the Qlik Compose for Data Warehouses installation.

- Replace the <INSTALLATION_DIR>\java\data folder (default location: C:\Program Files\Qlik\Compose\java\data) with the <INSTALLATION_DIR>\java\data folder from the Qlik Compose for Data Warehouses installation.

- From the Windows Start menu, navigate to Qlik Compose > Compose Command Line. The Windows Command Prompt opens.

- Upgrade the project repositories by running one of the following commands, according to whether you changed the default location of the Compose for Data Warehouses "data" folder:

  If you did not change the default location of the "data" folder, run:

  composecli.exe upgrade

  The command should complete with the following message:

  Compose Control Program completed successfully.

  If you changed the default location of the "data" folder, run:

  ComposeCtl -d new_dir compose upgrade

  Where new_dir is the location of the Compose "data" folder. The command should complete with the following message:

  Compose Control Program completed successfully.

- Start the Qlik Compose service.

- Resume any suspended scheduled jobs.
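The two folder-replacement steps in the procedure above can also be scripted. The following is a minimal Python sketch, not a Qlik-provided tool: it assumes the Qlik Compose service has already been stopped, that you supply both installation directories yourself, and that keeping the freshly installed folders as "<name>.bak" backups is acceptable. The function name replace_data_folders is hypothetical.

```python
import shutil
from pathlib import Path
from typing import List

# Folders (relative to <INSTALLATION_DIR>) that the procedure carries over
# from the Compose for Data Warehouses installation.
FOLDERS_TO_REPLACE = [Path("data"), Path("java") / "data"]


def replace_data_folders(old_install_dir: Path, new_install_dir: Path) -> List[Path]:
    """Replace the new installation's data folders with the old installation's.

    Hypothetical helper: run it only after stopping the Qlik Compose service.
    The freshly installed folder is renamed to "<name>.bak" rather than deleted.
    Returns the destination folders that were replaced.
    """
    # Validate every source folder first so nothing changes if one is missing.
    for rel in FOLDERS_TO_REPLACE:
        src = old_install_dir / rel
        if not src.is_dir():
            raise FileNotFoundError(f"Folder not found in old installation: {src}")

    replaced = []
    for rel in FOLDERS_TO_REPLACE:
        src = old_install_dir / rel
        dst = new_install_dir / rel
        if dst.exists():
            backup = dst.with_name(dst.name + ".bak")
            if backup.exists():
                shutil.rmtree(backup)  # drop a backup left over from an earlier run
            dst.rename(backup)
        dst.parent.mkdir(parents=True, exist_ok=True)
        shutil.copytree(src, dst)
        replaced.append(dst)
    return replaced
```

For example, replace_data_folders(Path(r"D:\ComposeForDW"), Path(r"C:\Program Files\Qlik\Compose")) would copy both folders from a hypothetical D:\ComposeForDW installation into the default Qlik Compose location. Run the repository upgrade command only after the copy completes.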

Updating the Views in Data Lake Projects

If you are upgrading from a version prior to Qlik Compose February 2021 Patch Release 04, you need to recreate the Compose Views for each of your Data Lake projects. The Views can be recreated using the Compose web console or using the Compose CLI.

If Compose detects a mismatch between the Logical Metadata (defined via the Metadata panel) and the Storage Zone metadata, the view recreation operation will fail and you will need to validate and adjust the storage before retrying the operation.

Recreating the Views with the web console

To recreate the Views using the web console, simply select Recreate Views from the menu in the top-right of the STORAGE ZONE panel. You will be prompted to confirm the operation as it might take some time, during which the Views data might not be accessible.

Recreating the Views with the CLI

To recreate the Views using the CLI:

- From the Start menu, open the Compose Command Line console.

- Run the following command to connect to the server:

  ComposeCli.exe connect

- When the command completes successfully, run the following command:

  ComposeCli.exe recreate_views --project project_name

  Where project_name is the name of the project whose Views you need to recreate.

  Example:

  ComposeCli.exe recreate_views --project myproject
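If you have several Data Lake projects, the CLI steps above can be repeated per project. The Python sketch below only wraps the two commands shown in this section; it assumes ComposeCli.exe can be found on the PATH (or in the working directory), that each command can be run as a separate process just as in the Compose Command Line console, and that a failed command returns a non-zero exit code. The helper names are hypothetical.

```python
import subprocess
from typing import Callable, List, Sequence

# Assumption: ComposeCli.exe is on PATH or in the current working directory.
COMPOSE_CLI = "ComposeCli.exe"


def build_recreate_views_commands(projects: Sequence[str]) -> List[List[str]]:
    """Return the connect command followed by one recreate_views command per project."""
    commands = [[COMPOSE_CLI, "connect"]]
    for project in projects:
        commands.append([COMPOSE_CLI, "recreate_views", "--project", project])
    return commands


def run_commands(commands: Sequence[Sequence[str]],
                 runner: Callable = subprocess.run) -> None:
    """Run each command in order and stop at the first failure.

    check=True makes subprocess.run raise CalledProcessError on a non-zero
    exit code, for example if recreation fails because of a metadata mismatch
    (assuming the CLI reports failures through its exit code).
    """
    for command in commands:
        runner(list(command), check=True)
```

The runner parameter defaults to subprocess.run; passing a stub makes it possible to inspect the generated commands without contacting a Compose server.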

Compatibility with Related Qlik Products

- Qlik Replicate Compatibility - Qlik Compose is compatible with Replicate November 2020, Replicate November 2020 latest service release, and Replicate May 2021.

- Enterprise Manager Compatibility - Qlik Compose is compatible with Enterprise Manager May 2021 only. Customers upgrading from Compose for Data Warehouses should be aware that all references to "Compose for Data Warehouses" in the Enterprise Manager UI have been replaced with "Compose". The monitoring functionality remains identical to previous versions.

What's new in Qlik Compose May 2021

The following sections describe the enhancements and new features introduced in Qlik Compose May 2021.

Data Warehouse Projects

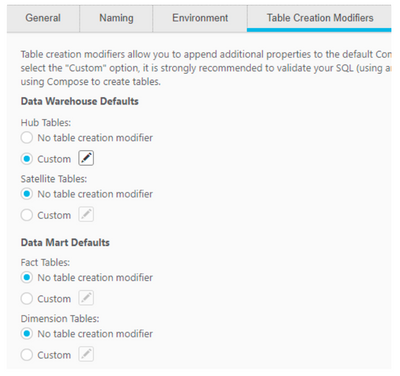

Table Creation Modifiers

Compose May 2021 introduces support for table creation modifiers. By default, Compose creates tables in the data warehouse using the standard CREATE TABLE statement. However, organizations often need tables to be created with custom properties for better performance, special permissions, custom collation, and so on. For example, in Microsoft Azure Synapse Analytics, it’s possible to create a table as a CLUSTERED COLUMNSTORE INDEX, which is optimized for larger tables. By default, Compose creates tables in Microsoft Azure Synapse Analytics as a HEAP, which offers the best overall query performance for smaller tables.

In the Table creation modifiers tab, you can append table creation modifiers as SQL parts to the CREATE TABLE statement. You can set table creation modifiers for both data warehouse tables and data mart tables. In the data warehouse, separate modifiers can be set for Hub and Satellite tables, while in the data mart, separate modifiers can be set for fact and dimension tables. Once set, all tables will be created using the specified modifiers, unless overridden at the entity level.

You can also set table creation modifiers for specific data warehouse (hub and satellite) and data mart tables (fact and dimension).
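To illustrate how a modifier is appended to the CREATE TABLE statement, and how an entity-level setting takes precedence over the project-level setting, here is a minimal Python sketch. It is not the SQL that Compose generates; the helper names, the table and column names, and the PROJECT_MODIFIERS values are hypothetical, although WITH (CLUSTERED COLUMNSTORE INDEX) and WITH (HEAP) are valid Azure Synapse Analytics table options.

```python
from typing import Dict, Optional

# Hypothetical project-level defaults per table type (Azure Synapse syntax).
PROJECT_MODIFIERS: Dict[str, str] = {
    "hub": "WITH (CLUSTERED COLUMNSTORE INDEX)",
    "satellite": "WITH (HEAP)",
}


def resolve_modifier(table_type: str, entity_modifier: Optional[str],
                     project_modifiers: Dict[str, str]) -> str:
    """An entity-level modifier overrides the project-level default for its table type."""
    if entity_modifier is not None:
        return entity_modifier
    return project_modifiers.get(table_type, "")


def build_create_table(name: str, columns: Dict[str, str], modifier: str = "") -> str:
    """Build a CREATE TABLE statement and append the modifier as a trailing SQL part."""
    column_list = ", ".join(f"{col} {data_type}" for col, data_type in columns.items())
    statement = f"CREATE TABLE {name} ({column_list})"
    if modifier:
        statement += f" {modifier}"
    return statement
```

With these defaults, a hub table without an entity-level override resolves to WITH (CLUSTERED COLUMNSTORE INDEX), while a fact table with no default and no override gets a plain CREATE TABLE statement.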

NVARCHAR data type support

Support for the NVARCHAR (Length) data type has been added when using Microsoft Azure Synapse Analytics as a data warehouse.

Design CLI enhancements

This version expands the functionality of the Design CLI to support data warehouse tasks, data mart tasks, and custom ETLs (tasks). One of the possible uses of CSV export/import is to incrementally update production environments with new versions from the test environment.

Migration of the following objects (as CSV files) is now supported:

- Data warehouse tasks (contains a list of tasks only)

- Data warehouse task settings (contains a list of tasks with settings)

- Data warehouse task entities

- Data warehouse task mappings

- Data warehouse custom ETLs (contains a list of custom ETLs only)

- Data warehouse task custom ETLs (contains a list of custom ETLs with definitions)

- Data mart task settings

- Data mart custom ETLs

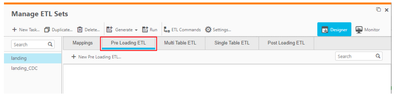

Custom Pre-Loading ETL

Users can now define a "Pre-loading ETL" that manipulates the data before it is loaded from the landing tables to the data warehouse staging tables. When enabled, the Pre-loading ETL will run even if there are no mappings or Replicate-generated source data associated with it, which is particularly useful for customers who want to perform transformations on data generated by third-party tools.

Google Cloud BigQuery data type changes

From this release, the BYTES Compose data type will be mapped to BYTES on Google Cloud BigQuery instead of STRING. After upgrading, only new tables will be created with the updated mapping. Existing tables will not be affected.

If you want to continue using STRING instead of BYTES, you will need to manually change the data type for the affected target columns after the initial creation of the data warehouse tables.

Customers with a Google Cloud BigQuery data warehouse must use Qlik Replicate May 2021 to land their data.

Data Mart Enhancements

When creating transactional or aggregated facts with Type 2 data warehouse entities, you can now choose whether the fact table should always be updated with the last record version of any Type 2 data warehouse entities the star schema contains.

To this end, the following option has been added to the General tab of the Edit Star Schema window and is enabled by default.

Update fact with changes to Type 2 data warehouse entities

When upgrading, this option will initially be disabled for existing data marts, but can be enabled if needed.

Data Lake projects

Schema Evolution

This version introduces Schema Evolution, which replaces and significantly improves upon the implementation of Schema Evolution in Compose for Data Lakes 6.6. The former implementation was fully automated, which meant that customers could not choose which changes to apply, and it was prone to unexpected behavior. The new implementation allows you to easily detect structural changes to multiple data sources and then control how those changes will be applied to your project. Schema Evolution can be used to detect the addition of new columns to the source tables as well as the addition of new tables to the source database, and can be performed using either the web console or the CLI.

In the web console, the Schema Evolution window can be opened by clicking the Schema Evolution button in the METADATA panel or by selecting Schema Evolution from the menu in the top right of the METADATA panel.

Customers who want to use this feature should run it once the first time (i.e. scan for changes) and choose to skip any changes that were made prior to upgrading. This will "reset" the schema evolution module so the next time it's run, only changes that occurred after the upgrade will be detected.
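Conceptually, detecting added columns and tables is a comparison between the project's logical metadata and the current source metadata, followed by applying only the changes you accept. The Python sketch below illustrates that idea using the Employees/Middle Name example from the Known issues section; it is not Compose's implementation, and the metadata structure and function names are assumptions.

```python
from typing import Dict, List, Set, Tuple

Metadata = Dict[str, Set[str]]  # table name -> set of column names (assumed shape)


def detect_additions(project: Metadata,
                     source: Metadata) -> Tuple[List[str], Dict[str, List[str]]]:
    """Return (new tables, new columns per existing table) found in the source."""
    new_tables = sorted(t for t in source if t not in project)
    new_columns = {
        table: sorted(source[table] - project[table])
        for table in sorted(source)
        if table in project and source[table] - project[table]
    }
    return new_tables, new_columns


def apply_selected(project: Metadata, source: Metadata,
                   accepted_tables: Set[str],
                   accepted_columns: Dict[str, Set[str]]) -> Metadata:
    """Return a copy of the project metadata with only the accepted additions applied."""
    updated = {table: set(cols) for table, cols in project.items()}
    for table in accepted_tables:
        updated[table] = set(source[table])
    for table, cols in accepted_columns.items():
        # Only add columns that really exist in the source table.
        updated[table] |= cols & source[table]
    return updated
```

Skipping a detected change simply means leaving it out of accepted_tables or accepted_columns; the original project metadata is never modified in place.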

General

Scalability

This version introduces an option to fine-tune performance by adjusting the number of database connections as necessary.

To this end, the following option has been added to the project settings' Environment tab in both Data Warehouse projects and Data Lake projects:

Limit the number of database connections to: The higher the number of database connections, the more data warehouse tables Compose will be able to create or drop in parallel. While increasing the default should improve performance, it may also impact other database applications. It is therefore not recommended to increase the default unless you encounter performance issues.
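The trade-off described above is a bounded-concurrency pattern: the limit caps how many create or drop statements run at once. As a rough Python sketch (an illustration of the idea, not how Compose manages its connections), a thread pool whose size equals the connection limit gives the same guarantee; create_or_drop stands in for a hypothetical function that executes one statement over its own connection.

```python
import threading
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, List, TypeVar

T = TypeVar("T")


def run_with_connection_limit(table_names: Iterable[str], max_connections: int,
                              create_or_drop: Callable[[str], T]) -> List[T]:
    """Call create_or_drop(name) for every table, at most max_connections at a time.

    Each worker thread stands in for one database connection, so the pool
    size caps how many statements run in parallel.
    """
    if max_connections < 1:
        raise ValueError("max_connections must be at least 1")
    with ThreadPoolExecutor(max_workers=max_connections) as pool:
        # map() returns results in the same order as the input names.
        return list(pool.map(create_or_drop, table_names))


class ConcurrencyTracker:
    """Records the peak number of operations running at the same time."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self.active = 0
        self.peak = 0

    def __enter__(self) -> "ConcurrencyTracker":
        with self._lock:
            self.active += 1
            self.peak = max(self.peak, self.active)
        return self

    def __exit__(self, *exc) -> None:
        with self._lock:
            self.active -= 1
```

Raising max_connections lets more statements overlap, which is where the speed-up comes from, but every extra worker is another session competing with other database applications.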

CLI for clearing the metadata cache

In addition to the existing console-based functionality, you can now use the Compose CLI to clear the storage zone or data warehouse metadata cache, and the landing zone metadata cache.

End of Life/Support and Deprecated Features

This section provides information about End of Life versions, End of Support features, and deprecated features.

- Internet Explorer will no longer be supported from Compose November 2021.

Resolved Defects

The table below lists the resolved issues for this release.

May 2021

| Component/Process | Description | Customer Case # |

| ETL Statements | A lookup expression for a table with an attribute name similar to the “FromDate” column name would result in an invalid statement being generated for loading the staging table. | 2125644 |

| Data Marts | In rare scenarios, when loading the data warehouse, data mart tables would sometimes be unnecessarily reloaded as well. | 2100849 |

| Snowflake on Azure and Microsoft SQL Server | The number of inserted rows reported in the UI would sometimes be incorrect. | 2100828 |

| Mappings | When there were multiple partial mappings for Type 1 attributes, only columns mapped in the first mapping would be updated in the Data Warehouse table. | 2145629 |

| Export/Import CLI - Data marts | When multiple relations in the model pointed to the same entity, an incorrect CSV file would sometimes be generated when exporting the data mart using the CLI. The issue is resolved in this version. After installing this version, export the changes and then apply them from the newly created CSV file. | 2125704 |

| UI | When there were numerous mappings to the same entity, it would not be possible to scroll or click in the Manage ETL Sets window. | 2145533 |

| Server | In Data Lake non-ACID projects, changes would sometimes be processed from partitions that were still open. | N/A |

| Server | In Data Lake non-ACID projects, duplicate records would sometimes appear in the Views if change processing did not complete successfully. | N/A |

| ETL Statements | In rare scenarios, an error would be encountered when generating an ETL with a lookup statement. | 2155502 |

| ETL Statements | When running a data warehouse task to load a Type 2 entity, the following error would sometimes occur: Cannot return unknown process parameter | 2100849 |

| ETL Statements | When the primary key had multiple segments, the generated ETL statements would sometimes insert columns in the wrong order. | 2161449 |

| UI - Connectivity | After changing the data warehouse connection from Advanced to Standard, the source database would still attempt to use the settings defined in the Advanced connection string. | 2121114 |

| Performance | Working with projects with numerous mappings would sometimes impact UI performance and prolong ETL generation. | 2149788 |

| UI Responsiveness | Working with projects containing many mappings would impact UI responsiveness in certain scenarios. | 2149788 |

| Amazon EMR - CDC | When attempting to run a CDC task against an Amazon EMR cluster configured with Glue Metadata, the "UPDATE" statement would constantly fail. | 2168085 |

| Snowflake | Data warehouse creation would fail when Date and Time entities were added to the Model. | 2180960 |

Qlik Compose May 2021 - Patch Release 07 (build 2021.5.0.267)

These release notes provide details on the resolved issues and/or enhancements included in this patch release. All patch releases are cumulative, including the fixes and enhancements provided in previous patch releases.

Note: This patch release must be installed to ensure compatibility with Replicate May 2021 PR03 or later.

| Component/Process | Description | Customer Case # | Internal Issue # |

| Security | Fixes critical vulnerabilities (CVE-2021-45105, CVE-2021-45046, CVE-2021-44228) that may allow an attacker to perform remote code execution by exploiting the insecure JNDI lookups feature exposed by the log4j logging library. The fix replaces the vulnerable log4j library with version 2.17. | N/A | RECOB-4369 |

PR06 (build 2021.5.0.198)

| Component/Process | Description | Customer Case # | Internal Issue # |

| Data marts | Adding data mart dimensions would sometimes fail without a clear error. | 2231873 | RECOB-3956 |

| Data warehouse validation | The following error would occur when validating the data warehouse: Index was out of range. Must be non-negative and less than the size of the collection | 8634 | RECOB-3920 |

PR05 (build 2021.5.0.176)

| Component/Process | Description | Customer Case # | Internal Issue # |

| ETL Statements | When an entity had a Primary Key relation to another entity whose Primary Key column order had been changed, an incorrect join clause would be generated for late arriving data. This would result in the table column order being inconsistent with the mappings. | 2260091 | RECOB-3745 |

| ETL Statements | Primary Key columns that were also Foreign Key columns would be ordered incorrectly. | 2260091 | RECOB-3804 |

PR04 (build 2021.5.0.155)

| Component/Process | Description | Customer Case # | Internal Issue # |

| Backdating | Migrating a project from an older version would disable the backdating options. The issue was resolved by adding a new CLI command that sets the "Add actual data row and a precursor row" option for all entities as well as in the project settings. The command syntax is: composecli set_backdating_options --project project_name (where project_name is the name of the project). After running the command, refresh the browser to see the changes. | 2240557 | RECOB-3652 |

| Discover - Snowflake | Discovering from Snowflake would fail when tables had foreign keys. | 2260256 | RECOB-3718 |

PR03 (build 2021.5.0.130)

| Component/Process | Description | Customer Case # | Internal Issue # |

| User Interface | After clicking OK in the database connectivity settings, Compose would sometimes take a long time to return to the project design user interface. | 2243100 | RECOB-3577 |

| Replicate compatibility | The Replicate Client was updated to support the latest Replicate patch release. | N/A | RECOB-3609 |

PR02 (build 2021.5.0.79)

| Component/Process | Description | Customer Case # | Internal Issue # |

| ETL Statements - Error marts | In rare scenarios, when the mapping contained filters and more than one data validation rule was defined, the filters would not be applied when moving the data to the error marts, resulting in incorrect data. | N/A | RECOB-3087 |

| Databricks on Google Cloud | Added support for Databricks on Google Cloud storage (version 8.x) | N/A | RECOB-3096 |

PR01 (build 2021.5.0.78)

| Component/Process | Description | Customer Case # | Internal Issue # |

| UI - Table Creation Modifiers | The Table Creation Modifiers feature was mistakenly available in Data Lake projects. It has now been removed. | N/A | RECOB-2804 |

| UI - Lookup | A "Project Settings is null" error would be encountered when clicking the Show Lookup Data button in the Select Lookup Table window. | N/A | RECOB-2860 |

| Amazon EMR | When using Amazon EMR with Glue, the following error would sometimes be encountered when performing Full Load of many tables (excerpt): ERROR processing query/statement. Error Code: 10006, SQL state: TStatus(statusCode:ERROR_STATUS, | 2193872 | RECOB-2869 |

| Project deployment - Snowflake | After deploying a project with a Snowflake data warehouse, Compose would fail to run queries on Snowflake. | 2201916 | RECOB-2894 |

| Generate project command | When the database_already_adjusted parameter was included in the generate_project command, Compose would drop and recreate the data mart tables. | 2191511 | RECOB-2739 |

| Microsoft SQL Server | When using Windows authentication, the JDBC connection would fail with a "This driver is not configured for integrated authentication" error while running a Compose task. | 2201049 | RECOB-2959 |

| Generate Project Documentation | When trying to generate project documentation, an "Object reference not set to an instance of an object." error would sometimes be encountered. | 2189238 | RECOB-2771 |

| Microsoft SQL Server | Using VARCHAR to calculate the checksum would sometimes result in an incorrect value. This was fixed by using VARCHAR(MAX) instead. After installing this patch, you need to regenerate all the task ETLs (which can also be done using the generate_project CLI command; for details, see the Compose Help). It is also recommended to reload any data that contains strings. For an explanation of how to reload data, see the Compose Help. | 2192529 | RECOB-2845 |

| ETL Statements - Satellite Tables | In rare scenarios related to backdating, the value in the "From Date" column would be different from the value in the "To Date" column of the preceding record. | 2165287 | RECOB-2723 |

| Export to CSV | The ComposeCli export_csv command would fail with an "Object reference not set to an instance of an object" error when custom ETLs were present in the project (e.g. multi-table ETL, single table ETL, etc.). | 2200378 | RECOB-3073 |

Known issues and limitations

The table below lists the known issues for this release.

| Component/Process | Description | Ref # |

| Schema Evolution - New Columns | When using Replicate to move source data to Compose, both the Full Load and Store Changes replication options must be enabled. This means that when Replicate captures a new column, it is added to the Replicate Change Table only. In other words, the column is stored without being added to the actual target table (which, in terms of Compose, is the table containing the Full Load data only, i.e. the Landing table). For example, assume the Employees source table contains the columns First Name and Last Name, and the column Middle Name is later added to the source table. The Change Table will contain the new column while the Replicate Full Load target table (the Compose Landing table) will not. In previous versions of Compose for Data Warehouses, mappings relied on the Full Load tables (the Compose Landing tables), meaning that users were not able to see any new columns (such as Middle Name in the example above) until they were created in the Full Load tables via a reload. From this version, the Compose Discover and Mappings windows will show new columns from both the Change Tables and the Replicate Full Load target tables, which allows Schema Evolution to suggest adding columns that exist in either of them. Although this is a much better implementation, it may create another issue: if a Full Load or Reload occurs in Compose before the Replicate reload, Compose will try to read from columns that have not yet been propagated to the Landing tables (as they exist only in the Change Tables). In this case, the Compose task will fail with an error indicating that the columns are missing. Should you encounter such a scenario, either execute a reload in Replicate or create an additional mapping without the new columns to allow Compose to perform a Full Load from the Landing tables. | N/A |

| Qlik Compose - Data Lake Tasks | Qlik Compose Data Lake tasks are currently not shown in Qlik Enterprise Manager. | RECOB-2587 |
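Before triggering a Compose Full Load or Reload in the scenario described in the first known issue, you could check which mapped columns exist only in the Change Table. The Python sketch below assumes you have already obtained the three column lists (how you read them is outside its scope), and its function names are hypothetical. It returns the columns that would make the task fail and the column list for an additional mapping that omits them.

```python
from typing import Iterable, List, Sequence, Set


def columns_missing_from_landing(mapped_columns: Sequence[str],
                                 landing_columns: Set[str],
                                 change_table_columns: Set[str]) -> List[str]:
    """Return mapped columns that exist in the Change Table but not in the Landing table.

    Reading such a column from the Landing table fails until Replicate
    reloads the table and propagates the column.
    """
    return [
        column for column in mapped_columns
        if column in change_table_columns and column not in landing_columns
    ]


def mapping_without(mapped_columns: Sequence[str], excluded: Iterable[str]) -> List[str]:
    """Return the mapped columns minus the excluded ones, preserving order."""
    excluded_set = set(excluded)
    return [column for column in mapped_columns if column not in excluded_set]
```

An empty result from columns_missing_from_landing means every mapped column is already present in the Landing table, so a Compose Full Load should not hit this particular error.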
