GeoAnalytics challenge, travel areas use case

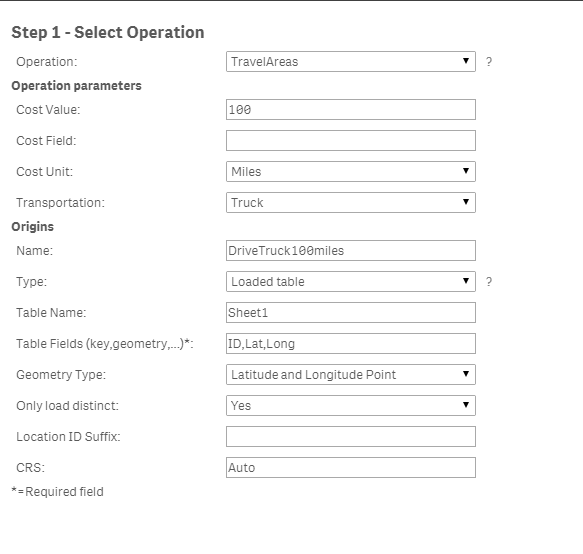

These are the steps we followed, working with the Qlik GeoAnalytics .ppt file as a reference.

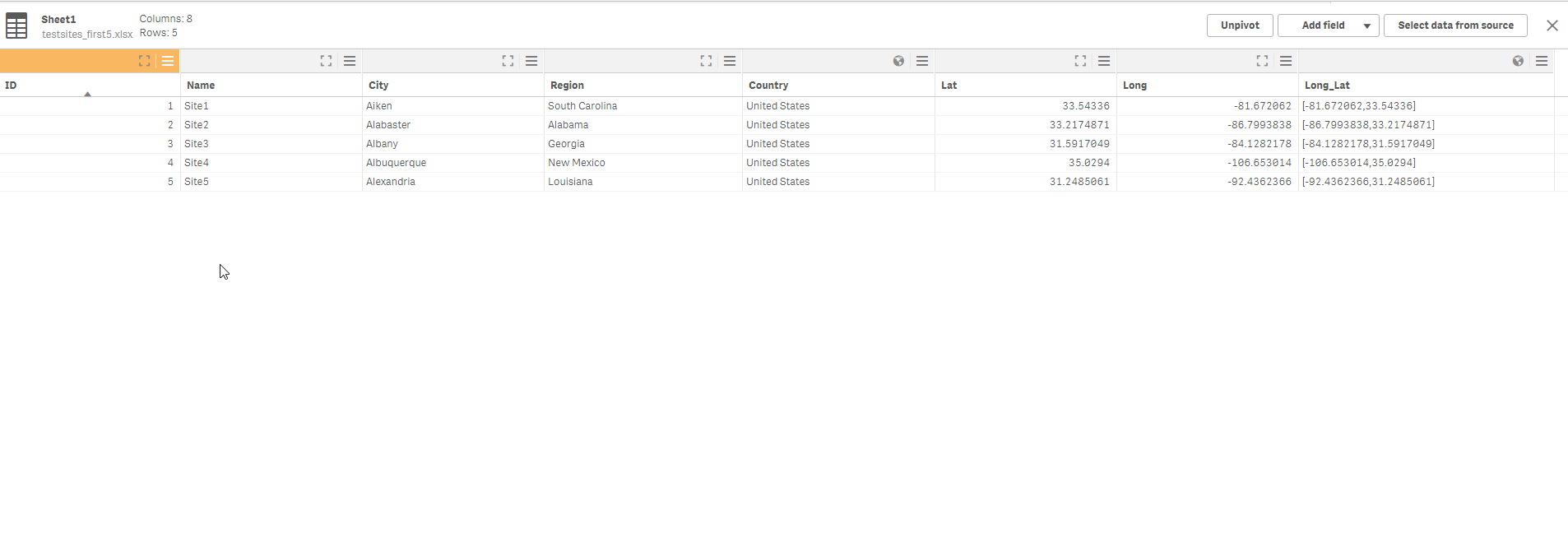

1. Load the file in Data Manager. Data Manager creates the Long_Lat geopoint automatically.

2. Go to the Data load editor.

3. Confirm that we have a working connection. It checks out.

4. Choose the "TravelAreas" operation and configure it:

a. Cost Value = 100

b. Cost Field = blank

c. Cost Unit = Miles

d. Transportation = truck

e. Name = DriveTruck100miles

f. Type = Loaded Table

g. TableName = sites

h. Table Fields = ID,Long_Lat

i. Type = point

j. Only distinct = yes

5. Choose "TravelAreas columns", and Insert Script

6. Load Data

The following error occurred:

Connector reply error: QVX_SYNTAX_ERROR: Failed to process query: Failed to create dataset DriveTruck100miles: Malformed POINT geometry: .

The error occurred here:

?

We've also tried loading just a single value, and we get the same error.

The data and .qvf are both attached. A support case has been created, and it is being treated as a bug thus far. I suspect it has something to do with our lat/long values, but they seem valid.
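
One way to test the suspicion about the lat/long values is to check every row before it reaches the connector. The following is a minimal Python sketch, not part of the Qlik workflow itself. It assumes the sheet has been read into dictionaries (for example with `csv.DictReader`), and the column names `ID`, `Lat`, and `Long` are assumptions based on the fields mentioned in this thread.

```python
import math


def find_invalid_coordinates(rows, lat_key="Lat", long_key="Long", id_key="ID"):
    """Return (id, reason) pairs for rows whose coordinates would not parse.

    The column names are assumptions; adjust them to match the real sheet.
    """
    problems = []
    for row in rows:
        row_id = row.get(id_key)
        # Latitude must lie within +/-90 degrees, longitude within +/-180.
        for key, limit in ((lat_key, 90.0), (long_key, 180.0)):
            raw = row.get(key)
            text = "" if raw is None else str(raw).strip()
            if not text:
                problems.append((row_id, f"{key} is blank"))
                continue
            try:
                value = float(text)
            except ValueError:
                problems.append((row_id, f"{key} is not numeric: {text!r}"))
                continue
            if not math.isfinite(value) or abs(value) > limit:
                problems.append((row_id, f"{key} out of range: {value}"))
    return problems
```

An empty result means every row holds a finite number in range. Any entry in the list points at a row worth inspecting in the original spreadsheet.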

Hoping for assistance!

Additional note: for steps 4h and 4i, if we use separate Lat and Long fields instead of the point, we get a different error.

The following error occurred:

Connector reply error: QVX_SYNTAX_ERROR: Failed to process query: Failed to create dataset DriveTruck100Miles: Invalid latitude or longitude value in CSV, [,]

The error occurred here:

?

We've checked and confirmed that there are no "," characters in our .xls columns for Lat and Long.
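
The `[,]` at the end of that message may be a coordinate pair in which both values arrived empty, rather than a stray comma in the data. This is only a guess, since nothing in this thread confirms how the connector builds the message. A minimal Python sketch of the idea, assuming the `[long,lat]` order suggested by the Long_Lat field name and hypothetical column names `ID`, `Lat`, and `Long`:

```python
def to_point_text(lat, long):
    """Format a pair as '[long,lat]' text; blank or missing cells stay blank."""
    def cell(value):
        return "" if value is None else str(value).strip()
    return f"[{cell(long)},{cell(lat)}]"


def blank_points(rows, lat_key="Lat", long_key="Long", id_key="ID"):
    """Return the IDs of rows whose point text collapses to '[,]'."""
    return [
        row.get(id_key)
        for row in rows
        if to_point_text(row.get(lat_key), row.get(long_key)) == "[,]"
    ]
```

If `blank_points` returns any IDs, those rows carry no coordinates at all, which would be consistent with both error messages without any comma being present in the source columns.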

Hi,

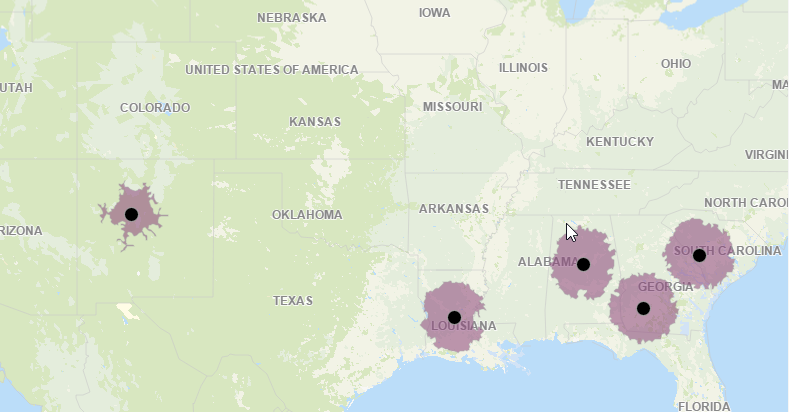

Please see the attached app! I used the "lat and lon point" geo type with a subset of your data.

When applicable please mark the appropriate replies as CORRECT. This will help community members and Qlik Employees know which discussions have already been addressed and have a possible known solution. Please mark threads as HELPFUL if the provided solution is helpful to the problem, but does not necessarily solve the indicated problem. You can mark multiple threads as HELPFUL if you feel additional info is useful to others.

Regards,

Jonas Karlsson

Qlik

Hi Jonas, thank you. It is good to see that I'm setting the parameters correctly.

I've chosen a few random subsets of data, and have also tried removing duplicate values first, but still continue to have the same error.

Do you mind providing your "Sheet1" to me, and I'll try with exactly the same data set?

Hi,

I just used a new spreadsheet. Sheet1 is just the name of the table.

Regards,

Jonas Karlsson

Qlik

Hi Dave and Jonas,

I believe the issue has to do with the formatting of the data in the original tables. As noted, Jonas created a new spreadsheet that got rid of the alternating colors. I believe there were also two records with orange cell highlights. I am guessing that when the xlsx is converted into CSV, something happens with the data thus causing the error.

I was also able to get the connector to work after stripping out the formatting.
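
The thread does not establish what in the formatting broke the load. One possible mechanism, offered only as an assumption, is that formatted but empty cells or padded values reach the connector as blank coordinates. A minimal Python sketch of a values-only copy, assuming the sheet has been exported to CSV text and using the hypothetical column names `ID`, `Lat`, and `Long`:

```python
import csv
import io


def values_only_csv(source_text, required=("ID", "Lat", "Long")):
    """Copy CSV text, trimming cells and skipping rows with a blank required cell.

    The column names in `required` are assumptions, not taken from the real file.
    """
    reader = csv.DictReader(io.StringIO(source_text))
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=list(required), lineterminator="\n")
    writer.writeheader()
    for row in reader:
        # Replace non-breaking spaces, then trim ordinary whitespace.
        cleaned = {
            key: (row.get(key) or "").replace("\u00a0", " ").strip()
            for key in required
        }
        # Keep the row only when every required cell has a value.
        if all(cleaned.values()):
            writer.writerow(cleaned)
    return out.getvalue()
```

Loading the returned text instead of the original workbook is roughly the scripted equivalent of retyping the values into a fresh spreadsheet, which is what resolved the problem here.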

Regards,

Thomas

Hi Jonas, it works! It turns out the problem was a formatting issue in the original .xls document. We removed all formatting and it worked. Your reply helped Thomas Lin figure that out. Working great now, thank you for your assistance.