Unlock a world of possibilities! Login now and discover the exclusive benefits awaiting you.

- Qlik Community

- :

- Support

- :

- Support

- :

- Knowledge

- :

- Support Articles

- :

- Filter for last 90 days of data in Qlik Replicate

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Filter for last 90 days of data in Qlik Replicate

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Filter for last 90 days of data in Qlik Replicate

Jul 9, 2024 3:33:03 AM

Jan 13, 2022 10:46:42 AM

This article references two options for filtering the last 90 days worth of data on a date column in Qlik Replicate.

Additionally, the goal is to show a working example of full load pass thru vs record selection condition so users can adjust filters to different types or conditions.

Option 1: Full load passthru

The full load pass thru filter is more efficient since it filters directly on the source. For this to work, you need to use the exact syntax of the source database. It can only be applied to full load tasks, not change data capture mode.

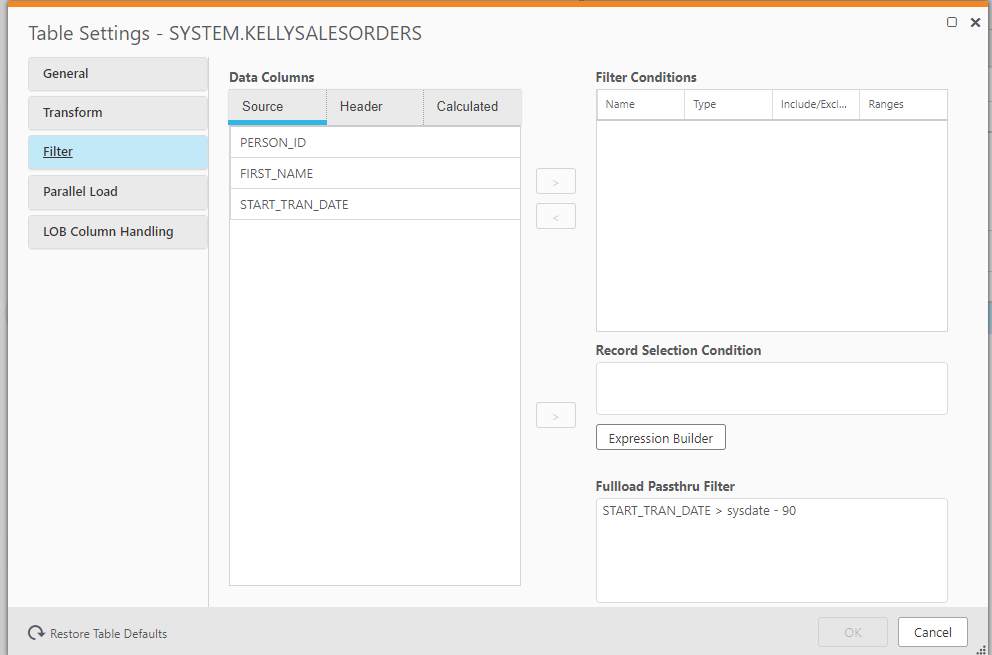

Example with Oracle source where START_TRAN_DATE is of type DATE:

START_TRAN_DATE > sysdate - 90

To open with Full load Passthru available:

- Select the table you want to filter on in the Designer tab

- Hit CTRL + Table Settings

- Click okay and save your task.

If you see the error:

Table 'x' cannot be reloaded because a passthrough filter is defined for it. Passthrough filters allow task designers to control SQL statements executed on source database tables during replication. To continue using passthrough filters, you must explicitly set "enable_passthrough_filter" to true in the "C:\Program Files\Attunity\Replicate\bin\repctl.cfg" file. Otherwise, remove the passthrough filter from this table and any other tables defined with passthrough filters. [1020439] (endpointshell.c:3716)

Then:

- Open C:\Program Files\Attunity\Replicate\bin\repctl.cfg

- Add:

"enable_passthrough_filter": true - Restart the Qlik Replicate Server service.

Option 2: Record Selection Condition

This option can be used for full load and change data capture task but is not as efficient for large tables as full load passthru. It does not have to be the exact syntax of the source, i.e the example below can work on many sources. This is because the filter condition function is an SQLite function within Replicate.

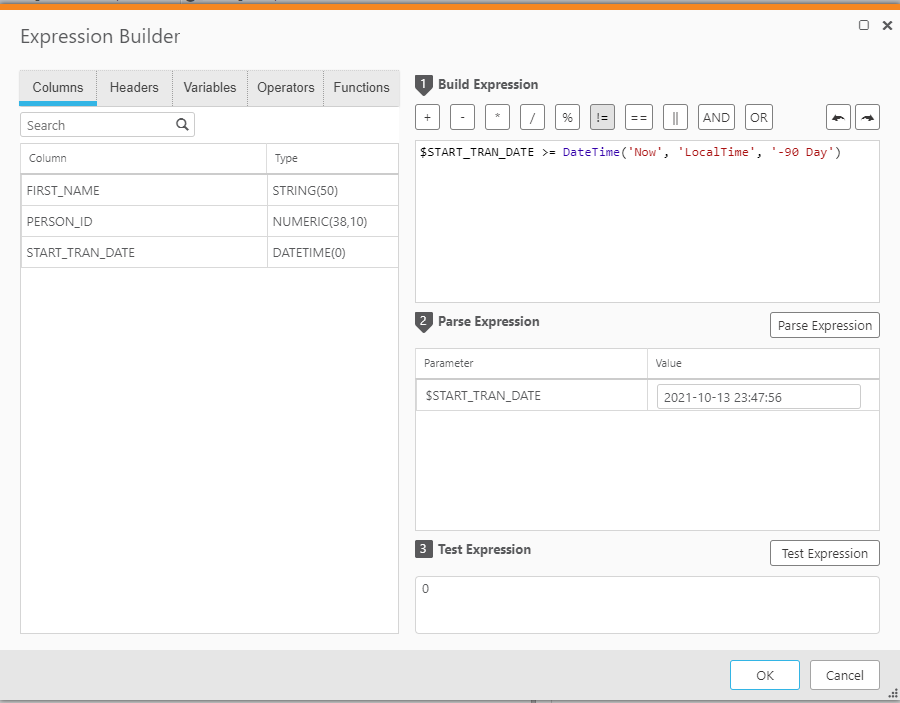

An example where START_TRAN_DATE is of type DATE

$START_TRAN_DATE >= DateTime('Now', 'LocalTime', '-90 Day')

- Go to Task Designer

- Open Table Settings

- Click Filter

- Choose Expression Builder

- Fill out 1: Build Expression with:

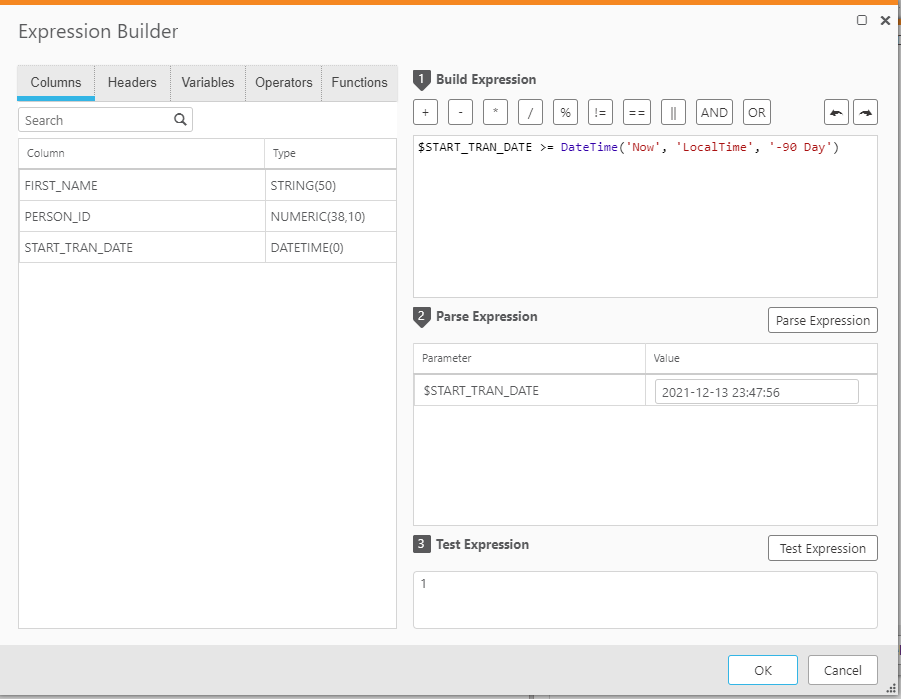

$START_TRAN_DATE >= DateTime('Now', 'LocalTime', '-90 Day') - Click Parse Expression

- Fill out 2: Parse Expression with:

2021-10-13 23:47:56 - Click Test Expression

This should return 0. - Fill out 2: Parse Expression with:

2021-12-13 23:47:56 - Click Test Expression

This should return 1.

- Fill out 1: Build Expression with:

- Click OK.

- Save your task

Related Content:

Filter for last 90 days part two with postgres example

Environment:

The information in this article is provided as-is and to be used at own discretion. Depending on tool(s) used, customization(s), and/or other factors ongoing support on the solution below may not be provided by Qlik Support.

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Is there a place in log where one can see whether replicate uses "enable_passthrough_filter": true or not? . How do I know whether replicate process uses latest changes from repctl.cfg file?

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Hello @S_Ravi!

I reached out to a subject matter expert for you and have an answer for you:

If "enable_passthrough_filter" is set to true, then (in the passthrough builder there is an expression) this message is written in the log:

2022-03-03T19:34:05:966289 [SOURCE_UNLOAD ]I: Table 'SCOTT.KIT' is unloaded using the passthrough filter expression 'ID > 2' (endpointshell.c:3783)

All the best,

Sonja

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

I was wondering why the passthrough filter is not enabled by default and I found this comment by @john_wang in this post:

Quote:

A recent product hardening change to Replicate has the passthrough feature turned off by default. When disabled, using passthrough filters will cause the replication task to fail when trying to reload a table for which a passthrough filter is defined. While the passthrough filter feature can be re-enabled, it is not recommended if the user configured for the source endpoint has update permissions on the source database. An alternative approach is to use a view in the source database to implement the filter.

If the passthrough filter is enabled, what could happen in the worst case?

It would be helpful in order to evaluate the risk when turning it on.

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Hey @AndreasFenske,

Because the passthrough filter can interact directly with the source database, there is some risk involved if you use incorrect syntax or logic in the passthrough filter.

As John pointed out, this applies to users with update permissions on the source DB. If you check the Qlik user guide by source, it will provide more information about what permissions are required for the replicate user. Additionally, you can check with source dba to see if replicate user has update permission.

Thus so long as your user does not have update privileges and you test your filter prior, it's safe to use.

Best,

Kelly

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Hi Kelly,

We noticed that data that is older then the filters stays on the target tables. This sometimes causes some problems with certain unique keys we create on the target table. As the old data, which might have changed in the source system, but doesen't get transferd due to the filter, is not be the same anymore.

Are there any best practices how to delete all records that are older then the filter (sysdate-90) on the target database (so in our example, sysdate - 91 and older would be gone)? We are using Oracle as a source and MSSQL as the target.

Thanks for your help.

Best regards,

Marc

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Hey @Finnova

Thanks for reaching out about this topic.

When you are applying the 90 day pass thru filter and running a full load, do you have 'Drop and Create' set in Task Settings -> Full Load Settings -> Target Table Preparation ?

With these settings this will drop the existing target table and create a new table with only the past 90 days worth of data. One idea would be renaming the table to a certain cohort value every time the full load is run (table_q1,table_q2,etc.) and think if this table as a snapshot in time and only that period. Depends on your use case/ how often you initiating the full load.

Additionally, please note, full load passthru filters are designed for full load portion of tasks only, not change data capture mode. This is because it is a direct query on the source.

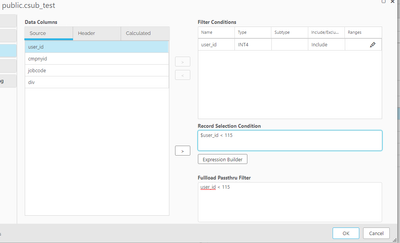

If you need change data capture mode enabled on a task, I would recommend a full load pass thru filter (for full load) and a record level selection condition (for CDC).

Here is an example for capturing records with an user_id less than 115 on the full load and also CDC of a task:

Let me know if you have any follow up questions.

Best,

Kelly

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Hi Kelly,

Thanks for the prompt answer.

First to your question:

When you are applying the 90 day pass thru filter and running a full load, do you have 'Drop and Create' set in Task Settings -> Full Load Settings -> Target Table Preparation ?

No we use "TRUNCATE before loading"

We do use the passthrough filter though and have also used the cdc filter.

Our questions:

As we do not perform full loads very regularly, we get allot of "discontinued data", which is outside of the range of the CDC filter. As this data on the target can be very different to what is on the source (maybe it doesn't eaven exist there anymore) this can cause problems with unique indexes we implemented on the target.

Our idea is to maype run a scheduled job on the MS SQL target db which deletes all this "old discontinued data" so it is not in the way anymore and cannot cause problems with the unique indexes.

Our question is how do other customers do this / what are the your best practices or are we the only ones with this problem?

Thanks for your help.

Best regards,

Marc

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Hey @Finnova

That's interesting, if the data is truncated (all rows removed, but table structure remains) before the load, then it should remove dates outside of the range.

Are you experiencing any errors or issues running the load? If willing, can you DM me your task json (Task Designer -> Export Task) ?

It is possible to have a job or a query on the SQL Server target side where you filter for only past 90 days worth of data (or the exact date range you have defined for the particular table). This can be done by your DBA with some SQL code.

Best,

Kelly

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Hey Kelly

When we perform a full load of the task the filter also gets applied with us (with the truncate before load settings) as after the table is truncated and completly empty, only the data in range will be transfered.

In CDC mode data outside of the range of the filter still exists. As we don't do full loads very often it can become a substancial amount of old discontinued unusable data.

But then if I read your reply corectly there is no way to reduce the amout of data on the target db with Qlik Replicate but the full load. I was guessing there might be an option of a special Qlik Replicate load that will keep the data in the filtered range but clean out all the old data (as this would be much faster then a full load), but I guess this dosn't exist. It would be handy though as we don't like to modify (or delete) data in the target db that is beeing loaded by Qlik Replicate, as problems can occur that will make the Qlik Replicate task fail (like waits from locks etc.).

Best regards,

Marc

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Hey @Finnova ,

I posted a follow up article with another example of restricting to a 90 day window in a postgres source task. In this example, I hardcode record selection conditions to stay within an explicit date range.

Depending on your requirements, its possible to set up a task for each date range with respective record selection filters set up. This would prevent having to touch anything on the target end.

For your comment: "I was guessing there might be an option of a special Qlik Replicate load that will keep the data in the filtered range but clean out all the old data (as this would be much faster then a full load), but I guess this dosn't exist". No, at this point the functionality for this does not exist for a rolling window filter. If you would like to propose as a feature request, please visit our Ideations Page.

Best,

Kelly