Unlock a world of possibilities! Login now and discover the exclusive benefits awaiting you.

- Qlik Community

- :

- Support

- :

- Support

- :

- Knowledge

- :

- Support Articles

- :

- Merging the Hard to Imagine with Simplicity

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Merging the Hard to Imagine with Simplicity

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Merging the Hard to Imagine with Simplicity

Hard to Imagine

Let's imagine you have millions/billions of rows of disparate data. That's not hard to imagine. You do.

Let's imagine that you need to create analytics from all that data. That's not hard to imagine. You do.

Let's imagine that you have a data integration pipeline with change data capture to keep changes constantly flowing for those sources. That's not hard to imagine. You do.

Let's imagine that you want to quickly get those changes into your analytics applications being used by thousands of end users without having to touch the other millions/billions of rows of disparate data you already loaded. WOW! Now that is hard to imagine.

Qlik Basics

Before I propose a super simple solution to that hard to imagine issue, I need to share just a few Qlik basics to solidify my proposal:

- Qlik encourages you to load each table individually so that you can take advantage of the Qlik Associative Engine, rather than pushing down SQL statements with JOINS.

- Qlik encourages you to store the tables into binary* storage (QVD files).

- Qlik encourages you to separate the concept of all that data from your visual applications so that your QVD files can be reused by multiple applications.

Those concepts are different. They are unique. Sometimes those things can be frustrating for those new to Qlik because it makes them think differently than they have for their entire life. I get that. It is these differences that allow Qlik customers to associate disparate data sources in-memory for speed of thought insights with minimal impact to underlying source systems.

Don't get me wrong. You do not have to take advantage of them, or follow this model at all. You can absolutely use Qlik like other tools. You can push down any SQL you want, including statements that contain as many JOIN clauses as needed. You can pull data live from your data sources on the fly via Direct Query, On Demand Application Generation or Dynamic Views. Each of those options have use cases that they are well suited for. They just aren't suited for this enterprise use case where we have millions/billions of rows of data that we need to analyze. While also rapidly incorporating any changes that might be flowing through our data integration pipelines so that our impact of reading the data, and the costs associated are kept to a minimum.

Incremental Loads

Those Qlik basics I referred to, have always enabled Qlik architects to perform incremental loads of data. The changes. Regardless of whether the source systems involved:

- Only Insertions

- Insertions plus Updates

- Insertions, Updates and Deletes

Qlik architects could write script that would load and merge those incremental changes as required. Allowing for super rapid data refreshes that had little impact on the source systems. While awesome, there were some minor issues. Architects had to know what the source systems allowed, and had to write different types of scripts for each of the use cases. Deletions were thought provoking for sure. Neither is hard to overcome because there are a ton of posts online you can find with examples. Including Qlik's own help site.

Simplicity

The only thing that could make the situation any easier is if the source systems would flat out say "You need to insert/update/delete this row of data." If they did that, Qlik data architects could simply say "Here is the existing data I already have loaded into memory, now go merge these changes that the system has."

Assuming of course that Qlik provided a MERGE function that would:

- Insert the rows that the source system said needed inserted.

- Update the rows that the source system said needed updated.

- Delete the rows that the source system said needed deleted.

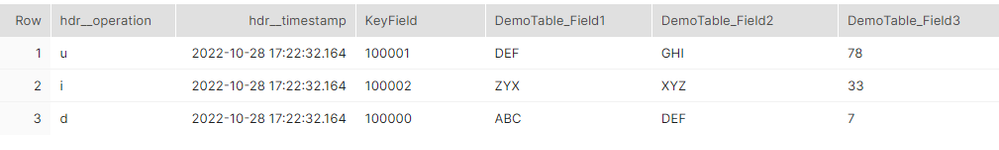

The great news is that Qlik does indeed provide a MERGE function that will do just that. But what about the source systems? As it turns out whether you are using Qlik Replicate on-premise, or Qlik Cloud Data Integration in your SaaS/PaaS tenant, the change tables written provide the information needed for the merge function to work. Here is an example of data stored in the change table.

Well lookie theire. The update, insertion and deletion are clearly identified as well as the values impacted.

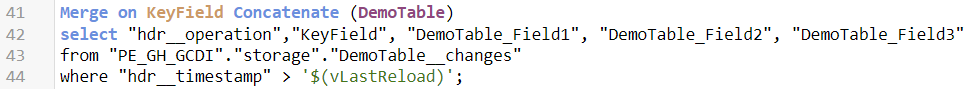

Here is an example of the code I wrote that passes that data to the Merge function in my load script:

To understand the merge function ... assume that I currently have a table called "DemoTable" in memory that has millions/billions of rows with the following columns in it: KeyField, DemoTable_Field1, DemoTable_Field2, DemoTable_Field3.

The "on" clause of the merge command indicates the field that represents the primary key for the table, in my case it is simply called "KeyField."

The "concatenate" clause of the merge command indicates the in-memory table that you want to merge data into/with.

The "select" clause is simply a typical SQL select statement that will pull the data that you want to merge. The first field of the return will have the values for update, insert, or delete so that the merge function will know what to do with that row.

The "where" part of the clause simply filters the selection to the rows in the change data capture table that have occurred since the last time I reloaded my data. Which in my case is stored in a variable and updated after each time the merge is performed.

Conclusion

Let's imagine that you want to quickly get changes that are flowing from your source systems with change data capture into your analytics applications being used by thousands of end users without having to touch the other millions/billions of rows of disparate data. Hard to imagine?

Not anymore, my friends, not anymore. Your load scripts can now quickly and easily incorporate these merges keeping your QVD files/applications up to date with very minimal impact on your systems, with minimal cost on your budget.

But wait, there's more! Because it is so lightweight/efficient, this merge functionality also provides the benefit of being able to be utilized via partial reloads. Meaning you can allow your end users to take action in their own sessions to perform the merge in use cases where it makes sense. Thus, enabling them to have the confidence that they are indeed seeing the very latest/greatest/newest/freshest data. You gotta be loving that.

Related Content

If you aren't already using Qlik Replicate or Qlik Cloud Data Integration and still want to take advantage of the Merge function you can. You just need a method of getting the update, insert and delete information from the system you are reading from. If you are using Snowflake you can take advantage of their time travel functionality as well. Click this link to read my post on using Snowflake's time travel with the merge function.

* Qlik QVD files typically offer 70-95% space savings over original data/text files.

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

@Dalton_Ruer Great article, thank you!

The "where" part of the clause, line 44 on the code example, for the change data capture, how would you handle it if you have null value in your table for timestamp/lastupdate, see screen shot below from our Oracle database. Can Qlik Replicate handle this with incremental load? How?