Behind the scenes with bracketchallenge.qlik.com a...

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Introduction

Most of you are probably aware of, and may already have participated in, the $100K competition that Qlik created for the college basketball tournament.

My colleague Arturo has written a nice introduction to what the app is and how to get involved: https://community.qlik.com/t5/Qlik-Design-Blog/Win-100K-The-Bracket-madness-has-started/ba-p/1558557

What very few people know is how we designed the entire solution and why we picked each component.

The first and most important decision was which Qlik product to use. We decided on Qlik Core, the cloud-native analytics development platform, because we needed to handle thousands of concurrent users, as fast as possible, in an independent cloud solution. The beauty of Qlik Core is that we use only the Qlik Associative Engine and decide on the front end how to handle the data that comes from it. This makes the app ultra-fast, since it opens one WebSocket connection per user session and the data transferred are just simple JSON objects!
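To make "simple JSON objects" concrete: each message the front end sends to the Qlik Associative Engine over its WebSocket is a JSON-RPC 2.0 request, where `handle` names the target object (`-1` is the Global object) and `id` lets the client match the response. The sketch below only builds and serializes such requests; it does not open a socket, and the app name `bracket.qvf` is a hypothetical placeholder. In practice enigma.js does this bookkeeping for you.

```typescript
// Shape of a QIX request: a JSON-RPC 2.0 object sent over the session's WebSocket.
// `handle` identifies the engine-side object the method is called on (-1 = Global).
interface QixRequest {
  jsonrpc: "2.0";
  id: number;
  method: string;
  handle: number;
  params: unknown[];
}

// Builds requests with increasing ids so each response can be matched to its request.
class QixRequestBuilder {
  private nextId = 1;

  build(handle: number, method: string, params: unknown[] = []): QixRequest {
    return { jsonrpc: "2.0", id: this.nextId++, method, handle, params };
  }
}

const builder = new QixRequestBuilder();
// "bracket.qvf" is a hypothetical app name used only for illustration.
const openDoc = builder.build(-1, "OpenDoc", ["bracket.qvf"]);
const payload = JSON.stringify(openDoc);
console.log(payload);
// {"jsonrpc":"2.0","id":1,"method":"OpenDoc","handle":-1,"params":["bracket.qvf"]}
```

The whole payload is a few dozen bytes, which is why a single long-lived WebSocket per user session scales well.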

The second thing we were worried about was concurrency, and therefore scalability. We needed to handle thousands of concurrent users and, whenever a server reached a certain percentage of RAM and CPU, spin up another one and route new requests to it. That is where Qlik's awesome core-scaling repository became the key to, and the center of, our solution.

The Core-scaling Solution

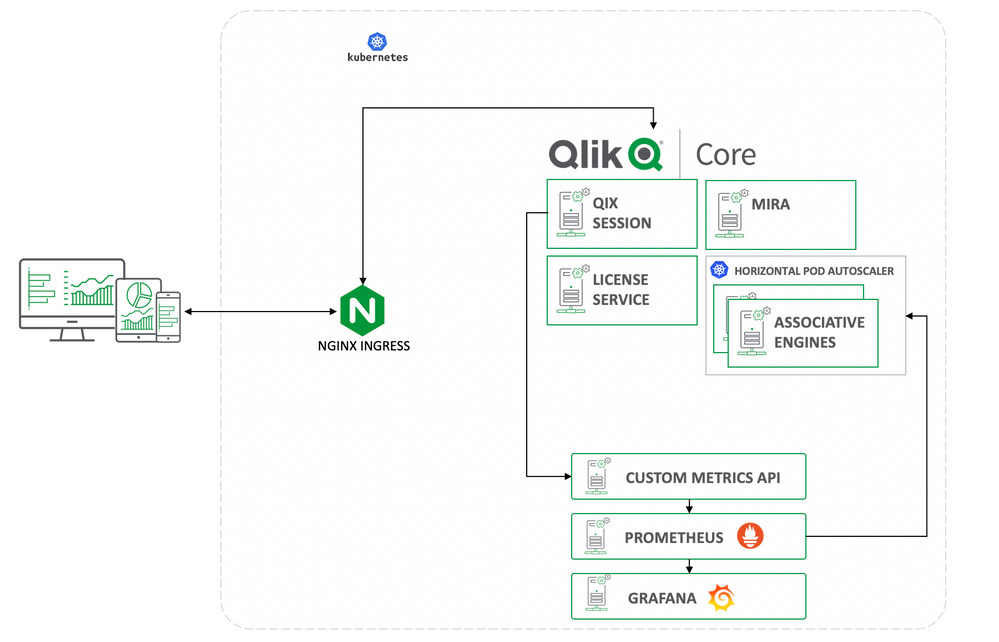

This GitHub repository was the beginning and the foundation of the entire solution. I used Kubernetes as our container orchestrator and started with the main containers that Qlik has included in the public repo: Grafana, Prometheus, Custom Metrics API, Nginx, Qix Session, Mira, License Service and the Qlik Engine. The repository targets Google Cloud, but I have made it work on Azure and AWS without any issues.

Each of these plays a crucial role in the cluster, and they are all needed.

The Custom Metrics API is a service that makes the metrics collected by Prometheus from various Kubernetes services available to Kubernetes itself, so they can drive autoscaling. It is mostly needed for scaling the engines, which is based on the average number of sessions per engine.

Prometheus is a service monitoring system that collects metrics from configured targets at given intervals. In our solution, we collect metrics from all of the servers so we can monitor performance and alert on any issues, whether infrastructure- or software-related.
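Prometheus collects those metrics by scraping an HTTP endpoint on each target, which answers in a plain-text exposition format: `# HELP` and `# TYPE` lines followed by one `name{labels} value` line per sample. The sketch below renders a gauge in that format, as a custom service might if it wanted its numbers scraped. The metric name `engine_active_sessions`, the `pod` label and the sample values are hypothetical; they are not the names the Qlik Associative Engine actually exports.

```typescript
// One sample of a gauge together with its label set.
interface Sample {
  labels: Record<string, string>;
  value: number;
}

// Escapes a label value as the text exposition format requires:
// backslash first, then double quote, then newline.
function escapeLabelValue(v: string): string {
  return v.replace(/\\/g, "\\\\").replace(/"/g, '\\"').replace(/\n/g, "\\n");
}

// Renders a gauge metric in the Prometheus text exposition format.
// The help text is assumed to contain no backslashes or newlines.
function renderGauge(name: string, help: string, samples: Sample[]): string {
  const lines = [`# HELP ${name} ${help}`, `# TYPE ${name} gauge`];
  for (const s of samples) {
    const labels = Object.entries(s.labels)
      .map(([k, v]) => `${k}="${escapeLabelValue(v)}"`)
      .join(",");
    lines.push(labels ? `${name}{${labels}} ${s.value}` : `${name} ${s.value}`);
  }
  // The format expects every line, including the last, to end with a newline.
  return lines.join("\n") + "\n";
}

// Hypothetical metric and values, for illustration only.
const text = renderGauge("engine_active_sessions", "Active sessions per engine.", [
  { labels: { pod: "engine-0" }, value: 1720 },
  { labels: { pod: "engine-1" }, value: 1690 },
]);
console.log(text);
```

Prometheus stores each labelled line as its own time series, which is what lets Grafana show both per-engine and total session counts.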

Grafana provides dashboards that visualize the metrics we are collecting. In the repo you will find a custom JSON dashboard for the engines, where you can view the resources utilized, total current sessions vs. sessions per engine, etc. I have included one more dashboard to monitor the health of the entire cluster, such as the total RAM and CPU used by all the services and the totals for each one.

The Nginx Ingress controller is a reverse proxy that handles all security and traffic flow into the cluster. I will walk through a common user flow through the cluster later on.

Qix Session handles the setup of a session and its placement on an engine, based on custom metrics.

Mira is a Qlik Associative Engine discovery service for containerized environments. Mira finds the available Qlik Associative Engine instances and the properties of each instance.
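To illustrate how discovery and placement fit together, here is a minimal sketch of a placement rule: take the engines a discovery service reports, skip unhealthy or full ones, and pick the engine with the fewest sessions. The `EngineInfo` shape is hypothetical, and least-sessions is a simple stand-in; it is not necessarily the weighting Qix Session really uses. The 3000-session cap is the limit this article mentions later.

```typescript
// Hypothetical shape of an engine as a discovery service such as Mira might report it.
interface EngineInfo {
  id: string;
  healthy: boolean;
  sessions: number;
}

// Per-engine session cap; 3000 is the limit used in this solution.
const MAX_SESSIONS_PER_ENGINE = 3000;

// Least-sessions placement: an illustrative stand-in for Qix Session's real logic.
// Returns undefined when no engine can accept another session.
function placeSession(engines: EngineInfo[]): EngineInfo | undefined {
  let best: EngineInfo | undefined;
  for (const e of engines) {
    if (!e.healthy || e.sessions >= MAX_SESSIONS_PER_ENGINE) continue;
    if (best === undefined || e.sessions < best.sessions) best = e;
  }
  return best;
}

const target = placeSession([
  { id: "engine-0", healthy: true, sessions: 1800 },
  { id: "engine-1", healthy: true, sessions: 250 },
  { id: "engine-2", healthy: false, sessions: 0 },
]);
console.log(target?.id); // engine-1
```

Returning `undefined` when every engine is full is the signal that the cluster needs another engine, which is exactly the gap the autoscaler fills.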

License Service checks license expiration and token consumption.
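The condition the License Service enforces can be sketched as a simple predicate: an engine may be offered for new sessions only while the license has not expired and at least one token is left. The `LicenseState` shape and the function name are hypothetical; the real License Service's data model and API are not described in this article.

```typescript
// Hypothetical license state; the actual License Service data model may differ.
interface LicenseState {
  expiresAt: Date;
  tokensTotal: number;
  tokensConsumed: number;
}

// True while the license is usable: not yet expired and with tokens remaining.
function licenseAllowsSessions(lic: LicenseState, now: Date = new Date()): boolean {
  const notExpired = now.getTime() < lic.expiresAt.getTime();
  const tokensLeft = lic.tokensTotal - lic.tokensConsumed > 0;
  return notExpired && tokensLeft;
}

const license: LicenseState = {
  expiresAt: new Date("2030-01-01T00:00:00Z"),
  tokensTotal: 10,
  tokensConsumed: 3,
};
console.log(licenseAllowsSessions(license, new Date("2025-01-01T00:00:00Z"))); // true
```

Both conditions must hold: an unexpired license with no tokens left blocks new sessions just as an expired one does.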

Qlik Associative Engine is the central service in the Qlik Core stack. It is designed specifically for interactive, free-form exploration and analysis. It fully combines large numbers of data sources and indexes them to find the possible associations, without leaving any data behind.

Once I had the initial repo, I created a cluster in Azure and started deploying. You can do the same by following this guide to create the cluster: https://community.qlik.com/t5/Qlik-Design-Blog/Azure-Kubernetes-Services-and-Qlik-Core/ba-p/1515128.

There are two ways of deploying to any Kubernetes cluster, whether on Microsoft, Amazon or Google: either follow the instructions in the core-scaling repository https://github.com/qlik-oss/core-scaling and use kubectl commands to deploy the YAML files, or use the Helm charts I have created in the https://github.com/yianni-ververis/core-scaling-helm repository.

Customizing the Solution

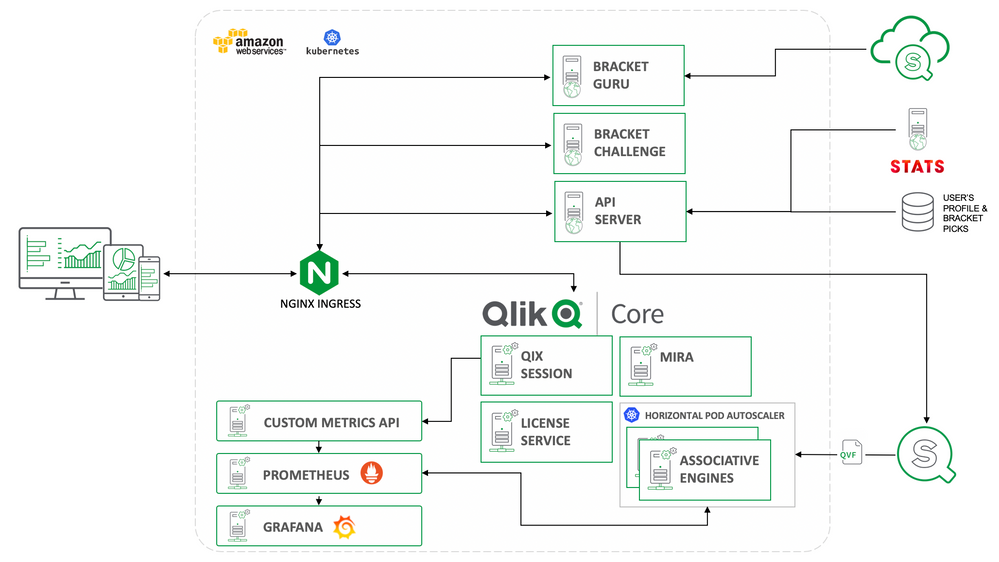

OK, we have Qlik Core and the Grafana dashboard where we can view the user sessions, all deployed in the Kubernetes cluster, but what about the rest of the application? We need to host the mashups, get statistical data for each basketball game, handle user authentication, and store users, their profiles and their brackets.

The first part, the websites, was the easiest one. We just created one Docker image for bracketchallenge and one for bracketguru, pointed the DNS records at the cluster, and let the Nginx Ingress controller route the traffic based on the domain. So, if you type bracketchallenge.qlik.com, you are routed internally to the qdt-bracketchallenge server, and if you type bracketguru.qlik.com, you are routed to the qdt-bracketguru server.
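Host-based routing boils down to a lookup from the request's `Host` header to a backend service. In Kubernetes this is declared in an Ingress resource rather than written as code; the sketch below just models the two rules described above so the behaviour is explicit. The service names come from the article; normalizing case and stripping a port suffix are assumptions about what a sensible router would do.

```typescript
// Host-to-service table mirroring the two ingress rules described above.
const hostRoutes: ReadonlyMap<string, string> = new Map([
  ["bracketchallenge.qlik.com", "qdt-bracketchallenge"],
  ["bracketguru.qlik.com", "qdt-bracketguru"],
]);

// Resolves the backend service for a Host header, ignoring case and any ":port" suffix.
// Returns undefined for unknown hosts (a real ingress would fall back to a default backend).
function resolveBackend(hostHeader: string): string | undefined {
  const host = hostHeader.toLowerCase().split(":")[0];
  return hostRoutes.get(host);
}

console.log(resolveBackend("bracketchallenge.qlik.com")); // qdt-bracketchallenge
console.log(resolveBackend("BracketGuru.qlik.com:443")); // qdt-bracketguru
```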

With the websites' physical location out of the way, the next question was how we were going to handle authentication, with a focus on security and data integrity. We created a Node.js API server that handles user authentication, creates sessions, and validates and writes user data, such as the profile and the chosen bracket, into the database. It is also used to handle authentication with stats.com and feed the desired stats to Qlik Sense. We then created another Docker image for it and pulled it into our Kubernetes cluster.
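The article does not say how the API server's session tokens are built, so here is one common approach, sketched under that caveat: an HMAC-SHA256 signature over the user id and an expiry timestamp, using Node's built-in `crypto` module (`createHmac`, `timingSafeEqual`). The token layout `<userId>.<expiresAtMs>.<hexSignature>` and the `SECRET` constant are hypothetical; a production server might use JWTs or a server-side session store instead.

```typescript
import { createHmac, timingSafeEqual } from "crypto";

// Hypothetical signing secret; in a cluster this would come from a Kubernetes Secret.
const SECRET = "replace-with-a-real-secret";

function sign(payload: string): string {
  return createHmac("sha256", SECRET).update(payload).digest("hex");
}

// Issues "<userId>.<expiresAtMs>.<signature>".
function issueToken(userId: string, ttlMs: number, now = Date.now()): string {
  const payload = `${userId}.${now + ttlMs}`;
  return `${payload}.${sign(payload)}`;
}

// Returns the user id if the signature matches and the token has not expired, else null.
function verifyToken(token: string, now = Date.now()): string | null {
  const sigStart = token.lastIndexOf(".");
  if (sigStart < 0) return null;
  const payload = token.slice(0, sigStart);
  const given = Buffer.from(token.slice(sigStart + 1), "hex");
  const expected = Buffer.from(sign(payload), "hex");
  // Constant-time comparison; lengths must match before calling timingSafeEqual.
  if (given.length !== expected.length || !timingSafeEqual(given, expected)) return null;
  // The expiry is the last dot-separated field, so user ids may contain dots.
  const expStart = payload.lastIndexOf(".");
  if (expStart < 0) return null;
  const expiresAt = Number(payload.slice(expStart + 1));
  if (!Number.isFinite(expiresAt) || now >= expiresAt) return null;
  return payload.slice(0, expStart);
}

const token = issueToken("user42", 60000, 1000);
console.log(verifyToken(token, 2000)); // user42
```

Because the signature covers both the user id and the expiry, changing either one invalidates the token, which is the integrity property the flow relies on.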

Prometheus collects metrics from each server, and the Custom Metrics API makes them available to Kubernetes. We then use Grafana to view all the stats on the Qlik Associative Engines, such as how many sessions are currently open, how they are distributed across the running Associative Engines, and how much memory and CPU each one consumes. These session counts are critical in determining how many Associative Engines to run. Kubernetes has a controller called the HPA (Horizontal Pod Autoscaler) which, based on some metrics (in our case, sessions), decides when to spin up another pod/engine. We set the Qlik Associative Engines not to accept more than 3000 sessions, and when we average 1750 sessions per engine, the HPA starts another engine and new sessions begin to be placed there. You need to fine-tune those settings to match your QVFs and their RAM usage. You can set the HPA maximum to however many pods your cluster can take. If you combine this with the Kubernetes Cluster Autoscaler, you can handle a practically unlimited number of sessions! The only limit, of course, is your budget.
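The HPA's core scaling rule, as documented by Kubernetes, is `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, skipped when the ratio is within a tolerance (0.1 by default) and clamped to the configured minimum and maximum. The sketch below applies that rule to the average-sessions target of 1750 used here; the `minReplicas`/`maxReplicas` values in the examples are hypothetical, and real HPA behaviour also involves stabilization windows and pod readiness, which are omitted.

```typescript
// Kubernetes HPA core rule applied to an average-sessions-per-engine metric:
// desired = ceil(current * currentMetric / targetMetric), unless the ratio is
// within the tolerance, then clamped to [minReplicas, maxReplicas].
function desiredReplicas(
  currentReplicas: number,
  avgSessionsPerEngine: number,
  targetAvgSessions: number,
  minReplicas: number,
  maxReplicas: number,
  tolerance = 0.1,
): number {
  const ratio = avgSessionsPerEngine / targetAvgSessions;
  const raw =
    Math.abs(ratio - 1) <= tolerance ? currentReplicas : Math.ceil(currentReplicas * ratio);
  return Math.min(maxReplicas, Math.max(minReplicas, raw));
}

// 4 engines averaging 2100 sessions against a 1750 target: ceil(4 * 1.2) = 5.
console.log(desiredReplicas(4, 2100, 1750, 1, 10)); // 5
// 4 engines averaging 1800: ratio ~1.03 is within tolerance, so stay at 4.
console.log(desiredReplicas(4, 1800, 1750, 1, 10)); // 4
```

Keeping the target (1750) well below the engine's hard cap (3000) leaves headroom for sessions that keep arriving while a new engine pod is still starting.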

With all the puzzle pieces in place, let's see what a user flow looks like. First, you type the URL bracketchallenge.qlik.com. Nginx routes your request to the bracketchallenge server, which sends back the authentication page. From there, whether you log in or create a user, the mashup sends your request to the API server, which validates you against the database and issues a session token. Once the React web page receives your session and validates its integrity, you are guided to the bracket page. There, a new request is sent to the cluster, specifically to the Nginx Ingress controller, which forwards it to the Qix Session server to initiate a WebSocket connection with a new session. To place that session, we go to Mira to see the available Associative Engines and place the connection on the most appropriate one. I need to make a side note here: Mira sees and uses a Qlik Associative Engine only if it has been cleared by the License Service, that is, only if the license provided in the cluster has not expired and its tokens have not been completely consumed. Once the WebSocket connection is established, enigma.js opens the app and gets a hypercube with the available teams.
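As a rough illustration of that last step, here is a sketch of the generic object properties a mashup could pass to enigma.js's `createSessionObject`, plus a helper that reads team names from the returned layout. The property names (`qInfo`, `qHyperCubeDef`, `qDimensions`, `qFieldDefs`, `qInitialDataFetch`, `qDataPages`, `qMatrix`, `qText`) are standard QIX ones; the field name `Team`, the `qType` value, the app name and the 100-row fetch size are assumptions. The enigma.js calls are shown only as comments, so the block runs without a live engine.

```typescript
// Minimal typings for the parts of a hypercube layout used here.
interface NxCell {
  qText?: string;
  qNum?: number;
  qElemNumber: number;
}
interface HyperCubeLayout {
  qHyperCube: { qDataPages: { qMatrix: NxCell[][] }[] };
}

// Session-object properties for a one-column team list.
// "Team" and "team-list" are hypothetical names.
const teamListProps = {
  qInfo: { qType: "team-list" },
  qHyperCubeDef: {
    qDimensions: [{ qDef: { qFieldDefs: ["Team"] } }],
    qMeasures: [],
    // Fetch the first 100 rows of the single column with the initial layout.
    qInitialDataFetch: [{ qTop: 0, qLeft: 0, qWidth: 1, qHeight: 100 }],
  },
};

// Reads the text of the first column from the first data page.
function teamNames(layout: HyperCubeLayout): string[] {
  const page = layout.qHyperCube.qDataPages[0];
  return page ? page.qMatrix.map((row) => row[0]?.qText ?? "") : [];
}

// With enigma.js and an open session (not executed here):
//   const qixGlobal = await session.open();
//   const app = await qixGlobal.openDoc("bracket.qvf");
//   const obj = await app.createSessionObject(teamListProps);
//   const names = teamNames(await obj.getLayout());
```

A session object lives only as long as the WebSocket session, which suits a per-user view like the bracket page.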

Conclusion

Qlik, as a leader in the BI world, has many products that are here to transform your business. Bracketchallenge.qlik.com is one example that uses the latest platform, Qlik Core. Qlik Core brings the Qlik Associative Engine into your cloud and makes it part of your business infrastructure. Even though this solution runs on AWS, you can also use it on Microsoft Azure or Google Cloud. It does not have to be on Kubernetes either: Qlik OSS has examples for Docker Swarm and Nomad at https://core.qlik.com/tutorials/orchestration/.

This journey was a tremendous experience for me as a developer, and I learned a lot. I hope you enjoyed this article as much as I enjoyed writing it. If you have any questions, feel free to drop me a note. If you have not done so already, please join our community of developers at qlik-branch.slack.com and subscribe to the #qlik-core channel.

Yianni
