Unlock a world of possibilities! Login now and discover the exclusive benefits awaiting you.

- Qlik Community

- :

- Forums

- :

- Data Integration

- :

- Qlik

- :

- Qlik Replicate

- :

- Re: Table got suspended

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Table got suspended

Hi,

Getting the below error. What could be issue and resolution for this. This task was running fine before, but getting error today.

Source : IBM DB2 for iseries

Target : Google cloud big query

Full load task

Error:

Waiting on bqjob_r1a9e083a5a097f09_0000018a257cee21_1 ... (0s) Current status: DONE

BigQuery error in load operation: Error processing job 'pa-qlk-production-

dfdfdfd:bqjob_r1a9e083dfdfd00018a257cee21_1': Error while reading data,

error message: Input CSV files are not splittable and at least one of the files

is larger than the maximum allowed size. Size is: 13608161648. Max allowed size

is: 4294967296.

Failure details:

- You are loading data without specifying data format, data will be

treated as CSV format by default. If this is not what you mean,

please specify data format by --source_format. [1020403] (csv_target.c:1012)

00009784: 2023-08-23T23:03:45 [TARGET_LOAD ]E: Failed to wait for previous run [1020403] (csv_target.c:1902)

00009784: 2023-08-23T23:03:46 [TARGET_LOAD ]E: Failed to load data from csv file. [1020403] (odbc_endpoint_imp.c:7776)

00009784: 2023-08-23T23:03:46 [TARGET_LOAD ]E: Handling End of table 'REPDATA'.'QWTYU' loading failed by subtask 2 thread 1 [1020403] (endpointshell.c:3050)

00008860: 2023-08-23T23:03:46 [TASK_MANAGER ]W: Table 'REPDATA'.'QWTYU' (subtask 2 thread 1) is suspended. Command failed to load data with exit error code 1, Command output:

Uploaded 0%...

- « Previous Replies

-

- 1

- 2

- Next Replies »

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @suvbin ,

Based on my testing, I have found that as the size of the text/csv file increases, the compression ratio also improves. I performed compression on 20GB file, the compression ratio can reach 1:250. So it can be estimated that a 1000 GB file would be compressed to around 4GB in size or even smaller.

Considering that you encountered an error with a 1000 GB file, I recommend reducing the file size setting to 900 GB initially. If the problem persists, please examine the gz file size located at "e:\data\tasks\WorkdataTar\data_files\".

Regards,

Desmond

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Check the section "Loading CSV files quota errors" in https://cloud.google.com/bigquery/docs/troubleshoot-quotas

---------------------------------------------

Resolution

To resolve this quota error, do the following:

- Set the

--allow_quoted_newlinesflag tofalse. - Split the CSV file into smaller chunks that are each less than 4 GB.

-----------------------------------------------------------

So, you need to have the max file set to 4 GB or less on the GBQ endpoint.

Hope this helps.

Thank you,

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Those error message seem to come from GoogleQuery and are rather clear.

@SachinB >> You can try to increase the max file size at Target endpoint as per the need. Reload the table.

- I think itis the other way around. The max file size should be decreased to be under 4GB

@suvbin >> the value that has been set for the "Max file size(MB):" in the BigQuery endpoint 1000 gb (max as per the endpoint).

That's just a silly big value suggestion insufficient reflection on the meaning of needs and feeds.

Those are GigaBYTES. Transferred over the network that's 10,000 GigaBits (roughly) and at 1 Gigabit/sec would take 3 hours just to transfer. With the reported Max of 4GB, I would start with 3500 although 4000 MB probably is fine assuming 4GB is really 4096 MB, not 4000 MB but why live on the edge (unless well tested)

Yes 1 file of 4GB is more efficient than 4000 files of 1 MB. But 1 file of 1000GB is unlikely to be measurably more efficient than 250 files of 4GB.

How much change volume/hour are you roughly dealing with? What is the desirable latency? What is the best case upload speed to Google big query from the Replicate server? At that same 1Gigabit/sec a 4 GB file will take 40 seconds, just to transfer. That file also has to be written by Replicate, Read by the network transfer agent, Received by Google, Read by GOogle. I would guess 2 minutes at best for all of that.

Go to 100GB or more and you will exceed all file cache limit everywhere forcing storage write and reads on both sides and hurting everything else on the server in the process, pushing other files out of the caches. Nah, bad idea to go above say 20 GB in general and here above the reported 4GB.

Hein.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Resolution

To resolve this quota error, do the following:

- Set the

--allow_quoted_newlinesflag tofalse. - Split the CSV file into smaller chunks that are each less than 4 GB.

May i please know , where we need to keep the above configuration in the Qlik replicate . Any whitepaper on this please.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @suvbin ,

I think @shashi_holla meant the syntax setting in Replicate side.

To resolve this quota error, do the following:

- Set the

--allow_quoted_newlinesflag tofalse.

I'm not very sure if this is mandatory to solve your error however if you want to apply the setting, please add below internal parameter:

- Open Google Big Query target endpoint

- Go to the Advanced tab

- Open Internal Parameters

- Add below Parameter (depends on the platform where your Replicate server runs)

- Linux:

Parameter name: $info.query_syntax.load_data_exe_params

Parameter value: --project_id ${DATABASE} --dataset_id ${TABLE_OWNER} load ${CA_PATH} --allow_quoted_newlines=false--format=csv --null_marker='attNULL' '${TABLE_NAME}' '${FILENAME}'

- Windows:

Parameter name: $info.query_syntax.load_data_exe_params_linux

Parameter value: --project_id ${DATABASE} --dataset_id ${TABLE_OWNER} load ${CA_PATH} --allow_quoted_newlines=false --format=csv --null_marker=\"attNULL\" \"${TABLE_NAME}\" \"${FILENAME}\"

Hope this helps.

Regards,

John.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @john_wang

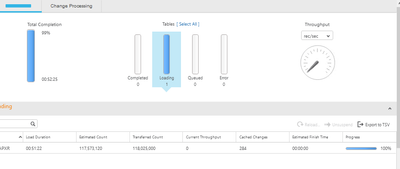

Thank you for the setting. I kept the above mentioned settings at the target endpoint. During the full load, it got stuck. Below is the screenshot for the same. it shows 100% completed. But the table is not moving from "Loading" state to "completed" state.

Appreciate your help on this.

Thanks

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @suvbin ,

Thanks for your update.

The "Progress" and the completion percentage are calculated based on the table metadata statistics "estimated count" rather than the real rows. It may take a bit longer than the 100% completion - in your scenario the gap is about 441880 rows. If you want to know the backend operations are doing now you may set SOURCE_UNLOAD/TARGET_LOAD and/or SOURCE_CAPTURE/TARGET_APPLY to Verbose (via Monitor --> Tools --> Log Management) then check the task log file.

Hope this helps.

Regards,

John.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The task failed again with the same error during the full load.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @suvbin ,

Please open a support ticket and provide the diagnostics packages with TARGET_APPLY to TRACE, Qlik support team will help you further on this issue.

Regards,

John.

- « Previous Replies

-

- 1

- 2

- Next Replies »