How to use the Cloud Storage Connector for Amazon S3 with Qlik Application Automation

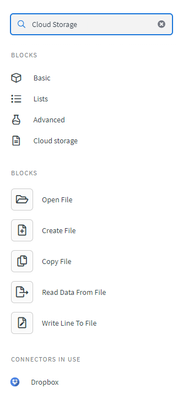

Inside Qlik Application Automation, Amazon S3 functionality is split across two connectors: the native Cloud Storage connector and the dedicated Amazon S3 connector. To create, update, and delete files, it’s highly recommended to use the native Cloud Storage connector. To retrieve information and metadata about regions and buckets, use the Amazon S3 connector.

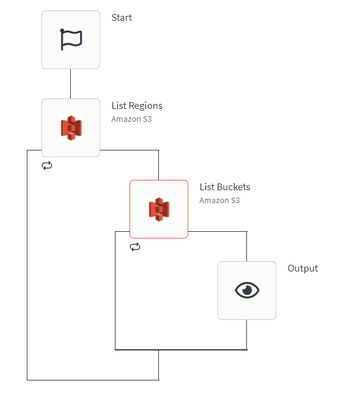

The image above shows an example automation that uses the Amazon S3 connector to output a paginated list of regions and the buckets in each region (not covered in this article).

Environment:

- Qlik Application Automation

This article focuses on the blocks available in the native Cloud Storage connector in Qlik Application Automation for working with files stored in S3 buckets. It provides examples of basic operations such as listing files in a bucket, opening a file, reading from an existing file, creating a new file in a bucket, and writing lines to a file.

The Cloud Storage connector additionally provides blocks to copy files, move files, and check whether a file already exists in a bucket, which can help with other use cases. The Amazon S3 connector also supports advanced use cases such as generating a URL that grants temporary access to an S3 object, or downloading a file from a public URL and uploading it to Amazon S3.

Let’s get started.

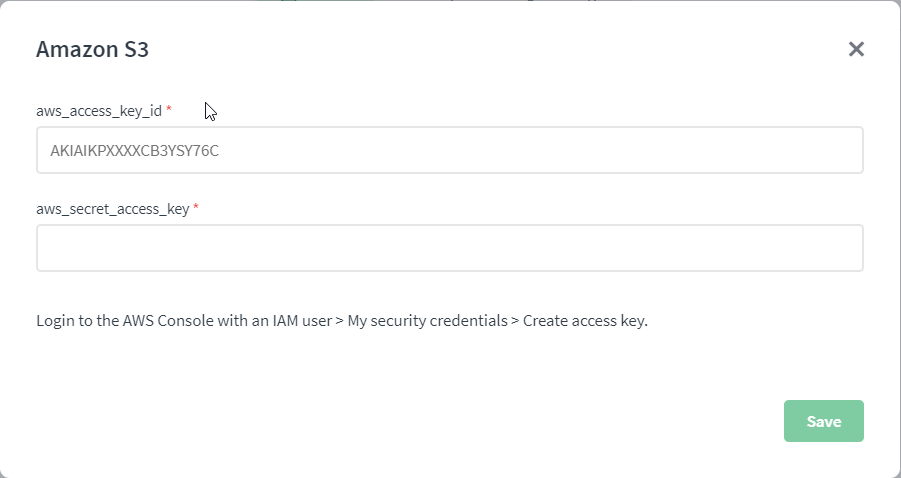

Authentication for this connector is based on tokens or keys.

Log in to the AWS console with an IAM user to generate the access key ID and secret access key required to authenticate.

Now let's go over the basic use cases and the supporting building blocks in the Cloud Storage connector to work with Amazon S3:

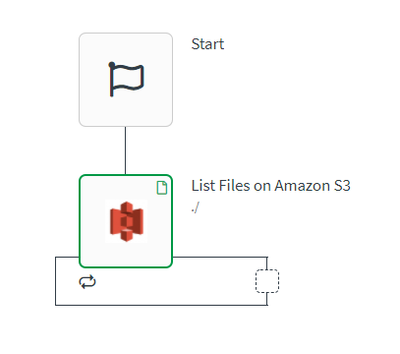

- How to list files from an existing S3 bucket

- Create an Automation.

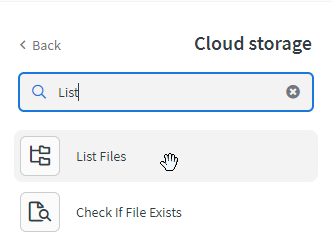

- From the left menu, select the Cloud Storage connector.

- Search for the List Files block from the available list of blocks.

- Drag and drop the block into the automation and connect it to the Start block.

- The ‘Path’ parameter of this block allows to list the contents of a specific directory from a Dropbox account. In this example, ‘./’ indicates the root directory of your bucket.

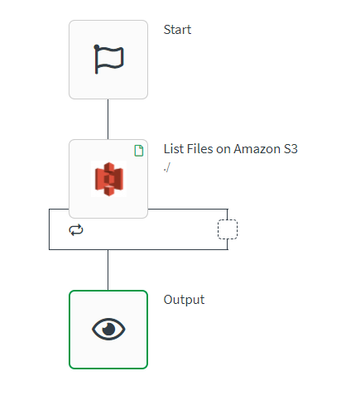

- Drag and drop the Output block into the automation and connect it to the List Files on Amazon S3 block.

- Run the automation. If it has not been saved yet, a 'Save automation' popup will appear. The automation outputs a paginated list of the files available in the root directory of the S3 bucket.

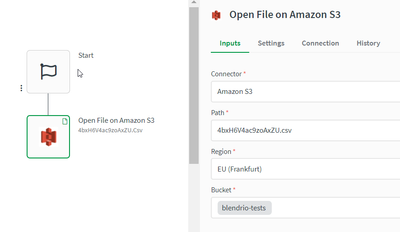

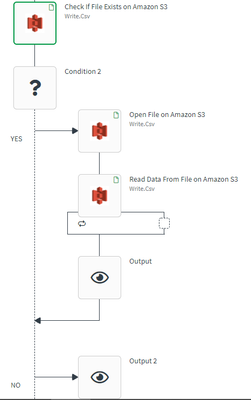
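The List Files block makes the S3 calls for you. As background, S3 has no real directories: a "directory" is a shared key prefix ending in '/', and listings are returned in pages. The sketch below models that behavior over a hypothetical in-memory list of object keys; `normalize_prefix`, `list_files`, and the small `page_size` are illustrative assumptions, not the connector's implementation.

```python
from typing import Iterator, List


def normalize_prefix(path: str) -> str:
    """Turn a block-style path such as './' or './reports' into an S3 key prefix."""
    prefix = path[2:] if path.startswith("./") else path.lstrip("/")
    if prefix and not prefix.endswith("/"):
        prefix += "/"
    return prefix


def list_files(keys: List[str], path: str = "./", page_size: int = 2) -> Iterator[List[str]]:
    """Yield pages of keys that sit directly inside `path` (hypothetical helper).

    Keys nested in deeper "directories" are skipped, similar to listing
    with a '/' delimiter.
    """
    prefix = normalize_prefix(path)
    direct = [
        key
        for key in sorted(keys)
        if key.startswith(prefix) and key != prefix and "/" not in key[len(prefix):]
    ]
    # Split the result into fixed-size pages, like a paginated listing.
    for start in range(0, len(direct), page_size):
        yield direct[start:start + page_size]


# Hypothetical bucket contents.
keys = ["4bxH6V4ac9zoAxZU.csv", "notes.txt", "reports/2023.csv", "summary.json"]
for page in list_files(keys, "./"):
    print(page)
```

Listing './' skips `reports/2023.csv` because it lives one level deeper; listing './reports' returns it.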

- How to open an existing file and read from it

- The first two steps (creating an automation and selecting the Cloud Storage connector) are the same as in the previous use case.

- Now use the Open File block from the list.

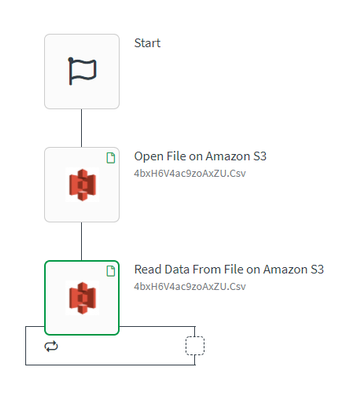

- Drag and drop the block into the automation, link it to the Start block, and fill in the required parameters, i.e. Path, Region, and Bucket. You can use ‘do look up’ to search across your S3 account. Under ‘Path’, enter the file directory, file name, and file extension, e.g. ./4bxH6V4ac9zoAxZU.csv

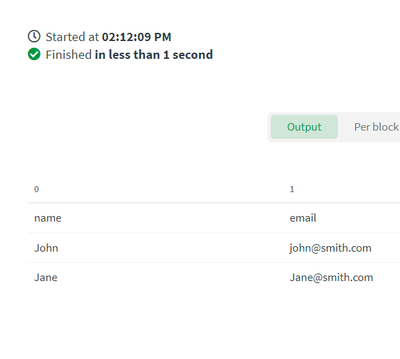

- Drag and drop the Read Data From File block and link it to the previous block. Use the output from the previous block as input.

- Drag and drop the Output block into the automation, then run the automation. This outputs a paginated table with the data stored in the file.

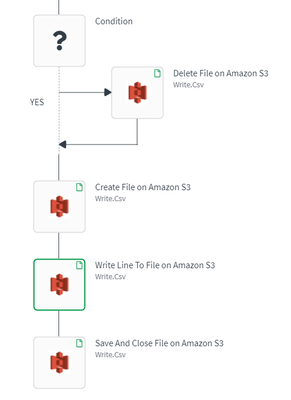
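Conceptually, Open File locates an object by its path and Read Data From File parses its content into rows. The sketch below mimics those two steps with Python's standard `csv` module over a hypothetical in-memory bucket (a dict from path to file content); `open_file` and `read_data_from_file` are illustrative names, not Qlik APIs.

```python
import csv
import io
from typing import Dict, List

# Hypothetical bucket: path -> file content.
bucket: Dict[str, str] = {
    "./4bxH6V4ac9zoAxZU.csv": "id,name,amount\n1,Alice,10.5\n2,Bob,7\n",
}


def open_file(bucket: Dict[str, str], path: str) -> io.StringIO:
    """Return a readable handle for `path`, or raise if the object is missing."""
    if path not in bucket:
        raise FileNotFoundError(path)
    return io.StringIO(bucket[path])


def read_data_from_file(handle: io.StringIO) -> List[Dict[str, str]]:
    """Parse CSV content into one dict per row, keyed by the header line."""
    return [dict(row) for row in csv.DictReader(handle)]


rows = read_data_from_file(open_file(bucket, "./4bxH6V4ac9zoAxZU.csv"))
for row in rows:
    print(row)
```

Note that `csv` returns every value as a string; any type conversion would be a separate step.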

- How to create a new file in the S3 bucket (deleting it first if it already exists), write lines of data, and save and close the file

- The first two steps are the same as in the two previous use cases.

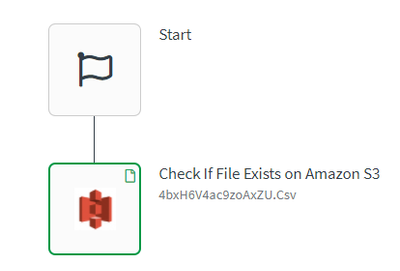

- Now select the Check If File Exists block, drag and drop it into the automation, and link it to the Start block.

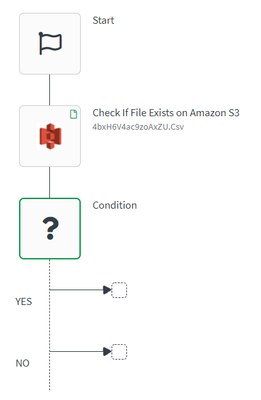

- The previous block returns ‘True’ if the file exists and ‘False’ if it doesn’t. Next, search for the Condition block, drag and drop it into the automation, link it to the previous block, and add the following condition:

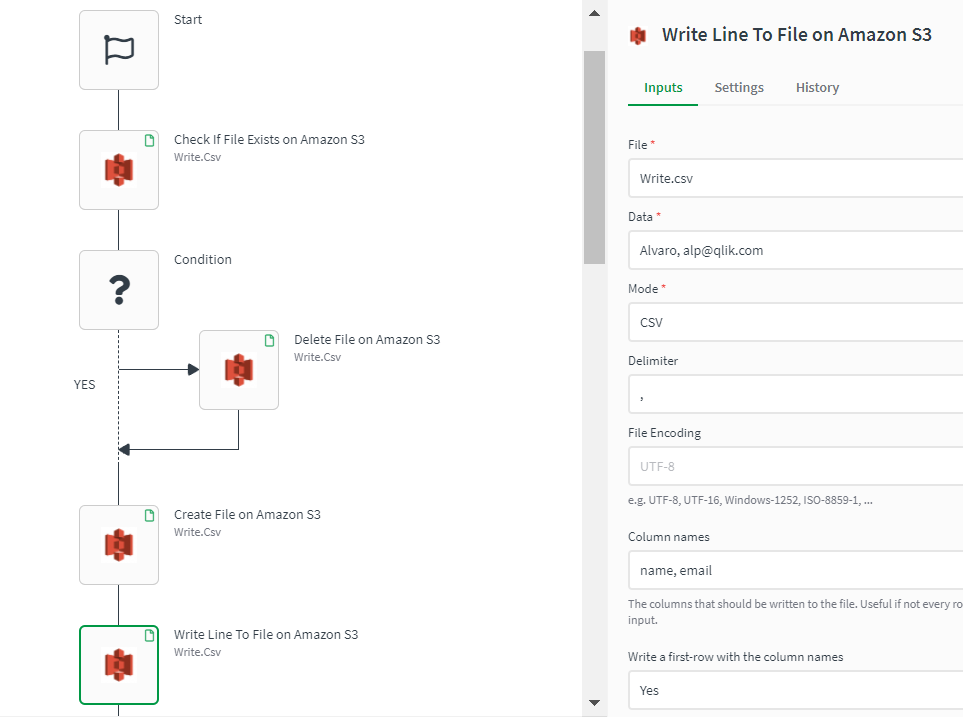

- First, let’s focus on the ‘YES’ branch of the condition. Search for the Delete File block and drag and drop it into the automation. When the file already exists, this block deletes the specified file from the S3 bucket.

- Hide the ‘NO’ branch of the condition and continue building the automation from the loose end of the Condition block (this part runs regardless of how the condition evaluates). First, search for the Create File block, add it to the canvas, and connect it to the previous block.

- Next, search for the Write Line to File block and connect it to the Create File on Amazon S3 block. Fill in the required input parameters: select CSV as ‘Mode’ and specify the ‘Column names’ (i.e. headers) of the file.

- This example defines the ‘Column names’ manually, but this can also be automated with the Get Keys formula, based on files stored in S3 buckets or on lists or objects defined as variables. The same applies to the ‘Data’ input parameter. In this example, a single line of data is added manually, but you could also read data from other sources (e.g. tables, flat files) or loop through a list of items and write each item as a line in the CSV file. This requires additional data transformations; see the ‘Csv’ function under the ‘Other Functions’ link.

- Finally, search for the Save and Close block and link it to the Write Line to File on Amazon S3 block. Optionally, add the Output block to the automation. It shows the path where the file was saved and closed in the S3 bucket.

- Optionally, you can add the following building blocks as a continuation of your automation to check the content of the newly created file in the S3 bucket. If the file has been successfully created and written, this outputs its content as rows; otherwise, it outputs ‘File Not Found’.
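The whole flow (Check If File Exists, Condition, Delete File, Create File, Write Line to File in CSV mode, Save and Close, then the optional read-back) can be modeled in plain Python to make the control flow and the CSV formatting explicit. This is a sketch over a hypothetical in-memory bucket; `csv_line` only approximates what the ‘Csv’ function does, and taking the column names from the first record's keys is an assumption that mirrors the Get Keys idea.

```python
import csv
import io
from typing import Dict, List, Union

Bucket = Dict[str, str]  # hypothetical bucket: path -> file content


def csv_line(values: List[object]) -> str:
    """Format one CSV line with standard quoting (approximation of the 'Csv' function)."""
    buf = io.StringIO()
    csv.writer(buf, lineterminator="\n").writerow(values)
    return buf.getvalue()


def recreate_csv(bucket: Bucket, path: str, records: List[Dict[str, object]]) -> str:
    # Check If File Exists + Condition: on 'YES', Delete File.
    if path in bucket:
        del bucket[path]
    # Create File: start with an empty, open file.
    handle = io.StringIO()
    # 'Column names' taken from the first record's keys (Get Keys-style).
    columns = list(records[0].keys()) if records else []
    if columns:
        handle.write(csv_line(columns))
    # Write Line to File in CSV mode: one line per record, in column order.
    for record in records:
        handle.write(csv_line([record.get(col, "") for col in columns]))
    # Save and Close: persist the content and return the path (what Output shows).
    bucket[path] = handle.getvalue()
    handle.close()
    return path


def read_back(bucket: Bucket, path: str) -> Union[List[List[str]], str]:
    """Optional check: return the rows, or 'File Not Found' if the file is missing."""
    if path not in bucket:
        return "File Not Found"
    return list(csv.reader(io.StringIO(bucket[path])))


bucket: Bucket = {"./output.csv": "stale content\n"}
saved = recreate_csv(bucket, "./output.csv", [{"id": 1, "city": "Lund, SE"}, {"id": 2, "city": "Oslo"}])
print(saved, read_back(bucket, saved))
```

Deleting first and then creating mirrors the automation's branch structure; in this dict model it has the same effect as overwriting the entry. The quoting of "Lund, SE" shows why a CSV helper is safer than joining values with commas by hand.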

Server-side encryption update:

The Amazon S3 connector in Qlik Application Automation now supports adding the server-side encryption (SSE) header when creating new files. This header is available on the Create File and Copy File blocks. You can keep the default behavior, AES256 encryption, or choose aws:kms encryption and provide a valid KMS Key ID.
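For reference, S3 itself receives this choice through request headers on PutObject and CopyObject: `x-amz-server-side-encryption` set to `AES256` or `aws:kms`, plus `x-amz-server-side-encryption-aws-kms-key-id` for the KMS key. The helper below only builds those headers; how Qlik Application Automation sets them internally is not described here. Requiring a key ID for `aws:kms` follows this article's description; S3 on its own falls back to the AWS managed key when none is given.

```python
from typing import Dict, Optional

SSE_HEADER = "x-amz-server-side-encryption"
KMS_KEY_HEADER = "x-amz-server-side-encryption-aws-kms-key-id"


def sse_headers(mode: str = "AES256", kms_key_id: Optional[str] = None) -> Dict[str, str]:
    """Build the S3 server-side encryption headers for a create or copy request."""
    if mode == "AES256":
        # Default in the automation blocks: S3-managed keys (SSE-S3).
        return {SSE_HEADER: "AES256"}
    if mode == "aws:kms":
        # Per the article, aws:kms is used together with a valid KMS Key ID.
        if not kms_key_id:
            raise ValueError("aws:kms encryption requires a KMS Key ID")
        return {SSE_HEADER: "aws:kms", KMS_KEY_HEADER: kms_key_id}
    raise ValueError(f"unsupported SSE mode: {mode!r}")


print(sse_headers())
# Hypothetical key ID, for illustration only.
print(sse_headers("aws:kms", "1234abcd-12ab-34cd-56ef-1234567890ab"))
```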

Attached example file: create_and_write_files_amazon_s3.json

The information in this article is provided as-is and is to be used at your own discretion. Depending on the tool(s) used, customization(s), and/or other factors, ongoing support for this solution may not be provided by Qlik Support.

Hello,

Thank you for the documentation.

Does the Amazon S3 connector in Qlik Automations support server-side encryption (SSE)? I am not able to pass the SSE header value in automations, but I can do it in the data connection configuration inside the app.

Hi,

Answering for consistency (since this was answered on the forum here):

Answer: This is indeed not possible in the Amazon S3 connector in automations.

If you require this functionality, I suggest you ask for it through ideation.

Hi,

Is it possible to read data from a QVD file using the Read Data From File block on Amazon S3?

Hello @OppilaalDaniel

Please post it in the Qlik Application Automation forum to give your question appropriate reach and attention.

All the best,

Sonja