
Recent Documents

-

Qlik Sense Enterprise on Windows and SAP Connector v8.1.0: Data load error when ...

Extracting data to Qlik Sense Enterprise on Windows from an SAP BW InfoProvider / ADSO with two values in a WHERE clause fails with an error similar to the following:

Data load did not complete

Data has not been loaded. Please correct the error and try loading again.

Using only one field value in the WHERE clause works as expected.

Resolution

- Upgrade the Qlik SAP Connector to Qlik SAP Connector version 8.3.0

- Ensure you have also imported Qlik SAP Transport version 8.3.0

Related Content

Internal Investigation ID(s)

- SUPPORT-5101

Environment

- Qlik Sense Enterprise on Windows

- SAP Connector v8.1.0

-

Qlik Replicate and Google Cloud Storage: Failed to convert file from csv to parq...

When using Google Cloud Storage as a target in Qlik Replicate, and the target File Format is set to Parquet, an error may occur if the incoming data contains invalid values.

This happens because the Parquet writer validates data during the CSV-to-Parquet conversion. A typical error looks like:

[TARGET_LOAD ]E: Failed to convert file from csv to parquet

Error:: failed to read csv temp file

Error:: std::exception [1024902] (file_utils.c:899)

Environment

- Qlik Replicate all versions

- Google Cloud Storage all versions

Resolution

There are two possible solutions:

- Clean up or remove the incorrect records in the source databases

- Or add a transformation to correct or replace invalid dates before they reach the target.

Cause

In this case, the source is SAP Oracle, and a few rare rows contained invalid date values. Example: 2023-11-31.

By enabling the internal parameters keepCSVFiles and keepErrorFiles in the target endpoint (both set to TRUE), you can inspect the generated CSV files to identify which rows contain invalid data.
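Once the CSV files are kept, a small script can flag rows with impossible calendar dates such as 2023-11-31. The following is a minimal sketch, not part of Qlik Replicate: it assumes the kept file has a header row and ISO-formatted (`YYYY-MM-DD`) dates, and the function name `find_invalid_dates` and the column name `order_date` are hypothetical. Adjust the parsing to the actual layout of the generated files.

```python
import csv
import io
from datetime import date


def find_invalid_dates(csv_text, date_column):
    """Return (row_number, raw_value) pairs whose date is not a real calendar date."""
    bad = []
    reader = csv.DictReader(io.StringIO(csv_text))
    for row_number, row in enumerate(reader, start=1):
        raw = row[date_column]
        try:
            # fromisoformat raises ValueError for dates like 2023-11-31
            date.fromisoformat(raw)
        except ValueError:
            bad.append((row_number, raw))
    return bad


# Hypothetical sample data standing in for a kept CSV file
sample = "id,order_date\n1,2023-11-30\n2,2023-11-31\n3,2024-02-29\n"
print(find_invalid_dates(sample, "order_date"))  # [(2, '2023-11-31')]
```

The same check could be used to decide which source records to clean up or which values a transformation needs to replace.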

Internal Investigation ID(s)

00417320

-

Qlik Connectors: How to import strings longer than 255 characters

Recent versions of Qlik connectors have an out-of-the-box value of 255 for their DefaultStringColumnLength setting.

This means that, by default, any string containing more than 255 characters is truncated when imported from the database.

To import longer strings, specify a higher value for DefaultStringColumnLength.

This can be done in the Advanced Properties of the connection definition.

The maximum value that can be set is 2,147,483,647.

Environment

- Qlik Connectors

- Built-in Connectors Qlik Sense Enterprise on Windows November 2024 and later

-

Unleashing the Qlik Talend Cloud Dynamic Engine

This Techspert Talks session addresses the Qlik Talend Cloud Dynamic Engine. Chapters: 00:45 - What the Dynamic Engine is 01:57 - How to enab...

-

How does Qlik Replicate convert DB2 commit timestamp to Kafka message payload?

How does Qlik Replicate convert DB2 commit timestamps to Kafka message payload, and why are we seeing a lag of several hours?

- When Qlik Replicate reads change events from the DB2 iSeries journal, each journal entry includes both:

- Entry timestamp: when the individual operation was logged; from JOENTTST. Qlik Replicate does not use it.

- Commit timestamp: when the transaction was committed; from JOCTIM. This is what Qlik Replicate uses as the payload timestamp field.

- Then Qlik Replicate converts DB2 iSeries journal commit timestamps to UTC

- Qlik Replicate normalizes all internal event timestamps to UTC before serializing the payload, regardless of the source system’s local timezone.

- This guarantees downstream consumers (Kafka / Schema Registry / Avro) have a single consistent time base.

- Qlik Replicate then populates the data.timestamp field in the Kafka message payload.

- The timestamp field in the Kafka message payload represents the commit timestamp of the transaction as recorded in the DB2i journal in UTC timezone. This does not use the Kafka broker's or source DB2 i system's local timezone.

- An offset of several hours here is due to timezone normalization, not replication lag.

- Note that some operations (DDL, full-load, or deletes) may omit data.timestamp because no valid source commit time exists. This is also expected behavior.

- DDL: Not tied to a commit

- Full Load: No CDC commit time yet

- Delete: DB2 journals don’t always include a valid commit timestamp for the before-image
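The effect of the UTC normalization described above can be illustrated with a short calculation. This is a sketch only: the UTC-5 source offset, the sample commit time, and the helper name `commit_time_to_utc` are assumptions chosen for illustration, not values taken from Qlik Replicate.

```python
from datetime import datetime, timedelta, timezone


def commit_time_to_utc(local_commit, utc_offset_hours):
    """Interpret a naive local commit time at the given offset and convert it to UTC."""
    source_tz = timezone(timedelta(hours=utc_offset_hours))
    return local_commit.replace(tzinfo=source_tz).astimezone(timezone.utc)


# Hypothetical commit time as shown on a source system running at UTC-5
local_commit = datetime(2025, 3, 10, 9, 30, 0)
utc_commit = commit_time_to_utc(local_commit, -5)

print(utc_commit.isoformat())                          # 2025-03-10T14:30:00+00:00
print(utc_commit.replace(tzinfo=None) - local_commit)  # 5:00:00
```

The five-hour difference in this example is purely the timezone offset; a consumer comparing the payload timestamp with the source system's local clock would see the same gap even with no replication lag.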

Environment

- Qlik Replicate


-

Qlik GeoAnalytics Enterprise Server or GeoCoding Connector

Qlik Geocoding operates using two QlikGeoAnalytics operations: AddressPointLookup and PointToAddressLookup.

Two frequently asked questions are:

- Does Qlik Geocoding work with a custom GeoAnalytics Server, or does it require a connector type "Cloud"?

- Do you need an online connection for Qlik Geocoding?

The Qlik Geocoding add-on option requires an Internet connection. It is, by design, an online service. You will be using Qlik Cloud (https://ga.qlikcloud.com), rather than your local GeoAnalytics Enterprise Server.

See the online documentation for details: Configuring Qlik Geocoding.

Environment

- Qlik Cloud

- Qlik GeoAnalytics

-

Qlik Replicate: When are DDL changes needed on an SQL Server source?

This article outlines how to handle DDL changes on a SQL Server table as part of the publication.

Resolution

The steps in this article assume you use the task's default settings: full load and apply changes are enabled, full load is set to drop and recreate target tables, and DDL Handling Policy is set to apply alter statements to the target.

To achieve something simple, such as increasing the length of a column (without changing the data type), run an ALTER TABLE command on the source while the task is running, and it will be pushed to the target.

For example:

alter table dbo.address alter column city varchar(70)

To make more complicated changes to the table, such as:

- Changing the Allow Nulls setting for a column

- Reordering columns in the table

- Changing the column data type

- Adding a new column

- Changing the filegroup of a table or its text/image data

Follow this procedure:

- Stop the task

- Remove the table from the task

- Remove the table from the publication.

Some changes do not require this, and some do. Removing it from the publication will work in all cases.

- Right-click the publication (starts with AR_) and select Properties

- Click to select the Articles tab

- Uncheck the box next to the table and click OK

- Copy all the data to a temp table

- Recreate the table with the needed modifications

- Copy the data back into the table

- Add the table back to the task and resume. This will automatically add the table back to the publication and reload the table on the target.

Reference: Saving changes is not permitted error message in SSMS

Environment

- Qlik Replicate

-

Qlik Sense Analytics: Microsoft OneDrive connection fail to list shared files

When connecting to Microsoft OneDrive using either Qlik Cloud Analytics or Qlik Sense Enterprise on Windows, shared files and folders are no longer visible.

Resolution

While the endpoint may intermittently work as expected, it is in a degraded state until November 2026. See drive: sharedWithMe (deprecated) | learn.microsoft.com. In most cases, the API endpoint is no longer accessible due to the publicly documented degraded state.

Qlik is actively reviewing the situation internally (SUPPORT-7182).

However, given that the MS API endpoint has been deprecated by Microsoft, a Qlik workaround or solution is not certain or guaranteed.

Suggested Workaround

Use a different type of shared storage, such as mapped network drives, Dropbox, or SharePoint, to name a few.

Cause

Microsoft deprecated the /me/drive/sharedWithMe API endpoint.

Internal Investigation ID(s)

SUPPORT-7182

Environment

- Qlik Cloud

- Qlik Sense Enterprise on Windows

-

Qlik Cloud Direct Access Data Gateway: extracting data from SAP BW InfoProvider ...

Extracting data from SAP BW InfoProvider / ADSO with two values in a WHERE clause returns 0 lines.

Example:

The following is a simple standard ADSO with two infoObjects ('0BPARTNER', '/BIC/TPGW8001') and one field ('PARTNUM'). All are CHAR data type.

In the scripts we used [PARTNUM] = A, [PARTNUM] = B in the WHERE clause.

However:

- If you use only one field value in the WHERE clause, it works as expected:

From GTDIPCTS2
Where ([PARTNUM] = A);

- If you use two values for the infoObject [TPGW8001] instead of the field [PARTNUM] in the WHERE clause, it also works as expected:

From GTDIPCTS2
Where ([TPGW8001] = "1000", [TPGW8001] = "1000");

Environment

- Qlik Cloud / Direct Data Gateway version 1.7.4

- SAP BW Connector

Resolution

Upgrade to Direct Data Gateway version 1.7.8.

Cause

Defect SUPPORT-5101

Related Content

Internal Investigation ID(s)

SUPPORT-5101


-

Qlik Replicate and Google Cloud Pub/Sub target: Topic deleted when DROP TABLE oc...

When using Google Cloud Pub/Sub as a target and configuring Data Message Publishing to Separate topic for each table, the Pub/Sub topic may be unexpectedly dropped if a DROP TABLE DDL is executed on the source. This occurs even if the Qlik Replicate task’s DDL Handling Policy When source table is dropped is set to Ignore DROP.

Environment

- Qlik Replicate versions before 2025.5 SP3

Resolution

This issue has been fixed in the following builds:

- 2025.5.0.454 (SP3)

- 2025.11 GA

To apply the fix, upgrade Qlik Replicate to one of the listed versions or any later release.

Cause

A product defect in versions earlier than 2025.5 SP3 causes the Pub/Sub topic to be dropped despite the DDL policy configuration.

Internal Investigation ID(s)

- 00401331

-

Qlik Talend Data Integration: Error while using tSetKeystore component with kafk...

A Job design is presented below:

tSetKeystore: set the Kafka truststore file.

tKafkaConnection, tKafkaInput: connect to the Kafka cluster as a consumer and transmit messages.

However, while running the Job, an error exception occurred under the tKafkaInput component.

org.apache.kafka.common.KafkaException: Failed to construct kafka consumer

Resolution

Make sure to execute the tSetKeystore component prior to the Kafka components so that the Job can locate the certificates required for the Kafka connection. To achieve this, connect the tSetKeystore component to tKafkaConnection using an OnSubjobOK link, as demonstrated below:

For more detailed information on trigger connectors, specifically OnSubjobOK and OnComponentOK, please refer to this KB article: What is the difference between OnSubjobOK and OnComponentOK?.

Environment

- Talend Data Integration 7.3.1, 8.0.1

-

Qlik Stitch Database integration extraction error: Fatal Error Occured <>decimal...

This article addresses the error encountered during extraction when using log-based incremental replication for MySQL integration:

[main] tap-hp-mysql.sync-strategies.binlog - Fatal Error Occurred - <ColumnName> - decimal SQL type for value type class clojure.core$val is not implemented.

Resolution

There are two recommended approaches:

Option 1: Enable Commit Order Preservation

Run the following command in your MySQL instance:

SET GLOBAL replica_preserve_commit_order = ON;

Then, reset the affected table(s) through the integration settings.

Option 2: Validate Replication Settings

Ensure that either:

- replica_preserve_commit_order (MySQL 8.0+), or

- slave_preserve_commit_order (older versions)

is enabled. These settings maintain commit order on multi-threaded replicas, preventing gaps and inconsistencies.

How to Check if Setting is Enabled

Run:

SHOW GLOBAL VARIABLES LIKE 'replica_preserve_commit_order';

Expected Output:

| Variable_name | Value |
| --- | --- |
| replica_preserve_commit_order | ON |

For older versions:

SHOW GLOBAL VARIABLES LIKE 'slave_preserve_commit_order';

For more information, reference MySQL Documentation

replication-features-transaction-inconsistencies | dev.mysql.com

Cause

When using log-based incremental replication, Stitch reads changes from MySQL’s binary log (binlog). This error occurs because the source database provides events out of order, which leads to mismatched data types during extraction. In this case, the extraction encounters a decimal SQL type where the value type is unexpected.

Why does this happen?

- Non-transactional DML statements that do not guarantee ordering.

- Misconfigured or inconsistent replication setup.

- Multi-threaded replication without commit order preservation, causing commit gaps and inconsistencies.

Environment

-

Qlik Stitch: Will changing the hostname, username and password automatically res...

This article explains whether changing integration credentials or the host address for a database integration requires an integration reset in Stitch. It will also address key differences between key-based incremental replication and log-based incremental replication.

Changing Integration Credentials

Updating credentials (e.g., username or password) does not require an integration reset. Stitch will continue replicating data from the last saved bookmark values for your tables according to the configured replication method.

Changing the Host Address

Changing the host address is more nuanced and depends on the replication method:

✅ Key-Based Incremental Replication

- Bookmarks are based on column values (e.g., primary key or timestamp).

- Changing the host does not affect bookmarks as long as:

- Database name, schema names, and table names remain unchanged.

- Data structure is identical.

- Stitch will resume replication from the last bookmark without requiring a reset.

Important:

If the database name changes, Stitch treats it as a new database:

- Table and field selections must be reconfigured.

- A full historical replication will occur.

⚠️ Log-Based Incremental Replication

- Bookmarks reference the transaction log file name and position (e.g., binlog for MySQL, WAL for PostgreSQL, oplog for MongoDB).

- Changing the host does affect bookmarks because:

- Log files and positions differ on the new host.

- The integration cannot locate the previous bookmark.

- Result: Extraction fails, and a reset is required (full historical replication).

Decision Matrix

| Change Type | Key-Based Replication | Log-Based Replication |
| --- | --- | --- |
| Credentials | No reset required | No reset required |
| Host Address | No reset (if search path unchanged) | Reset required |
| Database Name | Reset required | Reset required |

Best Practices

- Before changing the host for log-based replication, plan for a full historical replication.

- Validate that database names, schemas, and tables remain unchanged for key-based replication.

- Consider using consistent log retention policies if migrating hosts for log-based replication.
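The decision matrix above can also be expressed as a small lookup, for example as a pre-change checklist in a migration script. This is an illustrative sketch only: the dictionary, the key names, and the `needs_reset` helper are hypothetical and are not part of Stitch.

```python
# (change type, replication method) -> is an integration reset required?
RESET_REQUIRED = {
    ("credentials", "key-based"): False,
    ("credentials", "log-based"): False,
    # No reset only if database, schema, and table names are unchanged
    ("host", "key-based"): False,
    ("host", "log-based"): True,
    ("database_name", "key-based"): True,
    ("database_name", "log-based"): True,
}


def needs_reset(change_type, replication_method):
    """Look up whether a planned change requires a reset (full historical replication)."""
    return RESET_REQUIRED[(change_type, replication_method)]


print(needs_reset("host", "log-based"))         # True
print(needs_reset("credentials", "log-based"))  # False
```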

-

Qlik Stitch: MySQL integration extraction error “Fatal Error Occurred YEAR“

MySQL extraction encounters the following error:

FATAL [main] tap-hp-mysql.main - Fatal Error Occurred - YEAR

Resolution

- Identify columns storing date/time values.

- Query for invalid years

For example:

YEAR(date_column) < 1 OR YEAR(date_column) > 9999

- Correct invalid records or replace them with valid defaults.

- If you cannot modify source data, deselect the problematic column in table settings in the integration.

- Consider enforcing valid ranges via application-level validation or MySQL constraints.

- If using MySQL zero dates (0000-00-00), adjust SQL mode or replace with valid dates.

Cause

This error occurs when the MySQL integration attempts to process a DATE, DATETIME, or TIMESTAMP field containing an invalid year value. Common examples include 0 or any year outside the supported range. The error message typically states "Fatal Error Occurred" followed by details about the invalid year or month value.

The underlying Python library used by the Stitch MySQL integration enforces strict date parsing rules. It only supports years in the range 0001–9999. If the source data contains values less than 0001 or greater than 9999, the extraction will error. This issue often arises from legacy data, zero dates (0000-00-00), or improperly validated application inserts.

Any column selected for replication that contains invalid date values will trigger this error.
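Python's standard `datetime` module enforces the same 0001 to 9999 year range, so it can serve as a quick illustration of which values are rejected. This is a sketch using the standard library only; it does not claim to be the library the integration uses internally, and the helper name `is_supported_date` is hypothetical.

```python
from datetime import MAXYEAR, MINYEAR, date


def is_supported_date(year, month, day):
    """Return True if the values form a date that datetime.date can represent."""
    try:
        date(year, month, day)
        return True
    except ValueError:
        return False


print(MINYEAR, MAXYEAR)                 # 1 9999
print(is_supported_date(2024, 2, 29))   # True
print(is_supported_date(0, 0, 0))       # False (MySQL zero date 0000-00-00)
print(is_supported_date(10000, 1, 1))   # False (year above 9999)
```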

Environment

-

Qlik Stitch: ERROR: null value in column xxxxx violates not-null constraint

Loading Error Across All Destinations

When Stitch tries to insert data into a destination table and encounters a NOT NULL constraint violation, the error message typically looks like:

ERROR: null value in column "xxxxx" of relation "xxxxx" violates not-null constraint

or

ERROR: null value in column "xxxxx" violates not-null constraint

Key Points

- The column referenced is usually a Primary Key (PK) field or a Stitch-generated system column (Qlik Stitch Documentation) such as _sdc_level_1_id.

- These _sdc_level_id columns help form composite keys for nested records and are used to associate child records with their parent. Stitch generates these values sequentially for each unique record.

- When combined with _sdc_source_key_[name] columns, they create a unique identifier for each row. Depending on nesting depth, multiple _sdc_level_id columns may exist in subtables.

Resolution

Recommended Approach

Pause the integration, drop the affected table(s) from the destination, and reset the table from the Stitch UI. If you plan to change the PK on the table, you must either:

- Drop the destination table and reload, or

- Modify PK constraints in the destination.

If residual data in the destination is blocking the load, manual intervention may be required. Contact Qlik Support if you need assistance clearing this data.

Cause

Primary Key constraints enforce both uniqueness and non-nullability. If a null value exists in a PK field, the database rejects the insert because Primary Keys cannot contain nulls.

If you suspect your chosen PK field may contain nulls, you can:

- Choose a different field as the primary key to avoid this error, or

- Review and update your source data to populate null records, ensuring data integrity—especially for fields used in a composite PK.
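To check whether a candidate key would hit this constraint, the source records can be scanned for nulls in any key field before choosing it as the PK. The following is a minimal sketch with made-up sample data; the function name `rows_with_null_keys` and the field names are hypothetical.

```python
def rows_with_null_keys(rows, key_fields):
    """Return the rows where at least one of the key fields is missing or None."""
    return [
        row for row in rows
        if any(row.get(field) is None for field in key_fields)
    ]


# Hypothetical source records with a composite key (order_id, line_no)
records = [
    {"order_id": 1, "line_no": 1, "sku": "A"},
    {"order_id": 2, "line_no": None, "sku": "B"},
    {"order_id": None, "line_no": 1, "sku": "C"},
]

bad = rows_with_null_keys(records, ["order_id", "line_no"])
print([row["sku"] for row in bad])  # ['B', 'C']
```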

Environment


-

Qlik Stitch: NetSuite Extraction Error "Request failed syncing stream Transactio...

The NetSuite integration encounters the following extraction error:

[main] tap-netsuite.core - Fatal Error Occured - Request failed syncing stream Transaction, more info: :data {:messages ({:code {:value UNEXPECTED_ERROR}, :message "An unexpected error occurred. Error ID: <ID>", :type {:value ERROR}})}

Resolution

The extraction error message provides limited context beyond the NetSuite Error ID. It is recommended to reach out to NetSuite Support with the Error ID for further elaboration and context.

Cause

This error occurs when NetSuite’s API returns an UNEXPECTED_ERROR during pagination while syncing a stream. It typically affects certain records within the requested range and is triggered by problematic records or internal processing issues during large result set pagination.

Potential contributing factors include:

- Data inconsistencies in specific records.

- NetSuite backend limitations or transient service issues.

- Large result sets combined with complex queries or filters, which increase processing complexity.

Environment

-

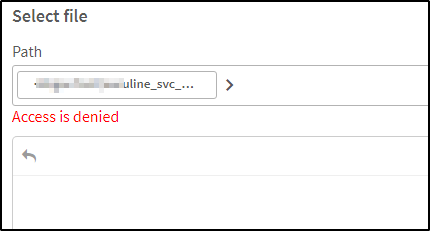

Access denied or Unable to view the files or folders while creating the Folder D...

When attempting to create a Folder Data Connection in Qlik Sense Enterprise, you may encounter one of the following issues:

Access Denied

Or

You will not see any files/folders

Resolution

- Make sure the path you are accessing has proper Read rights and permissions are granted. Please read here to check effective permissions, Effective Permission.

- If it is a multi-node environment, ensure that the path is accessible from all the Qlik Sense Windows machines (RIM nodes).

- Make sure to use UNC paths. Please read more about UNC Paths here, File Path Formats

- Qlik Sense Service account must have access to the path you are connecting to. Please read more about Service Account here,

- The Network Shared folder must be on the same domain as the Qlik Sense Windows machine.

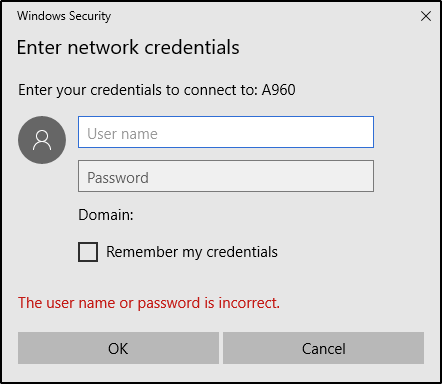

- If it is a network path, ensure that no credentials are saved for users who do not have access to Qlik Sense. If such credentials are saved, accessing the network path may result in the following warning:

The username or password is incorrect

Cause

- Qlik Sense Service Account does not have proper rights and permissions to access the path.

- The Network Shared path is not accessible from the other Qlik Sense Windows machines (RIM nodes) in a multi-node environment.

- The Path provided is a Mapped drive. Please read here as the mapped drives are not supported, Cannot use mapped network drives for Qlik Sense folder connections

- Network share is Password protected and not accessible to the Qlik Sense Service account.

When the network share is password-protected, even if the service account has access to it, you must first enter the network share from the Qlik Sense Windows machine/s with credentials to access it.

Note that for every reboot, the password-protected folder will prompt for credentials, causing the Folder Data Connection to fail since Folder Data Connection does not have an option to save user credentials.

Environment

-

Unable to Fetch Qlik Sense Applications/Streams in Talend Data Catalog

When connecting Talend Data Catalog (TDC) to Qlik Sense using certificate authentication, the connection test shows as successful. However, when attempting to fetch applications/streams, the process fails and no applications are listed after completing the harvest of Qlik Sense Bridge.

HarvestofQlikSenseBridge

Resolution

The Qlik Sense user directory is required for connecting to the Qlik Sense Server with the appropriate user ID. See the "Users" page of the Qlik Management Console

QlikSenseUserDirectory

For Example,

- In our testing, the user has access to the "INTERNAL" user directory, so that directory is specified in the Qlik Sense model. A different user directory can be specified, provided the user has access to it as shown in the above screenshot

INTERNALUser

- Save the changes and import the model

ImportModel

- The apps and streams are now available

AppsandStreams

Cause

This issue occurs when the user directory is not specified correctly.

Related Content

For more information about MIMB Import Bridge from Qlik Sense Server, please refer to documentation:

MIRQlikSenseServerImport.html | www.metaintegration.net

Environment

- Talend Data Catalog

- Qlik Sense Enterprise on Windows


-

Qlik Replicate: Archival Process (Deletes) causing crashing

The following is not being handled properly by Qlik Replicate and leads to a task crashing without errors:

- The execution of DELETE statements on an Oracle source with an invisible column involved,

- With the addition of new columns at a later point in time after the table was created

Resolution

This is caused by SUPPORT-6402, which has been resolved.

Upgrade Qlik Replicate to patch May 2025 SP01 or above.

Cause

When more columns are added to a table with invisible columns, Qlik Replicate cannot process the delete statements as expected.

Internal Investigation ID(s)

SUPPORT-6402

Environment

- Qlik Replicate

-

Qlik Replicate: Microsoft SQL Server 2025 release and native JSON datatype suppo...

Previous versions of Microsoft SQL Server do not support a dedicated JSON data type.

For later versions, Microsoft announced the introduction of a native JSON data type (along with JSON aggregate functions). This new data type is already available in Azure SQL Database and Azure SQL Managed Instance, and is included in SQL Server 2025 (17.x).

SQL Server 2025 (17.x) became Generally Available (GA) on November 18, 2025.

At this time, the current Qlik Replicate major releases 2025.05/2025.11 do not support SQL Server 2025 or its native JSON data type yet.

Environment

- Qlik Replicate all versions

- SQL Server 2025 on-premises

- Azure SQL Database and Azure SQL Managed Instance (which share the same engine as SQL Server 2025)

Common Errors Observed if the table contains a JSON column in SQL Server 2025

SQL Server endpoint with SQL Server 2025 as the source

During the endpoint connection ping test, you may encounter:

SYS-E-HTTPFAIL, Unsupported server/database version: 0.

SYS,GENERAL_EXCEPTION,Unsupported server/database version: 0

MS-CDC endpoint with Azure SQL Database as the source

Since the Azure SQL Database version is always 14.x, the version check succeeds. However, because Azure SQL DB already uses the SQL Server 2025 kernel, the task later fails during runtime with:

[SOURCE_CAPTURE ]T: Failed to set ct table column ids for ct table with id '1021246693' (sqlserver_mscdc.c:2968)

[SOURCE_CAPTURE ]T: Failed to get change tables IDs for capture list [1000100] (sqlserver_mscdc.c:3672)

[SOURCE_CAPTURE ]E: Failed to get change tables IDs for capture list [1000100] (sqlserver_mscdc.c:3672)

Resolution

No workaround can be provided until support has been introduced.

According to the current roadmap, support for SQL Server 2025 and the native JSON data type is planned for the upcoming major release: Qlik Replicate 2026.5.

No date or guaranteed timeframe can yet be given. The support planned for 2026.5 is an estimate.

Internal Investigation ID(s)

00419519