Question

How do I convert a Qlik Sense Bar Chart to a Table without having to recreate the table?

Environment

- Qlik Sense: All supported versions

Solution:

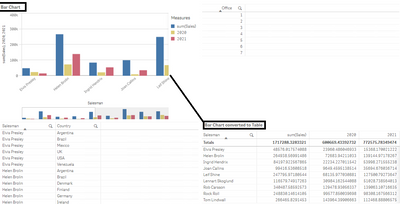

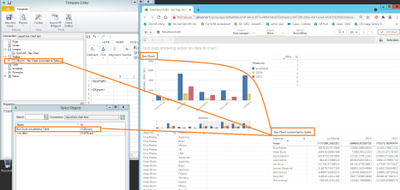

- Edit the Qlik Sense sheet containing the bar chart.

- Make a copy of the bar chart, then convert it to a straight table by dragging the 'Table' object onto the copied bar chart.

- Click 'Convert to table'.

Result:

Use Case: NPrinting Reporting

Keep in mind that Qlik Sense bar charts are not supported as exportable data tables in NPrinting. (See the list of unsupported and partially supported objects: https://help.qlik.com/en-US/nprinting/Content/NPrinting/ReportsDevelopment/Qlik-objects-supported-NP.htm)

To use the data from the bar chart in an NPrinting report, follow the steps above and then:

- Reload the NPrinting connection to this Qlik Sense app.

- Verify that the new object is visible in the Tables list.

You can then use the straight table data in your NPrinting report.

The information in this article is provided as-is and to be used at own discretion. Depending on tool(s) used, customization(s), and/or other factors ongoing support on the solution below may not be provided by Qlik Support.

- Tags:

- Qlik Sense Business

Below is the list of defects resolved with Qlik Sense February 2020 Patch 13. For more information, please see the Release Notes.

Environment: Qlik Sense Enterprise on Windows February 2020 Patch 13

Charts missing from Visualization bundle

Jira issue ID: QB-5320

Description: The Bar & Area chart and Bullet chart are missing from the Visualization bundle. They are still available on sheets, but cannot be created from the assets panel.

- Labels:

- General Question

Below is the list of defects resolved with Qlik Sense April 2020 Patch 17. For more information, please see the Release Notes.

Environment: Qlik Sense Enterprise on Windows April 2020 Patch 17

Charts missing from Visualization bundle

Jira issue ID: QB-5320

Description: The Bar & Area chart and Bullet chart are missing from the Visualization bundle. They are still available on sheets, but cannot be created from the assets panel.

- Labels:

- General Question

Below is the list of defects resolved with Qlik Sense June 2020 Patch 17. For more information, please see the Release Notes.

Environment: Qlik Sense Enterprise on Windows June 2020 Patch 17

Charts missing from Visualization bundle

Jira issue ID: QB-5320

Description: The Bar & Area chart and Bullet chart are missing from the Visualization bundle. They are still available on sheets but cannot be created from the assets panel.

Address layout issues with longer errors in expression editor

Jira issue ID: QB-3989

Description: Improved the layout of the expression editor to allow scrollbars for longer error messages.

Access-Control headers no longer set

Jira issue ID: QB-1733

Description: The Access-Control headers are no longer set by odag-service. This means that any Access-Control header configured in the QMC for the virtual proxy will be used for calls to odag-service, including isOdagAvailable.

- Labels:

- General Question

Below is the list of defects resolved with Qlik Sense September 2020 Patch 13. For more information, please see the Release Notes.

Environment: Qlik Sense Enterprise on Windows September 2020 Patch 13

Opening an app is slow when there are a lot of bookmarks

Jira issue ID: EN-3074

Description: Generic performance improvement around loading of available bookmarks during open app.

Charts missing from Visualization bundle

Jira issue ID: QB-5320

Description: The Bar & Area chart and Bullet chart are missing from the Visualization bundle. They are still available on sheets but cannot be created from the assets panel.

Address layout issues with longer errors in expression editor

Jira issue ID: QB-3989

Description: Improved the layout of the expression editor to allow scrollbars for longer error messages.

Access-Control headers no longer set

Jira issue ID: QB-1733

Description: The Access-Control headers are no longer set by odag-service. This means that any Access-Control header configured in the QMC for the virtual proxy will be used for calls to odag-service, including isOdagAvailable.

- Labels:

- General Question

Below is the list of defects resolved with Qlik Sense November 2020 Patch 11. For more information, please see the Release Notes.

Environment: Qlik Sense Enterprise on Windows November 2020 Patch 11

Charts missing from Visualization bundle

Jira issue ID: QB-5320

Description: The Bar & Area chart and Bullet chart are missing from the Visualization bundle. They are still available on sheets but cannot be created from the assets panel.

Address layout issues with longer errors in expression editor

Jira issue ID: QB-3989

Description: Improved the layout of the expression editor to allow scrollbars for longer error messages.

- Labels:

- General Question

Below is the list of defects resolved with Qlik Sense February 2021 Patch 6. For more information, please see the Release Notes.

Environment: Qlik Sense Enterprise on Windows February 2021 Patch 6

Charts missing from Visualization bundle

Jira issue ID: QB-5320

Description: The Bar & Area chart and Bullet chart are missing from the Visualization bundle. They are still available on sheets but cannot be created from the assets panel.

Fix for Insight Advisor Chat data collection opt-out issue

Jira issue ID: EQB-5204

Description: Fixed an issue where Insight Advisor Chat may send usage metrics to Qlik servers in cases where this has been deactivated.

Not being able to export data from dynamic views

Jira issue ID: QB-4960

Description: The ability to export data from dynamic views has been restored.

Address layout issues with longer errors in expression editor

Jira issue ID: QB-3989

Description: Improved the layout of the expression editor to allow scrollbars for longer error messages.

Fix the bug where tooltip was showing unnecessary information

Jira issue ID: QB-3742

Description: Fixed a bug where the tooltip showed unnecessary information. This happened because the tooltip was not re-rendered when moving away from the incision between areas.

Custom numerical abbreviation not working for the funnel chart

Jira issue ID: QB-3226

Description: Custom numerical abbreviation is now applied to the funnel chart.

Improve handling of conflicts when updating timestamps on UDC-related entities.

Jira issue ID: SHEND-509

Description: The value of ModifiedDate in the UserDirectorySettings table of the QSR database is now set to DateTime.MaxValue to avoid potential conflicts.

- Labels:

- General Question

Server Patch: https://da3hntz84uekx.cloudfront.net/QlikSense/13.51/18/_MSI/Qlik_Sense_update.exe

Desktop: https://da3hntz84uekx.cloudfront.net/QlikSenseDesktop/13.51/18/_MSI/Qlik_Sense_Desktop_setup.exe

- Labels:

- Client Managed

Server Patch: https://da3hntz84uekx.cloudfront.net/QlikSense/13.62/13/_MSI/Qlik_Sense_update.exe

Desktop: https://da3hntz84uekx.cloudfront.net/QlikSenseDesktop/13.62/13/_MSI/Qlik_Sense_Desktop_setup.exe

- Labels:

- Client Managed

Server Patch: https://da3hntz84uekx.cloudfront.net/QlikSense/13.72/17/_MSI/Qlik_Sense_update.exe

Desktop: https://da3hntz84uekx.cloudfront.net/QlikSenseDesktop/13.72/17/_MSI/Qlik_Sense_Desktop_setup.exe

- Labels:

- Client Managed

A certificate error is displayed when you open the Qlik Sense Management Console or Hub:

There is a problem with this website's security certificate.

The end-user can click Continue to this website (not recommended) and successfully access Qlik Sense.

Environment

By default, Qlik Sense Enterprise for Windows uses a self-signed certificate to allow HTTPS access to the Management Console (QMC) and the Hub. Client machines do not trust this certificate by default, because it is not issued by a trusted certificate authority.

Resolution

Option 1: Use a signed certificate

- Obtain a third-party certificate signed by a trusted certificate authority (Enterprise CA, VeriSign, etc.).

- Install the certificate as described in Qlik Sense Hub and QMC with a custom SSL certificate.

Option 2: Import the currently used self-signed certificate to the client machines

Perform the following steps to export the Qlik Sense server certificate and import it on the remote system used to access the Qlik Sense Management Console and Hub:

- Open Microsoft Management Console (MMC) on the Qlik Sense server. Press Windows+R, type MMC, and press ENTER.

- Add the Certificates snap-in using the computer account:
  - Click File and select Add/Remove Snap-in. The Add or Remove Snap-ins window opens.
  - Select Certificates and click Add.
  - Select Computer account and click Next.
  - Select Local computer (selected by default) and click Finish.
  - Click OK. You now have a snap-in named Certificates (Local Computer) in MMC.

- Export the Qlik Sense server certificate from MMC:
  - Expand Certificates.
  - Expand Trusted Root Certification Authorities and select the Certificates folder.
  - Right-click the Qlik Sense server Root Certification Authority and select All Tasks, Export. The Certificate Export Wizard opens.
  - Select the following in the wizard:
    - Select Cryptographic Message Syntax Standard - PKCS #7 Certificates (.P7B).
    - Select Include all certificates in the certification path if possible.
    - Click Next.
    - Specify a file name.
    - Click Next, Finish. This creates the file <chosen_filename>.p7b.

- Copy <chosen_filename>.p7b to the remote system used to access the Qlik Sense Management Console and Hub.

- Install the certificate on the remote system using one of the following methods:
  - Method 1 - Right-click the certificate file:
    - Right-click <chosen_filename>.p7b and select Install Certificate. The Certificate Import Wizard opens.
    - Click Next.
    - Select Place all certificates in the following store.
    - Click Browse.
    - Select Trusted Root Certification Authorities and click OK.
    - Click Next, Finish.
  - Method 2 - Import using Microsoft Internet Explorer:
    - Open Internet Explorer.
    - Select Tools, Internet options.
    - Click the Content tab.
    - Click Certificates.
    - Select the Trusted Root Certification Authorities tab.
    - Click Import. The Certificate Import Wizard opens.
    - Click Next.
    - Click Browse.
    - Browse to and select the <chosen_filename>.p7b file.
    - Click Next.
    - Select Place all certificates in the following store, and then select Trusted Root Certification Authorities.
    - Click Next, Finish.

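After importing the certificate, you may want to confirm from the client machine that the Hub certificate now validates. The following is a minimal Python sketch, not a Qlik tool: it opens a TLS connection with Python's default verification settings (on Windows, `ssl.create_default_context()` reads the Windows certificate stores) and reports whether the handshake succeeded. The function name and the host name used in the usage line are assumptions; pass the host name exactly as it appears in the Hub URL, because a name mismatch also fails verification (compare ERR_CERT_COMMON_NAME_INVALID).

```python
import socket
import ssl


def check_certificate_trust(host: str, port: int = 443, timeout: float = 5.0) -> str:
    """Return 'trusted', 'untrusted', or 'error' for the TLS endpoint host:port.

    Verification uses Python's defaults: the chain must lead to a trusted root
    and the certificate must match the host name.
    """
    context = ssl.create_default_context()  # loads the default trust store
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            # The TLS handshake (and certificate verification) happens here.
            with context.wrap_socket(sock, server_hostname=host):
                return "trusted"
    except ssl.SSLCertVerificationError:
        # Untrusted issuer (such as the default self-signed Qlik Sense CA)
        # or a host name that does not match the certificate.
        return "untrusted"
    except OSError:
        # Connection refused, DNS failure, timeout, or another TLS failure.
        return "error"
```

Usage (hypothetical host name): `check_certificate_trust("qlikserver.example.com")`. A result of "untrusted" before the import and "trusted" afterwards suggests the root certificate was placed in the correct store.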

Related Content

Qlik Sense Hub and QMC with a custom SSL certificate

ERR_CERT_COMMON_NAME_INVALID when using 3rd party certificate

End of Support for Qlik products follows the Release Management Policy, as documented in the Product Terms found on the Qlik site: On-Premise Products Release Management Policy.

For detailed information on end of life and release dates, see:

- Labels:

- Deployment

Some Qlik Sense actions, for example the update of new users, are failing. The Repository logs show this error message: "Sequence contains more than one element".

Environment

- Qlik Sense on Windows, all versions

Resolution

The resolution requires some modifications to the repository database. This can require technical expertise, so we recommend contacting Qlik Support and providing a backup of the repository.

Cause

This issue is caused by duplicated rows in a repository table. In most cases, it occurs after an upgrade.
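The text "Sequence contains more than one element" is the standard .NET message raised when code expects exactly one matching item (for example, LINQ's `Single()`) but finds several. The Python sketch below only illustrates that failure mode and how duplicated keys could be spotted in an exported copy of table data. The function names and sample rows are hypothetical, and this is not a substitute for the database fix, which should be done together with Qlik Support.

```python
from collections import Counter
from typing import Hashable, Iterable, List, TypeVar

T = TypeVar("T")


def single(items: Iterable[T]) -> T:
    """Mimic .NET Single(): return the only item, or raise for 0 or 2+ items."""
    found = list(items)
    if not found:
        raise ValueError("Sequence contains no elements")
    if len(found) > 1:
        raise ValueError("Sequence contains more than one element")
    return found[0]


def duplicated_keys(keys: Iterable[Hashable]) -> List[Hashable]:
    """Return every key that appears more than once, in first-seen order."""
    counts = Counter(keys)
    return [key for key, count in counts.items() if count > 1]


# Hypothetical rows from a table that should hold one row per user.
rows = [
    {"user": "DOMAIN\\alice", "id": 1},
    {"user": "DOMAIN\\bob", "id": 2},
    {"user": "DOMAIN\\bob", "id": 3},  # hypothetical duplicate row
]

print(duplicated_keys(row["user"] for row in rows))
try:
    # A lookup that expects exactly one row for bob fails on the duplicate.
    single(row for row in rows if row["user"] == "DOMAIN\\bob")
except ValueError as err:
    print(err)
```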

Internal Investigation ID(s)

QB-4618

Hi All,

Can anyone help me get the user documents for a specific user only, using the QlikView API? I have already searched for a method for this in the latest version of the QMS API, but I still cannot come up with a solution. Is it possible to get only the user documents by passing the user and domain name along with the server ID?

#QMS #QlikView #API #development #userdocuments

- Tags:

- qlikview

The Qlik ODBC Snowflake Connector fails to connect, and the following error is printed in the Data Load Editor and the connector logs:

ERROR [HY000] [Qlik][Snowflake] (25)Result download worker error: Worker error: [Qlik][Snowflake] (4)REST request for URL https://sfc.s3.amazonaws.com/d

Environment:

Qlik Sense Enterprise on Windows

Qlik ODBC Connector Package (Qlik ODBC Snowflake connector)

Resolution

Add the Proxy and No_Proxy parameters on the Advanced tab when creating or editing the connection in the Data Load Editor:

| Parameter Name | Proxy | No_Proxy |
| --- | --- | --- |
| Value | http://[hostname]:[portnumber] OR [hostname]:[portnumber] | Comma-separated list of IP addresses or host name endings that should be allowed to bypass the proxy server* |

Where [hostname] is the hostname of the proxy server and [portnumber] is the port used by the proxy server.

Proxy is the proxy server URL. When this property is set, all ODBC communication with Snowflake goes through this server, except communication with the hosts specified in the No_Proxy property.

Use No_Proxy as the value name, and a comma-separated list of hostname endings or IP addresses as the value data.

Note: The No_Proxy parameter does not support wildcard characters.
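To illustrate the rules above (a proxy URL in the http://[hostname]:[portnumber] form, and a comma-separated No_Proxy list of host name endings or IP addresses with no wildcards), here is a small Python sketch that assembles and sanity-checks the two values before you paste them into the Advanced tab. The helper names are hypothetical, and the label-boundary suffix matching in `bypasses_proxy` is an assumption based on the "host name endings" wording; the Snowflake driver's own matching may differ in detail.

```python
from typing import Iterable


def proxy_value(hostname: str, port: int) -> str:
    """Build a Proxy value in the http://[hostname]:[portnumber] form."""
    return f"http://{hostname}:{port}"


def no_proxy_value(entries: Iterable[str]) -> str:
    """Join host name endings and IP addresses into a comma-separated No_Proxy value."""
    cleaned = [entry.strip() for entry in entries if entry.strip()]
    for entry in cleaned:
        if "*" in entry or "?" in entry:
            # No_Proxy does not support wildcard characters, so reject them early.
            raise ValueError(f"wildcard characters are not supported: {entry!r}")
    return ",".join(cleaned)


def bypasses_proxy(host: str, no_proxy: str) -> bool:
    """Assumed rule: exact host/IP match, or the host ends with the entry at a
    label boundary (".corp.example" and "corp.example" behave the same here)."""
    host = host.strip().lower()
    for entry in no_proxy.split(","):
        entry = entry.strip().lower()
        if not entry:
            continue
        bare = entry.lstrip(".")
        if host == bare or host.endswith("." + bare):
            return True
    return False
```

For example, `no_proxy_value(["localhost", ".corp.example", "10.0.0.5"])` returns `"localhost,.corp.example,10.0.0.5"`, while an entry such as `"*.corp.example"` raises `ValueError`. The host names are placeholders.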

- Tags:

- snowflake

- Labels:

- Configuration

- Data Connection

Suppose we need to compare two JSON objects and find the keys whose values differ between them; the Compare Object block is used for this. The output of the Compare Object block may contain null properties, that is, keys with null values. To get rid of these null properties, use the 'Remove Empty Properties' formula available in the editor.

Usage: {removeemptyproperties: {$.compareObject}}

This is useful when updating an endpoint based on differences between source data and destination data: the Compare Object block compares both objects (source and destination) and outputs the keys whose values differ. Fields that are missing from the source data are also listed in the output of the Compare Object block. If you update the endpoint only when the Compare Object output is not empty (that is, only when source and destination data differ), this can cause an issue, because the output always lists null properties when fields are missing from the source data. In that case, apply the 'Remove Empty Properties' formula to the output of the Compare Object block.
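The following Python sketch mirrors the idea on plain dictionaries. A simplified, hypothetical `compare_objects` reports keys whose values differ (a key missing from the source shows up as `None`), and `remove_empty_properties` drops those null entries so that an "update only if something changed" check behaves as intended. It is not the implementation of the Compare Object block or the `removeemptyproperties` formula, whose exact output may differ; in particular, this sketch removes only `None` values.

```python
from typing import Any, Dict


def compare_objects(source: Dict[str, Any], destination: Dict[str, Any]) -> Dict[str, Any]:
    """Hypothetical simplified compare: keys whose values differ, with the source value.

    A key present in destination but missing from source appears with None,
    similar to the null properties described for the Compare Object block.
    """
    diff: Dict[str, Any] = {}
    for key in source.keys() | destination.keys():
        if source.get(key) != destination.get(key):
            diff[key] = source.get(key)
    return diff


def remove_empty_properties(obj: Dict[str, Any]) -> Dict[str, Any]:
    """Drop properties whose value is None (other 'empty' values are kept here)."""
    return {key: value for key, value in obj.items() if value is not None}


source = {"email": "a@example.com", "phone": "123"}
destination = {"email": "a@example.com", "phone": "123", "fax": "999"}

# Without cleaning, {"fax": None} would look like a change and trigger an update.
changes = remove_empty_properties(compare_objects(source, destination))
if changes:
    print("update endpoint with", changes)
else:
    print("no update needed")
```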

- Tags:

- blendr.io

- Labels:

- How To

- Workflow and Automation

This article explains how variables can be shared between different templates in a Blendr.io bundle.

General idea

Store the variables in the CDP or in the Data Store and give them a unique identifier. It's best to use the {bundleguid} formula for this as it is unique for each installation of the bundle and can't be changed by a user.

When using the CDP, add variables using the "Upsert Custom Object By Key" block with {bundleguid} as a scope. This should be done during the setup or settings flow of a template.

Storing variables in the Data Store is a bit more involved, because the Data Store must be created before any variables can be added to it. Create the Data Store in the setup flow of one of the templates in the bundle. Then add new variables to it in the settings flow of the same template or of other templates. Use {bundleguid} as the name of the Data Store.

By implementing this, each installation of the bundle has its own CDP/Data Store.
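To make the scoping idea concrete, here is a minimal in-memory Python model; it is not the Blendr.io CDP or Data Store API. A hypothetical `BundleStore` keeps one namespace per bundle installation, keyed by the value that {bundleguid} would return, so templates in the same installation see each other's variables while a second installation of the same bundle does not. The GUIDs and variable names are invented for illustration.

```python
from typing import Any, Dict, Optional


class BundleStore:
    """Hypothetical stand-in for the CDP/Data Store, scoped by bundle GUID."""

    def __init__(self) -> None:
        self._scopes: Dict[str, Dict[str, Any]] = {}

    def create_scope(self, bundle_guid: str) -> None:
        # Models creating the Data Store named {bundleguid} in a setup flow.
        self._scopes.setdefault(bundle_guid, {})

    def upsert(self, bundle_guid: str, key: str, value: Any) -> None:
        # Models the Data Store rule: the store must exist before adding variables.
        if bundle_guid not in self._scopes:
            raise KeyError(f"scope {bundle_guid!r} must be created first")
        self._scopes[bundle_guid][key] = value

    def get(self, bundle_guid: str, key: str) -> Optional[Any]:
        return self._scopes.get(bundle_guid, {}).get(key)


store = BundleStore()
install_a, install_b = "guid-install-a", "guid-install-b"  # hypothetical GUIDs
for guid in (install_a, install_b):
    store.create_scope(guid)

# A template in installation A stores a variable in its settings flow...
store.upsert(install_a, "region", "eu")
# ...another template in installation A can read it; installation B cannot.
print(store.get(install_a, "region"), store.get(install_b, "region"))
```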

Use case: sharing trigger URLs between templates

When building bundles that contain triggered templates, the trigger URL of these templates is different for each installation, so hard-coding it is not an option (see Triggered blends).

By storing the trigger URLs in the CDP (or Data Store), they can be accessed by other templates in the bundle.

Once a URL is fetched, the corresponding template can be executed by using the Call URL block.

1) Store the trigger URL by using the {url} formula:

2) Fetch the URL from the CDP/Data Store in a different template:
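The two steps can be modeled in Python as follows, purely as a sketch. A dictionary stands in for the CDP/Data Store, the GUID and trigger URL are made-up placeholders, and `urllib.request.Request` only builds the HTTP request that a Call URL block would send; nothing is sent here. The key format `trigger_url:<template>` and the use of POST with a JSON body are assumptions.

```python
import json
import urllib.request
from typing import Dict, Tuple

# Stand-in for the CDP/Data Store, keyed by (bundle GUID, variable name).
shared_store: Dict[Tuple[str, str], str] = {}


def store_trigger_url(bundle_guid: str, template: str, url: str) -> None:
    """Step 1: the triggered template saves its own URL (the {url} formula value)."""
    shared_store[(bundle_guid, f"trigger_url:{template}")] = url


def build_call(bundle_guid: str, template: str, payload: dict) -> urllib.request.Request:
    """Step 2: another template fetches the URL and prepares the call."""
    url = shared_store[(bundle_guid, f"trigger_url:{template}")]
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )


guid = "guid-install-a"  # hypothetical {bundleguid} value
store_trigger_url(guid, "sync-contacts", "https://example.com/hypothetical/trigger/123")
request = build_call(guid, "sync-contacts", {"contactId": 42})
print(request.get_method(), request.full_url)
# urllib.request.urlopen(request) would actually send the request.
```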

The information in this article is provided as-is and to be used at own discretion. Depending on tool(s) used, customization(s), and/or other factors ongoing support on the solution below may not be provided by Qlik Support.

- Tags:

- blendr.io

- Labels:

- How To

- Workflow and Automation

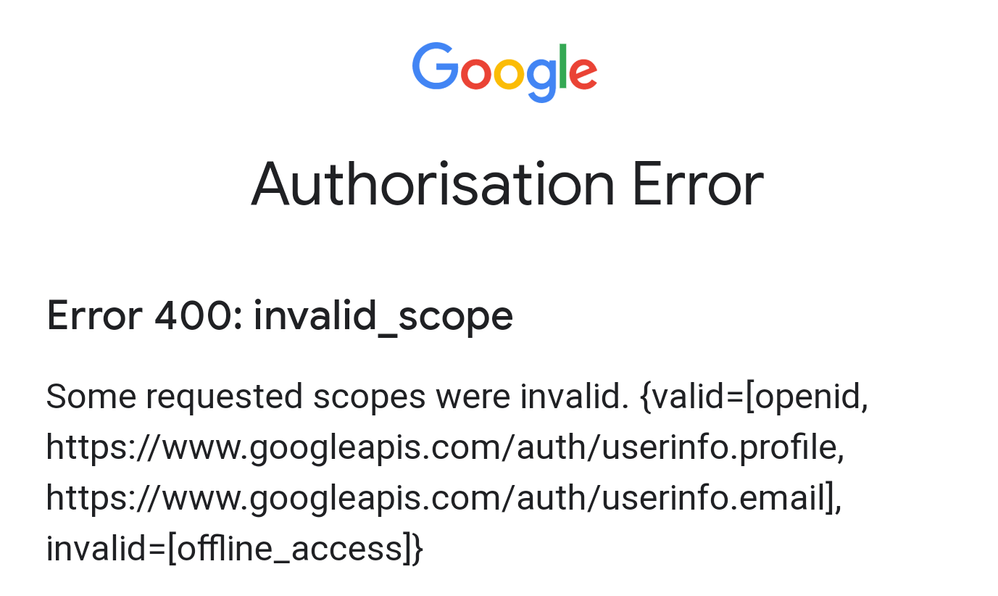

Using Google as the IdP with the Qlik Sense Mobile (SaaS) app on either iOS or Android fails.

The following error is shown in the app:

Authorisation Error

Error 400: invalid_scope

Some requested scopes were invalid. {valid=[openid, https://www.googleapis.com/auth/userinfo.profile, https://www.googleapis.com/auth/userinfo.email], invalid=[offline_access]}

Resolution

Set the Block_offline_access scope in your Google IdP Advanced settings in the Qlik Cloud console.

Environment

Qlik Sense Mobile SaaS with Qlik Cloud

- Labels:

- Integration and Embedding

Does Replicate support STARTTLS?

How do I enable SSL for email notifications and configure email with STARTTLS?

Environment:

Qlik Replicate version: 7.x

Qlik Enterprise Manager: 7.x

Currently, Qlik Replicate does not support STARTTLS. Please raise an enhancement request for this capability.

To submit a Feature Request, see Getting Started with Ideas.

Is there any way to add explanations or details about table columns?

Environment

- Qlik Sense Desktop, all versions

- Qlik Sense Enterprise, all versions

The information in this article is provided as-is and to be used at own discretion. Depending on tool(s) used, customization(s), and/or other factors ongoing support on the solution below may not be provided by Qlik Support.

Resolution

No, it is not possible to add explanations or details in regular Qlik Sense charts.

However, you can add explanations or details in the "Description" field of master items, as shown below:

If adding details to regular Qlik Sense charts is necessary, create a custom extension or raise a feature request.