

Recent Documents

-

How to schedule a Talend Job with Kubernetes

This article explains how to schedule a Talend Job as a Kubernetes Job and how to use Kubernetes as a Job orchestrator. Sources for th...

-

Qlik Talend ESB: Activity monitoring with routes

Overview This article expands on the Talend Knowledge Base (KB) article, Improve Camel Flow Monitoring, by demonstrating how to use the monitoring s...

-

Getting a '[statistics] connection refused' error

Talend Version All versions Summary Additional Versions Product Data Integration Component Studio Problem Description Running a Job in Studio wit...

-

How to configure observability metrics with Talend Cloud and Remote Engine

You can observe your Data Integration Jobs running on Talend Remote Engines if your Jobs are scheduled to run on Talend Remote Engine version 2.9.2 or later.

This is a step-by-step guide on how Talend Cloud Management Console can provide the data needed to build your own customized dashboards, with an example of how to ingest and consume data from Microsoft Azure Monitor.

Once you have set up the metric and log collection system in Talend Remote Engine and your Application Performance Monitoring (APM) tool, you can design and organize your dashboards thanks to the information sent from Talend Cloud Management Console to APM through the engine.

Content:

- Prerequisites

- Configuring and starting the remote engine

- Get the metric details from the API

- Push metric to Azure logs workspace

- Observable metrics

- Queries and dashboard example

- Report One: A sample report for the number of rows processed by a component within the overall run

- Report Two: A sample report to showcase the average time taken by each component grouped by Job

- Report Three: A sample report to showcase OS memory and file storage available in MB

- Report Four: A sample report to showcase all jvm_process_cpu_load events counted in the last two days, in 15-minute intervals

- Azure dashboard

- Pin the reports to the Azure dashboard

- Sample Talend Data Integration Job

- Job structure

- Job details

Prerequisites

This document has been tested on the following products and versions running in a Talend Cloud environment:

- Remote Engine 2.9.2 #182 – downloaded and installed following the instructions in Installing Talend Remote Engine in the Talend Help Center.

Optional requirements for obtaining detailed Job statistics:

- Studio 7.3.1 R2020-07 – downloaded from the Talend Cloud Portal and updated with the appropriate monthly patch

- Republish your Jobs from the new version of Studio to Talend Cloud Management Console

Configuring and starting the remote engine

To configure the files and check that the Remote Engine is running, navigate to the Monitoring Job runs on Remote Engines section of the Talend Remote Engine User Guide for Linux.

Get the metric details from the API

Use any REST client, such as Talend API Tester or Postman, and use the endpoint as explained below.

- Endpoint:

      GET http://ip_where_RE_is_installed:8043/metrics/json

  8043 is the default HTTP port of Remote Engines. Replace it with the port you used when installing the Remote Engine.

- Add a header: Authorization: Bearer {token}

  This token is defined in the etc/org.talend.observability.http.security.cfg file as endpointToken={token}.

- Example:

      GET http://localhost:8043/metrics/json
      Authorization: Bearer F7VvcRAC6T7aArU
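The same call can be made from code instead of a REST client. The following is a minimal sketch, assuming Java 11 or later (for `java.net.http`); the class name `RemoteEngineMetricsClient` and its method names are hypothetical, and the host, port, and token are placeholders you supply.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

// Hypothetical helper, not part of any Talend library.
final class RemoteEngineMetricsClient {

    // Builds the GET request for the metrics endpoint with the bearer token header.
    static HttpRequest buildRequest(String host, int port, String token) {
        return HttpRequest.newBuilder()
                .uri(URI.create("http://" + host + ":" + port + "/metrics/json"))
                .header("Authorization", "Bearer " + token)
                .timeout(Duration.ofSeconds(10))
                .GET()
                .build();
    }

    // Sends the request and returns the raw JSON body; fails on any status other than 200.
    static String fetchMetrics(String host, int port, String token)
            throws IOException, InterruptedException {
        HttpClient client = HttpClient.newHttpClient();
        HttpResponse<String> response =
                client.send(buildRequest(host, port, token), HttpResponse.BodyHandlers.ofString());
        if (response.statusCode() != 200) {
            throw new IllegalStateException("Unexpected HTTP status " + response.statusCode());
        }
        return response.body();
    }
}
```

For example, `RemoteEngineMetricsClient.fetchMetrics("localhost", 8043, token)` would return the JSON payload, where `token` is the endpointToken value from the configuration file.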

Push metric to Azure logs workspace

There are numerous ways to push the metric results to any analytics and visualization tool. This document shows how to use the Azure Monitor HTTP Data Collector API to push the metrics to an Azure Log Analytics workspace. Python code is also used to send the logs in batch mode at frequent intervals. Alternatively, you can create a Talend Job as a service for real-time metric extraction. For more information, see the attached Job and Python Code.zip file.
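The attached Python code is not reproduced here. As an illustration of the part of the Data Collector API that is easiest to get wrong, the sketch below builds the SharedKey Authorization header in Java. It assumes the documented scheme (HMAC-SHA256 over the request description, keyed with the Base64-decoded workspace key); the class name `AzureLogSignature` is hypothetical, and the workspace ID and key are placeholders.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.util.Base64;
import javax.crypto.Mac;
import javax.crypto.spec.SecretKeySpec;

// Hypothetical helper for illustration only.
final class AzureLogSignature {

    // Returns the value of the Authorization header: "SharedKey <workspaceId>:<signature>".
    // rfc1123Date must be the same string sent in the x-ms-date header.
    static String authorization(String workspaceId, String sharedKeyBase64,
                                int contentLength, String rfc1123Date) {
        String stringToSign = "POST\n" + contentLength + "\napplication/json\n"
                + "x-ms-date:" + rfc1123Date + "\n/api/logs";
        try {
            Mac mac = Mac.getInstance("HmacSHA256");
            mac.init(new SecretKeySpec(Base64.getDecoder().decode(sharedKeyBase64), "HmacSHA256"));
            byte[] digest = mac.doFinal(stringToSign.getBytes(StandardCharsets.UTF_8));
            return "SharedKey " + workspaceId + ":" + Base64.getEncoder().encodeToString(digest);
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("Could not sign the request", e);
        }
    }
}
```

The header would accompany a POST of the JSON metrics, together with the x-ms-date header and a Log-Type header naming the custom log. Azure appends `_CL` to that name, so a Log-Type of `Remote_Engine_OBS` is assumed here to match the `Remote_Engine_OBS_CL` table used in the sample queries.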

The logs are pushed to the Azure Log Analytics workspace as “custom logs”.

Observable metrics

Talend Cloud Management Console provides metrics through Talend Remote Engine. They can be integrated in your APM tool to observe your Jobs.

For the list of available metrics, see Available metrics for monitoring in the Talend Remote Engine User Guide for Linux.

Queries and dashboard example

Report One: A sample report for the number of rows processed by a component within the overall run

Query:

    Remote_Engine_OBS_CL
    | where TimeGenerated > ago(2d)
    | where name_s == 'component_connection_rows_total'
    | summarize sum(value_d) by context_target_connector_type_s
    | render piechart

Chart:

Report Two: A sample report to showcase the average time taken by each component grouped by Job

Query:

    Remote_Engine_OBS_CL
    | where TimeGenerated > ago(2d)
    | where name_s == 'component_execution_duration_seconds'
    | summarize count(), avg(value_d) by context_artifact_name_s, context_connector_label_s

Chart:

Report Three: A sample report to showcase OS memory and file storage available in MB

Query:

    Remote_Engine_OBS_CL
    | where name_s == 'os_memory_bytes_available' or name_s == 'os_filestore_bytes_available'
    | summarize sum(value_d)/1000000 by name_s

Chart:

Report Four: A sample report to showcase all jvm_process_cpu_load events counted in the last two days, in 15-minute intervals

Query:

    Remote_Engine_OBS_CL
    | where TimeGenerated > ago(2d)
    | where name_s == 'jvm_process_cpu_load'
    | summarize events_count=sum(value_d) by bin(TimeGenerated, 15m), context_artifact_name_s
    | render timechart

Chart:

Azure dashboard

Pin the reports to the Azure dashboard

Sample Talend Data Integration Job

This section explains the sample Job used to send the metric logs to the Azure log workspace. This Job is available in the attached Job and Python Code.zip file.

Job structure

Job details

The components used and their detailed configurations are explained below.

tREST

Component to make a REST API Get call.

tJavaRow

The component used to print the response from the API call.

tFileOutputRaw

The component used to create a JSON file with the API response body.

tSystem

Component to call the Python code.

tJava

Related Content

-

Log4j tips and tricks

Log4j, incorporated in Talend software, is an essential tool for discovering and solving problems. This article shows you some tips and tricks for using Log4j.

The examples in this article use Log4j v1, but Talend 7.3 uses Log4j v2. Although the syntax is different between the versions, anything you do in Log4j v1 should work, with some modification, in Log4j v2. For more information on Log4j v2, see Configuring Log4j, available in the Talend Help Center.

Content:

- Configuring Log4j in Talend Studio

- Emitting messages

- Routines

- Controlling Log4j message formats with patterns

- Logging levels

- Using Appenders

- Using filters

- Overriding default settings in Talend Administration Center

Configuring Log4j in Talend Studio

Configure the log4j.xml file in Talend Studio by navigating to File > Edit Project properties > Log4j.

You can also configure Log4j using properties files or built-in classes; however, that is not covered in this article.

Emitting messages

You can execute code in a tJava component to create Log4j messages, as shown in the example below:

    log.info("Hello World");
    log.warn("HELLO WORLD!!!");

This code results in the following messages:

    [INFO ]: myproject.myjob - Hello World
    [WARN ]: myproject.myjob - HELLO WORLD!!!

Routines

You can use Log4j to emit messages by creating a logger class in a routine, as shown in the example below:

    public class logSample {
        /*Pick 1 that fits*/
        private static org.apache.log4j.Logger log = org.apache.log4j.Logger.getLogger(logSample.class);
        private static org.apache.log4j.Logger log1 = org.apache.log4j.Logger.getLogger("from_routine_logSample");
        /*...*/

        public static void helloExample(String message) {
            if (message == null) {
                message = "World";
            }
            log.info("Hello " + message + " !");
            log1.info("Hello " + message + " !");
        }
    }

To call this routine from Talend, use the following command in a tJava component:

    logSample.helloExample("Talend");

The log results will look like this:

    [INFO ]: routines.logSample - Hello Talend !
    [INFO ]: from_routine_logSample - Hello Talend !

Using <routineName>.class includes the class name in the log results. Using free text with the logger includes the text itself in the log results. This is not really different than using System.out, but Log4j can be customized and fine-tuned.

Controlling Log4j message formats with patterns

You can use patterns to control the Log4j message format. Adding patterns to Appenders customizes their output. Patterns add extra information to the message itself. For example, when multiple threads are used, the default pattern doesn't provide information about the origin of the message. Use the %t variable to add a thread name to the logs. To easily identify new messages, it's helpful to use %d to add a timestamp to the log message.

To add thread names and timestamps, use the following pattern after the CONSOLE appender section in the Log4j template:

    <param name="ConversionPattern" value="%d{yyyy-MM-dd HH:mm:ss} [%-5p] (%t): %c - %m%n" />

The pattern displays messages as follows:

    ISO formatted date [log level] (thread name): class projectname.jobname - message contents
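To see how each conversion character maps onto the output, the sketch below reproduces the pattern with plain Java string formatting. It does not use Log4j; the class `PatternSketch` is hypothetical and only mimics the layout, where `%-5p` left-justifies the level in a five-character field.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;

// Illustration only: imitates the Log4j pattern without depending on Log4j.
final class PatternSketch {
    private static final DateTimeFormatter TIMESTAMP =
            DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss");

    // Mirrors "%d{yyyy-MM-dd HH:mm:ss} [%-5p] (%t): %c - %m%n".
    static String format(LocalDateTime when, String level, String thread,
                         String logger, String message) {
        return String.format("%s [%-5s] (%s): %s - %s%n",
                TIMESTAMP.format(when), level, thread, logger, message);
    }

    public static void main(String[] args) {
        System.out.print(format(LocalDateTime.now(), "INFO",
                Thread.currentThread().getName(), "myproject.myjob", "Hello World"));
    }
}
```

The padding is why `INFO` and `WARN` appear as `[INFO ]` and `[WARN ]` while `DEBUG` fills the field exactly.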

If the following Java code is executed in three parallel threads, using the sample pattern above helps distinguish between the threads.

    java.util.Random rand = new java.util.Random();
    log.info("Hello World");
    Thread.sleep(rand.nextInt(1000));
    log.warn("HELLO WORLD!!!");
    logSample.helloExample("Talend");

This results in an output that shows which thread emitted the message and when:

    2020-05-19 12:18:30 [INFO ] (tParallelize_1_e45bc79b-d61f-45a3-be8f-7089ab6d565d): myproject.myjob_0_1.myjob - Hello World
    2020-05-19 12:18:30 [INFO ] (tParallelize_1_4064c9b8-0585-41e0-b9f0-95fb31e602b7): myproject.myjob_0_1.myjob - Hello World
    2020-05-19 12:18:30 [INFO ] (tParallelize_1_a8ef1065-0106-4b45-8a60-d02a9cbe1f00): myproject.myjob_0_1.myjob - Hello World
    2020-05-19 12:18:30 [WARN ] (tParallelize_1_e45bc79b-d61f-45a3-be8f-7089ab6d565d): myproject.myjob_0_1.myjob - HELLO WORLD!!!
    2020-05-19 12:18:30 [INFO ] (tParallelize_1_e45bc79b-d61f-45a3-be8f-7089ab6d565d): routines.logSample - Hello Talend !
    2020-05-19 12:18:30 [INFO ] (tParallelize_1_e45bc79b-d61f-45a3-be8f-7089ab6d565d): from_routine.logSample - Hello Talend !
    2020-05-19 12:18:30 [WARN ] (tParallelize_1_a8ef1065-0106-4b45-8a60-d02a9cbe1f00): myproject.myjob_0_1.myjob - HELLO WORLD!!!
    2020-05-19 12:18:30 [INFO ] (tParallelize_1_a8ef1065-0106-4b45-8a60-d02a9cbe1f00): routines.logSample - Hello Talend !
    2020-05-19 12:18:30 [INFO ] (tParallelize_1_a8ef1065-0106-4b45-8a60-d02a9cbe1f00): from_routine.logSample - Hello Talend !
    2020-05-19 12:18:31 [WARN ] (tParallelize_1_4064c9b8-0585-41e0-b9f0-95fb31e602b7): myproject.myjob_0_1.myjob - HELLO WORLD!!!
    2020-05-19 12:18:31 [INFO ] (tParallelize_1_4064c9b8-0585-41e0-b9f0-95fb31e602b7): routines.logSample - Hello Talend !
    2020-05-19 12:18:31 [INFO ] (tParallelize_1_4064c9b8-0585-41e0-b9f0-95fb31e602b7): from_routine.logSample - Hello Talend !

If you want to know which component belongs to which thread, you need to change the log level to add more information.

You can do this in Studio on the Run tab, in the Advanced settings tab of the Job execution.

In Talend Administration Center, you do this in Job Conductor.

Using DEBUG level adds a few extra lines to the log file, which can help you understand which parameters resulted in a certain output:

    2020-05-19 12:51:50 [DEBUG] (tParallelize_1_c6de81be-1bbf-4f9b-9b7a-3d92bf345c40): myproject.myjob_0_1.myjob - tParallelize_1 - The subjob starting with the component 'tJava_1' starts.
    2020-05-19 12:51:50 [DEBUG] (tParallelize_1_fa636a36-9f53-423f-abc6-b26c4c52c5b4): myproject.myjob_0_1.myjob - tParallelize_1 - The subjob starting with the component 'tJava_3' starts.
    2020-05-19 12:51:50 [DEBUG] (tParallelize_1_d4da8ea0-4401-4229-82e9-86ff0ed67c3b): myproject.myjob_0_1.myjob - tParallelize_1 - The subjob starting with the component 'tJava_2' starts.

Keep in mind the following:

- Changing the default log pattern causes Studio to stop coloring the messages.

- The default log level in Studio is defined by the root logger's priority value (Warn, by default).

- Changing the log level changes the number of messages.

- Changing the pattern changes the message format.

Logging levels

The following table describes the Log4j logging levels you can use in Talend applications:

| Logging level | Description |
| --- | --- |
| TRACE | Everything that is available is being emitted at this logging level, which makes every row behave like it has a tLogRow component attached. This can make the log file extremely large; however, it also displays the transformation done by each component. |
| DEBUG | This logging level displays the component parameters, database connection information, queries executed, and provides information about which row is processed, but it does not capture the actual data. |
| INFO | This logging level includes the Job start and finish times, and how many records were read and written. |
| WARN | Talend components do not use this logging level. |
| ERROR | This logging level writes exceptions. These exceptions do not necessarily cause the Job to halt. |
| FATAL | When this appears, the Job execution is halted. |
| OFF | Nothing is emitted. |

These levels offer high-level controls for messages. When changed from the outside, they affect only the Appenders that did not specify a log level and rely on the level set by the root logger.
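The interaction between the root level and an Appender's own threshold can be sketched as follows. This is a simplified model, not Log4j code: the class `LevelSketch` and the method `isDelivered` are hypothetical, and the model assumes a message must pass the root level and, when one is set, the Appender threshold.

```java
// Simplified model of level filtering; it does not depend on Log4j.
final class LevelSketch {

    // Ordered from most verbose to least verbose, as in the table above.
    enum Level { TRACE, DEBUG, INFO, WARN, ERROR, FATAL, OFF }

    // True when the message level is at least as severe as the threshold.
    static boolean passes(Level message, Level threshold) {
        return message.ordinal() >= threshold.ordinal();
    }

    // A message reaches an Appender when it passes the root level and,
    // if the Appender defines its own threshold (non-null), that threshold too.
    static boolean isDelivered(Level message, Level rootLevel, Level appenderThreshold) {
        return passes(message, rootLevel)
                && (appenderThreshold == null || passes(message, appenderThreshold));
    }
}
```

In this model, lowering the root level to DEBUG lets INFO messages reach an Appender without a threshold, while an Appender with a threshold of ERROR still receives only ERROR and FATAL messages.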

Using Appenders

Log4j messages are processed by Appenders, which route the messages to different outputs, such as to console, files, or logstash. Appenders can even send messages to databases, but for database logs, the built-in Stats & Logs might be a better solution.

Storing Log4j messages in files can be useful when working with standalone Jobs. Here is an example of a file Appender:

    <appender name="ROLLINGFILE" class="org.apache.log4j.RollingFileAppender">
        <param name="file" value="rolling_error.log"/>
        <param name="Threshold" value="ERROR"/>
        <param name="MaxFileSize" value="10000KB"/>
        <param name="MaxBackupIndex" value="5"/>
        <layout class="org.apache.log4j.PatternLayout">
            <param name="ConversionPattern" value="%d{yyyy-MM-dd HH:mm:ss} [%-5p] (%t): %c - %m%n"/>
        </layout>
    </appender>

You can use multiple Appenders to have multiple files with different log levels and formats. Use the parameters to control the content. The Threshold value of ERROR doesn't provide information about the Job execution, but a value of INFO makes errors harder to detect.

For more information on Appenders, see the Apache Interface Appender page.

Using filters

You can use filters with Appenders to keep messages that are not of interest out of the logs. Log4j v2 offers regular expression based filters too.

The following example filter omits any Log4j messages that contain the string " - Adding the record ".

    <filter class="org.apache.log4j.varia.StringMatchFilter">
        <param name="StringToMatch" value=" - Adding the record " />
        <param name="AcceptOnMatch" value="false" />
    </filter>
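The decision such a filter makes can be modeled in a few lines. The sketch below is a stand-alone approximation of the Log4j v1 StringMatchFilter behavior, written without the Log4j dependency; the class `StringMatchSketch` is hypothetical.

```java
// Stand-alone approximation of a string-match filter decision.
final class StringMatchSketch {

    enum Decision { ACCEPT, DENY, NEUTRAL }

    // No match leaves the decision to the next filter in the chain (NEUTRAL);
    // a match is accepted or denied depending on acceptOnMatch.
    static Decision decide(String message, String stringToMatch, boolean acceptOnMatch) {
        if (message == null || stringToMatch == null || !message.contains(stringToMatch)) {
            return Decision.NEUTRAL;
        }
        return acceptOnMatch ? Decision.ACCEPT : Decision.DENY;
    }
}
```

With AcceptOnMatch set to false, as in the example filter, a message containing " - Adding the record " is denied and every other message passes through to any remaining filters.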

Overriding default settings in Talend Administration Center

When a Java program starts, it attempts to load its Log4j settings from the log4j.xml file. You can modify this file to change the default settings, or you can force Java to use a different file. For example, you can do this for Jobs deployed to Talend Administration Center by configuring the JVM parameters. This way, you can change the logging behavior for a Job without modifying the original Job, or you can revert back to the original logging behavior by clearing the Active check box.

-

Enabling Snowflake tracing log in Talend Studio components

Use one of the following options to enable the Snowflake tracing log.

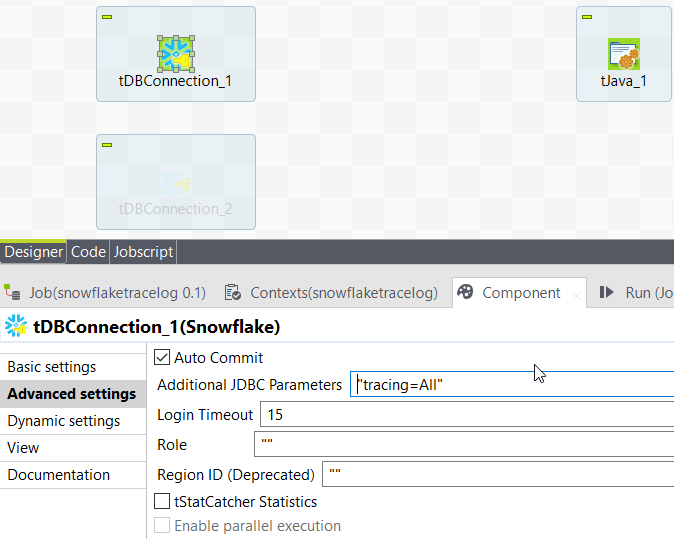

Option 1 - Using the Snowflake connection component

Add "tracing=All" to the component Advanced Settings > Additional JDBC Parameters field.

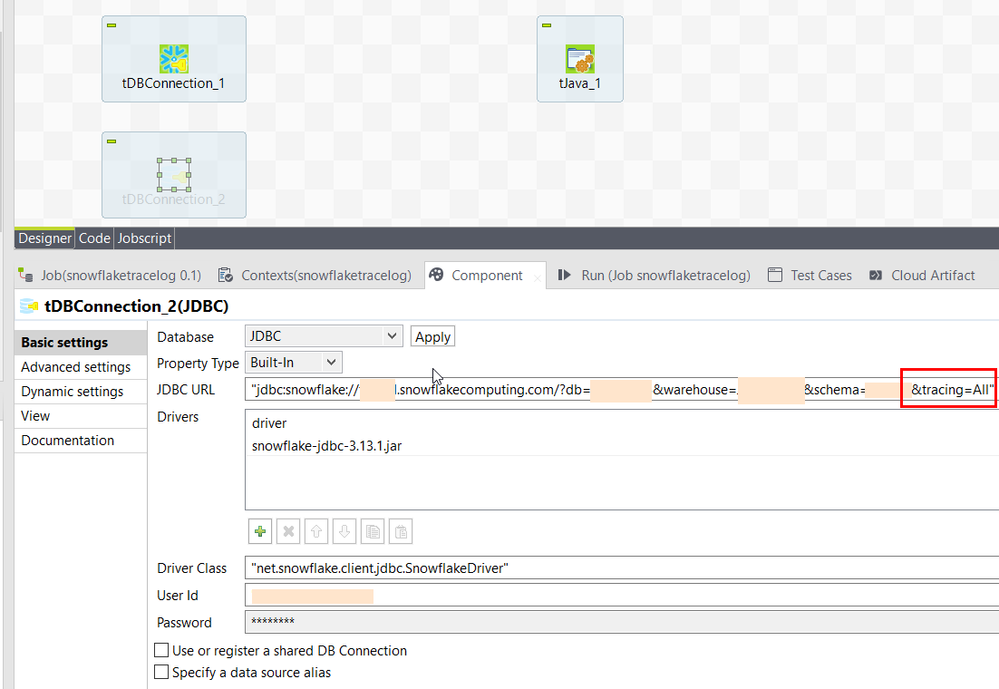

Option 2 - Using the JDBC connection component

Configure the JDBC URL using the following parameters:

jdbc:snowflake://<account>.snowflakecomputing.com?db=<dbname>&warehouse=<whname>&schema=<scname>&tracing=ALL

Locating the trace logs

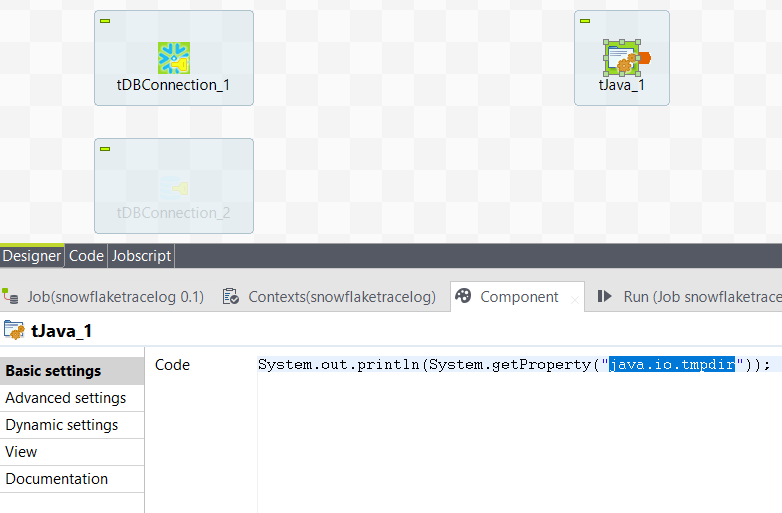

You can locate the trace log, stored in the tmp log file directory, by running a tJava component with the following code:

    System.out.println(System.getProperty("java.io.tmpdir"));

For more information, see the Snowflake KB article, How To: Generate log files for Snowflake Drivers & Connectors
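Once you know the directory, you can list candidate trace files instead of browsing for them. The sketch below is an assumption-laden example: the class `TraceLogFinder` is hypothetical, and the `snowflake_jdbc` file name prefix is an assumption about how the driver names its log files, so adjust it to what you find on your system.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Hypothetical helper that lists regular files in a directory by name prefix.
final class TraceLogFinder {

    static List<Path> find(Path dir, String prefix) {
        try (Stream<Path> files = Files.list(dir)) {
            return files
                    .filter(Files::isRegularFile)
                    .filter(p -> p.getFileName().toString().startsWith(prefix))
                    .sorted()
                    .collect(Collectors.toList());
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        Path tmp = Paths.get(System.getProperty("java.io.tmpdir"));
        // "snowflake_jdbc" is an assumed prefix, not confirmed by this article.
        find(tmp, "snowflake_jdbc").forEach(System.out::println);
    }
}
```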

-

Talend Cloud Platform Engines: Cloud Engine, Remote Engine, and Remote Engine Ge...

Talend Cloud platform provides computational capabilities that allow organizations to securely run data integration processes natively from cloud to cloud, on-premises to cloud, or cloud to on-premises environments.

These capabilities are powered by compute resources, commonly known as Engines. This article covers the four basic types.

Content:

- Cloud Engine (CE)

- Remote Engine (RE)

- Remote Engine Gen2 (REG2)

- Cloud Engine for Design (CE4D)

- Cloud Engine versus Remote Engine

- Cloud Engine for Design versus Remote Engine Gen 2

- Need for additional engines

- Cloud Engine - usage considerations

- Remote Engine – recommendations

- Summary

Cloud Engine (CE)

A Cloud Engine is a compute resource managed by Talend in Talend Cloud that executes Job tasks.

- You can allocate Cloud Engines to environments in proportion to the number of concurrent task executions, workloads, and Job designs you plan to run.

- All environments can use unassigned Cloud Engines. If Cloud Engines are not allocated to specific environments, you may not be able to run certain tasks because other tasks might keep all the unassigned Cloud Engines occupied.

- Cloud Engines can handle parallel execution of three tasks. That means a maximum of three different tasks can run in parallel on a single Cloud Engine. (A task cannot run more than once concurrently on a single Cloud Engine.) So, if three different tasks are already running on a Cloud Engine or if the same task is already running on that engine, another Cloud Engine is selected to execute the task.

- If you run your task in Cloud Exclusive mode, you cannot execute other tasks on that Cloud Engine. You can only use Cloud Exclusive engines in environments that do not have Cloud Engines assigned to them.

- Cloud Engines have limited system resources – memory usage: 8 GB, disk usage: 200 GB.

- Only standard Data Integration Batch Jobs can run on Cloud Engines.

- You cannot group Cloud Engines together to form clusters.

- Cloud Engines are hosted on AWS or Azure Cloud.

- Talend manages Cloud Engines.

- Cloud Engines employ TCP communication.
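The parallel execution rule in the list above (at most three different tasks per Cloud Engine, and never the same task twice on one engine) can be sketched as a small selection routine. This is a model for reasoning about capacity, not Talend's scheduler: the class `CloudEngineSketch` is hypothetical, and picking the first eligible engine is an assumption.

```java
import java.util.List;
import java.util.Set;

// Model of the Cloud Engine parallel execution rule; not Talend code.
final class CloudEngineSketch {
    static final int MAX_PARALLEL_TASKS = 3;

    // An engine can take a task if it has a free slot and is not already running that task.
    static boolean canAccept(Set<String> runningTasks, String task) {
        return runningTasks.size() < MAX_PARALLEL_TASKS && !runningTasks.contains(task);
    }

    // Returns the index of the first engine that can take the task, or -1 if none can.
    static int selectEngine(List<Set<String>> engines, String task) {
        for (int i = 0; i < engines.size(); i++) {
            if (canAccept(engines.get(i), task)) {
                return i;
            }
        }
        return -1;
    }
}
```

A result of -1 corresponds to the situation described above where a task cannot run because every available Cloud Engine is occupied.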

Remote Engine (RE)

A Remote Engine is a capability in Talend Cloud platform that allows you to securely run data integration Jobs natively from cloud to cloud, on-premises to cloud, or cloud to on-premises, completely within your environment for enhanced performance and security, without transferring the data through the Cloud Engines in Talend Cloud platform.

It is a Java-based runtime (similar to a Cloud Engine) that executes Talend Jobs on-premises or on another cloud platform that you control.

- Remote Engines allow you to run Jobs, Routes, and Data Service tasks.

- Data Service and Route Microservice tasks can only be deployed on Remote Engines. OSGi type deployments require that Talend Runtime version 7.1.1 or higher is installed and running on the same machine as the Talend Remote Engine.

- Remote Engines support configurable max parallel execution: by default, a maximum of three different tasks can run in parallel on the same Remote Engine. However, this is a modifiable configuration.

- Remote Engines can be grouped to form clusters called Remote Engine Cluster. Remote Engines added to a cluster cannot be used to execute tasks directly from Talend Studio.

- Remote Engines are hosted on-premises or on the cloud.

- You manage Remote Engines.

- Remote Engines employ HTTPS communication.

Remote Engine Gen2 (REG2)

A Remote Engine Gen2 is a secure execution engine on which you can safely execute data pipelines (that is, data flows designed using Talend Pipeline Designer). It allows you to have control over your execution environment and resources because you can create and configure the engine in your own environment (Virtual Private Cloud or on-premises). Previously referred to as Remote Engines for Pipelines, this engine was renamed Remote Engine Gen2 during H1/2020. It is a Docker-based runtime to execute data pipelines on-premises or on another cloud platform that you control.

A Remote Engine Gen2 ensures:

- Data processing in a safe and secure environment, because Talend never has access to your pipelines' data and resources

- Optimal performance and security by increasing the data locality instead of moving large data to computation

Cloud Engine for Design (CE4D)

Cloud Engine for Design is a built-in runner that allows you to easily design pipelines without setting up any processing engines. With this engine you can run two pipelines in parallel. For advanced processing of data, Talend recommends installing the secure Remote Engine Gen2.

- CE4Ds have limited system resources – memory usage: 8 GB

- CE4Ds support a maximum of two pipelines that can run in parallel on a single CE4D

- CE4Ds should be used only for design purposes; that is, you shouldn’t use them to execute data pipelines in a Production environment

Cloud Engine versus Remote Engine

The following table compares the two engines:

| Cloud Engine (CE) | Remote Engine (RE) |
| --- | --- |
| Consumes 45,000 engine tokens | Consumes 9,000 engine tokens |
| Runs within Talend Cloud platform – no download required | Downloadable software from Talend Cloud platform |
| Managed by Talend, run on-demand as needed to execute Jobs | Managed by the customer |
| No customer resources required | Customer can run on Windows, Linux, or OS X |
| Set physical specifications (Memory, CPU, Temp Disk Space) | Unlimited Memory, CPU, and Temp Space |
| Require data sources/targets to be visible through the internet to the Cloud Engine | Hybrid cloud or on-premises data sources |
| Restricted to three concurrent Jobs | Unlimited concurrent Jobs (default three) |
| Available within Talend Cloud portal | Available in AWS and Azure Marketplace |
| Runs natively within Talend Cloud iPaaS infrastructure | Uses HTTPS calls to Talend Cloud service to get configuration information and Job definition and schedules |

Cloud Engine for Design versus Remote Engine Gen 2

| Cloud Engine for Design (CE4D) | Remote Engine Gen 2 (REG2) |
| --- | --- |
| Consumes zero engine tokens | Consumes 9,000 engine tokens |
| Built upon a Docker-compose stack | Built upon a Docker-compose stack |
| Available as Cloud Image and instantiated in Talend Cloud platform on behalf of the customer | Available as an AMI Cloud Formation Template (for AWS) and Azure Image (for Azure) |
| Not available as downloadable software as this type of engine is only suitable for design using Pipeline Designer in Talend Cloud portal | Available as .zip or .tar.gz (for local deployment) |
| A Cloud Engine for Design is included with Talend Cloud platform, to offer a serverless experience during design and testing. However, it is not meant for production (that is, not for running pipelines in non-development environments). It won’t scale for prod-size volumes and long-running pipelines. It should be used for design teams to get a preview working and test execution during development. This engine should not be used for production execution. | It is used to run artifacts, tasks, preparations, and pipelines in the cloud, as well as creating connections and fetching data samples. |
| Static IPs cannot be enabled for CE4D within Talend Management Console | Not applicable as REG2 runs outside Talend Management Console (that is, in Customer Data Center) |

Need for additional engines

Additional engines (CE or RE) may be required if you have one or more of the following use cases:

- Continuous delivery – for example, Dev and QA separate from UAT and Production environments

- Data access - data is in two different private locations where an engine is needed in each site (or a mix of Cloud and Remote Engines)

- Scalability - concurrent Job volume requires additional engines, Jobs are complex and require significant memory or CPU

These use cases depend on the deployment architecture in the specific customer environment and layout of the Remote Engine at the environment or workspace level configurations. This would need proper capacity planning and automatic horizontal and vertical scaling of the compute Engines.

Cloud Engine - usage considerations

| Question | Guideline |
| --- | --- |
| How much data must be transferred per hour? | Each Cloud Engine can transfer 225 GB per hour. |
| How many separate flows can run in parallel? | Each Cloud Engine can run up to three flows in parallel. |
| How much temporary disk space is needed? | Each Cloud Engine has 200 GB of temp space. |
| How CPU and memory intensive are the flows? | Each Cloud Engine provides 8 GB of memory and two vCPU. This is shared among any concurrent flows. |
| Are separate execution environments required? | Many users desire separate execution for QA/Test/Development and Production. If this is needed, additional Cloud Engines should be added as required. |
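The first two guidelines lend themselves to a quick back-of-the-envelope estimate. The sketch below is a rough sizing aid under strong assumptions: the class `CloudEngineSizing` is hypothetical, the workload is assumed to split evenly across engines, and memory, CPU, temp space, and environment separation are not modeled.

```java
// Rough sizing arithmetic based on the throughput and parallel-flow guidelines only.
final class CloudEngineSizing {
    static final double GB_PER_HOUR_PER_ENGINE = 225.0;
    static final int PARALLEL_FLOWS_PER_ENGINE = 3;

    // Returns the larger of the two per-guideline estimates, and at least one engine.
    static int enginesNeeded(double gbPerHour, int parallelFlows) {
        int byThroughput = (int) Math.ceil(gbPerHour / GB_PER_HOUR_PER_ENGINE);
        int byFlows = (int) Math.ceil(parallelFlows / (double) PARALLEL_FLOWS_PER_ENGINE);
        return Math.max(1, Math.max(byThroughput, byFlows));
    }
}
```

For example, 500 GB per hour across four parallel flows gives three engines by throughput and two by flow count, so the estimate is three.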

Remote Engine – recommendations

If a source or target system is not accessible through the internet:

If one of the systems is not accessible using the internet, then a Remote Engine is needed.

When single flow requirements exceed the capacity of a Talend Cloud Engine:

If the Cloud Engine is too small (for example, the maximum memory of 5.25 GB, temporary space of 200 GB, two vCPU, or the maximum of 225 GB per hour), then a Remote Engine is needed.

If a native driver is required:

If the solution requires a native driver that is not part of the Talend action or Job generated code, then a Remote Engine is needed. Typical cases are SAP with the JCO v3 Library and MS SQL Server Windows Authentication.

Data jurisdiction, security, or compliance reasons:

It may be desirable or required to retain data in a particular region or country for data privacy reasons. The data being processed may be subject to regulations such as PCI or HIPAA, or it may be more efficient to process the data within a single data center or public cloud location. These are all valid reasons to use a Remote Engine.

Summary

| Cloud Engine (CE) | Remote Engine (RE) | Remote Engine Gen 2 (REG2) |
| --- | --- | --- |
| Cloud Engines allow you to run batch tasks that use on-premises or cloud applications and datasets (sources, targets) | Remote Engines allow you to run batch tasks or microservices (APIs or Routes) that use on-premises or cloud applications and datasets (sources, targets) | The Remote Engine Gen2 is used to run artifacts, tasks, preparations, and pipelines in the cloud, as well as creating connections and fetching data samples |
| Consumes 45,000 engine tokens | Consumes 9,000 engine tokens | Consumes 9,000 engine tokens |
| No download required - Runs within Talend Cloud platform | Downloadable software from Talend Cloud platform | Downloadable software from Talend Cloud platform |
| Managed by Talend, run on-demand as needed to execute Jobs | Managed by the customer | Managed by the customer |
| No customer resources required | Can run on Windows, Linux, or OS X | Require compatible Docker and Docker compose versions for Linux, Mac, and Windows |
| Set physical specifications (Memory, CPU, and Temp Disk Space) | Unlimited Memory, CPU, and Temp Space | Unlimited Memory, CPU, and Temp Space |
| Require data sources/targets to be visible through the internet to the Cloud Engine | Hybrid cloud or on-premises data sources | Hybrid cloud or on-premises data sources |
| Restricted to three concurrent Jobs | Unlimited concurrent Jobs (default three) | Unlimited concurrent pipelines (configurable) |
| Available within Talend Cloud portal | Available in AWS and Azure Marketplace | Available as an AMI Cloud Formation Template (for AWS) and Azure Image (for Azure) |
| Runs natively within Talend Cloud iPaaS infrastructure | Uses HTTPS calls to Talend Cloud service to get configuration information and Job definition and schedules | Uses HTTPS calls to Talend Cloud service to get configuration information and pipeline definition and schedules |


-

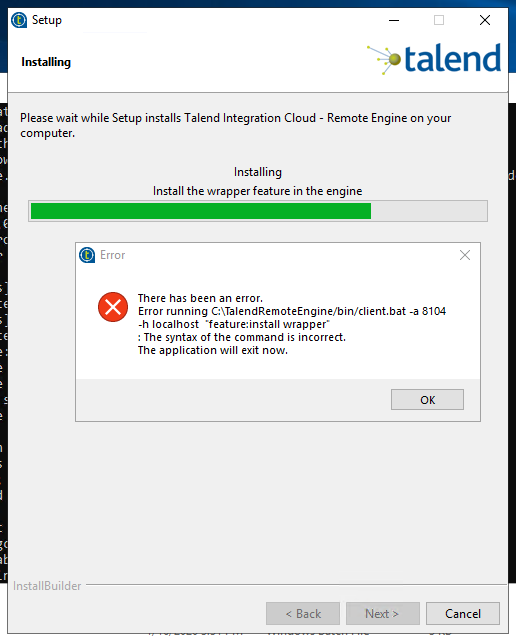

Talend Cloud: Remote Engine automatic and manual installation issues

When attempting to execute the automatic installer.exe for Remote Engine, on Windows Server 2019, it fails with the error:

Error running C:\TalendRemoteEngine/bin/client.bat -a 8104 -h localhost -u tadmin "feature:install wrapper"

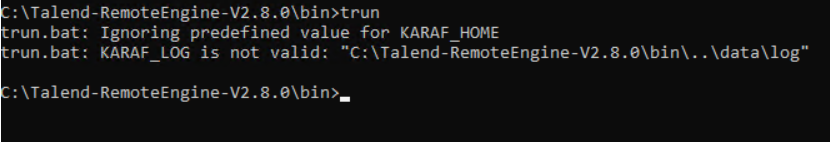

When attempting to run the Remote Engine manually by executing the trun command in the bin directory of the Remote Engine installation, the following error occurs:

Cause

The installer.exe itself can cause the error during the automatic run of the Remote Engine.

The error during the manual run of the Remote Engine occurs when the JAVA_HOME and PATH environment variables are not set up correctly on the machine, which can cause the batch files to fail at startup.

Resolution

The best way to avoid the error caused during the automatic run of the Remote Engine is to clear the existing Remote Engine installation and install it again manually with 7-Zip.

To avoid the error caused during the manual run of Remote Engine, set the JAVA_HOME and PATH environment variables according to the Setting up JAVA_HOME instructions available in Talend Cloud Installation Guide for Windows.
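Before rerunning the batch files, it can help to confirm what the environment actually contains. The sketch below is a hypothetical diagnostic (the class `JavaHomeCheck` is not part of Talend); it only checks that JAVA_HOME is set, that it has a bin directory, and that this directory appears on PATH.

```java
import java.io.File;
import java.util.regex.Pattern;

// Hypothetical diagnostic for the JAVA_HOME and PATH environment variables.
final class JavaHomeCheck {

    // Returns null when JAVA_HOME looks usable, otherwise a short description of the problem.
    static String problem(String javaHome) {
        if (javaHome == null || javaHome.trim().isEmpty()) {
            return "JAVA_HOME is not set";
        }
        File bin = new File(javaHome, "bin");
        if (!bin.isDirectory()) {
            return "JAVA_HOME has no bin directory: " + bin;
        }
        return null;
    }

    // True when one of the PATH entries is the bin directory under javaHome.
    static boolean binOnPath(String path, String javaHome) {
        if (path == null || javaHome == null) {
            return false;
        }
        File bin = new File(javaHome, "bin");
        for (String entry : path.split(Pattern.quote(File.pathSeparator))) {
            if (new File(entry).equals(bin)) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        String javaHome = System.getenv("JAVA_HOME");
        String issue = problem(javaHome);
        System.out.println(issue == null ? "JAVA_HOME looks usable" : issue);
        System.out.println("bin on PATH: " + binOnPath(System.getenv("PATH"), javaHome));
    }
}
```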

-

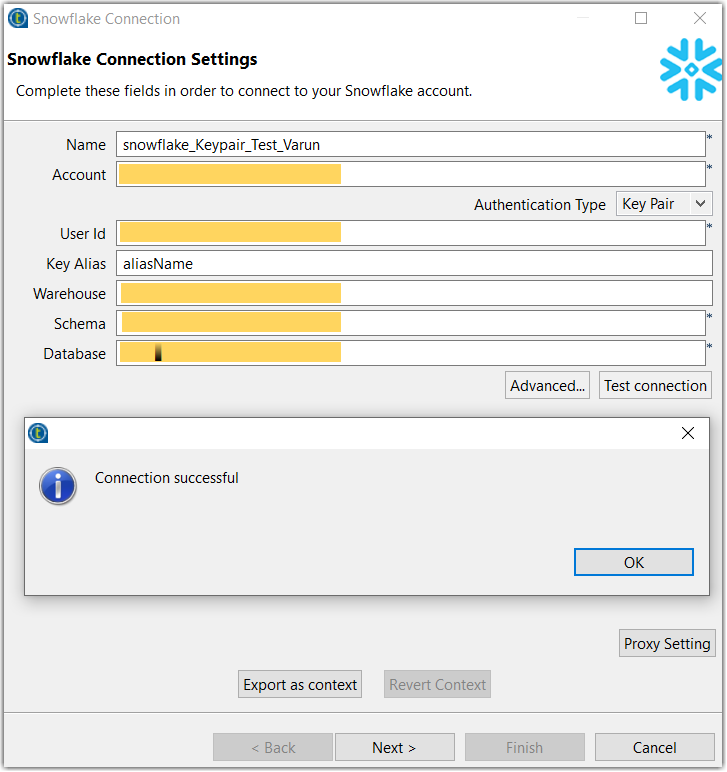

Talend Job using key pair authentication for Snowflake fails with a ‘Missing Key...

Running a Talend Job using a key pair authentication for Snowflake fails with the exception:

    Starting job Snowflake_CreateTable at 09:21 19/07/2021.
    [statistics] connecting to socket on port 3725
    [statistics] connected
    Exception in component tDBConnection_2 (Snowflake_CreateTable)
    java.lang.RuntimeException: java.io.IOException: Missing Keystore location
        at edw_demo.snowflake_createtable_0_1.Snowflake_CreateTable.tDBConnection_2Process(Snowflake_CreateTable.java:619)
        at edw_demo.snowflake_createtable_0_1.Snowflake_CreateTable.runJobInTOS(Snowflake_CreateTable.java:3881)
        at edw_demo.snowflake_createtable_0_1.Snowflake_CreateTable.main(Snowflake_CreateTable.java:3651)
    [FATAL] 09:21:38 edw_demo.snowflake_createtable_0_1.Snowflake_CreateTable- tDBConnection_2 java.io.IOException: Missing Keystore location
    java.lang.RuntimeException: java.io.IOException: Missing Keystore location
        at edw_demo.snowflake_createtable_0_1.Snowflake_CreateTable.tDBConnection_2Process(Snowflake_CreateTable.java:619) [classes/:?]
        at edw_demo.snowflake_createtable_0_1.Snowflake_CreateTable.runJobInTOS(Snowflake_CreateTable.java:3881) [classes/:?]
        at edw_demo.snowflake_createtable_0_1.Snowflake_CreateTable.main(Snowflake_CreateTable.java:3651) [classes/:?]

Cause

The Keystore path was not configured correctly at the Job or Studio level before the Snowflake metadata connection was created and then reused in the Jobs.

Resolution

To use key pair authentication for Snowflake, the Keystore settings must be configured in Talend Studio before connecting to Snowflake.

Configuring the Keystore at the Studio level

Perform one of the following options.

Option 1:

Update the appropriate Studio initialization file (Talend-Studio-win-x86_64.ini,Talend-Studio-linux-gtk-x86_64.ini,or Talend-Studio-macosx-cocoa.ini depending on your operating system), with the following settings:

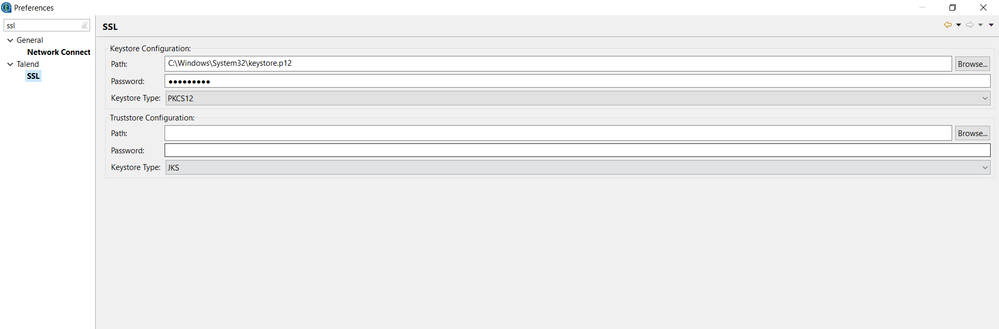

-Djavax.net.ssl.keyStore={yourPathToKeyStore} -Djavax.net.ssl.keyStoreType={PKCS12}/{JKS} -Djavax.net.ssl.keyStorePassword={keyStorePassword}Option 2:

-

Update the Keystore configuration in Studio SSL preferences with the required Path, Password, and Keystore Type.

-

Add the Key Alias to the Snowflake metadata.

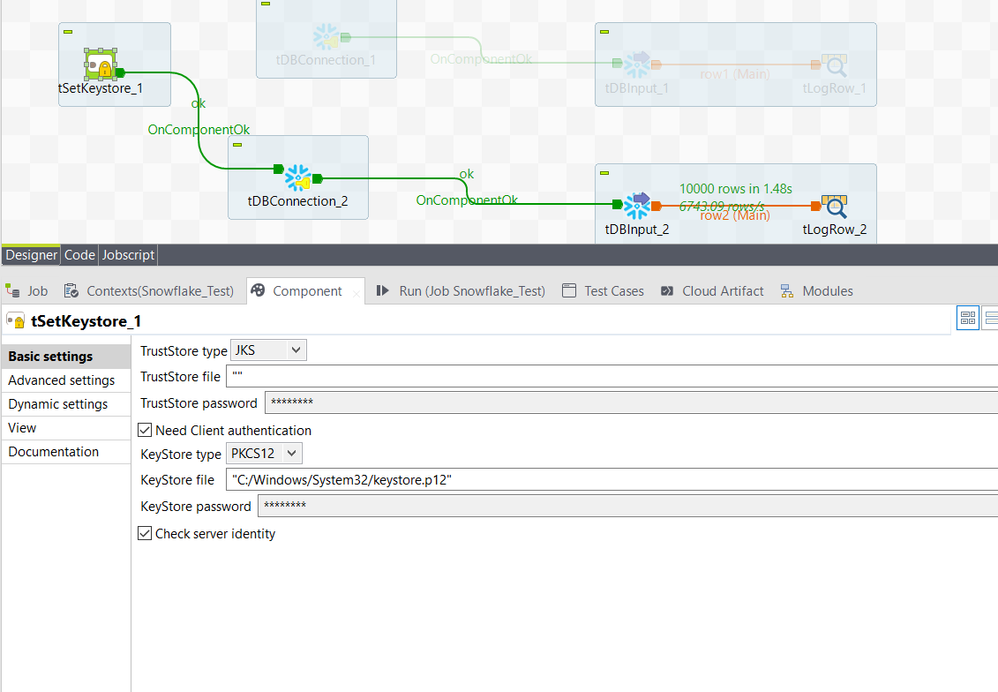

Configuring the Keystore at the Job level

Update the tSetKeystore component in your Job if you plan to run the Job locally, on a Remote Engine, or on a JobServer (the versions do not matter). Before selecting the Key Pair option for the tSnowflakeConnection component, configure key pair authentication on the Basic settings tab of the tSetKeystore component:

- Select JKS from the TrustStore type pull-down list.

- Enter " " in the TrustStore file field.

- Clear the TrustStore password field.

- Select the Need Client authentication check box.

- Enter the path to the Keystore file in double quotation marks in the KeyStore file field.

- Enter the Keystore password in the KeyStore password field.
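Before pointing Studio or tSetKeystore at a Keystore file, it can help to confirm that the file opens with the intended type and password. The following is a minimal sketch using the standard java.security.KeyStore API; the class name KeystoreCheck is an assumption for illustration, and the check only proves the file is readable, not that the key pair is registered in Snowflake.

```java
import java.io.FileInputStream;
import java.io.InputStream;
import java.security.KeyStore;

// Hypothetical helper: verifies that a keystore file can be opened.
class KeystoreCheck {

    // Returns false if the file is missing, the type is wrong, or the password is rejected.
    static boolean canOpen(String path, String type, char[] password) {
        try (InputStream in = new FileInputStream(path)) {
            KeyStore keyStore = KeyStore.getInstance(type);
            keyStore.load(in, password);
            return true;
        } catch (Exception e) {
            System.err.println("Cannot open keystore: " + e);
            return false;
        }
    }

    public static void main(String[] args) {
        // Reads the same javax.net.ssl.* JVM properties used in the Studio ini file.
        String path = System.getProperty("javax.net.ssl.keyStore");
        String type = System.getProperty("javax.net.ssl.keyStoreType", KeyStore.getDefaultType());
        String password = System.getProperty("javax.net.ssl.keyStorePassword", "");
        if (path == null) {
            System.out.println("javax.net.ssl.keyStore is not set");
            return;
        }
        System.out.println("Keystore opens: " + canOpen(path, type, password.toCharArray()));
    }
}
```

Run it with the same -D options you intend to put in the ini file, so that a typo in the path or type shows up before Studio is restarted.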

-

Can Power BI connect with Talend?

Question Can Talend talk, work, integrate, and communicate with Power BI? Answer Yes, Power BI has an API, so you can use an ESB tREST or...

Talend ESB: Use tHash components in ESB Runtime

Question Is it safe to use the tHashOutput and tHashInput components in SOAP/REST services or in Jobs called by routes? Answer tHashxxx components a...

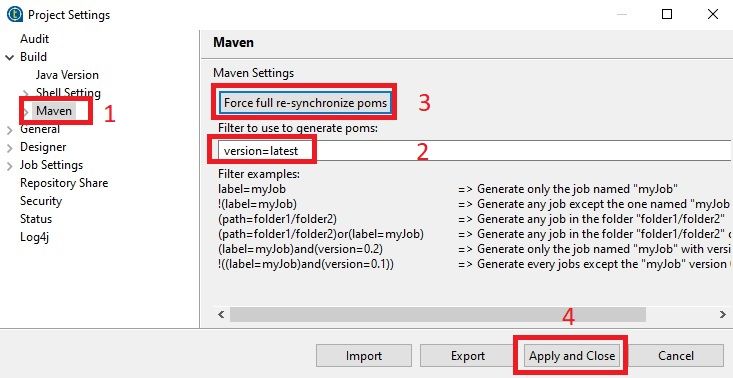

Talend Studio: How to set up a project's pom.xml to list only the latest version...

The project's master pom.xml file (located in the project_name\poms\ folder) lists all versions for each Job, Route, or service, for example:

<modules>
  ...
  <module>jobs/process/testJob_0.1</module>
  <module>jobs/process/testJob_0.2</module>
  <module>jobs/process/testJob_0.3</module>
  <module>jobs/process/testJob_0.4</module>
  ...
</modules>

Is there a way to have the project's master pom.xml file list only the latest version of each Job, Route, or service?

Answer

Yes. In Studio navigate to File > Edit Project properties > Build > Maven, then in the Filter to use to generate poms field enter version=latest. Click Force full re-synchronize poms then click Apply and Close.

Using this process on the example file in the question above returns a pom.xml file like this:

<modules>
  ...
  <module>jobs/process/testJob_0.4</module>
  ...
</modules>

You can also achieve this from the Talend CommandLine by entering the following command:

regenerateAllPoms -if (version=-1.-1)
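To illustrate the effect of the latest-version filter on the module list, here is a small sketch that keeps only the highest version of each module path. It is not how Studio implements the filter; it assumes module names follow the name_major.minor pattern shown in the example, and the class name is hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Illustrative only: mimics the result of filtering modules to the latest version.
class LatestModuleFilter {

    // Extracts {major, minor} from a path such as jobs/process/testJob_0.4.
    static int[] parseVersion(String module) {
        String version = module.substring(module.lastIndexOf('_') + 1);
        String[] parts = version.split("\\.");
        return new int[] { Integer.parseInt(parts[0]), Integer.parseInt(parts[1]) };
    }

    static boolean isNewer(String candidate, String current) {
        int[] a = parseVersion(candidate);
        int[] b = parseVersion(current);
        return a[0] != b[0] ? a[0] > b[0] : a[1] > b[1];
    }

    // Keeps one entry per module name, preserving first-seen order of the names.
    static List<String> keepLatest(List<String> modules) {
        Map<String, String> latestByName = new LinkedHashMap<>();
        for (String module : modules) {
            String name = module.substring(0, module.lastIndexOf('_'));
            String current = latestByName.get(name);
            if (current == null || isNewer(module, current)) {
                latestByName.put(name, module);
            }
        }
        return new ArrayList<>(latestByName.values());
    }

    public static void main(String[] args) {
        List<String> modules = Arrays.asList(
                "jobs/process/testJob_0.1",
                "jobs/process/testJob_0.2",
                "jobs/process/testJob_0.3",
                "jobs/process/testJob_0.4");
        System.out.println(keepLatest(modules));
    }
}
```

Versions are compared numerically rather than as text, so 0.10 counts as newer than 0.9.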

-

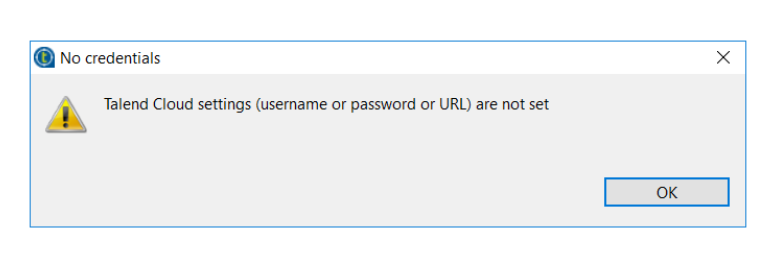

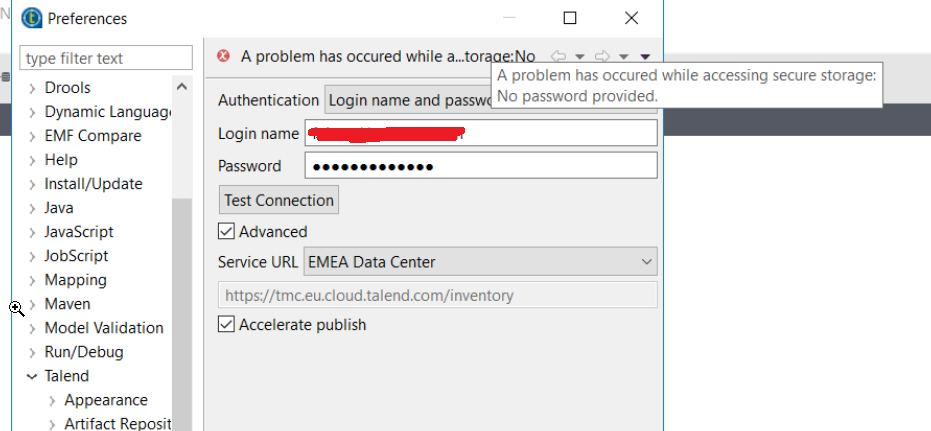

Publishing to Talend Cloud: 'A problem has occurred while accessing secure stora...

Publishing Jobs to the cloud in Studio results in the following error: When attempting to set up Talend Cloud credentials, you notice the following...

Where to define JAVA_HOME for Studio / TAC / JobServer / CommandLine

You may want to upgrade your Talend DI version, but require several versions to run on your machine simultaneously. The different versions will run on different JVM versions, so using the global JAVA_HOME variable is not an option. You must point the different DI elements to specific Java installs.

Resolution

For Talend Studio

- Stop Studio.

- In the installation folder of your Studio, where the executable you are using is located, edit the ini file that has the same name as your executable. For example, if you are starting Studio with Talend-Studio-win-x86_64.exe, edit Talend-Studio-win-x86_64.ini. At the beginning of the file, add two lines:

-vm
JDK path

Your updated file might look like this:

-vm
C:\Program Files\Java\jdk1.8.0_101\bin
-vmargs
-Xms512m
-Xmx4G
-Dfile.encoding=UTF-8

For CommandLine

- Stop the CommandLine service.

- Edit the file cmdline installation\TalendServices\conf\wrapper.conf.

- Find the line starting with wrapper.java.command.

- Change the path in that line to point to the Java executable you wish to use. It should look similar to this:

wrapper.java.command = C:/Java/jre1.8.0_101/bin/java.exe

- Make sure other instances of the key wrapper.java.command are commented out by having a # at the start of the line.

- Open Regedit, and under HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\talend-cmdline-6.2.1, edit the key ImagePath to replace the first argument with your desired java.exe path.

For JobServer

- Stop the JobServer service.

- Edit the file jobserver installation\TalendServices\conf\wrapper.conf.

- Find the line starting with wrapper.java.command.

- Change the path in that line to point to the Java executable you wish to use. It should look similar to this:

wrapper.java.command = C:/Java/jre1.8.0_101/bin/java.exe

- Make sure other instances of the key wrapper.java.command are commented out by having a # at the start of the line.

- Open Regedit, and under HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\talend-rjs-6.2.1, edit the key ImagePath to replace the first argument with your desired java.exe path.
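For both the CommandLine and JobServer wrapper.conf files, a quick way to confirm that only one wrapper.java.command entry is active is to scan the file for uncommented occurrences of the key. The sketch below does that; the class name is hypothetical and the parsing is a simplification that only handles key = value lines.

```java
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper: lists the active (uncommented) wrapper.java.command values.
class WrapperConfCheck {

    static List<String> activeJavaCommands(List<String> lines) {
        List<String> active = new ArrayList<>();
        for (String line : lines) {
            String trimmed = line.trim();
            if (trimmed.startsWith("#")) {
                continue; // commented-out entry
            }
            int eq = trimmed.indexOf('=');
            if (eq < 0) {
                continue;
            }
            // Match the exact key so that other wrapper.java.command.* keys are ignored.
            if (trimmed.substring(0, eq).trim().equals("wrapper.java.command")) {
                active.add(trimmed.substring(eq + 1).trim());
            }
        }
        return active;
    }

    public static void main(String[] args) throws Exception {
        // Pass the path to wrapper.conf as the first argument, or run without one for a demo.
        List<String> lines = args.length > 0
                ? Files.readAllLines(Paths.get(args[0]), StandardCharsets.ISO_8859_1)
                : Arrays.asList(
                        "#wrapper.java.command = java",
                        "wrapper.java.command = C:/Java/jre1.8.0_101/bin/java.exe");
        List<String> active = activeJavaCommands(lines);
        System.out.println("Active wrapper.java.command entries: " + active);
        if (active.size() != 1) {
            System.out.println("Expected exactly one active entry; comment out the others with #.");
        }
    }
}
```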

For TAC

- Stop TAC.

- Find the name of the TAC service: in the Windows Services interface, double-click the Talend Administration Center service to open its configuration, and note the Service name shown in the General tab.

- Open a command prompt as an administrator.

- Navigate to the tac > apache-tomcat > bin folder, for example C:\Talend\6.2.1\tac\apache-tomcat\bin.

- Run the following command, using the service name you found earlier, in this case talend-tac-6.2.1:

tomcat8w.exe //ES//talend-tac-6.2.1

This opens a configuration GUI.

- In the Java tab, modify the Java Virtual Machine to point it to your desired installation. It must point to the jvm.dll in the server folder.

Environment:

Talend Data Integration version 6.2.1.

-

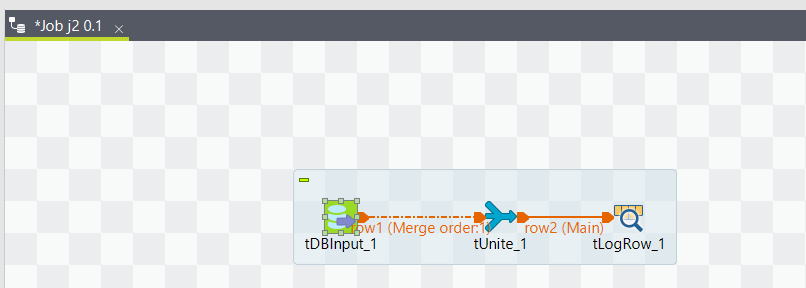

line output for TCK connectors

Since Talend JDBC components started using the new tcompv0 framework, the following error can occur when using the merge connector of tJDBCInput:

java.lang.RuntimeException: Missing output connection for component JDBC#Input

Scenario: using merge line to connect tJDBCInput with tUnite

Temporary workaround: add an intermediate javajet component between tDBInput and tUnite

Permanent solution: install R2023-11v2

URL: https://update.talend.com/Studio/8/updates/R2023-11v2/

-

TMC Error Failed to save plan.

Problem:

In Talend Management Console in the cloud, you may receive the following error message when trying to save your plan.

Error

Failed to save plan.

You have checked the following:

1. You have checked your workspace permissions and you have Author, Execute, Manage, and Publish checked.

Found in Environments | Workspace permissions

2. The task being added is able to be run with no errors.

3. The plan can be run without errors.

4. You are only getting the error with that specific plan.

Workaround:

This issue is caused by certain string patterns in the Plan description.

Keywords such as "Update" or "Insert" in the description trigger the error, because the security layer detects them as SQL injection.

As a workaround, modify the Plan description to avoid keywords such as "Update" or "Insert".
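If you maintain many plans, a simple pre-check of the description text can flag wording likely to trip the filter. The sketch below looks only for the two keywords named in this article; the full list used by the security layer is not documented here, and the case-insensitive substring matching is an assumption chosen to be conservative.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Locale;

// Hypothetical pre-check for plan descriptions; not part of Talend Management Console.
class PlanDescriptionCheck {

    // Only these two keywords are named in the article; others may exist.
    static final List<String> KEYWORDS = Arrays.asList("update", "insert");

    // Returns the keywords found anywhere in the description, ignoring case.
    static List<String> findKeywords(String description) {
        List<String> found = new ArrayList<>();
        if (description == null) {
            return found;
        }
        String lower = description.toLowerCase(Locale.ROOT);
        for (String keyword : KEYWORDS) {
            if (lower.contains(keyword)) {
                found.add(keyword);
            }
        }
        return found;
    }

    public static void main(String[] args) {
        String description = "Nightly Update of customer records";
        System.out.println("Keywords to reword: " + findKeywords(description));
    }
}
```

Because it matches substrings, words such as "updated" are flagged as well, which errs on the side of rewording.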

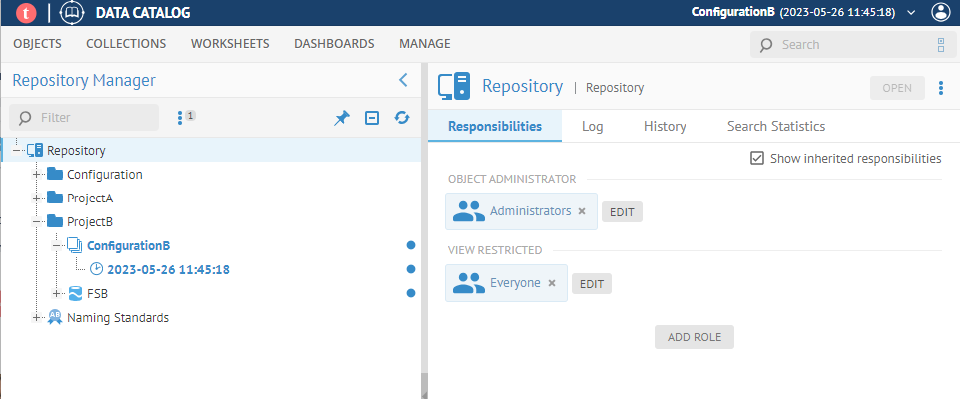

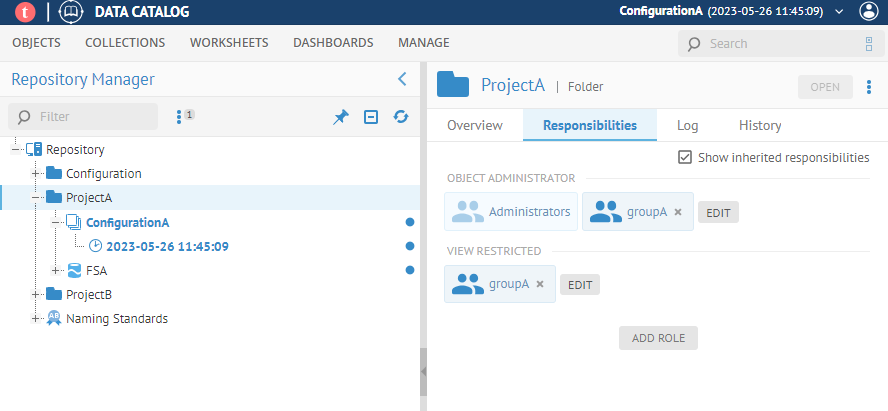

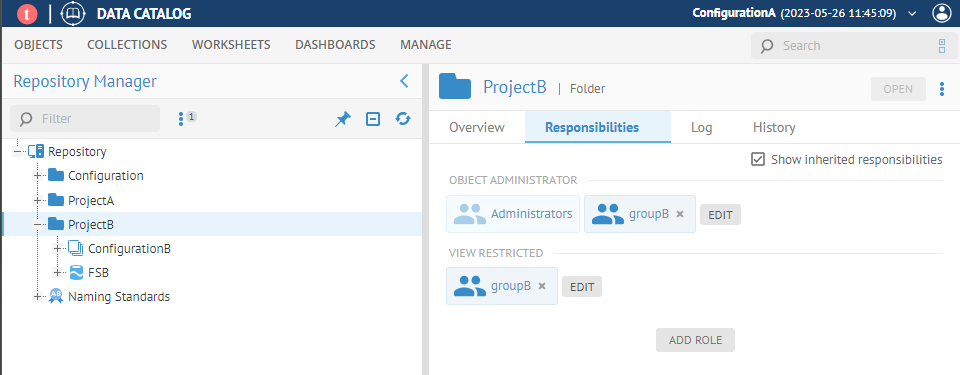

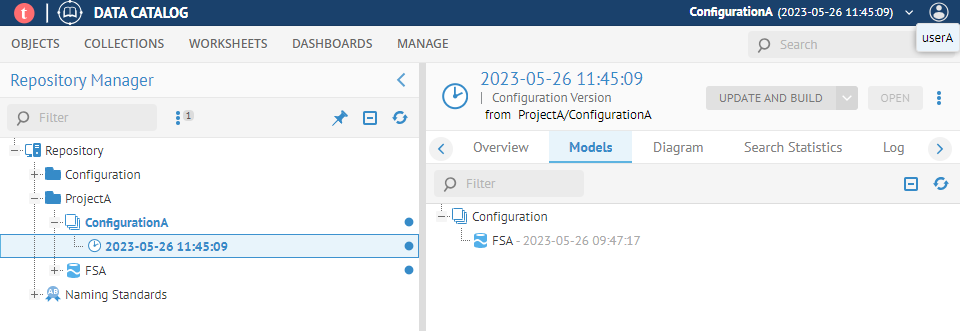

How to restrict viewing in Talend Data Catalog at the folder level

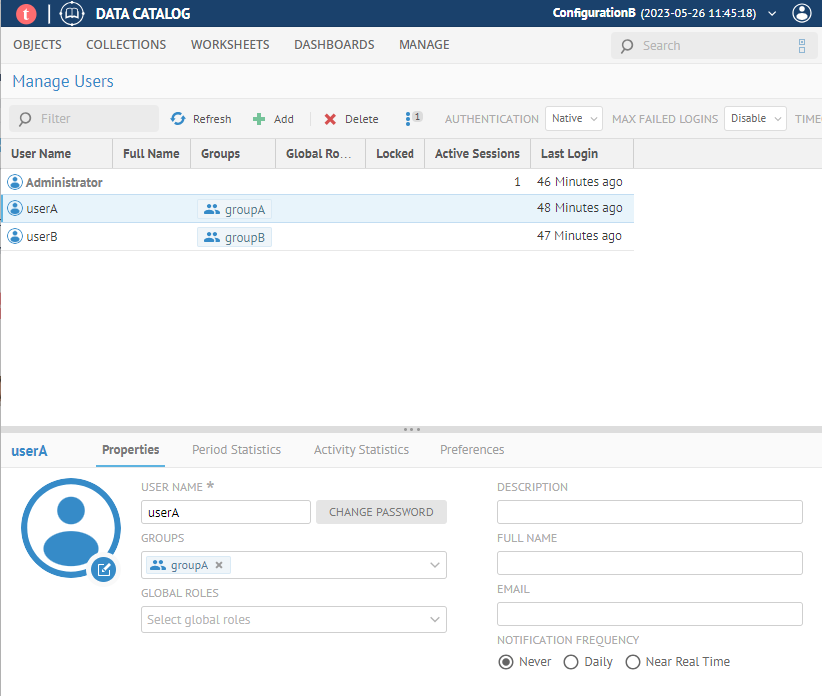

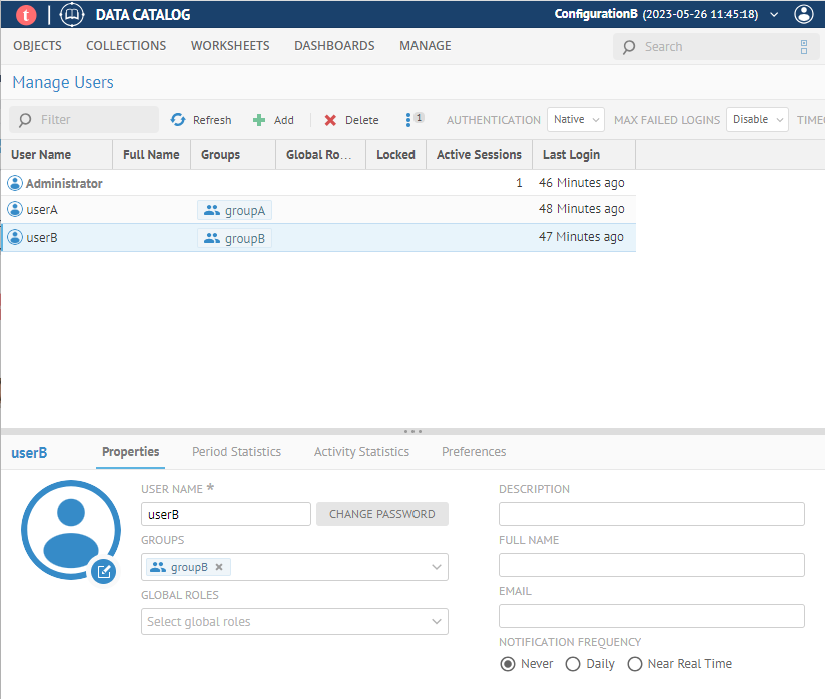

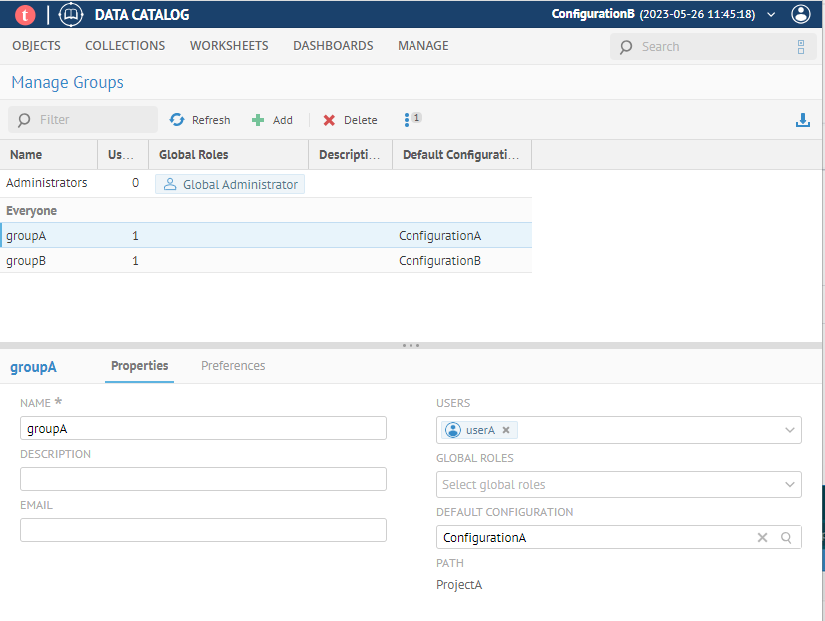

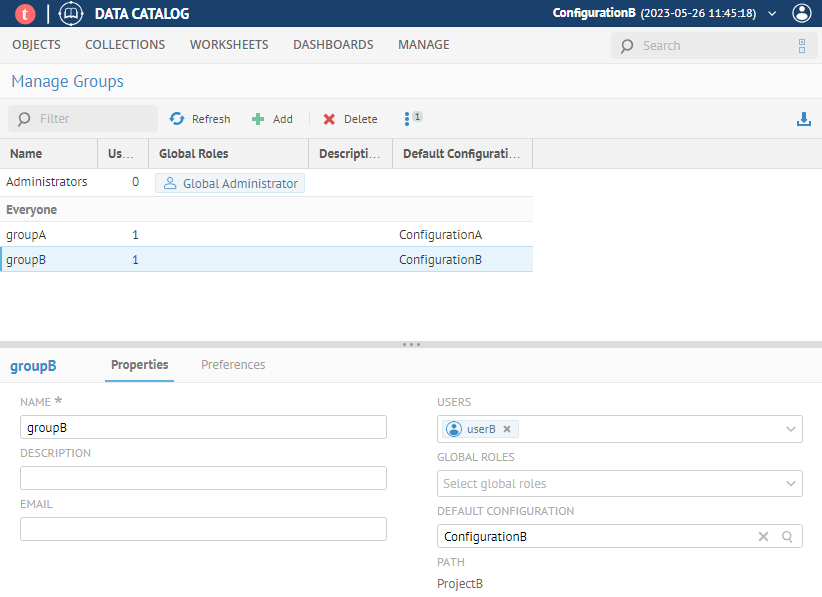

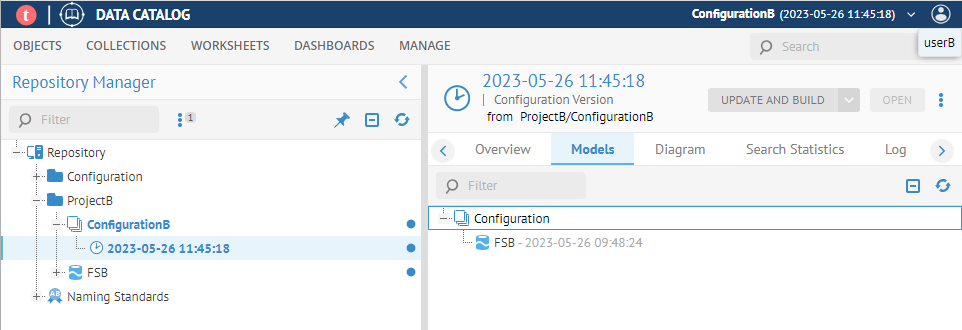

This article describes how to configure Talend Data Catalog (TDC) to restrict viewing capability on folders to a specific set of users using Restricted View object roles.

For more information, see Managing object roles available in Talend Help Center.

To demonstrate how to configure the permissions, consider a simple use case with two users, where:

- UserA must have viewing capability on all folders except ProjectB.

- UserB must have viewing capability on all folders except ProjectA.

- Configure UserA to be a member of groupA.

- Configure UserB to be a member of groupB.

- Configure groupA with the Default Configuration, ConfigurationA.

- Configure groupB with the Default Configuration, ConfigurationB.

- Ensure the View Restricted role for the Repository's main folder is set to the Everyone group.

- Configure the ProjectA folder with the View Restricted object role set to groupA.

- Configure the ProjectB folder with the View Restricted object role set to groupB.

- Log in as UserA and make sure you don't see ProjectB.

- Log in as UserB and make sure you don't see ProjectA.

-

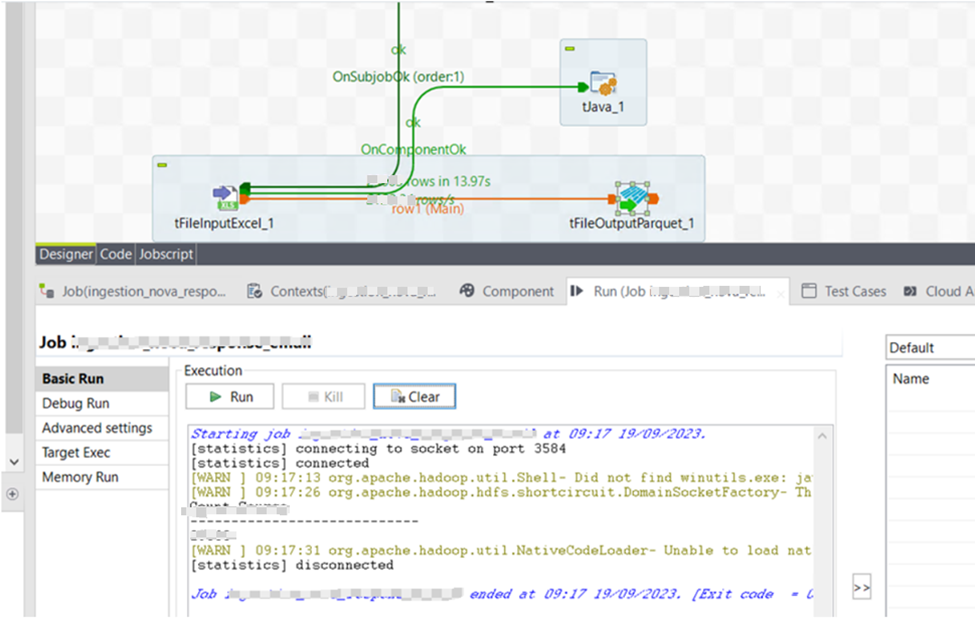

tFileOutputParquet - java.lang.NullPointerException: name should not be null

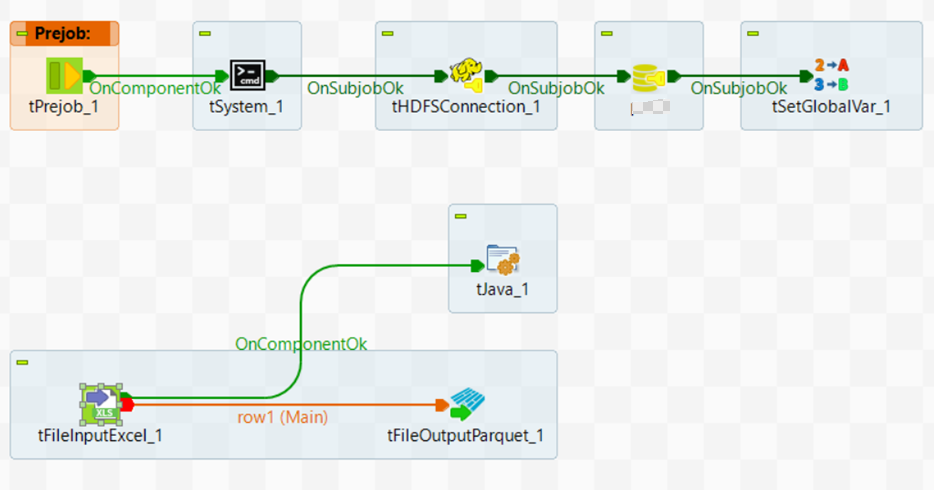

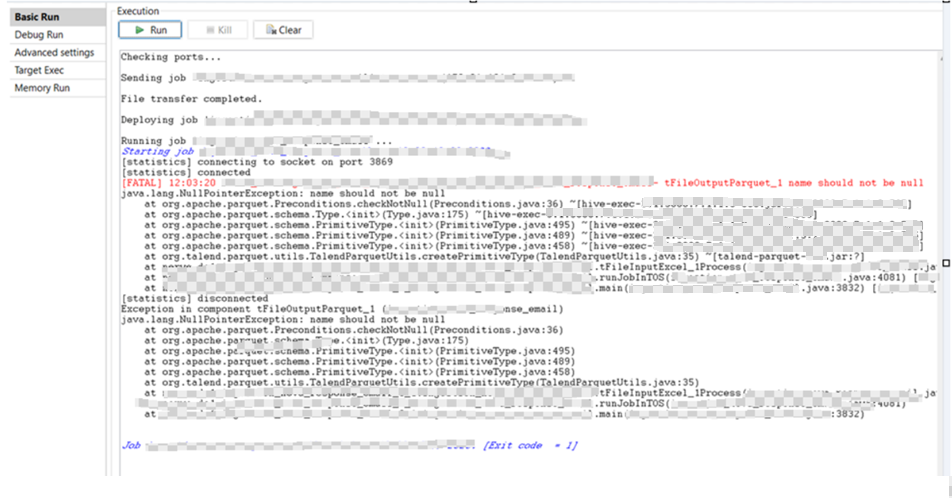

[Background]

-Talend Studio 7.3.1 or Talend Studio 8.0.1

[Problem]

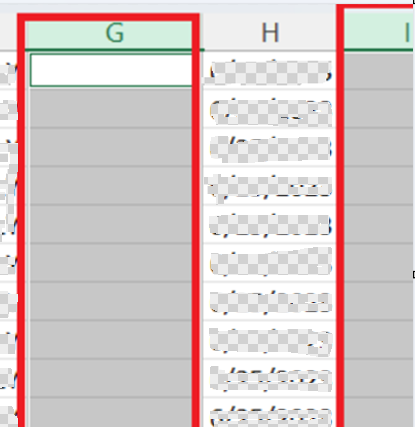

-We have a workflow that converts Excel files to Parquet, using tFileInputExcel with a dynamic schema

-Run the job, and it gives us: name should not be null

[Reason]

-There are some empty columns in the source Excel file:

[Workaround]

-With a dynamic schema, tFileInputExcel needs the first line to guess the schema.

-The source Excel file in the example Job has no real header. Add a tJavaFlex component to transform the Dynamic metadata with:

To the start code:

boolean isFirst = true;

To the main code:

if(isFirst) {
    isFirst = false;
    Dynamic newDyn = row1.newColumn.clone(false);
    for(DynamicMetadata meta : newDyn.metadatas) {
        // reset column name
        String newColumnName = "field"+meta.getColumnPosition();
        meta.setName(newColumnName);
        meta.setDbName(newColumnName);
        System.out.println("New dynamic field name: '"+ meta.getDbName()+"' ");
    }
    row2.newColumn = newDyn;
}

After that, the job works.

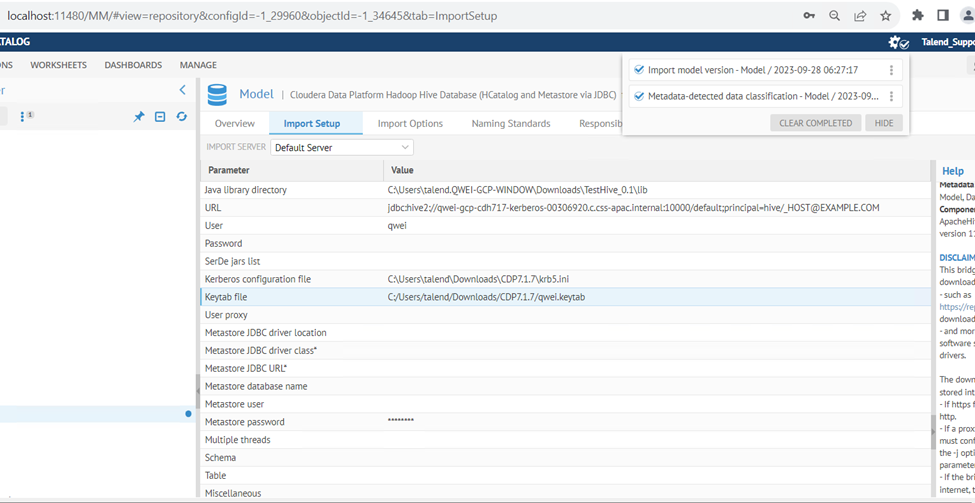

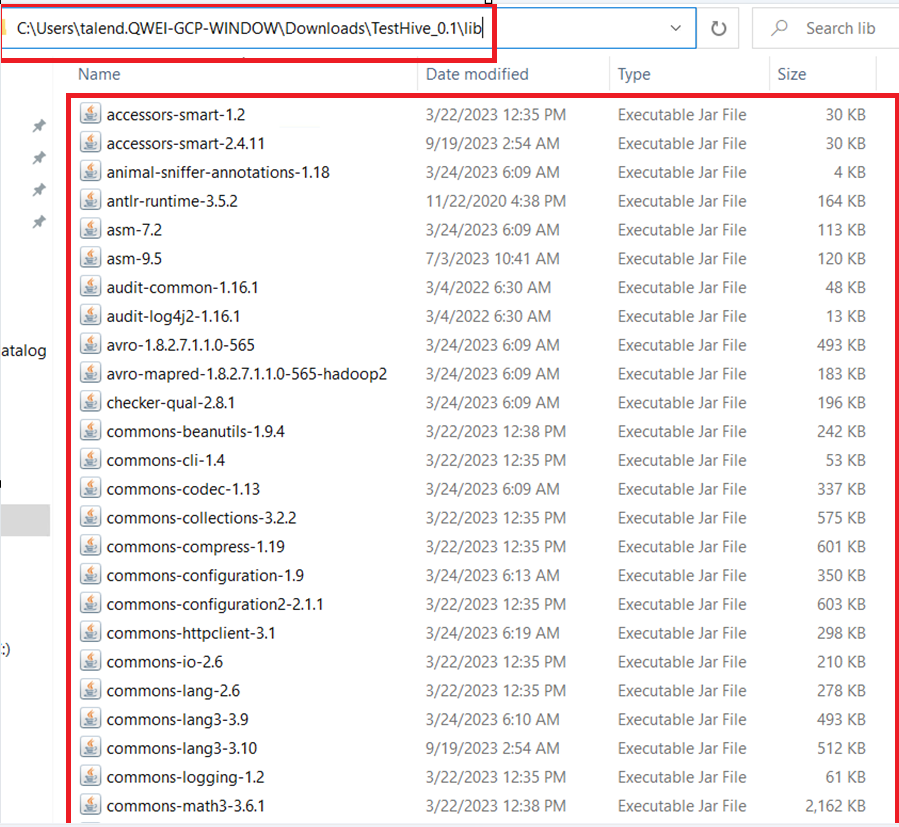

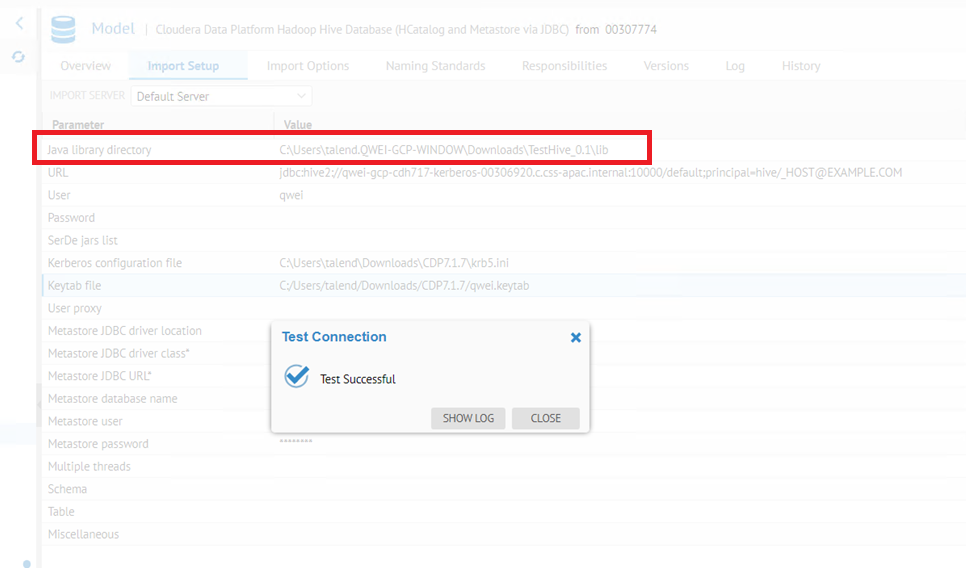

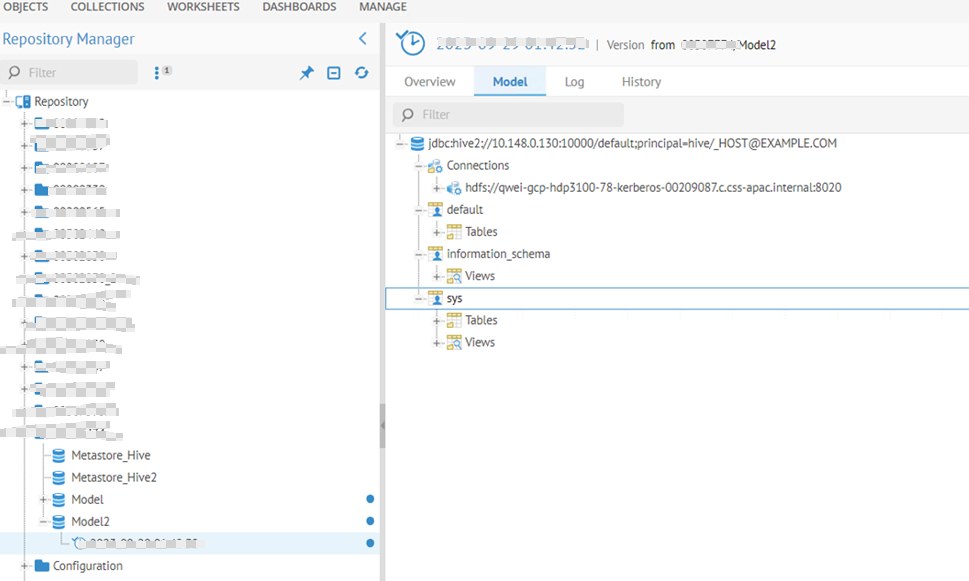

TDC harvest Cloudera Data Platform Hadoop Hive Database

[Background]

-TDC 8.0.1

-CDP 7.1.7

[Requirement]

-How to create a Cloudera Data Platform Hadoop Hive Database model in TDC

Create a Hive model. The configuration details of each parameter are shown in the following figure:

Hive is not a relational database, and its JDBC connection requires many dependencies that are impractical to locate one by one. Instead, I used Talend Studio to create a Job designed as tHiveConnection -> tJava (OnSubjobOk), then built the Job to generate all the libraries needed for the Hive connection, and placed that library folder in the Java library directory of the Hive model.

Then we can harvest the Hive database structure:

Preventing DeadLock in Tac's database

The TAC Webapp is designed to make modifications in the TAC DB in an isolated way (no conflicts between the different modifications). Because the setting:

database.server.selectForUpdate.enabled=true

is of no use to the TAC Webapp and is known to cause issues, it is recommended to set it to false for TAC:

database.server.selectForUpdate.enabled=false

Other operations that might be required:

1. Clear the temp and work folders under Tomcat

2. Restart TAC

3. Set the task action on unavailable JobServer to "Reset" task instead of "Wait" indefinitely