
Product Innovation

By reading the Product Innovation blog, you will learn about what's new across all of the products in our growing Qlik product portfolio.

Support Updates

The Support Updates blog delivers important and useful Qlik Support information about end-of-product support, new service releases, and general support topics.

Qlik Academic Program

This blog was created for professors and students using Qlik within academia.

Community News

Hear it from your Community Managers! The Community News blog provides updates about the Qlik Community Platform and other news and important announcements.

Qlik Digest

The Qlik Digest is your essential monthly low-down of the need-to-know product updates, events, and resources from Qlik.

Qlik Learning

The Qlik Learning blog offers information about the latest updates to our courses and programs, as well as insights from the Qlik Learning team.

Recent Blog Posts


Upgrade advisory for Qlik Sense on-premise May and November 2025: ODBC-based con...

During recent testing, Qlik has identified an issue that can occur after upgrading Qlik Sense on-premise to specific releases. While the upgrade completes successfully, some environments may experience problems with ODBC-based connectors after the upgrade.

The issue is upgrade path dependent and relates to connector components that are included as part of the Qlik Sense client-managed installation.

Recommendation: After upgrading Qlik Sense on-premise, verify your connector functionality as part of your post-upgrade checks, especially when upgrading from earlier Qlik Sense Enterprise on Windows May 2025 releases.

How can I identify if I am affected?

The issue can typically be identified by files being missing after the upgrade. In this example, the Athena connector is not working, and the following file is missing:

C:\Program Files\Common Files\Qlik\Custom Data\QvOdbcConnectorPackage\athena\lib\AthenaODBC_sb64.dll

In this example, all ODBC connectors stopped working:

C:\Program Files\Common Files\Qlik\Custom Data\QvOdbcConnectorPackage\QvxLibrary.dll

With the QvxLibrary.dll missing, both existing and newly created ODBC connections will fail.
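
A post-upgrade check for these files can be scripted. The following is a minimal Node.js sketch, not an official Qlik tool: it takes the two paths shown above and reports which ones are absent. The function name `findMissingFiles` and its injectable `exists` parameter are illustrative assumptions; extend the list with the driver files of the connectors you actually use.

```javascript
const fs = require('fs');

// Paths taken from the two examples above; add the files for your own connectors.
const CONNECTOR_FILES = [
  'C:\\Program Files\\Common Files\\Qlik\\Custom Data\\QvOdbcConnectorPackage\\QvxLibrary.dll',
  'C:\\Program Files\\Common Files\\Qlik\\Custom Data\\QvOdbcConnectorPackage\\athena\\lib\\AthenaODBC_sb64.dll',
];

// Returns the subset of `paths` that does not exist.
// `exists` is injectable (hypothetical helper parameter) so the check can be
// exercised on a machine without Qlik Sense installed.
function findMissingFiles(paths, exists = fs.existsSync) {
  return paths.filter((p) => !exists(p));
}

const missing = findMissingFiles(CONNECTOR_FILES);
console.log(
  missing.length === 0
    ? 'All listed connector files are present.'
    : `Missing connector files:\n${missing.join('\n')}`
);
```

Run it on the Qlik Sense server after the upgrade. Any path it lists is a candidate for the workaround described below.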

How do I resolve the issue?

A fix will be delivered in upcoming patches. Stay up to date with the most recent version by reviewing our Release Notes.

Workaround

If your connectors have been impacted by this upgrade, roll back your ODBC connector package to the previously working version using a pre-upgrade backup. See How to manually upgrade or downgrade the Qlik Sense Enterprise on Windows ODBC Connector Packages for details.

The workaround is intended to be temporary. Apply the fixed Qlik Sense Enterprise on Windows patch for your respective version as soon as it becomes available.

If you have any questions, we're happy to assist. Reply to this blog post or take your queries to our Support Chat.

Thank you for choosing Qlik,

Qlik Support

Streamlining user types in Qlik Cloud capacity-based subscriptions

Hello Qlik Cloud Admins!

As part of our ongoing commitment to provide the best possible experience for Qlik Cloud users, we are removing the Basic and Full User construct from tenants on capacity-based subscriptions, simplifying to just User.

Why are we doing this?

User capabilities on capacity-based tenants are governed by access control. Thus, a difference in user type designation is no longer required to determine what a user can do in the tenant.

What changes are being made?

- Any user authenticating to a capacity-based tenant will be considered a Full User. The concept of a Basic User is removed from the product. Any users purchased under capacity entitlement will be available as Full Users. Full User consumption is deducted from the total number of user capacity purchased.

- The auto-assignment toggles in the Qlik Cloud Administration Center > Settings > Entitlements will be removed from the product.

To grant these roles to everyone in the tenant, use the Auto assign option in Manage users > Permissions.

How does this impact you?

Current access control configuration for existing users remains unchanged. You may have to modify the User Default role, assign users to built-in roles, or create new custom roles to support access to tenant features and capabilities. See Roles and permissions for users and administrators for information on your tenant’s access control system.

When will this change take effect?

This is targeted for February 2nd, 2026.

If you have any questions, we're happy to assist. Reply to this blog post or take your queries to our Support Chat.

Thank you for choosing Qlik,

Qlik Support

Qlik’s Response to Apache Tika vulnerability CVE-2025-66516

In December 2025, the Apache Project announced a vulnerability in Apache Tika (CVE-2025-66516) and provided patches to resolve the issue. Qlik has been reviewing our usage of the Apache Tika product suite and has identified a limited impact as follows.

Affected Software

Apache Tika is used in several Qlik products. However, the vulnerability is only relevant to the case of a Talend Studio route that uses Apache Tika to parse PDFs.

No other use case or product is impacted by the vulnerability. Qlik Cloud and Talend Cloud are not impacted by this vulnerability.

Nevertheless, we are patching all our products that contain Apache Tika out of an abundance of caution. Be on the lookout for a series of product patches for supported and affected versions.

Recommendation

The releases listed in the table below contain the updated version of Apache Tika, which addresses CVE-2025-66516.

Always update to the latest version. Before you upgrade, check if a more recent release is available.

| Product | Patch | Release Date |
| --- | --- | --- |
| Talend Studio | R2025-11v2 | December 16, 2025 |
| Talend Administration Center | QTAC-1472 | December 19, 2025 |
| Talend ESB Runtime | R2025-12-RT | December 19, 2025 |
| Talend Remote Engine Gen 2 | Connectors 1.58.8 | December 23, 2025 |
| Talend Data Stewardship | TPS-6013 | December 23, 2025 |
| Talend Data Preparation | TBD | TBD |

Thank you for choosing Qlik,

Qlik Support

Multitenant Provisioning is now available!

Multitenant Provisioning is now available for our OEM Partners, Solution Provider Partners (ISVs), and Enterprise Customers with multitenant requirements.

Developing Word and PowerPoint reports with the expanded Microsoft O365 Add-in f...

Qlik's O365 Add-in offering for report developers has expanded with two new add-ins to enable Word document and PowerPoint presentation analytic reports.

Report developers can now:

- Compose document or portrait style reports with data from a Qlik Sense app with an output format of .docx or .PDF (using the new Microsoft O365 Add-in for Word).

- Create PowerPoint presentation analytic reports from a Qlik Sense app with an output of .pptx or .PDF (using the new Microsoft O365 Add-in for PowerPoint).

How do I get started?

Qlik add-ins for Microsoft Office are installed using a manifest file. If you are using an existing manifest, you will need to download and deploy an updated file to access the new add-ins. See the deployment guide Deploying and installing Qlik add-ins for Microsoft Office.

The manifest covers all three productivity tool Add-ins. They cannot be deployed individually.

Notes on the PowerPoint add-in use

Qlik’s integration testing of Microsoft PowerPoint APIs shows that the O365 Add-in for PowerPoint can be unstable or slow at times. Our investigation with Microsoft reveals this to be a known challenge; some APIs on web vs desktop can result in different behaviors.

If your report developers have difficulty with the online PowerPoint Add-in, contact Qlik Support to open a case with us.

While we investigate the integration with Microsoft to determine if a solution is possible, consider developing your reports with the desktop version of PowerPoint.

For Qlik NPrinting Customers

- Watch for an upcoming Qlik NPrinting release in 2026 that will support Word and PowerPoint template export of compatible templates to Qlik Cloud Analytics.

- Please note that due to Microsoft API limitations, it is not possible to embed data into an Excel table within the PowerPoint template to then produce native PowerPoint visualizations in the report. Refer to the help documentation for any other Word and PowerPoint report authoring limitations. See Qlik Reporting Service specifications and limitations for details.

If you have any questions, we're happy to assist. Reply to this blog post or take your queries to our Support Chat.

Thank you for choosing Qlik,

Qlik Support

Starting 2026 Strong: Empowering Data Analytics Education Across EMEA

As we step into 2026, I want to take a moment to reflect on the year behind us and look ahead to what’s coming for the Qlik Academic Program across EMEA. 2025 was a strong and rewarding year marked by meaningful collaboration with educators, growing student engagement, and a shared commitment to bringing real-world data analytics into the classroom. Our goal for 2026 is simple: to build on that momentum and continue supporting academic institutions across the region in even more impactful ways.

Qlik Sense Migration: Migrating your Entire Qlik Sense Environment

Hi everyone,

For various and valid reasons, you might need to migrate your entire Qlik Sense environment, or part of it, somewhere else.

In this post, I’ll cover the most common scenario: a complete migration of a single or multi-node Qlik Sense system, with the bundled PostgreSQL database (Qlik Sense Repository Database service) in a new environment.

So, how do we do that?

- Introduction and preparation

- Backup your old environment

- Deploy and restore the new central environment

- What about my rim nodes?

- Finalizing your migration

- Data Connection

- Licensing

- Best practices

If direct assistance is needed and you require hands-on help with a migration, engage Qlik Consulting. Qlik Support cannot provide walk-through assistance with server migrations outside of a post-installation and migration completion break/fix scenario.

Introduction and preparation

Let’s start with a little bit of context: say that we are running a three-node Qlik Sense environment (Central node / Proxy-Engine node / Scheduler node).

On the central node, I also have the Qlik shared folder and the bundled Qlik Sense Repository Database installed.

If you have previously unbundled your PostgreSQL install, see How To migrate a Qlik Sense Enterprise on Windows environment to a different host after unbundling PostgreSQL for instructions on how to migrate.

This environment has been running well for years, but I now need to move it to brand-new hardware for better performance. It’s not possible to reinstall everything from scratch because the system has been heavily used and customized already. Redoing all of that to replicate the environment is too difficult and time-consuming.

I start by going through a checklist to verify that the new system I’m migrating to is up to it:

- Do I meet the system requirements? Qlik Sense System Requirements

- Am I following virtualization best practices? Virtualization Best Practices In QlikView And Qlik Sense

And then I move right over to…

Backup your old environment

The first step to migrate your environment in this scenario is to back it up.

To do that, I recommend following the steps documented on help.qlik.com (make sure to select your Qlik Sense version in the top left of the screen).

Once the backup is done you should have:

- A backup of the database in .tar format

- A backup of the content of the file share which includes your applications, application content, archived logs, extensions,…

- Backups of any data source files that need to be migrated and are not stored in the shared folder like QVDs

Then we can go ahead and…

Deploy and restore the new central environment

The next steps are to deploy and restore your central node. In this scenario, we will also assume that the new central node will have a different name than the original one (just to make things a bit more complicated 😊).

Let’s start by installing Qlik Sense on the central node. That’s as straightforward as any other fresh install.

You can follow our documentation. Before clicking on Install simply uncheck the box “Start the Qlik Sense services when the setup is complete.”

The version of Qlik Sense you install MUST be the same as the version the backup was taken from.

Now that Qlik Sense is deployed, you can restore the backup you took earlier into your new Qlik Sense central node by following Restoring a Qlik Sense site.

Since the central node server name has also changed, you need to run a Bootstrap command to update Qlik Sense with the new server name. Instructions are provided in Restoring a Qlik Sense site to a machine with a different hostname.

The central node is now almost ready to start.

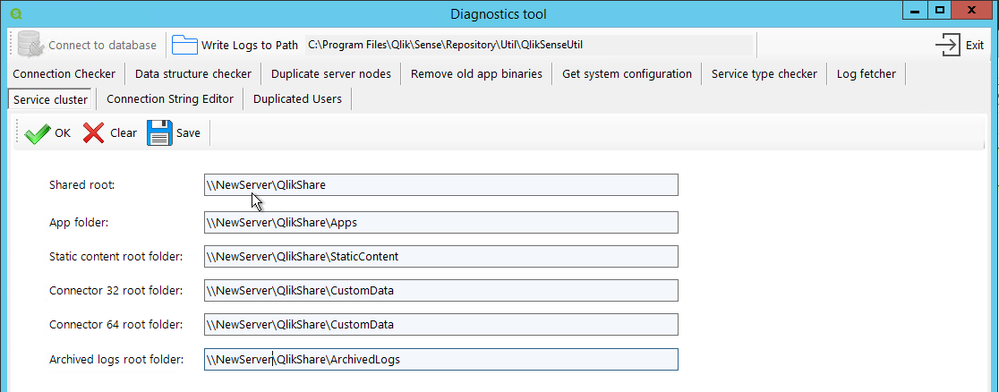

If you have changed the Qlik Share location, then the UNC path has also changed and needs to be updated.

To do that:

- Go to C:\Program Files\Qlik\Sense\Repository\Util\QlikSenseUtil

- Run QlikSenseUtil.exe as Administrator

- Click on Connect to the database and enter the credentials to connect to the new PostgreSQL database

- Click on Service Cluster and press OK. This should display the previously configured UNC Path

- Update each path, save, and start or restart all the Qlik Sense services.

At this point, make sure you can access the Qlik Sense QMC and Hub on the central node. Finally, check that you can load applications (using the central node engine, of course). You can also check in the QMC > Service Cluster that the changes you previously made have been correctly applied.

Troubleshooting tips: If after starting the Qlik Sense services, you cannot access the QMC and/or Hub please check the following knowledge article How to troubleshoot issue to access QMC and HUB

What about my rim nodes?

You’ve made it here?! Then congratulations you have passed the most difficult part.

If you had already run and configured rim nodes in your environment that you now need to migrate as well, you might not want to remove them from Qlik Sense to add the new ones since you will lose pretty much all the configuration you have done so far on these rim nodes.

By applying the following few steps I will show you how to connect to your “new” rim node(s) and keep the configuration of the “old” one(s).

Let’s start by installing Qlik Sense on each rim node like it was a new one.

The process is pretty much the same as installing a central node except that instead of choosing “Create Cluster”, you need to select “Join Cluster”

Detailed instructions can be found on help.qlik.com: Installing Qlik Sense in a multi-node site

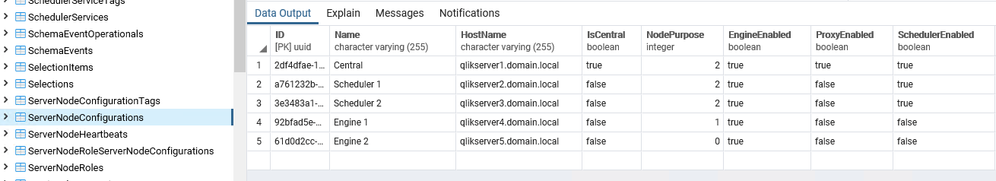

Once Qlik Sense is installed on your future rim node(s) and the services are started, we will need to connect to the “new” Qlik Sense Repository Database and change the hostname of the “old” rim node(s) to the “new” one so that the central node can communicate with it.

To do that, install PGAdmin4 and connect to the Qlik Sense Repository Database. Detailed instructions are in the knowledge article Installing and Configuring PGAdmin 4 to access the PostgreSQL database used by Qlik Sense or NPrinting.

Once connected, navigate to Databases > QSR > Schemas > public > Tables

You need to edit the LocalConfigs and ServerNodeConfigurations tables and change the Hostname of your rim node(s) from the old one to the corresponding new one. (Don’t forget to save the change.)

LocalConfigs table

ServerNodeConfigurations table

Once this is done, you will need to restart all the services on the central node.

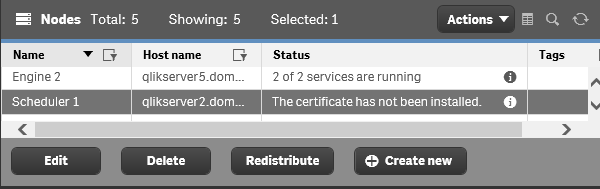

Once you have access again, log in to the QMC and go to Nodes. Your rim node(s) should display the following status: “The certificate has not been installed”

From this point, you can simply select the node, click on Redistribute, and follow the instructions to deploy the certificates on your rim node. After a moment the status should change and you should see the services up and running.

Do the same thing on the remaining rim node(s).

Troubleshooting tips: If the rim node status is not showing “The certificate has not been installed” it means that either the central node cannot reach the rim node or the rim node is not ready to receive new certificates.

Check that port 4444 is open between the central and rim nodes, and make sure the rim node is listening on port 4444 (run netstat -aon in a command prompt).
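
If you save the netstat output to a file or capture it in a script, the listening check can be automated. The sketch below is a hedged illustration only: `isPortListening` is a hypothetical helper, the sample text is made up to mirror the usual column layout of `netstat -aon` on an English-language Windows system, and the state keyword may differ on localized systems.

```javascript
// Returns true if `netstat -aon` output contains a TCP socket in the
// LISTENING state whose local port equals `port`.
function isPortListening(netstatOutput, port) {
  return netstatOutput.split(/\r?\n/).some((line) => {
    const cols = line.trim().split(/\s+/);
    // Expected columns: Proto, Local Address, Foreign Address, State, PID
    if (cols.length < 4 || cols[0] !== 'TCP') return false;
    // Take the text after the last colon so IPv6 addresses like [::]:4444 work too.
    const localPort = cols[1].slice(cols[1].lastIndexOf(':') + 1);
    return localPort === String(port) && cols[3] === 'LISTENING';
  });
}

// Illustrative sample, not captured from a real server.
const sampleOutput = [
  '  Proto  Local Address          Foreign Address        State           PID',
  '  TCP    0.0.0.0:4242           0.0.0.0:0              LISTENING       2104',
  '  TCP    0.0.0.0:4444           0.0.0.0:0              LISTENING       3388',
  '  TCP    10.0.0.5:50112         10.0.0.9:4444          ESTABLISHED     3390',
].join('\n');

console.log(isPortListening(sampleOutput, 4444));
```

A listener on the rim node only proves the service is up; reachability from the central node still depends on firewalls between the two machines.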

Still no luck? You can completely uninstall Qlik Sense on the rim node and reinstall it.

Finalizing your migration

At this point, your environment is completely migrated and most of the stuff should work.

Data Connection

There is one thing to consider in this scenario. Since the Qlik Sense certificates between the old environment and the new one are not the same, it is likely that data connections with passwords will fail. This is because passwords are saved in the repository database with encryption. That encryption is based on a hash from the certs. When the Qlik Sense self-signed cert is rebuilt, this hash is no longer valid, and so the saved data connection passwords will fail. You will need to re-enter the passwords in each data connection and save. This can be done in the QMC -> Data Connections.

See knowledge article: Repository System Log Shows Error "Not possible to decrypt encrypted string in database"

Licensing

Do not forget to turn off your old Qlik Sense Environment once you are finished. While Qlik's Signed License key can be used across multiple environments, you will want to prevent accidental user assignments from the old environment.

Note: If you are still using a legacy key (tokens), the old environment must be shut down immediately, as you can only use a legacy license on one active Qlik Sense environment. Reach out to your account manager for more details.

Best practices

Finally, don’t forget to apply best practices in your new environment:

- Qlik Sense Folder And Files To Exclude From AntiVirus Scanning

- Recommended practice on configuration for Qlik Sense


The 2026 Data Trends Are Here. Is Education Ready?

Data is at the center of the AI revolution. But as Bernard Marr explains in his Forbes article The 8 Data Trends That Will Define 2026, the biggest changes are not just technical; they are changing how people work, learn, and build careers.

These 2026 data trends are already reshaping education and jobs.

- AI agents and agent-ready data are changing how work gets done, making it essential to understand how data is structured, accessed, and secured.

- Generative AI for data engineering is automating technical tasks, shifting skills toward design, logic, and critical thinking.

- Data provenance and trust are becoming core requirements as data volumes grow and decisions rely more on AI.

- Compliance and regulation are expanding globally, making responsible data use a necessary skill across roles.

- Generative data democracy allows more people to access insights, increasing the importance of data literacy for everyone.

- Synthetic data is opening new opportunities while raising ethical and privacy considerations.

- Data sovereignty is shaping how organizations manage data across borders and jurisdictions.

Together, these trends show why data literacy is becoming a universal skill for education and careers in 2026.

The Qlik Academic Program helps academic communities respond to these changes by putting data literacy at the center of learning. Students develop the ability to read, question, and explain data while working hands-on with real analytics tools to explore data, build insights, and understand how AI-driven decisions are made. Professors are supported with training and teaching resources that make it easier to embed data literacy and modern data topics across disciplines.

As the Forbes article makes clear, the future belongs to those who can work confidently with data, alongside AI, within regulations, and with trust.

By giving students, professors, and universities free access to analytics software, learning content, and certifications, the Qlik Academic Program helps education stay aligned with the data trends shaping 2026 and prepares learners for the jobs of tomorrow.

Join our global community for free: Qlik Academic Program: Creating a Data-Literate World


Watch! Q&A with Qlik: New to Qlik Cloud

Don't miss our previous Q&A with Qlik! Hear from our panel of experts to help you get the most out of your Qlik experience. Let our Qlik experts offer solutions to common issues encountered when upgrading Qlik Sense, best practices, and important configuration settings. SEE RECORDING HERE

Upcoming changes to Qlik Talend Nexus Repository January 26th, 2026

Hello Qlik Talend admins,

Qlik is updating the Qlik Talend Nexus repository. The changes are rolled out in a phased approach. Phase One was completed on July 16th, 2025.

Phase Two is scheduled for January 26th, 2026.

What is the expected impact?

The impact is minimal.

Qlik Talend Studio:

- no impact

Qlik Talend Administration Center

- version lower than 8.0.1 with R2025-07 patch: you'll no longer see any patches in the Qlik Talend Administration Center UI

- version 8.0 with patch R2025-07 or higher: you'll see all patches available in the Qlik Talend Administration Center UI

If you have any questions, we're happy to assist. Reply to this blog post or start a chat with us.

Thank you for choosing Qlik,

Qlik Support

Why Adding Analytics Doesn’t Have to Be Hard for Professors

Why Qlik Makes It Easy

As data literacy and analytics become essential across higher education, many faculty want to introduce analytics into their courses but hesitate for one simple reason: time.

For most professors, the challenge isn’t interest; it’s practicality. Traditional analytics tools often require:

- Significant time to learn and teach

- Building course materials from scratch

- Managing software access and technical issues

- Justifying tools that don’t clearly align with real-world outcomes

When added together, these hurdles can make analytics feel like more work than impact. Qlik’s Academic Program is designed with faculty realities in mind.

Professors get:

- Ready-to-use course materials (syllabi, labs, and assessments)

- Easy, cloud-based access with no cost or complex setup

- Flexible integration, from a single module to a full course

- Industry-relevant analytics tools students actually use in the workforce

Instead of asking faculty to redesign their courses, Qlik fits seamlessly into what they already teach. Adding an analytics tool doesn’t have to be difficult.

Qlik removes the biggest barriers (time, complexity, and curriculum creation), making it one of the easiest analytics programs for professors to adopt and teach.

Explore more here: https://www.qlik.com/us/company/academic-program

Unlock Your Qlik Superpower

Discover your current skill level and receive recommendations to accelerate your learning. Access the new Skills Assessments free and receive training recommendations designed to strengthen and expand your skills.

Mastering the HyperCube: High-Volume Data Strategies with @qlik/api

If you have been building custom web applications or mashups with Qlik Cloud, you have likely hit the "10K cells ceiling" when using Hypercubes to fetch data from Qlik.

(Read my previous posts about Hypercubes here and here.)

You build a data-driven component, it works perfectly with low-volume test data, and then you connect it to production. Suddenly, your list of 50,000+ customers cuts off halfway, or your export results look incomplete.

This happens because the Qlik Engine imposes a strict limit on data retrieval: a maximum of 10,000 cells per request. If you fetch 4 columns, you only get 2,500 rows (4 (columns) x 2500 = 10,000 (max cells)).
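
The arithmetic generalizes to any column count. As a small sketch (the helper name `maxRowsPerRequest` is my own and not part of @qlik/api), the largest page height is the cell limit divided by the number of columns, rounded down:

```javascript
const MAX_CELLS_PER_REQUEST = 10000; // limit stated above

// Largest page height (in rows) that keeps width * height within the cell limit.
function maxRowsPerRequest(columnCount) {
  if (!Number.isInteger(columnCount) || columnCount < 1) {
    throw new RangeError('columnCount must be a positive integer');
  }
  return Math.floor(MAX_CELLS_PER_REQUEST / columnCount);
}

console.log(maxRowsPerRequest(4));  // 2500
console.log(maxRowsPerRequest(20)); // 500
```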

In this post, I’ll show you how to handle high-volume data retrieval with the @qlik/api library using two strategies: Bulk Ingest and On-Demand Paging.

What is the 10k Limit and Why Does It Matter?

The Qlik Associative Engine is built for speed and can handle billions of rows in memory. However, transferring that much data to a web browser in one go would be inefficient. To protect both the server and the client-side experience, Qlik forces you to retrieve data in chunks.

Understanding how to manage these chunks is the difference between an app that lags and one that delivers a good user experience.

Step 1: Defining the Data Volume

To see these strategies in action, we need a "heavy" dataset. Copy this script into your Qlik Sense Data Load Editor to generate 250,000 rows of transactions (or download the QVF attached to this post):

    // ============================================================
    // DATASET GENERATOR: 250,000 rows (~1,000,000 cells)
    // ============================================================
    Transactions:
    Load
        RecNo() as TransactionID,
        'Customer ' & Ceil(Rand() * 20000) as Customer,
        Pick(Ceil(Rand() * 5), 'Corporate', 'Consumer', 'Small Business', 'Home Office', 'Enterprise') as Segment,
        Money(Rand() * 1000, '$#,##0.00') as Sales,
        Date(Today() - Rand() * 365) as [Transaction Date]
    AutoGenerate 250000;

Step 2: Choosing Your Strategy

There are two primary ways to handle this volume in a web app. The choice depends entirely on your specific use case.

1- Bulk Ingest (The High-Performance Pattern)

In this pattern, you fetch the entire dataset into the application's local memory in iterative chunks upon loading.

- The Goal: Provide a "zero-latency" experience once the data is loaded.

- Best For: Use cases where users need to perform instant client-side searches, complex local sorting, or full-dataset CSV exports without waiting for the Engine.
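
To make "iterative chunks" concrete, here is a rough sketch that only plans the requests and does not talk to Qlik. `buildPageRequests` is a hypothetical helper; the objects it returns use the qTop/qLeft/qWidth/qHeight shape passed to getHyperCubeData later in this post. Clamping the final page to the remaining rows is my own choice and is not required by the pattern.

```javascript
const MAX_CELLS = 10000; // per-request limit described earlier in this post

// Builds the list of page rectangles needed to cover `totalRows` rows.
function buildPageRequests(totalRows, width, pageSize) {
  if (!Number.isInteger(pageSize) || pageSize < 1) {
    throw new RangeError('pageSize must be a positive integer');
  }
  if (width * pageSize > MAX_CELLS) {
    throw new RangeError(`width * pageSize must not exceed ${MAX_CELLS} cells`);
  }
  const requests = [];
  for (let qTop = 0; qTop < totalRows; qTop += pageSize) {
    requests.push({
      qTop,
      qLeft: 0,
      qWidth: width,
      // Clamp the final page so it does not ask for rows past the end.
      qHeight: Math.min(pageSize, totalRows - qTop),
    });
  }
  return requests;
}

// 250,000 rows, 4 columns, 2,500 rows per page
const plannedRequests = buildPageRequests(250000, 4, 2500);
console.log(plannedRequests.length);
console.log(plannedRequests[plannedRequests.length - 1]);
```

For the sample dataset this plans 100 requests, which is why the bulk pattern benefits from a progress indicator.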

2- On-Demand (The "Virtual" Pattern)

In this pattern, you only fetch the specific slice of data the user is currently looking at.

- The Goal: Provide a near-instant initial load time, regardless of whether the dataset has 10,000 or 10,000,000 rows, because you only load a specific chunk of those rows at a time.

- Best For: Massive datasets where the "cost" of loading everything into memory is too high, or when users only need to browse a few pages at a time.
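
The trade-off between the two patterns comes down to simple arithmetic. The sketch below is illustrative only (`comparePatterns` is a name I made up): it counts how many requests each pattern issues and how many cells it keeps in browser memory.

```javascript
// Compares the two patterns for a cube of `totalRows` rows and `width` columns,
// fetched in pages of `pageSize` rows.
function comparePatterns(totalRows, width, pageSize) {
  return {
    bulk: {
      // One request per page, all issued up front.
      requests: Math.ceil(totalRows / pageSize),
      // The whole dataset stays in memory.
      cellsHeld: totalRows * width,
    },
    onDemand: {
      // One request each time the user changes page.
      requestsPerPageView: 1,
      // Only the visible window is held.
      cellsHeld: Math.min(pageSize, totalRows) * width,
    },
  };
}

console.log(comparePatterns(250000, 4, 2500));
```

With the 250,000-row sample and 4 columns, Bulk Ingest issues 100 requests and holds 1,000,000 cells, while On-Demand holds 10,000 cells at a time.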

Step 3: Implementing the Logic

While I'm using React and custom React hooks for the example I'm providing, these core Qlik concepts translate to any JavaScript framework (Vue, Angular, or Vanilla JS). The secret lies in how you interact with the HyperCube.

The Iterative Logic (Bulk Ingest):

The key is to use a loop that updates your local data buffer as chunks arrive.

To prevent the browser from freezing during this heavy network activity, we use setTimeout to allow the UI to paint the progress bar.

    // qModel is assumed to be declared outside this snippet (for example, in the hook's scope)
    qModel = await app.createSessionObject({ qInfo: { qType: 'bulk' }, ...properties });
    const layout = await qModel.getLayout();

    const totalRows = layout.qHyperCube.qSize.qcy;
    const pageSize = properties.qHyperCubeDef.qInitialDataFetch[0].qHeight;
    const width = properties.qHyperCubeDef.qInitialDataFetch[0].qWidth;
    const totalPages = Math.ceil(totalRows / pageSize);

    let accumulator = [];
    for (let i = 0; i < totalPages; i++) {
      // Stop if the component unmounted or a stop was requested
      if (!mountedRef.current || stopRequestedRef.current) break;

      const pages = await qModel.getHyperCubeData('/qHyperCubeDef', [{
        qTop: i * pageSize,
        qLeft: 0,
        qWidth: width,
        qHeight: pageSize
      }]);
      accumulator = accumulator.concat(pages[0].qMatrix);

      // Update state incrementally
      setData([...accumulator]);
      setProgress(Math.round(((i + 1) / totalPages) * 100));

      // Yield thread to prevent UI locking
      await new Promise(r => setTimeout(r, 1));
    }

The Slicing Logic (On-Demand)

In this mode, the application logic simply calculates the qTop coordinate based on the user's current page index and makes a single request for that specific window of data (rowsPerPage).

const width = properties.qHyperCubeDef.qInitialDataFetch[0].qWidth;
const qTop = (page - 1) * rowsPerPage;
const pages = await qModelRef.current.getHyperCubeData('/qHyperCubeDef', [{ qTop, qLeft: 0, qWidth: width, qHeight: rowsPerPage }]);
if (mountedRef.current) {
  setData(pages[0].qMatrix);
}

I placed these two methods in custom hooks (useQlikBulkIngest and useQlikOnDemand) so they can be easily reused in different components as well as in other apps.
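The page-window arithmetic behind both patterns can be isolated from the Engine calls. The following is a minimal sketch, not the code from the repository: pageRect and allPageRects are hypothetical helper names introduced here for illustration, and clamping the last page to the remaining rows is an assumption rather than something the original snippets do.

```javascript
// Hypothetical helpers (not part of @qlik/api): pure page-window math.

// Rectangle for a 1-based page index, clamped so the last page does not
// ask for rows past the end of the hypercube.
function pageRect(page, rowsPerPage, width, totalRows) {
  const qTop = (page - 1) * rowsPerPage;
  if (page < 1 || qTop >= totalRows) return null; // page out of range
  const qHeight = Math.min(rowsPerPage, totalRows - qTop);
  return { qTop, qLeft: 0, qWidth: width, qHeight };
}

// All rectangles needed to walk the full dataset in order (bulk ingest).
function allPageRects(rowsPerPage, width, totalRows) {
  const totalPages = Math.ceil(totalRows / rowsPerPage);
  const rects = [];
  for (let page = 1; page <= totalPages; page++) {
    rects.push(pageRect(page, rowsPerPage, width, totalRows));
  }
  return rects;
}

console.log(pageRect(3, 500, 20, 1234));
// { qTop: 1000, qLeft: 0, qWidth: 20, qHeight: 234 }
console.log(allPageRects(500, 20, 1234).length); // 3
```

Each rectangle has the shape passed to getHyperCubeData in the snippets above, so the on-demand pattern would request one of them per page change, while the bulk pattern would loop over the whole list.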

Best Practices

Regardless of which pattern you choose, always follow these three Qlik Engine best practices:

- Engine Hygiene (Cleanup): Always call app.destroySessionObject(qModel.id) when your component or view unmounts.

- Cell Math: Always make sure qWidth x qHeight does not exceed 10,000 cells per request. For instance, if you have a wide table (20 columns), your maximum height is 500 rows per chunk.

- UI Performance: Even if you use the "Bulk" method and have 250,000 rows in JavaScript memory, do not render them all to the DOM at once. Use UI-level pagination or virtual scrolling to keep the browser responsive.
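The cell math and the UI-level pagination can both be expressed as small pure functions. This is a rough sketch under stated assumptions: maxRowsPerChunk and visibleRows are hypothetical names, and the 10,000-cell budget is taken from the 20-column, 500-row example above rather than verified against a specific Engine version.

```javascript
// Hypothetical helpers for illustration only.
// Assumed per-request cell budget, matching the 20 x 500 example in the text.
const MAX_CELLS_PER_PAGE = 10000;

// Largest qHeight that keeps qWidth x qHeight within the cell budget.
function maxRowsPerChunk(qWidth, maxCells = MAX_CELLS_PER_PAGE) {
  if (!Number.isInteger(qWidth) || qWidth < 1) {
    throw new RangeError('qWidth must be a positive integer');
  }
  return Math.floor(maxCells / qWidth);
}

// Slice of an in-memory row buffer for a 1-based UI page, so only the
// visible rows are handed to the renderer.
function visibleRows(rows, page, rowsPerPage) {
  const start = Math.max(0, (page - 1) * rowsPerPage);
  return rows.slice(start, start + rowsPerPage);
}

console.log(maxRowsPerChunk(20)); // 500
console.log(visibleRows(['a', 'b', 'c', 'd', 'e'], 2, 2)); // [ 'c', 'd' ]
```

Deriving the chunk height from the column count, instead of hard-coding it, keeps the request within budget if columns are added to the HyperCube later.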

Choosing between Bulk and On-Demand is a trade-off between Initial Load Time and Interactive Speed. By mastering iterative fetching with the @qlik/api library, you can ensure your web apps remain robust, no matter how much data is coming in from Qlik.

💾 Attached is the QVF, and here is the GitHub repository containing the full example in React so you can try it locally. Instructions are provided in the README file.

(P.S: Make sure you create the OAuth client in your tenant and fill in the qlik-config.js file in the project with your tenant-specific config).

Thank you for reading!

-

-

Qlik Replicate and an update on Salesforce “Use Any API Client” Permission Depre...

Dear Qlik Replicate customers,

Salesforce announced (October 31st, 2025) that it is postponing the deprecation of the Use Any API Client user permission. See Deprecating "Use Any API Client" User Permission for details.

Qlik will keep the OAuth plans on the roadmap to deliver them in time with Salesforce's updated plans.

Salesforce has announced the deprecation of the Use Any API Client user permission. For details, see Deprecating "Use Any API Client" User Permission | help.salesforce.com.

We understand that this is a security-related change, and Qlik is actively addressing it by developing Qlik Replicate support for OAuth Authentication. This work is a top priority for our team at present.

If you are affected by this change and have activated access policies relying on this permission, we recommend reaching out to Salesforce to request an extension. We are aware that some customers have successfully obtained an additional month of access.

By the end of this extension period, we expect to have an alternative solution in place using OAuth.

Customers using the Qlik Replicate tool to read data from the Salesforce source should be aware of this change.

Thank you for your understanding and cooperation as we work to ensure a smooth transition.

If you have any questions, we're happy to assist. Reply to this blog post or take your queries to our Support Chat.

Thank you for choosing Qlik,

Qlik Support

-

Qlik Sense November 2025 (Client-Managed) now available!

What’s New & What It Means for You

We’re excited to announce the November 2025 release of Qlik Sense Enterprise on Windows. This update brings enhancements across app settings, visualizations, dashboards, and connectors, making your analytics smoother, more powerful, and easier to maintain. Below is a breakdown of the key features, how your teams (analytics creators, business users, data integrators, and administrators) can benefit, and some practical next steps to get ready.

-

[Tuesday, January 27, 2026, 15:00] Creating the Future of AI: The New Value Born When Data, Agents, and Humans Team Up

2026 Trends for Raising Return on Investment with AI

Although many companies are investing in AI, only a small number are increasing their return on investment. What needs to improve?

For decades, companies have swung back and forth like a pendulum, loosening control when moving forward and tightening discipline when falling back, over and over. The strategic model that succeeds with data in 2026 is not an either-or choice. What matters is combining governance and innovation to create new value.

In the web seminar "Creating the Future of AI: The New Value Born When Data, Agents, and Humans Team Up" on Tuesday, January 27, Dan Sommer, Qlik's Market Intelligence Lead, and Charlie Farah, Chief Technology Officer for Analytics and AI at Qlik APAC, will explain the key trends for 2026.

This web seminar presents three key points to grasp in order to lead your business to success. Applying these points to your business lets you ensure data integrity, connect all your systems seamlessly, and bring innovation to the business. It also explores the trends underlying this new model to explain how to level up your data strategy.

How do you eliminate silos and build a unified foundation without being pulled around by one-sided policies? Join the web seminar to see why adopting a new model matters for getting the most out of AI.

Note: Free to attend. Delivered with Japanese subtitles. Join and watch from anywhere on a PC, tablet, or smartphone.

-

Dynamic Engine Now Supports Google Kubernetes Engine: Deploy Anywhere, Scale Eve...

The evolution of enterprise cloud strategy is no longer about choosing a single provider - it's about deploying flexibly across AWS, Azure, Google Cloud, and on-premises infrastructure, depending on the unique needs of your business, industry, and regulatory environment. Today, we're excited to announce that Qlik Talend Dynamic Engine officially supports Google Kubernetes Engine (GKE), joining our existing support for AWS EKS, Azure AKS, and on-premises Kubernetes distributions like RKE2 and K3S.

-

Write Table now available in Qlik Cloud Analytics

Introducing Write Table, a simple, interactive way for users to work directly with their data inside their analytics apps