
Qlik Community > All Forums > QlikView App Dev


Counting sum in variable

Hi all

I'm working on a dataset of student applications to a university that offers 66 different educations (Biology, Law, Economics, etc.).

For this I need to build a straight table (preferably) that calculates the following:

The number of educations this year that have received 0 applications, 1-10 applications, 11-30 applications, 31-100 applications and more than 100 applications.

I'm able to count the number of educations:

(Count(DISTINCT {1<Year={$(vCurrentYear)}>} [Education]) )

but not the number of educations that have 0 applications, 1-10 applications and so on.

Can anyone help me with this, please?

Thanks in advance!

Cheers

Bruno

- Tags:

- new_to_qlikview
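What is being asked for is a two-level aggregation: first count the applications per education, then count how many educations fall into each band. The Python sketch below shows only that logic; it is not QlikView syntax. The row layout, the `year`/`education` keys, the sample year and the `all_educations` master list are hypothetical. The year filter plays the role of `{1<Year={$(vCurrentYear)}>}` in the expression above. One consequence worth noting: an education with 0 applications has no applicant rows at all, so it can only be counted in the '0' band if a separate list of all educations exists. In QlikView itself, nested aggregations like this are commonly written with the Aggr() function.

```python
from collections import Counter

# Bands from the question as (label, lower bound, upper bound); None = no upper bound.
BANDS = [("0", 0, 0), ("1-10", 1, 10), ("11-30", 11, 30),
         ("31-100", 31, 100), (">100", 101, None)]


def band_for(count: int) -> str:
    """Return the label of the band that contains an application count."""
    for label, low, high in BANDS:
        if count >= low and (high is None or count <= high):
            return label
    raise ValueError(f"negative count: {count}")


def educations_per_band(rows, all_educations, year):
    """Count how many educations fall into each application band for one year.

    rows: iterable of dicts with hypothetical keys 'year' and 'education',
          one dict per application (one row per applicant).
    all_educations: hypothetical master list of every education, needed so
          that educations without any applications land in the '0' band.
    """
    # Level 1: applications per education, restricted to the selected year.
    per_education = Counter(r["education"] for r in rows if r["year"] == year)
    # Level 2: educations per band; Counter returns 0 for missing educations.
    per_band = Counter(band_for(per_education[e]) for e in all_educations)
    return {label: per_band[label] for label, _, _ in BANDS}


if __name__ == "__main__":
    # Hypothetical sample: 3 Biology applications in 2015, 50 Law applications in 2014.
    sample = ([{"year": 2015, "education": "Biology"}] * 3
              + [{"year": 2014, "education": "Law"}] * 50)
    print(educations_per_band(sample, ["Biology", "Law"], 2015))
    # {'0': 1, '1-10': 1, '11-30': 0, '31-100': 0, '>100': 0}
```

Law has no applications in the selected year, so it is counted in the '0' band only because it appears in the master list.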


In the script:

if([applicant fieldname]=0,'0',

if([applicant fieldname]>0 and [applicant fieldname]<=10,'1-10',

if([applicant fieldname]>10 and [applicant fieldname]<=30,'11-30',

if([applicant fieldname]>30 and [applicant fieldname]<=100,'31-100',

if([applicant fieldname]>100,'>100'))))) as yourfieldname

Then use yourfieldname as a dimension and drag it to the horizontal position.

hope this helps
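The nested If above is a band lookup. Because each branch is only reached when the earlier ones have failed, every branch only needs an upper bound. A Python sketch of the same chain, with one distinct label per band, is shown below. The function name is hypothetical, and the sketch assumes its input is already the total number of applications for one education.

```python
def application_band(applications: int) -> str:
    """Mirror of the nested If: map an application count to its band label.

    Assumes `applications` is already the total for one education; applied
    to a single applicant row it would not give a meaningful band.
    Counts are never negative, so there is no separate check for that.
    """
    if applications == 0:
        return "0"
    elif applications <= 10:  # 1-10, since 0 was handled above
        return "1-10"
    elif applications <= 30:
        return "11-30"
    elif applications <= 100:
        return "31-100"
    else:
        return ">100"


# Boundary values on both sides of each band edge.
for n in (0, 1, 10, 11, 30, 31, 100, 101):
    print(n, application_band(n))
```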


Hi Luminary

Thanks for your quick reply. I can't get it to work, though.

I've done the following in the script:

if(Education=0,'0',

if(Education>0 and Education<=10,'1-10',

if(Education>10 and Education<=30,'11-30',

if(Education>30 and Education<=100,'31-100',

if(Education>100,'>100'))))) as EducationCountGrouped,

Can you see what I'm doing wrong?

I've attached a cleaned copy of my data to the original post so you can see it.

Thanks

Bruno


It looks good to me, but I don't know why it's not working.

Please attach a file stating the requirement again, without any gaps if possible.

hope this helps


Hi Luminary

I'm not sure I expressed myself clearly in my original post. Sorry, I'll try again:

What I'm looking for is the following:

I have a dataset with approximately 10,000 rows, one for each person who applied to an education at our university. We have 66 different educations an applicant can choose from, e.g. Biology, Law, Medicine and Economics. So if there are 29 applicants for Biology, the dataset has 29 rows with the Education 'Biology' (one for each applicant).

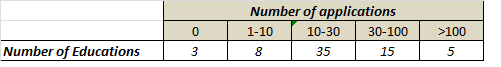

I need to make a table that summarizes how many educations have had 0 applications, 1-10 applications, 11-30 applications, 31-100 applications and >100 applications. For example, Biology received 29 applications, Economics 71, Law 201 and Medicine 1,504.

Based on these four educations, my table would need to look like this:

| 0 app. | 1-10 app. | 11-30 app. | 31-100 app. | >100 app. |
|---|---|---|---|---|
| 0 | 0 | 1 | 1 | 2 |

If this is also what you understood in the first place, what do I need to write as the expression in my table? I can't figure it out.

Thanks a lot!

Bruno
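The expected row can be checked directly from the four example totals: band each education's total, then count educations per band. The Python sketch below reproduces 0 0 1 1 2. The totals come from the post; the code only illustrates the logic and is not a QlikView expression.

```python
from collections import Counter

# Application totals per education, taken from the example in the post.
totals = {"Biology": 29, "Economics": 71, "Law": 201, "Medicine": 1504}

# Band labels in table order, each with its inclusive upper bound (None = open-ended).
BANDS = [("0 app.", 0), ("1-10 app.", 10), ("11-30 app.", 30),
         ("31-100 app.", 100), (">100 app.", None)]


def band(total: int) -> str:
    """Return the first band whose upper bound is not exceeded."""
    for label, upper in BANDS:
        if upper is None or total <= upper:
            return label
    raise AssertionError("unreachable: the last band is open-ended")


# One band per education, then the number of educations per band.
counts = Counter(band(t) for t in totals.values())
row = [counts[label] for label, _ in BANDS]
print(row)  # [0, 0, 1, 1, 2]
```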


See the attached file.

hope this helps


Hi

Thanks for your effort.

I still can't get it to work, though. The problem is that my data is not aggregated (see the attachment in the original post for the data). All my educations end up in 1-10, because the field I'm counting on (CountVar) equals 1 on every row.

As far as I can see, your TEST1 loads the data already aggregated.

It's probably something small, but I can't figure it out.

Thanks

Bruno
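The symptom described here, with every education landing in 1-10, is what happens when the band is assigned per applicant row instead of per education. On each row CountVar is 1, so every row falls into 1-10. The Python sketch below contrasts the two orders of operation. The row data is hypothetical and only the CountVar idea comes from the post. In QlikView the per-education total likewise has to exist before the band is assigned, whether it is computed in the script (for example with a GROUP BY load) or in the chart.

```python
from collections import Counter, defaultdict


def band(n: int) -> str:
    """Map an application count to its band label."""
    if n == 0:
        return "0"
    if n <= 10:
        return "1-10"
    if n <= 30:
        return "11-30"
    if n <= 100:
        return "31-100"
    return ">100"


# Hypothetical raw data: one row per applicant, CountVar = 1 on every row.
rows = [("Biology", 1)] * 29 + [("Law", 1)] * 201

# Wrong order: band each row's CountVar, then count distinct educations per band.
per_row = defaultdict(set)
for education, count_var in rows:
    per_row[band(count_var)].add(education)
wrong = {label: len(eds) for label, eds in per_row.items()}

# Right order: sum CountVar per education first, then band the totals.
totals = Counter()
for education, count_var in rows:
    totals[education] += count_var
right = Counter(band(total) for total in totals.values())

print(wrong)        # {'1-10': 2}
print(dict(right))  # {'11-30': 1, '>100': 1}
```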


See the attached qvw.

talk is cheap, supply exceeds demand


This is exactly what I was looking for. Thanks a lot!

Bruno