Unlock a world of possibilities! Login now and discover the exclusive benefits awaiting you.

Recent Documents

-

Where are the tHashInput and tHashOutput components?

Talend Version 7.2.1, 7.3.1, 8.0.1 Summary Can't find the tHashInput and tHashOutput components in Studio's Palette.Additional Versions... Show More -

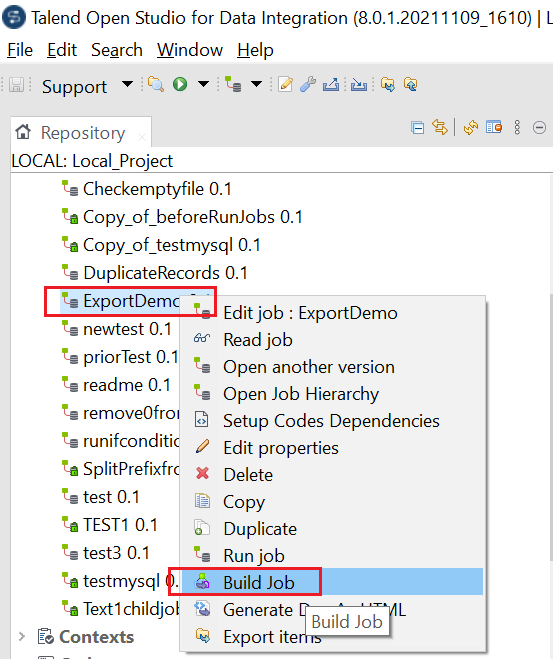

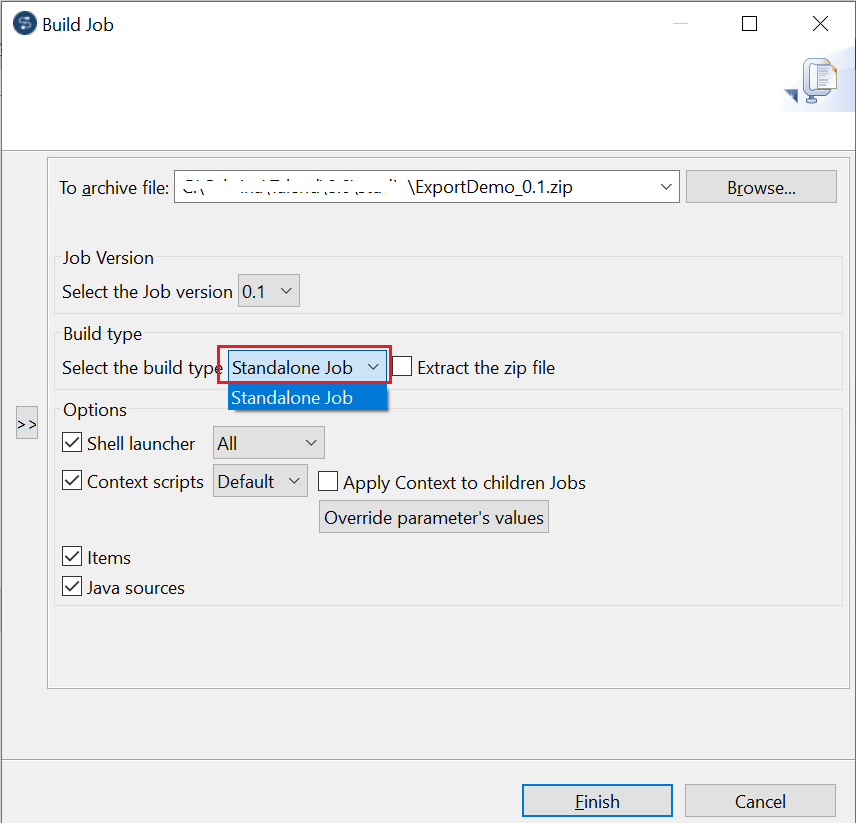

Exporting a Job script and executing it outside of Talend Studio

Overview Talend Jobs support cross-platform execution. You can develop your Job on one machine, export the Job script, and then move it to another m... Show More -

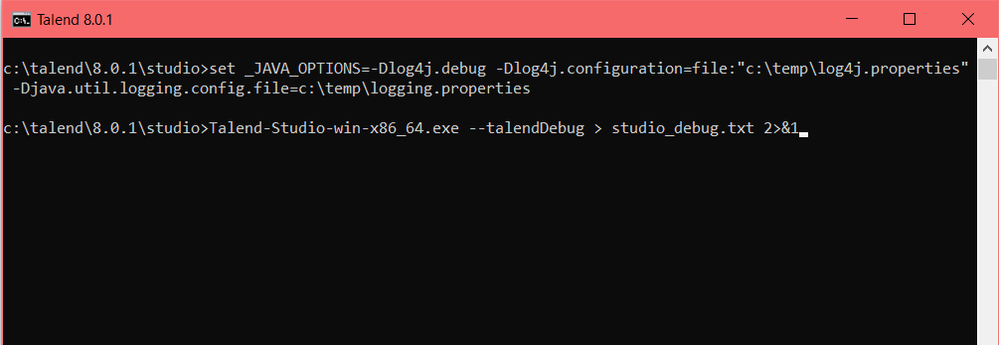

How to generate a trace for the HTTP requests executed by Studio

Question How do you generate a trace of the HTTP requests executed by the Talend Studio without using a third-party tool? Answer 1. Create a lo... Show More -

How to integrate Talend Jobs containing dynamic queries with Cloudera Navigator ...

Introduction Talend Jobs that are developed using context variable and dynamic SQL queries are not supported; therefore, Talend Data Catalog (TDC)... Show MoreIntroduction

Talend Jobs that are developed using context variable and dynamic SQL queries are not supported; therefore, Talend Data Catalog (TDC) is unable to harvest metadata and trace data lineage from a Talend dynamic integration Job using the Talend ETL bridge.

This article shows you how to work around these limitations in Talend Jobs that use resources from a Cloudera Cluster using Cloudera Navigator and Talend Data Catalog bridge.

Sources for the project are attached to this article.

Prerequisites

-

Cloudera Cluster CDH 5.10 and above

-

Cloudera Navigator 2.15.1 and above

-

MySQL server to store metadata table of the dynamic integration framework

-

Talend Big Data Platform 7.1.1 and above

-

Talend Data Catalog 7.1 Advanced (or Plus) Edition and above, with latest cumulative patches

Setting up Talend Studio

-

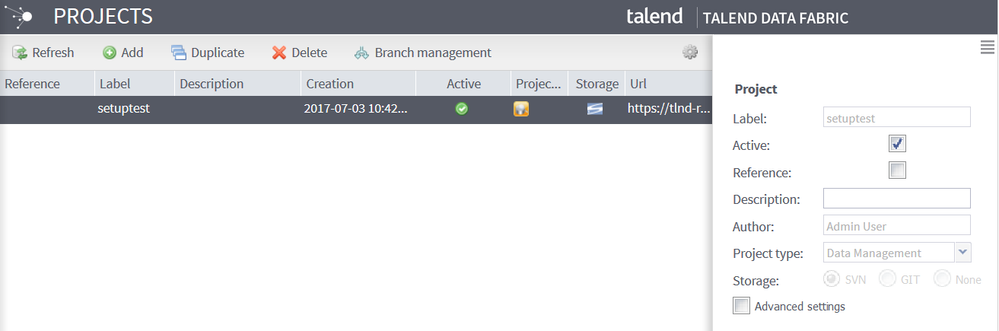

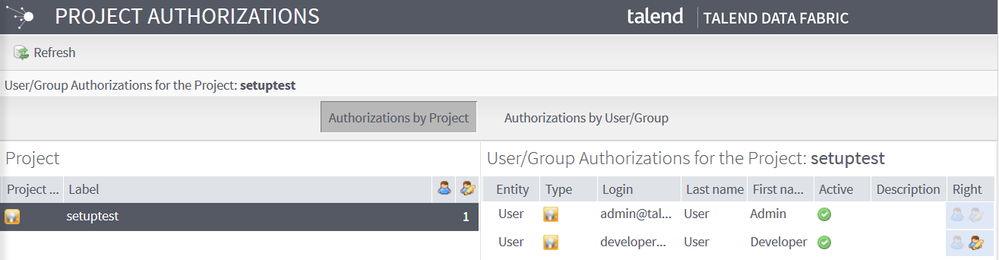

Open Talend Studio and create a new project.

-

In the Repository, expand Metadata, right-click Hadoop Cluster, then select Create Hadoop Cluster.

-

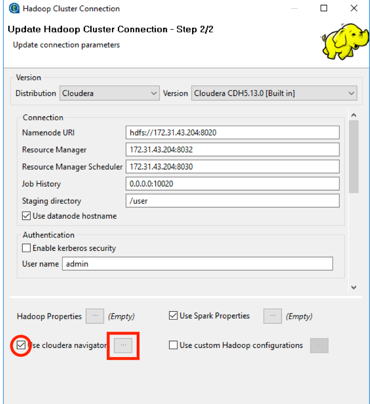

Using the Hadoop Cluster Connection wizard, create a connection to your Cloudera Cluster, and make sure that you select the Use cloudera navigator check box.

-

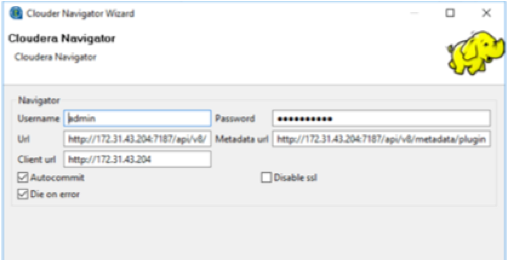

Click the ellipsis to the right of Use Cloudera Navigator, then set up your connection to Cloudera Navigator, as shown below:

For more information on leveraging Cloudera Navigator in Talend Jobs, see the How to set up data lineage with Cloudera Navigator page of the Talend Big Data Studio User Guide available in the Talend Help Center.

Building the dynamic integration Job

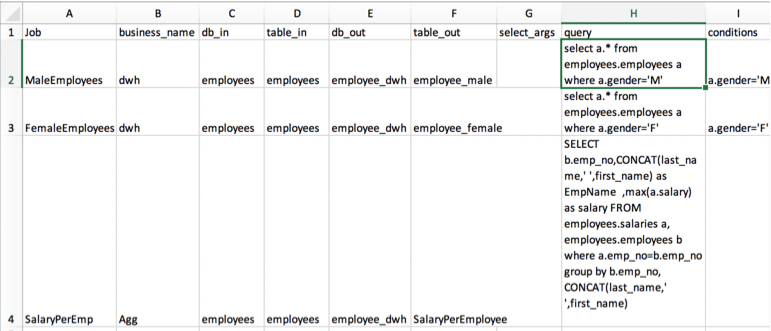

This use case uses MySQL to store metadata such as table source/target, queries, and filters, then stores these values in context variables that are used to build integration Jobs at runtime. The dynamic Job reads data from source tables in Hive and writes data to target tables in Hive.

-

Upload the metadata for the dynamic integration Job to the MySQL server (or any other DB of your choice), using the Metadata_Demo_forMySql.xlsx file attached to this article.

-

Upload the source data, located in the employees.csv and salaries.csv files attached in this article, to Hive.

-

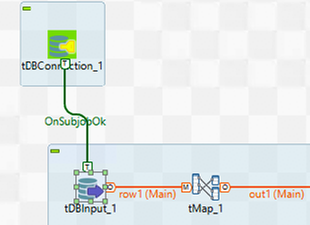

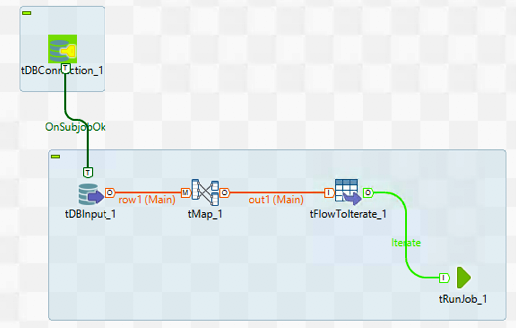

Create a standard Job, then add a tDBConnection component to connect to the metadata database. Note: The complete preparation Job, located in the prepare_load_dwh_Hive.zip file, is attached to this article.

-

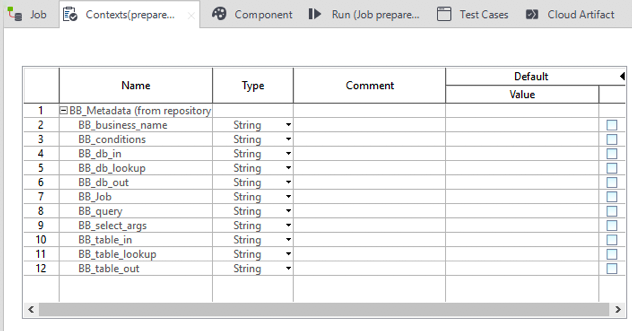

Replicate all of the fields in the metadata table by creating the following Context variables:

-

Add a tDBInput component.

-

Connect the tDBConnection component to the tDBInput component using the OnSubjobOk trigger.

-

Double-click the tDBInput component to open the Basic settings view. Click the [...] button next to the Table name text box, then select the table name where you've uploaded the metadata, in this case, meta_tables, apply the appropriate schema, and use the following query:

"SELECT `meta_tables`.`Job`, `meta_tables`.`business_name`, `meta_tables`.`db_in`, `meta_tables`.`tabel_in`, `meta_tables`.`db_out`, `meta_tables`.`table_out`, `meta_tables`.`select_args`, `meta_tables`.`query`, `meta_tables`.`conditions`, `meta_tables`.`db_lookup`, `meta_tables`.`table_lookup` FROM `meta_tables` WHERE `meta_tables`.`business_name`='Agg'"

Notice that the value for bussiness_name is hardcoded with Agg. Depending on the type of dynamic query you want to run, you could use a context variable so that at runtime, the Job uses the context variable value and filters the metadata table on the business_name (in this case, Agg or Dwh).

-

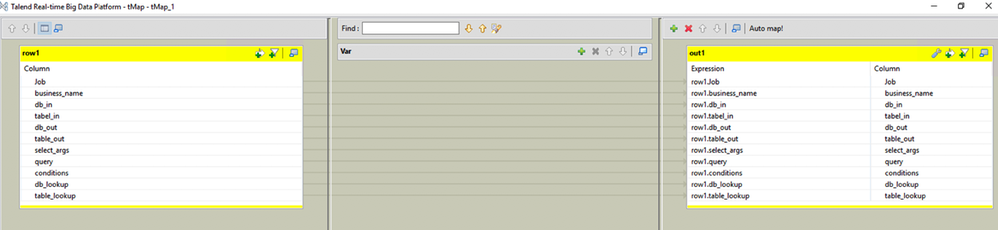

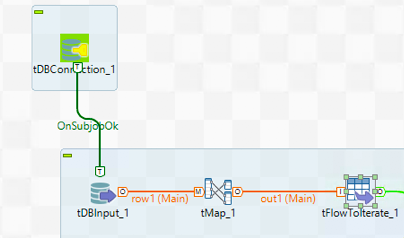

Add a tMap component after the tDBInput component, then connect it using a Main row. The tMap component acts as a pass-through and creates output that contains all the input fields.

-

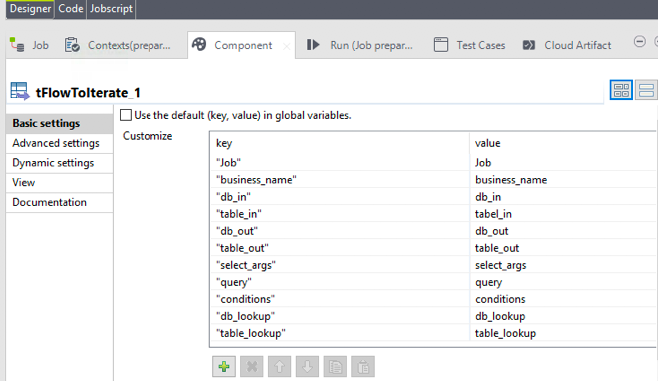

Connect the tMap to a tFlowToIterate component, then create a key-value pair for each of the fields in the metadata table.

-

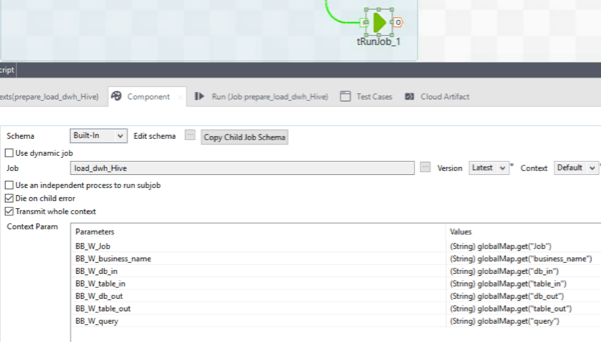

Add a tRunJob component. Connect the tFlowToIterate component to the tRunJob component using Row > Iterate.

-

Set up the tRunJob component to transmit the whole context to the child Job for each iteration, as shown below:

Building the data integration Job

In this section, you build a Job that is triggered by the tRunJob component from the previous Job.

Note: The complete integration Job, located in the load_dwh_Hive.zip file, is attached to this article.

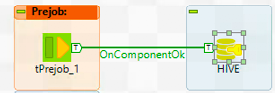

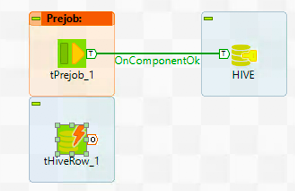

- Create a new standard Job, then add a tPreJob component and a tHiveConnection component.

-

Connect tPreJob to tHiveConnection using the OnComponentOK trigger.

-

Add a tHiveRow component below the tPreJob component.

-

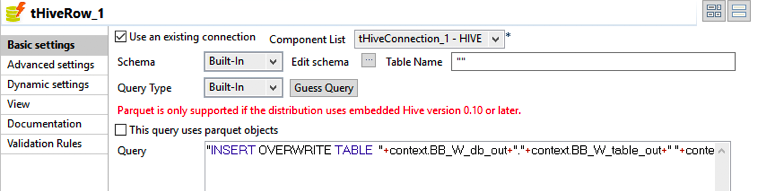

Configure the tHiveRow component, as shown below:

-

Use the context parameter transmitted by the parent Job by entering the following query in the Query text box.

"INSERT OVERWRITE TABLE "+context.BB_W_db_out+"."+context.BB_W_table_out+" "+context.BB_W_query+" "

The integration Job (child Job) runs as many times as the number of rows returned by the metadata table filtered by the context business_name in the parent Job.

-

Run the Job.

-

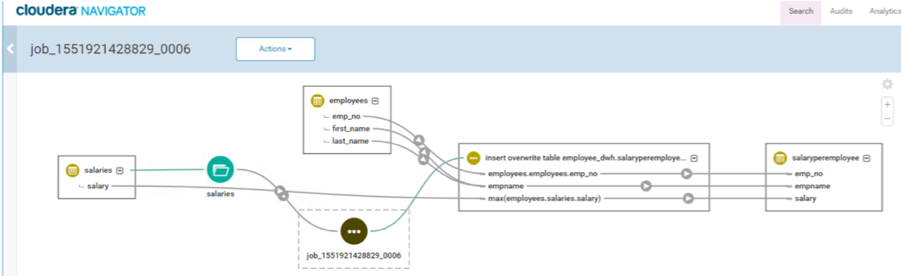

Open Cloudera Navigator, then search for Hive Jobs. Locate the Talend Job and review trace the data lineage.

Configuring Talend Data Catalog

-

Open Talend Data Catalog (TDC).

-

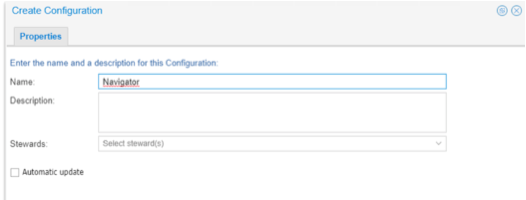

Create a new configuration.

Cloudera Hive bridge

-

For the bridge to work, you'll need to the Cloudera JDBC connector for Hive and the JDBC driver of your Hive metastore (in this case, Postgres).

-

Ensure both of the drivers are accessible by TDC server or an Agent.

-

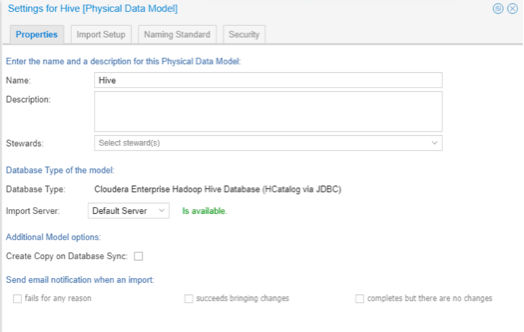

Create a new Physical Data Model to harvest the Hive metastore.

-

On the Properties tab, select the Cloudera Enterprise Hadoop Hive Database.

-

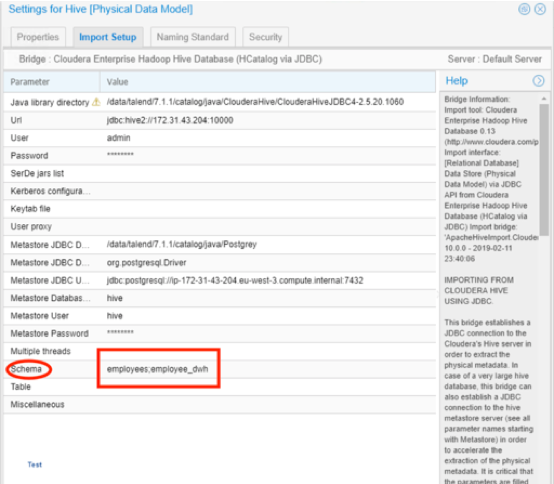

On the Import Setup tab, in the User and Password fields, enter your Hive metastore credentials (typically set up during the cluster installation). If you are not able to retrieve it, use the StackExchange, connect to PostgreSQL server: FATAL: no pg_hba.conf entry for host tutorial for a Hive metastore with Postgres.

-

Review your settings, when you're finished, the Import setup tab should look like this:

Click Import to start the metadata import.

-

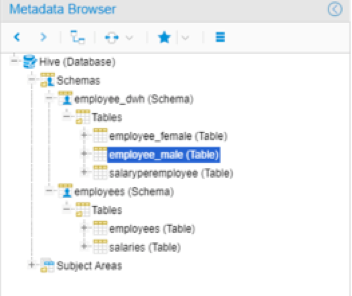

After a successful import, navigate to Data Catalog > Metadata Explorer > Metadata Browser, and locate the harvested metadata, in this case, employee_male (Table).

Cloudera Navigator bridge

-

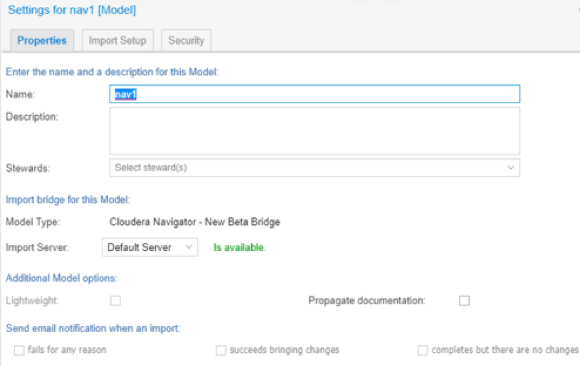

Create a new model, then in Model Type, select Cloudera Navigator - New Beta Bridge.

-

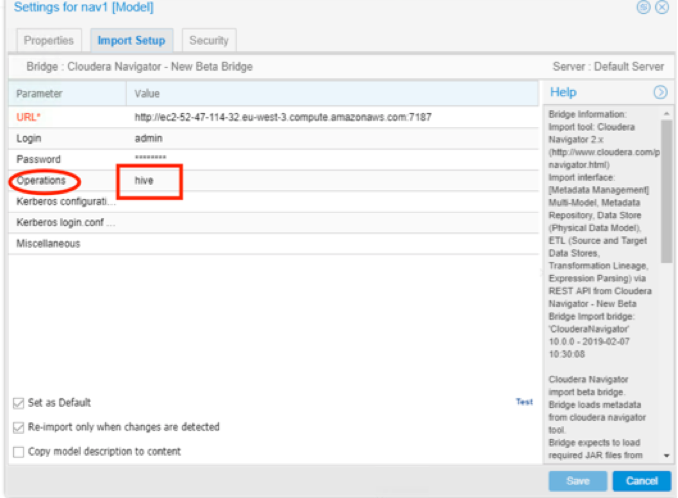

On the Import Setup tab, fill in the Navigator URL*, Login, Password, then filter the Operations you want to harvest, in this case, hive.

-

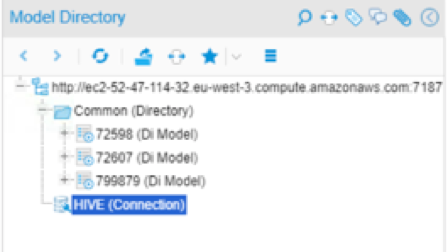

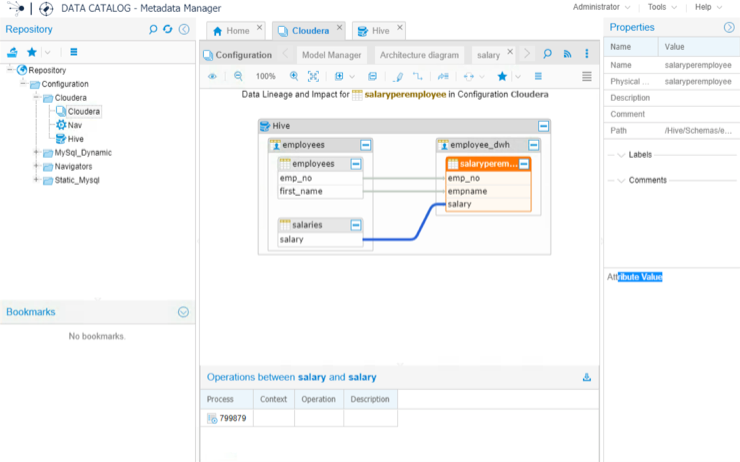

After a successful import, you'll find metadata harvest for Navigator, the Hive (connection), and the dynamic Data Integration Job (Di Model).

Stitching

-

Make sure that your harvested models belong to your configuration, by dragging them to the configuration you set up earlier.

-

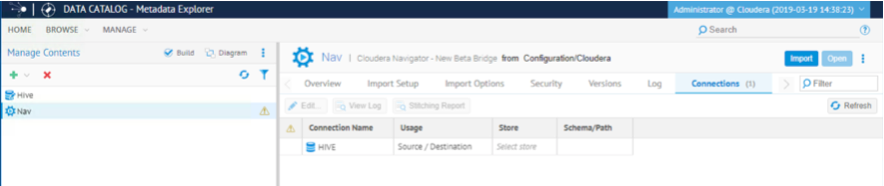

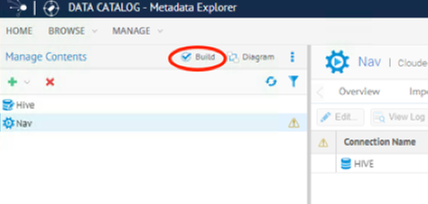

On the Manage menu, select Manage Contents, click the Nav model, then select the Connection tab on the right.

-

Select the Connection Name, in this case, Hive.

-

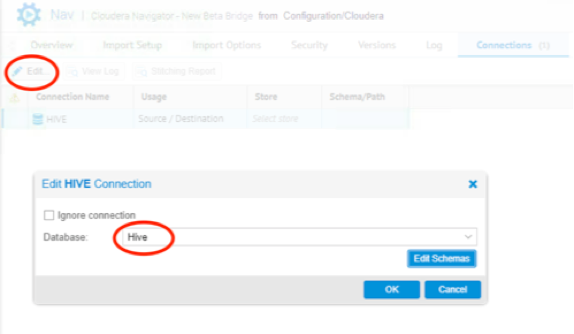

Click Edit, then, from the Database pull-down list, select Hive (click Edit Schemas if it wasn't done before), then click OK.

-

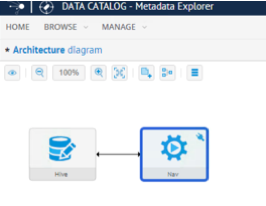

Click Build, then click the Diagram to see the connection between models.

-

Trace the data lineage of the Talend Job containing dynamic queries executed against Hive.

Conclusion

This article showed you how to handle the Talend Data Catalog Harvesting process of DI Jobs using context variables and dynamic queries on a Cloudera Cluster leveraging Navigator and Hive bridges to trace data lineage.

-

-

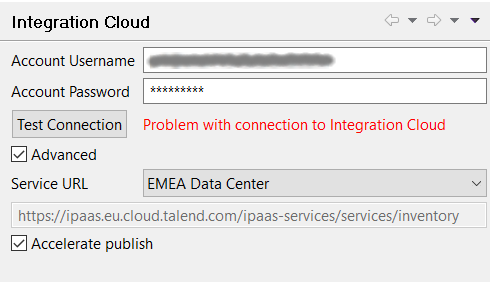

'Problem with connection to Integration Cloud' error when testing the connectio...

Problem Description Testing the Integration Cloud connection in Studio (Preferences > Talend > Integration Cloud) fails with the following error:... Show More -

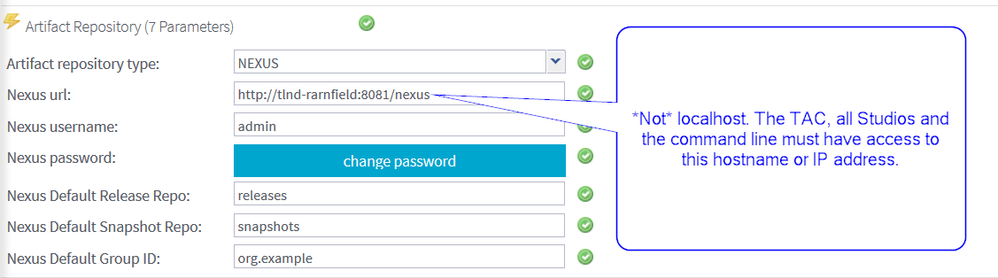

Talend Studio: Repository does not allow updating assets: releases (400) error w...

Talend is configured with a Nexus installed on a different machine. A Job is published for the first time to Nexus using Studio. When publishing, or ... Show More -

Causes of the "UnsupportedClassVersionError" exception

Symptoms This error can occur when you execute a Job script outside of Talend Studio, and the JVM used to execute the Job is different from the J... Show More -

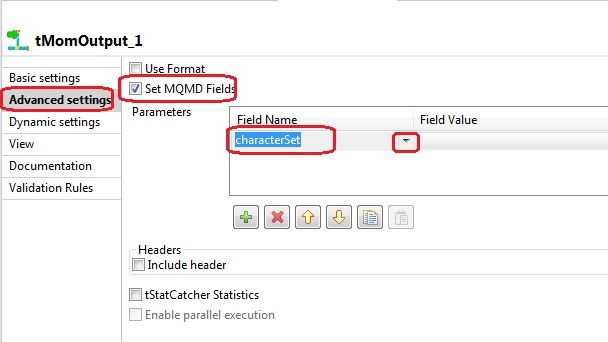

Which encoding does tMomOutput use?

Question If not specified, which encoding/code-page does tMomOutput use? Answer tMomOutput will use the default encoding of the machin... Show More -

What is the difference between Built-In and Repository?

Answer Built-in: all information is stored locally in the Job. You can enter and edit all information manually. Repository: all information is ... Show More -

Tracing records with breakpoints

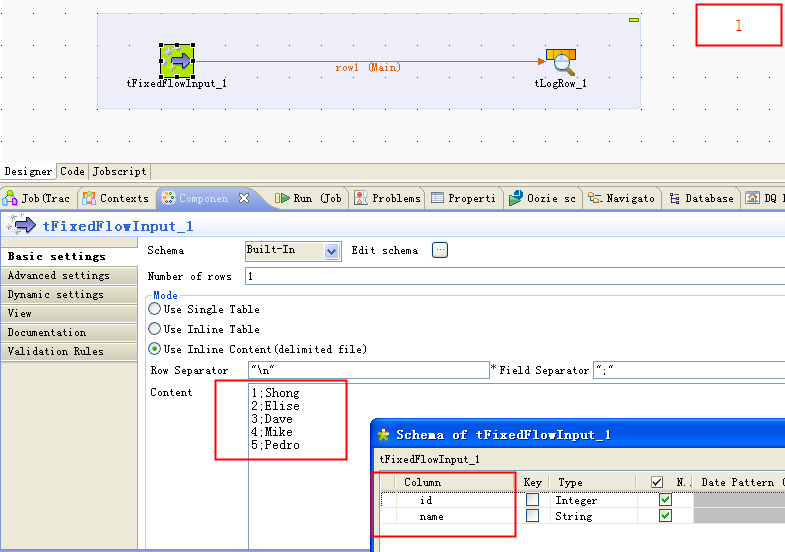

Overview Talend Studio is an IDE based on Eclipse RCP. It provides a proprietary record trace debugger and allows you to run Talend Jobs in Trace... Show MoreCreate an example Job

Create an example Job called TraceRecordsWithBreakpoint. Use a tFixedFlowInput to generate some source data such as:

1;Shong 2;Elise 3;Dave 4;Mike 5;Pedro

The detailed Job settings are shown in the following figure:

Set a breakpoint

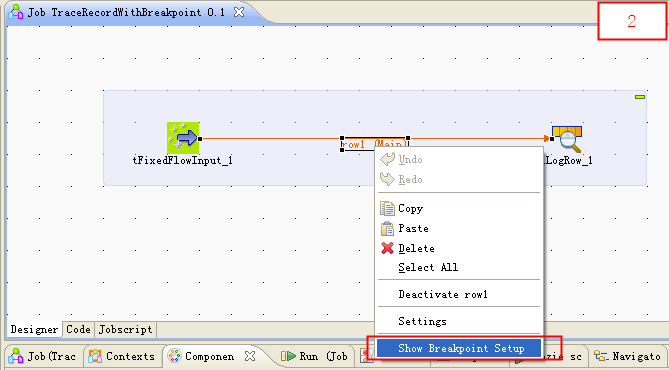

To set a breakpoint on the data flow, proceed as follows:

-

Right-click the connector between two components and select Show Breakpoint Setup.

Note: This feature is available only in Talend Data Integration (on subscription only).

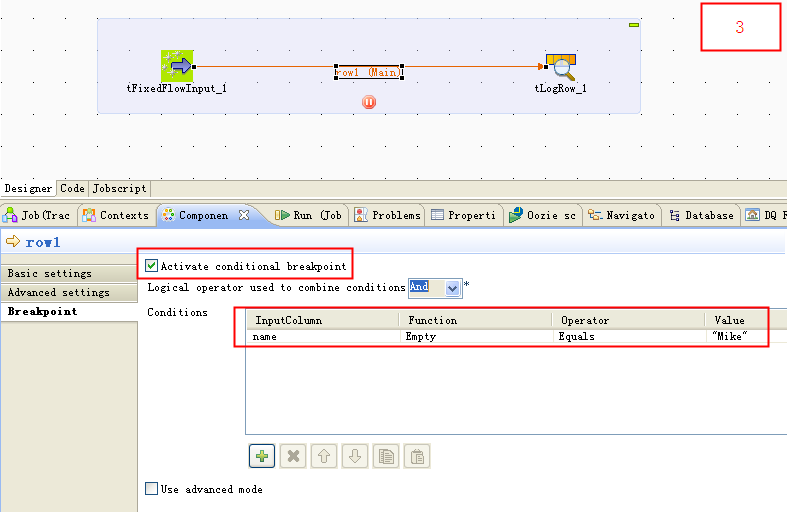

-

In the Breakpoint tab, check Active conditional breakpoint and/or Use advanced mode to set a breakpoint. In this example, if you check Active conditional breakpoint and set a breakpoint in the Condition table, the Job will pause when the value of the Name column equals Mike.

Trace records with breakpoint

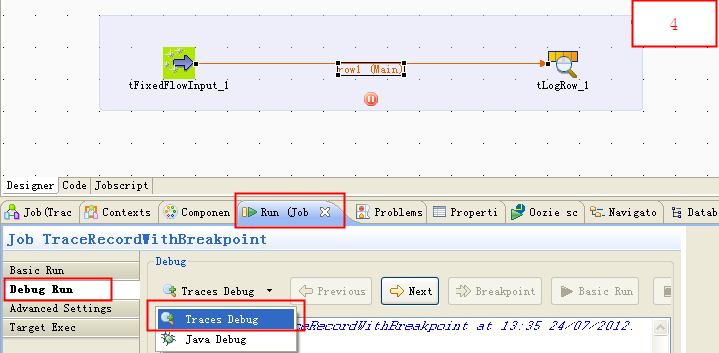

Now run the Job in Traces Debug mode and trace records. Follow these steps:

-

In the Run view, click Debug Run tab, select Traces Debug from the Debug list, and click Traces Debug to run the Job.

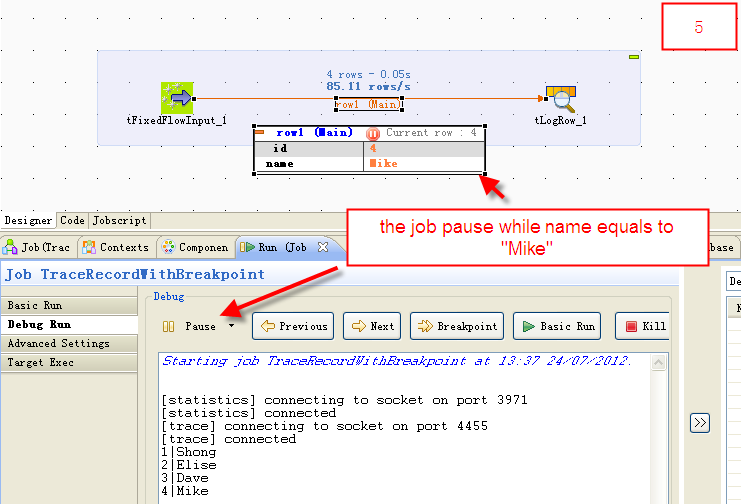

-

As the figure shows, the Job pauses when the value of the Name column equals Mike, when it matches the condition of the breakpoint.

Result

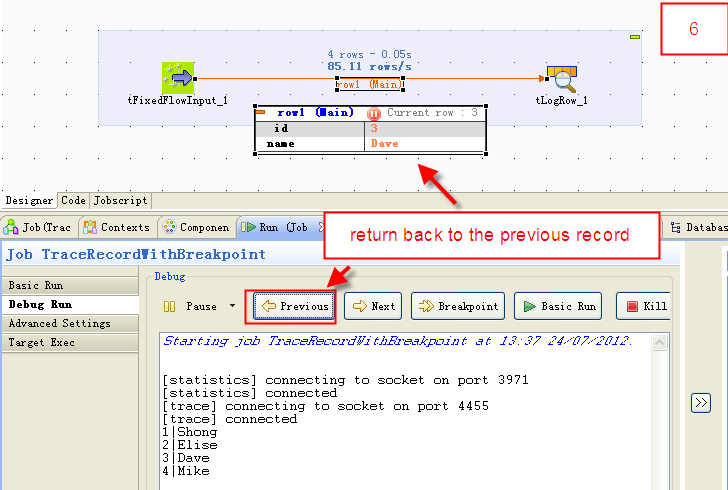

Now you can trace the records by clicking Previous, Next, and Breakpoint.

- Previous: return back to the previous record.

- Next: go to the next record.

- Breakpoint: continue to run and pause until next breakpoint.

- Basic Run: continue to run until ends.

- Kill: kill the Job.

-

-

"HTTP ERROR: 500" in Studio when selecting "Help Contents" from the Help menu

Symptom When Help Contents is selected from the Studio Help menu, the following error appears: HTTP ERROR: 500 Problem accessing /help/index.jsp. Rea... Show More -

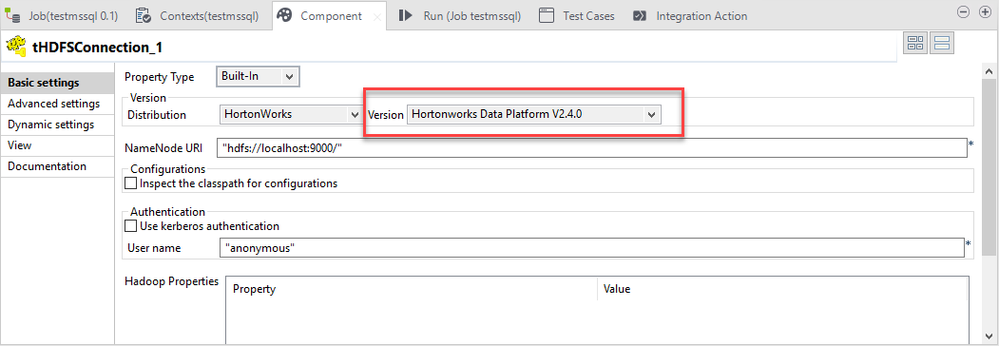

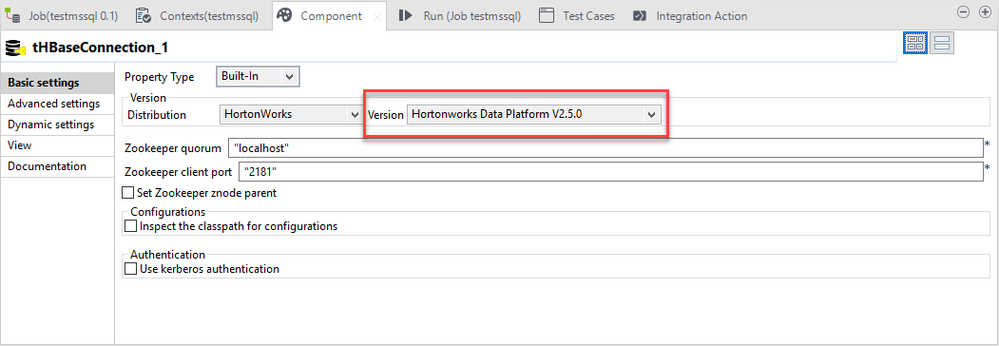

Cannot use tHDFSconnection and tHBASEconnection in the same Job

Symptom Cannot use tHDFSconnection and tHBASEconnection in the same Job. Diagnosis java.io.IOException: java.lang.reflect.InvocationT... Show More -

Executing a Job using a tHiveLoad component, results in a 'SemanticException [Er...

Problem Description When executing a Job with a tHiveLoad component, the following error is observed in the Job log: Error while compiling stat... Show More -

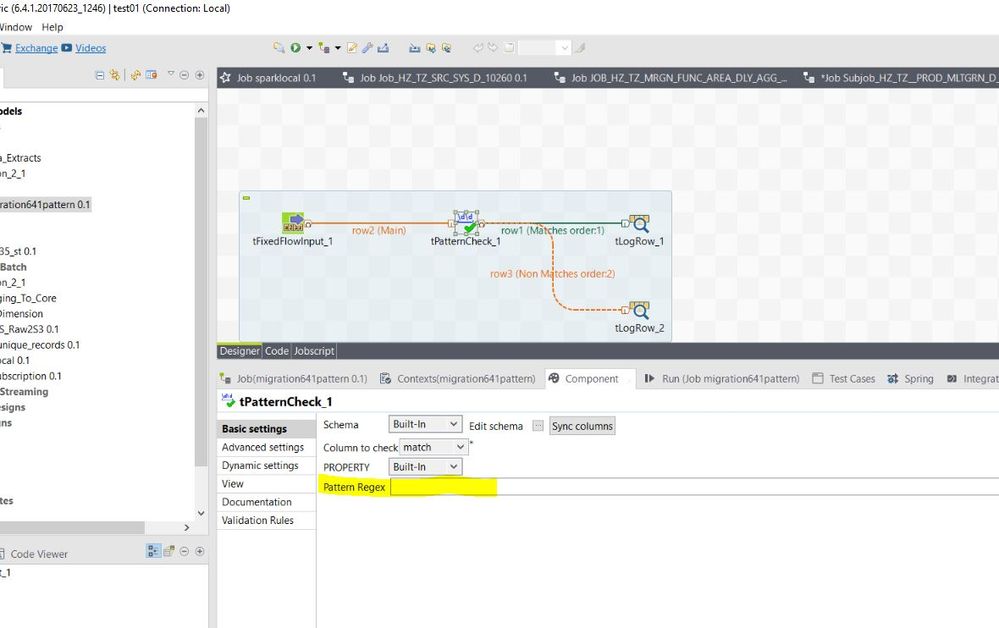

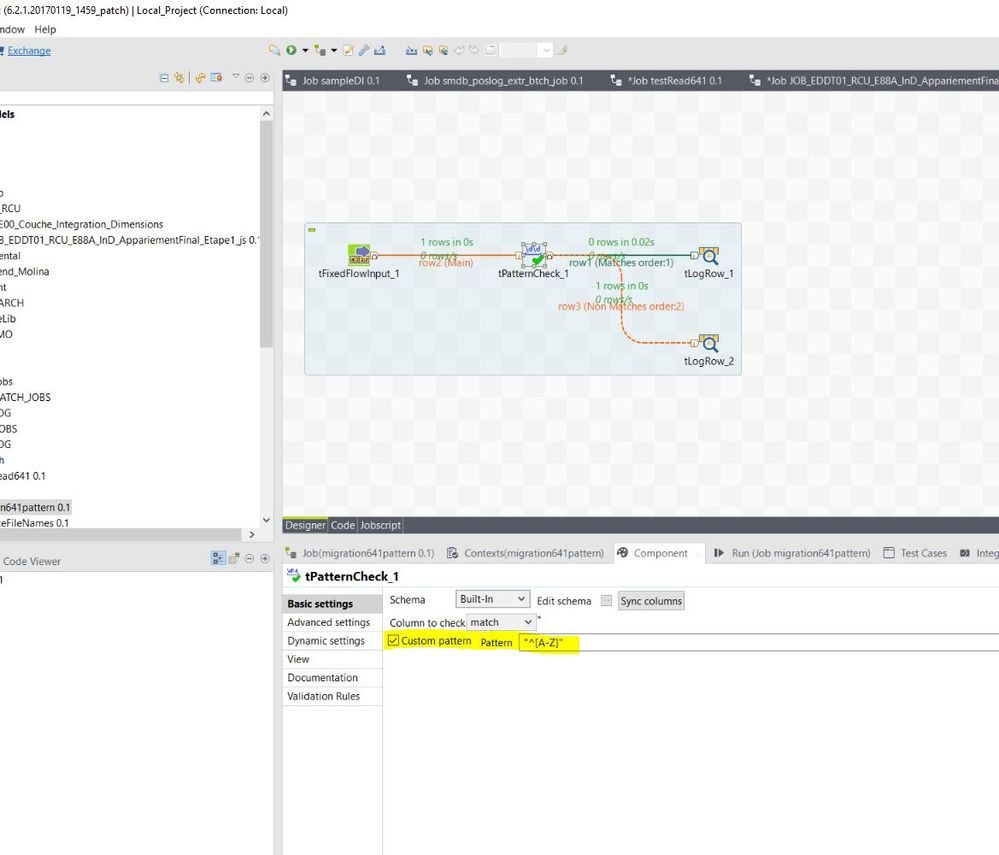

tPatternCheck component loses custom pattern after migrating from 6.2.1 to 6.4.1

Talend Version v6.4.1 Summary The tPatternCheck component loses a custom pattern after migrating from 6.2.1 to 6.4.1.Additional Versions Produ... Show More -

Talend 6.4.1 Platform Installer - Error with 8 or more CPU Windows system

Talend Version (Required) v6.4.1 Summary Issue with running platform installer with 8 CPU or more in Windows systemAdditional Versionsv6.3.1P... Show More -

tESBProviderRequest: 'Port 80 already in use'

Problem Description When running a Job designed with a tEsbProviderRequest component, you may encounter the following exception: Exception in t... Show More -

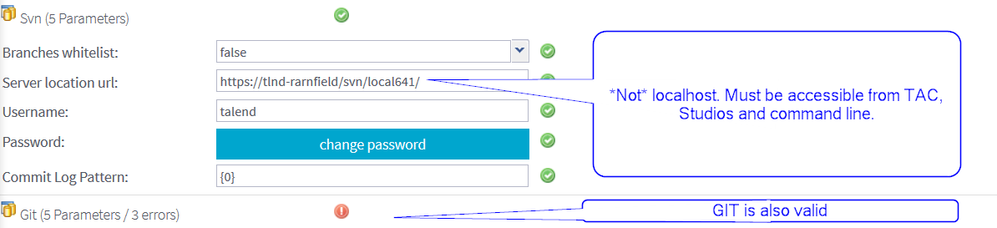

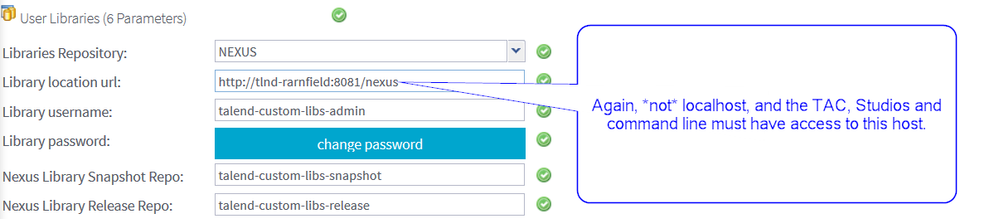

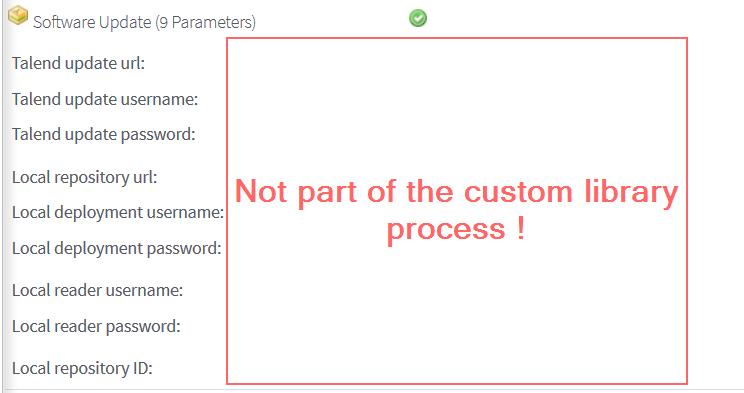

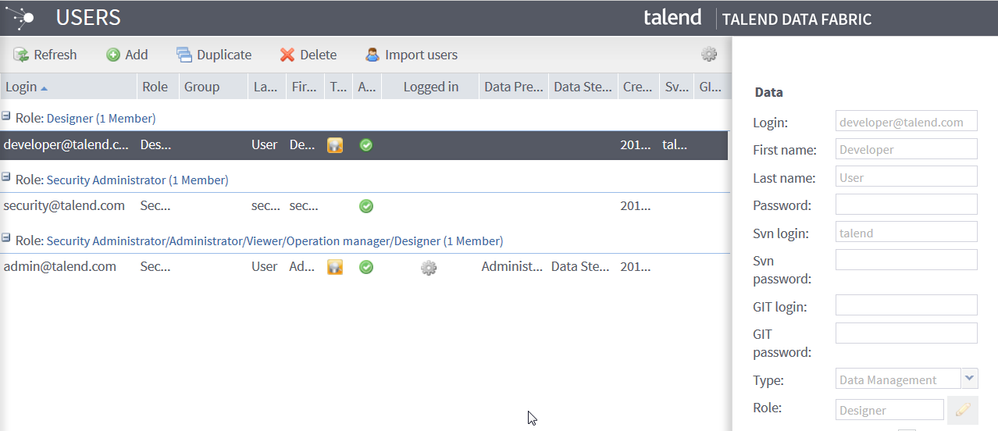

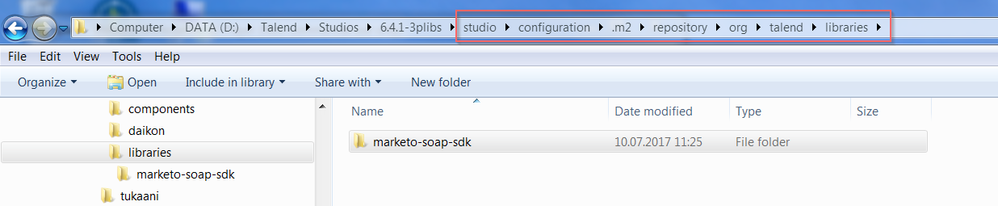

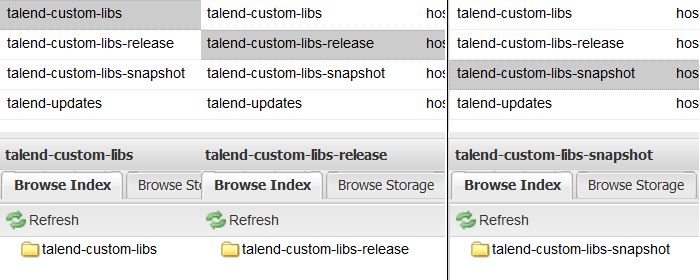

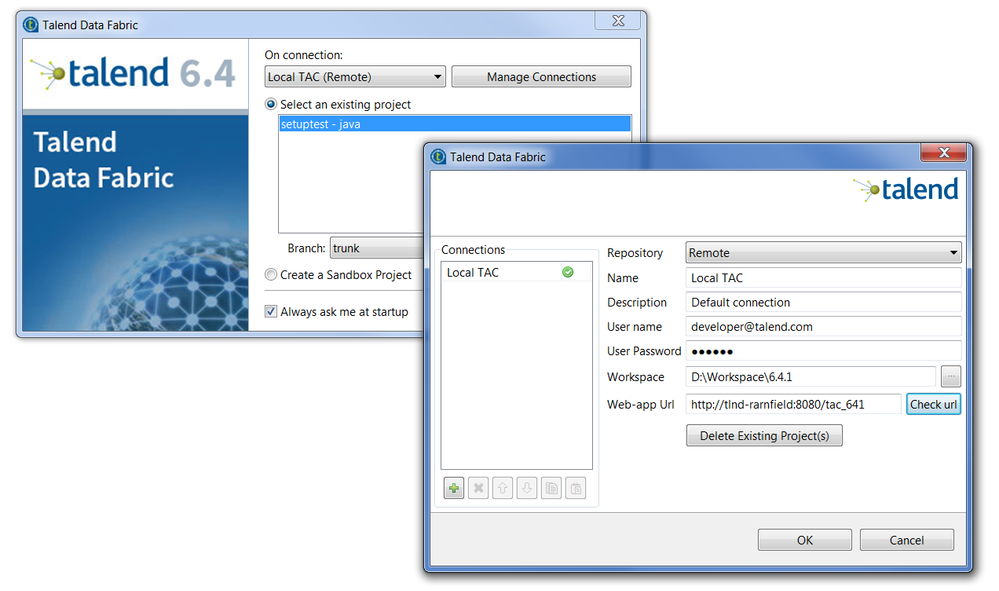

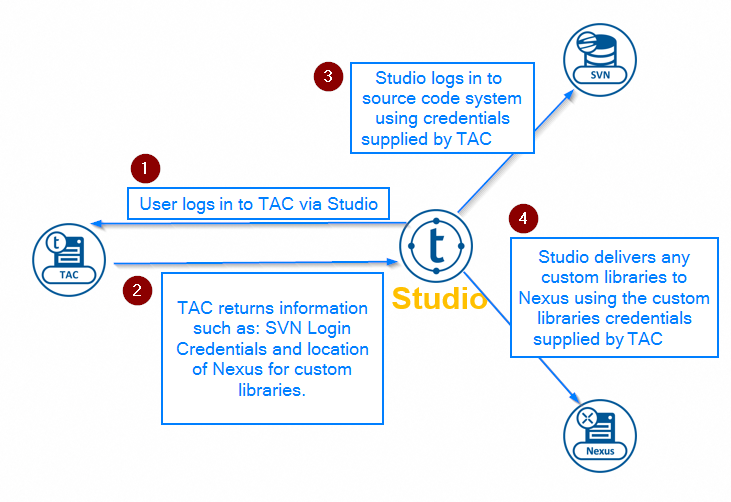

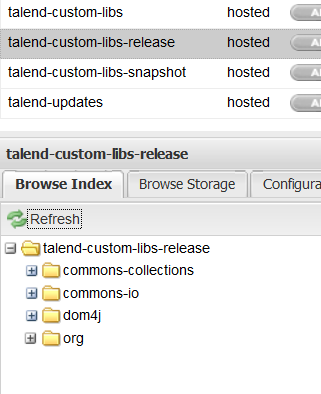

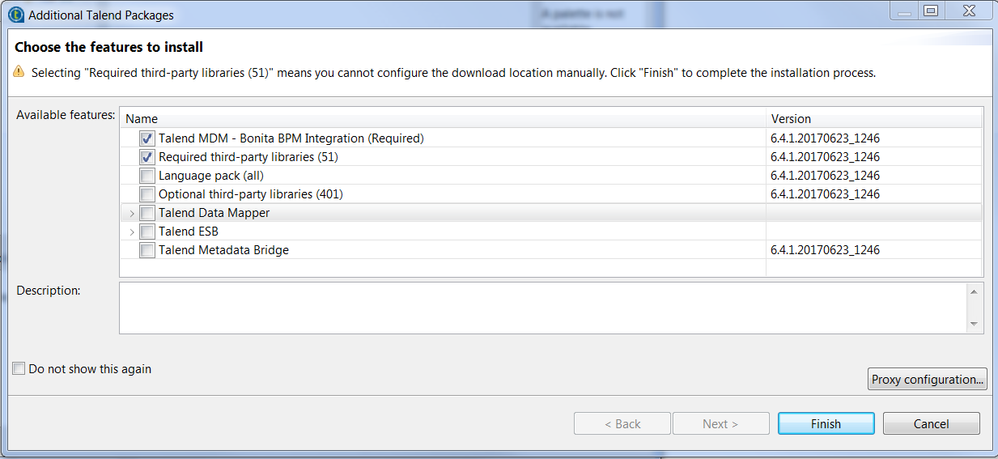

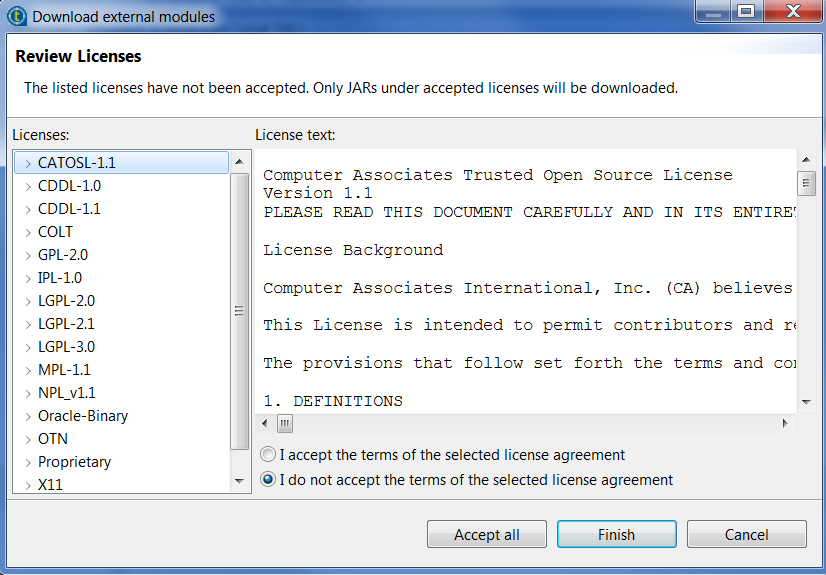

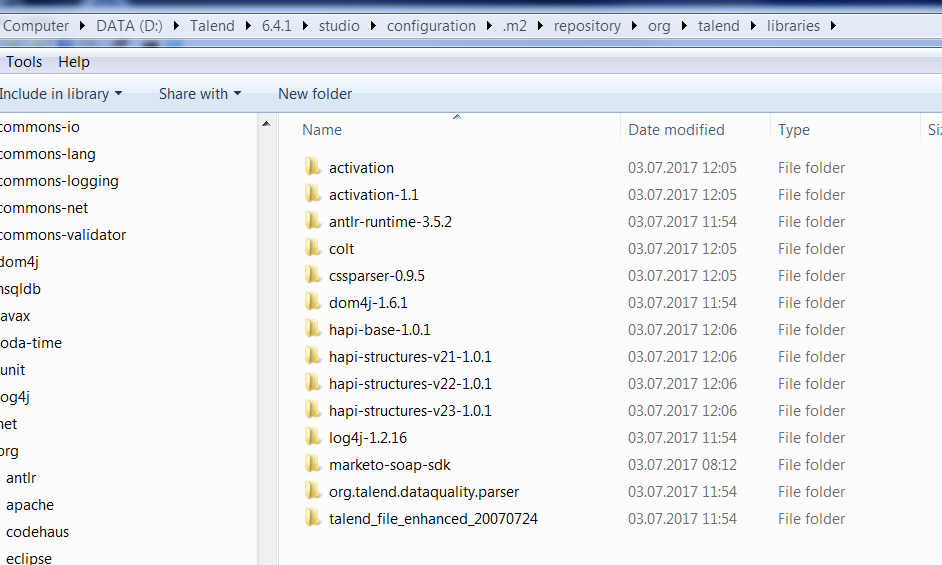

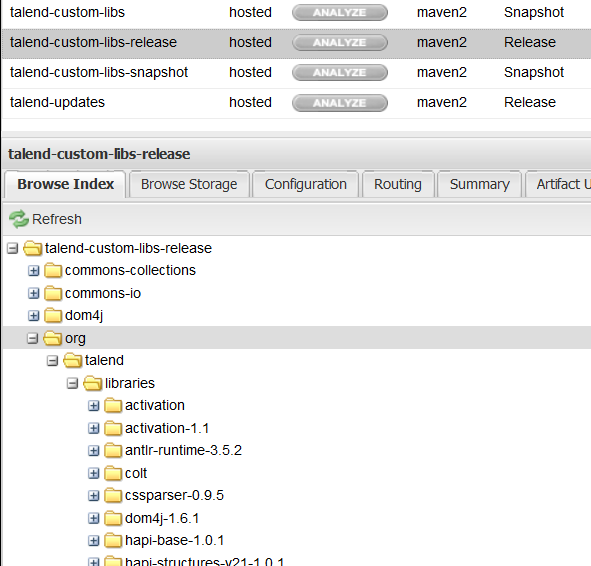

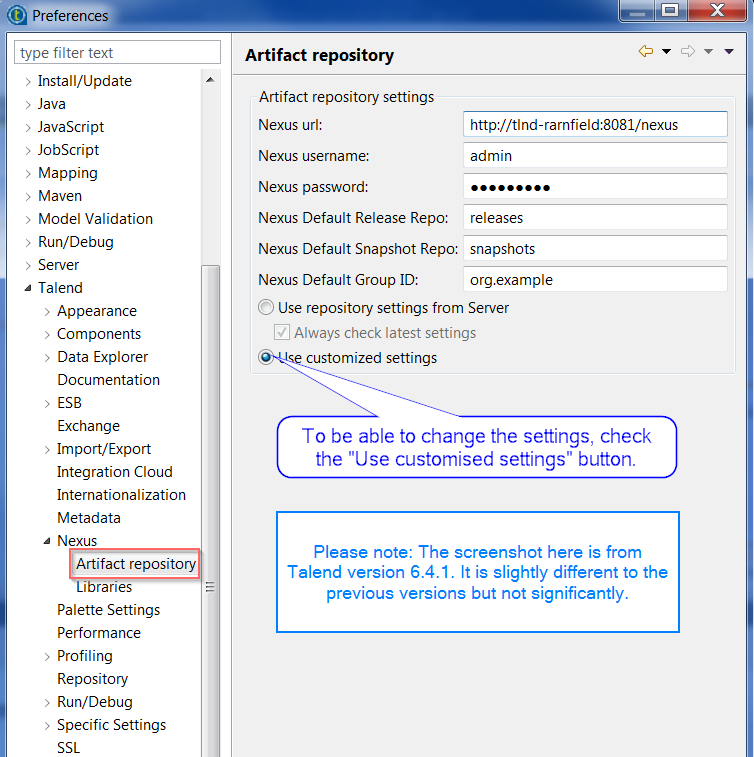

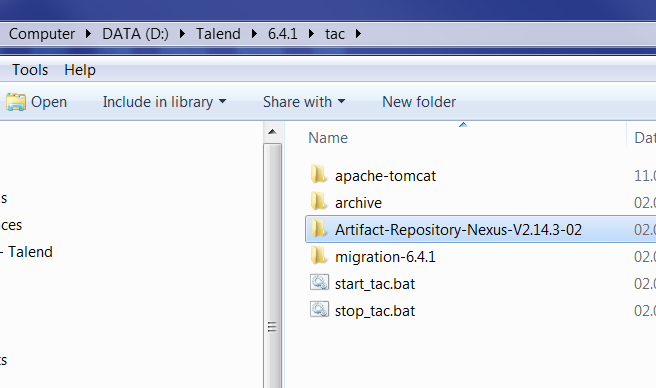

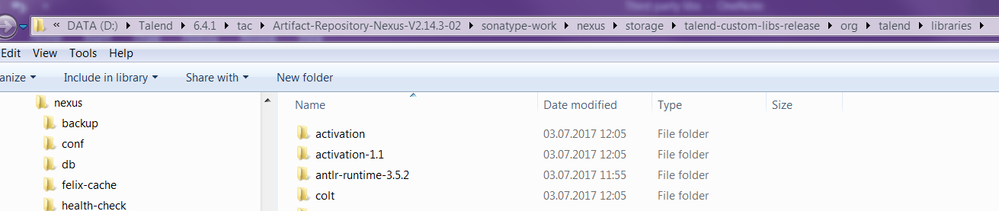

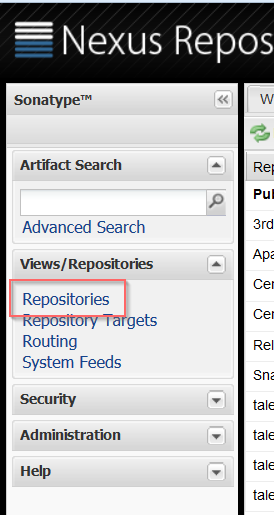

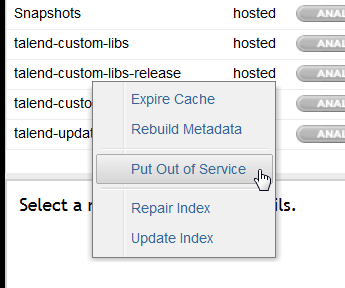

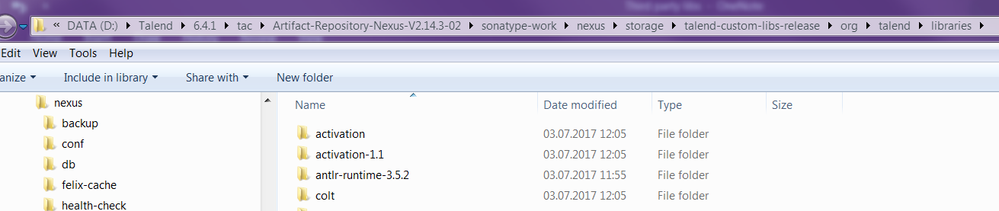

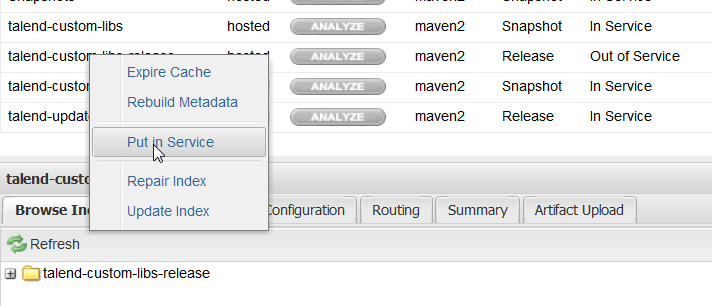

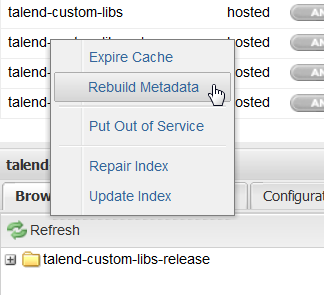

Talend Studio: Custom libraries process

This document describes the process for working with the third-party libraries (custom libraries) used within Talend. Custom libraries are needed for... Show More -

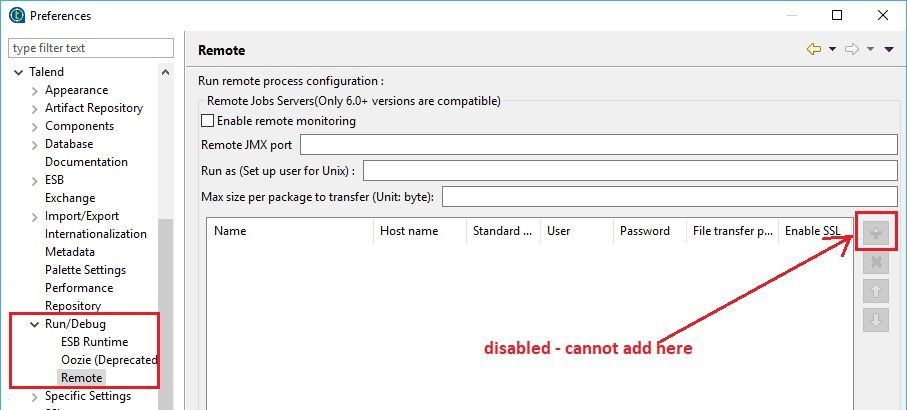

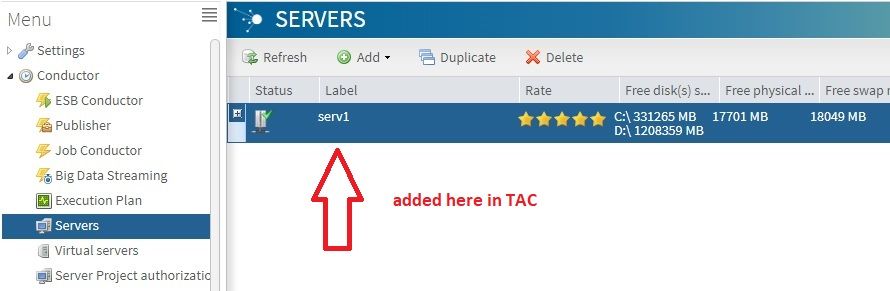

Cannot add a Remote JobServer from Studio

Problem Description The + button is disabled in the Preferences > Talend > Run/Debug > Remote window, and you cannot add a Remote JobServer to ru... Show More -

Jobs migrated to Talend 6.4 from Talend 6.3 or earlier throw a compilation error

Symptoms While running a Job in Talend 6.4, a compilation error occurs. The error pop up contains the error message Routines routine_name has com... Show More -

How to read attachments of an Account module in Salesforce

In Salesforce, Attachment and Account are different modules. An attachment of account means the attachment is a child record of the account. Every ... Show More