Recent Blog Posts

-

Qlik Replicate and an update on Salesforce “Use Any API Client” Permission Depre...

Dear Qlik Replicate customers,

Salesforce announced (October 31st, 2025) that it is postponing the deprecation of the Use Any API Client user permission. See Deprecating "Use Any API Client" User Permission for details.

Qlik will keep OAuth support on the roadmap and plans to deliver it in line with Salesforce's updated timeline.

Salesforce has announced the deprecation of the Use Any API Client user permission. For details, see Deprecating "Use Any API Client" User Permission | help.salesforce.com.

We understand that this is a security-related change, and Qlik is actively addressing it by developing OAuth authentication support for Qlik Replicate. This work is a top priority for our team at present.

If you are affected by this change and have activated access policies relying on this permission, we recommend reaching out to Salesforce to request an extension. We are aware that some customers have successfully obtained an additional month of access.

By the end of this extension period, we expect to have an alternative solution in place using OAuth.

Customers using Qlik Replicate to read data from a Salesforce source should be aware of this change. Thank you for your understanding and cooperation as we work to ensure a smooth transition.

If you have any questions, we're happy to assist. Reply to this blog post or take your queries to our Support Chat.

Thank you for choosing Qlik,

Qlik Support

-

Unlock Your Qlik Superpower

Not sure where to begin your Qlik journey? The Qlik Skills Assessment is a free, easy-to-use tool that helps you quickly evaluate where you are on your Qlik learning journey. Once you complete the assessment, you’ll receive training recommendations designed to strengthen and expand your skills.

We’ve expanded our assessments to include Qlik Data Analytics and Data Integration, with 11 Skills Assessments now available to you.

Why take a Skills Assessment?

- Understand where you are on your Qlik Learning journey

- Get clear guidance on which training to take next

- Measure team skill levels and identify gaps

- Retake assessments to track ongoing progress

- Compare skill levels before and after training

How do I take a Skills Assessment?

- Access the Skills Assessments page. If you’re logged into Qlik Learning, navigate to Topics menu → Skills Assessment.

- Select the Product/Capability Skills Assessment you would like to take.

- Complete the assessment within 30 minutes and receive your results and learning recommendations immediately.

Track your progress

- View your results anytime under My Learning → History.

- Retake assessments to monitor skill growth over time and measure the impact of your training.

Take your Qlik Skills Assessment today to understand where you are on your learning journey—and get the guidance you need to build and expand your Qlik expertise with confidence. 🚀

-

Qlik Sense November 2025 (Client-Managed) now available!

Key Highlights

- Refreshed App Settings Design

The app settings area has been redesigned with a more modern layout and tabbed navigation between categories of settings.

Why this matters:

- Saves time when configuring apps

- Eases onboarding and reduces configuration errors

- Improvements to the Sheet Editing Experience

Several usability upgrades have landed in the sheet-edit experience:

- The source table viewer and filters are now available directly in the sheet edit view, so you can see data tables and fields in context.

- Filters have been added to the properties panel, making building and applying filters to visualizations faster.

Why this matters:

- Makes authoring apps more intuitive and reduces back-and-forth between the data manager and sheet view

- Helps streamline development, speeding time to insight

- New Straight Table — Now the Default

The new straight table object has graduated and is now included under the chart section as the default table visual. The older table object remains accessible in the asset panel for now and its eventual deprecation will be announced well in advance.

Why this matters:

- The new straight table has enhanced usability (such as improved selection, sorting, and performance)

- Encourages migration to the newer, better-supported table, ahead of eventual deprecation

- New Visual Enhancements & Chart Capabilities

Some powerful visual upgrades arrived in this release:

- Indent row setting in the new pivot table: For the new pivot table object, you can now choose “indent row dimensions” for a more compact view (ideal for text-heavy tables).

- Pivot table indicators: The new pivot table also supports icons and colors based on thresholds, enabling quick visual cues on measures.

- Shapes in bar and combo charts: Building on the success of shapes in line charts, you can now add shapes to bar and combo charts, enriching context and visual data literacy.

- Line chart shapes improved: For line charts, labels and symbols (size, color, placement) are now available in the shape options.

- Org chart now supports images and new styling: The org chart object can now include an image via URL, and enhanced styling settings make hierarchy charts look sharper.

- Map chart stability enhancements: Map charts now use a local webmap rather than one from Qlik’s servers resulting in faster load times and improved stability.

Why this matters:

- These visual enhancements offer more expressive, compelling dashboards and reduce friction for authors

- Stability enhancements (e.g., for maps) reduce risk of user-experience issues in production

- Removal of Deprecated Objects

With this release, we are updating our announcement from our Qlik Sense May 2025 release regarding the roadmap for removing deprecated visualization objects. The following deprecated charts are now scheduled to be removed from the Qlik Analytics distribution in May 2027.

- Bar & area

- Bullet chart (old one)

- Heatmap chart

- Button for navigation

- Share button

- Show/hide container

- Container (old one)

In Closing

The November 2025 release for Qlik Sense Enterprise on Windows delivers meaningful improvements across usability, visualizations, and stability. Whether you're an analytics author, a business user, or an administrator/architect, there are advantages to be gained with this release.

As always, we recommend reviewing the full “What’s New” documentation and aligning your upgrade and adoption strategy accordingly.

-

New Feature: Templates

Qlik and Qlik Cloud are always innovating, adding new features to make the user experience even better. Today I would like to tell you about Qlik’s newest feature: Templates. Templates, a new Qlik Cloud feature, appear whenever you create a new sheet.

To use Templates, open any Qlik Cloud app and click ‘Sheets’, then ‘Create new sheet’.

There you will be greeted by the new Templates feature. If you don’t want to see this screen every time you create a new sheet, simply clear the check box next to ‘Show when creating a sheet’.

Templates are organized into several categories to make them easier to browse. The sheer number of available templates can feel a bit overwhelming, but if you find yourself using a template often, click the star next to it to add it to your ‘Favorites’.

Additionally, if you would like the freedom to create your own sheet, without a template, you can simply select the ‘Empty sheet’ option.

Using a template is easy!

Let’s look at ‘Charts with filters on the side’, one of the templates in the Highlights section. With a single click, my new sheet is created with placeholders for the various charts.

From here I can begin creating my sheet. As we can see, charts have been added to the sheet, including a Straight table at the bottom, KPIs at the top, and Bar charts in the middle with our Filter Panes to the side. Of course, I have the freedom to add, delete and change these charts as I see fit.

Then we add a bit of color and some spacing.

And we have a finished sheet! Of course there is still so much more we could do with this sheet to customize it to our needs, but it’s a start!

There are many templates you can use to help create your sheets. Try the new Templates feature, and let us know in the comments which template you think will be most useful. Thank you for reading!

-

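[Tuesday, January 27, 2026, 15:00] Shaping the Future of AI: New Value Created When Data, Agents, and People Team Up

2026 Trends: Raising the Return on AI Investment

Many companies are investing in AI, yet only a handful are increasing their return on that investment. What needs to change?

For decades, companies have swung like a pendulum: loosening control when moving forward and tightening discipline when pulling back. The data strategy model that succeeds in 2026 is not an either-or choice. What matters is combining control and innovation to create new value.

In the webinar "Shaping the Future of AI: New Value Created When Data, Agents, and People Team Up" on Tuesday, January 27, Dan Sommer, Qlik's Market Intelligence Lead, and Charlie Farah, Chief Technology Officer for Analytics and AI at Qlik APAC, will walk through the key trends for 2026.

The webinar covers three key points you need to get right to drive business success. Applying them helps you ensure data integrity, connect all your systems seamlessly, and drive business innovation. It also explores the trends underpinning this new model and explains how to take your data strategy to the next level.

How can you break down silos and build a unified foundation without swinging to one extreme or the other? Join the webinar to learn why adopting a new model is key to getting the most out of AI.

* Free to attend. The session is delivered with Japanese subtitles. You can join and watch from anywhere on a PC, tablet, or smartphone.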

-

Dynamic Engine Now Supports Google Kubernetes Engine: Deploy Anywhere, Scale Eve...

V1 milestone reinforces Dynamic Engine as the most versatile, cloud-agnostic execution runtime for enterprise data integration and API workloads.

Multi-Cloud by Design: Meeting Industries Where They Are

Different industries have distinct cloud preferences, driven by existing vendor relationships, regulatory requirements, and regional data residency mandates:

- Financial Services & Healthcare often rely on Azure for compliance-ready environments and tight integration with Microsoft enterprise ecosystems.

- Retail, Media & E-commerce frequently leverage AWS for its mature services catalog, global reach, and scale.

- Technology & SaaS companies increasingly adopt Google Cloud (GKE) for its cutting-edge AI/ML capabilities, data analytics tools (BigQuery), and developer-first infrastructure.

- Manufacturing & Government may deploy on-premises or hybrid environments for data sovereignty, air-gapped scenarios, or legacy system integration.

With Dynamic Engine, you can now deploy a unified data integration runtime across all these environments, using the same orchestration, monitoring, and management tools via Talend Management Console (TMC).

GKE Support: Cloud-Native Power Meets Google Innovation

Dynamic Engine on Google Kubernetes Engine (GKE) brings the same enterprise-grade capabilities customers already enjoy on AWS and Azure, now optimized for Google Cloud infrastructure:

- Conformance with GKE standards: Dynamic Engine adheres to GKE-specific requirements and best practices.

- Multi-version compatibility: Supports Kubernetes versions 1.30 through 1.34, with Dynamic Engine versions 0.22.x through 1.0.x.

- Work-in-progress: GKE-Autopilot and EKS Auto-mode are on the roadmap, further simplifying cluster management.

| Kubernetes version | Compatible Dynamic Engine versions |
|---|---|
| 1.30 | 0.23, 0.24, 1.0 |
| 1.31 | 0.23, 0.24, 1.0 |
| 1.32 | 0.23, 0.24, 1.0 |
| 1.33 | 0.24, 1.0 |
| 1.34 | 0.24, 1.0 |
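If you want to check this matrix automatically, for example as a pre-flight step in a deployment pipeline, the Python sketch below encodes the table rows as a lookup. It is not a Qlik tool: the helper names are hypothetical, and it knows only the pairs listed in the table (which start at Dynamic Engine 0.23), so treat the official guide as the source of truth.

```python
"""Look up Kubernetes / Dynamic Engine version pairs in the GKE compatibility table.

Hypothetical helper for illustration; it only knows the rows listed in this post.
"""
import re

# Kubernetes minor version -> Dynamic Engine minor versions listed as compatible.
GKE_COMPATIBILITY: dict[str, frozenset[str]] = {
    "1.30": frozenset({"0.23", "0.24", "1.0"}),
    "1.31": frozenset({"0.23", "0.24", "1.0"}),
    "1.32": frozenset({"0.23", "0.24", "1.0"}),
    "1.33": frozenset({"0.24", "1.0"}),
    "1.34": frozenset({"0.24", "1.0"}),
}

# Matches the leading "major.minor" of strings like "1.32", "1.0.2", "v1.33.5-gke.1080000".
_VERSION = re.compile(r"v?(\d+)\.(\d+)")


def minor(version: str) -> str:
    """Reduce a full version string to 'major.minor'."""
    match = _VERSION.match(version.strip())
    if match is None:
        raise ValueError(f"not a version string: {version!r}")
    return f"{match.group(1)}.{match.group(2)}"


def is_supported(kubernetes_version: str, dynamic_engine_version: str) -> bool:
    """Return True if the pair appears in the table; unknown versions return False."""
    allowed = GKE_COMPATIBILITY.get(minor(kubernetes_version), frozenset())
    return minor(dynamic_engine_version) in allowed


if __name__ == "__main__":
    print(is_supported("v1.33.5-gke.1080000", "1.0.2"))  # True
    print(is_supported("1.34", "0.23.1"))                 # False: 0.23 is not listed for 1.34
```

Comparing only major.minor mirrors how the table is written; patch releases are assumed to follow their minor version.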

For detailed setup instructions, see our official guide: Configuring Google Kubernetes Engine.

Helm: Effortless Deployment, Enterprise-Grade Customization

One of Dynamic Engine's standout features is its native Helm support, which dramatically simplifies deployment while offering deep customization for enterprise DevOps teams.

Why Helm Matters

Helm transforms Dynamic Engine deployment into a repeatable, version-controlled, GitOps-ready process:

- One-command deployment: Deploy engine instances and environments with simple helm install commands.

- Custom namespaces: Define your own namespace conventions to align with internal Kubernetes governance.

- Custom registries & air-gap support: Deploy in fully disconnected environments using your own container registries.

- HTTP proxy configuration: Integrate seamlessly with corporate proxies for secure, compliant deployments.

- Reusable configurations: Store and version your values.yaml files alongside infrastructure-as-code.

Helm charts for Dynamic Engine are publicly available and include:

- Core custom resource definitions

- Engine instance chart

- Environment-specific chart

For complete guidance, see: Recommended Helm Deployment.

Air-Gap Ready: Secure Deployments for Regulated Environments

For organizations operating in highly secure, air-gapped, or disconnected environments (common in defense, government, financial services, and healthcare), Dynamic Engine offers full support for:

- Custom Docker registries: Pull engine images from your own internal registry (e.g., Artifactory, Harbor, or private GCR/ECR/ACR).

- Private Helm chart repositories: Host and serve Helm charts internally.

- HTTP/HTTPS proxy support: Route traffic through corporate gateways with full TLS/mTLS support.
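Pulling images from an internal registry usually means mirroring them and rewriting each public image reference to point at the mirror. The Python sketch below shows that rewrite using the common Docker naming convention. It is a generic illustration, not part of Dynamic Engine; the registry host and image names are hypothetical, and how the rewritten references are passed to the Dynamic Engine Helm charts is described in Qlik's documentation rather than assumed here.

```python
"""Rewrite public container image references to an internal mirror registry.

Generic sketch for air-gapped installs; all names below are hypothetical.
"""


def split_registry(image: str) -> tuple[str, str]:
    """Split 'registry/path:tag' into (registry, 'path:tag').

    Docker convention: the first path component is a registry host only if it
    contains '.' or ':' or is 'localhost'; otherwise the image is on Docker Hub.
    """
    first, sep, rest = image.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        return first, rest
    if "/" not in image:
        # Official Docker Hub images live under the 'library/' namespace.
        return "docker.io", f"library/{image}"
    return "docker.io", image


def retarget_image(image: str, private_registry: str) -> str:
    """Point an image reference at private_registry, keeping its path and tag or digest."""
    _, path = split_registry(image)
    return f"{private_registry.rstrip('/')}/{path}"


if __name__ == "__main__":
    mirror = "harbor.internal.example:8443/qlik-mirror"  # hypothetical internal registry
    for ref in ["nginx:1.27", "ghcr.io/example-org/engine:1.0.2"]:
        print(ref, "->", retarget_image(ref, mirror))
```

Keeping the original repository path under the mirror prefix makes it easy to trace each mirrored image back to its source.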

This makes Dynamic Engine one of the few enterprise data integration platforms that can operate in zero-trust, fully isolated network environments.

DevSecOps-Ready: Advanced Customization for Enterprise Standards

Dynamic Engine goes far beyond basic deployment, offering a rich set of enterprise customization capabilities:

- Signed artifacts for DevOps security: Verify the integrity of job artifacts using custom keystores, ensuring only trusted code runs in production.

- Custom namespaces: Align with your Kubernetes governance and multi-tenancy strategy.

- Custom StorageClass: Specify persistent volume configurations optimized for your infrastructure (e.g., SSD-backed volumes for high-throughput workloads).

- Custom trust stores: Securely connect to external services (databases, APIs, LDAP) using enterprise certificate authorities.

- Log management: Optionally deactivate log transfer to TMC for full on-prem log retention and compliance.

For advanced configurations, explore: Additional Customization with DevSecOps.

Frictionless Upgrades: Zero Downtime, Always

One of Dynamic Engine's most compelling features is its built-in, no-downtime upgrade mechanism.

How It Works

- Single version per release: Each monthly release includes one consolidated Dynamic Engine version, simplifying lifecycle management.

- Simple wizard in TMC: Pick a new version, download updated manifests or Helm charts, and apply - no complex migration scripts.

- GitOps-friendly: Use helm upgrade with --reuse-values to preserve customizations while applying new versions.

- No impact on running jobs: During upgrades, active tasks continue execution without interruption - critical for 24/7 production environments.
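For the GitOps-style upgrade described above, a CI job typically assembles a `helm upgrade` command. The Python sketch below builds one; `--version`, `--namespace`, `--reuse-values`, and `--values` are standard Helm flags, but the release name, chart reference, chart version, and namespace are hypothetical placeholders. Use the values from the Upgrading Dynamic Engine Version guide for a real cluster.

```python
import shlex
import subprocess
from collections.abc import Sequence


def build_upgrade_command(
    release: str,
    chart: str,
    version: str,
    namespace: str,
    values_files: Sequence[str] = (),
) -> list[str]:
    """Build a `helm upgrade` argv that keeps previously supplied values.

    --reuse-values carries over the values from the last release, so existing
    customizations survive; any values files passed here are layered on top.
    """
    cmd = [
        "helm", "upgrade", release, chart,
        "--version", version,
        "--namespace", namespace,
        "--reuse-values",
    ]
    for path in values_files:
        cmd += ["--values", path]
    return cmd


def run_upgrade(cmd: list[str], dry_run: bool = True) -> None:
    """Print the command; only execute it when dry_run is False."""
    print(shlex.join(cmd))
    if not dry_run:
        subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Hypothetical release name, chart reference, chart version, and namespace.
    cmd = build_upgrade_command(
        release="dynamic-engine-instance",
        chart="my-repo/dynamic-engine-instance",
        version="1.0.1",
        namespace="qlik-dynamic-engine",
        values_files=["values-prod.yaml"],
    )
    run_upgrade(cmd)  # dry run: prints the command only
```

Printing by default keeps the sketch safe to run; pass `dry_run=False` only after reviewing the generated command.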

Learn more: Upgrading Dynamic Engine Version.

The Bottom Line: Cloud Freedom Without Compromise

With Google Kubernetes Engine support, Helm-based deployment, air-gap readiness, and zero-downtime upgrades, Dynamic Engine delivers:

- True cloud portability: Deploy on AWS, Azure, Google Cloud, or on-premises with the same codebase and management experience.

- Industry-aligned flexibility: Meet your organization where it is - whether you're cloud-first, hybrid, or air-gapped.

- Enterprise DevOps practices: GitOps, IaC, CI/CD, custom registries, and advanced security - all supported out of the box.

- Operational simplicity: No more patching downtime, no more manual upgrade scripts, no more vendor lock-in.

Dynamic Engine isn't just a runtime - it's a future-proof platform for data integration at enterprise scale, across any cloud, any Kubernetes distribution, and any regulatory environment.

Resources & Documentation

- Dynamic Engine Configuration Guide

- Configuring Google Kubernetes Engine

- Recommended Helm Deployment

- Upgrading Dynamic Engine

- Custom Namespace Setup

- Dynamic Engine Prerequisites

Ready to deploy Dynamic Engine on GKE, or take your existing AWS/Azure deployments to the next level with Helm customization? Reach out to your Qlik Talend account team or explore our documentation to get started today.

-

Write Table now available in Qlik Cloud Analytics

Turn insights into action... right inside your analytics!

As we wrap up the year and before everyone starts tying bows on projects, dashboards and wish lists, we wanted to add one more gift to the pile. Something that helps you move from insights to action faster, collaborate more easily, and keep your workflows flowing straight into the new year.

Unwrap Write Table, now available in Qlik Cloud Analytics.

Write Table is included with Premium, Enterprise, and Enterprise SaaS subscriptions. No add-ons or extra licensing required. You can find it in the Chart Library now.

A More Interactive Analytics Experience

Write Table introduces a new editable table chart that lets you update data, add context, validate decisions, and collaborate directly within your analytics apps. No reloads. No switching systems.

Just click, update, and watch your changes sync instantly across active sessions.

And because it’s built natively into Qlik Cloud Analytics, it seamlessly integrates into your existing workflows, transforming your apps into actionable workspaces.

"Probably the most awaited feature for customers who want to turn insights into action. Write table closes the gap between analytics and transaction applications, offering a compelling solution for those who always want to take it a step further. "

— Henri Rufin, Head of Data & Analytics, Radial

From Decisions to Automation

Now you can capture decisions and act on them.

With real-time syncing and change tracking, every update you make in a Write Table is stored in a Qlik-managed database called a “change store” that can be exported and connected into Qlik Automate workflows. That means approvals, changes, or comments made in an app can instantly trigger downstream processes.

From updating operational records... to routing approvals... to notifying teams... to pushing changes into your operational systems...

Write Table helps you move smoothly from insight -> decision -> action in one place.

“The new Write Table is a game-changing addition to Qlik's data and analytics solution. It allows our users to interact with the data, add context to their insights and collaborate effortlessly within the same trusted end-to-end platform. We're going to use it for everything, from updating data easily to seamlessly integrating with third-party tools using Qlik Automate workflows.”

— Sebastian Björkqvist, Solution Lead, Fellowmind

Key Highlights

- Editable table chart for updating values, adding comments, and capturing decisions in the Chart Library

- Real-time sync across active sessions, without reloads

- Qlik-managed change store for secure update handling

- Integration with Qlik Automate to trigger downstream workflows and orchestrate actions

- Native to Qlik Cloud Analytics, included for Premium, Enterprise and Enterprise SaaS subscriptions

Learn more

- Write Table Product Tour

- What’s New in Qlik Cloud | Qlik Help

- Write Table | Qlik Help

- Write Table - SaaS in 60 | Video

- Write Table FAQ | Support Article

-

In a Consolidating Market, Data Integration Determines Your Competitive Edge (Qlik Blog translation)

Blog author: Drew Clarke

This blog is a translation of "In a Consolidating Market, Data Integration Is Your Control Point."

Gartner has once again named Qlik a Leader in the Gartner® Magic Quadrant for Data Integration Tools, making this the tenth consecutive year Qlik has earned that recognition. Over those ten years, the data integration landscape has changed dramatically. Hyperscale cloud providers have grown in influence, major vendors have tightened lock-in, and acquisitions are reshaping the very choices available to customers.

As the market consolidates, what CIOs and chief data officers need to weigh is shifting. The advancement and continuity of your own data and AI strategy now matter more than a vendor's position in the Magic Quadrant. Deloitte's global CIO survey points the same way: as more work runs on cloud and AI, avoiding vendor dependency and preserving architectural flexibility have become more important than ever.

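Real-time, hybrid, and open

High-performance CDC (change data capture) and replication

Qlik's log-based change data capture and replication are rated among the best in the market. They provide reliable, real-time integration across every environment, including databases, mainframes, and the cloud. This makes zero-downtime data migration to hybrid cloud possible and supports real-time risk management and operations.

Large-scale data movement and transformation

Gartner also rates highly the performance of Qlik's data movement and transformation capabilities for large data volumes and batch processing. What counts here is running reliably as load grows and keeping unexpected problems in production to a minimum. That lets teams focus on creating new value with AI and analytics instead of being tied down by day-to-day maintenance.

Broad connectors for hybrid and multicloud

Qlik's wide range of connectors and its support across on-premises, cloud, and lakehouse environments may look like minor features or fine print, but in practice they underpin operational flexibility. You can run some workloads on-premises and others on AWS, Azure, or Google Cloud. And adopting a new data format (such as Apache Iceberg) doesn't require redesigning your existing integration strategy.

Governance, metadata management, and AI readiness

Gartner rates Qlik's metadata management and governance as above the market average, covering lineage across entire data pipelines, policy enforcement, and visibility into data flows. As AI takes on more critical business work and regulators scrutinize where data comes from and how it is used, these capabilities are no longer optional; they are essential.

The patented Qlik Trust Score for AI, also used for AI-assisted pipeline design and quality checks, runs on top of this metadata management and governance foundation. Only data that can be governed can be automated and trust-scored.

Lakehouse and AI, without giving up operational flexibility

Qlik's work on its open lakehouse and on Apache Iceberg ingestion, compaction, and hybrid replication is recognized as evidence of a forward-looking lakehouse strategy. Because Iceberg is an open table format that lets multiple processing engines share the same data, it pays off in engineering, cost efficiency, and risk management alike.

Land data once in a managed Iceberg table, and it is immediately available to data warehouses, AI, and analytics tools, with no need to create multiple complex, costly copies. Combine trusted data products, an open lakehouse layer, and AI-assisted integration, and using AI on your data becomes second nature rather than a separate special project.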

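Independence in a consolidating market

It is an easy line to overlook in the Magic Quadrant, but Qlik remains independent and continues to offer an open platform built for hybrid environments.

If your data integration platform is owned by a CRM vendor or a major cloud provider, that platform will inevitably be designed around the owner's interests. Its roadmap, pricing, features, and the scope of its data connectivity are all affected.

An independent integration platform, by contrast, preserves your freedom of choice and your negotiating leverage. You can mix clouds and data warehouses freely, negotiate terms based on value, and move as performance, cost, or regulations change. That is the freedom I want to emphasize: being able to design the data fabric and AI environment your business needs, and to adapt it as circumstances change without rebuilding from scratch.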

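Summary

Setting the Magic Quadrant graphic aside, this year's report sends a simple message. Real-time capability, hybrid environments, and open architecture are now baseline requirements. Governance, metadata management, and AI readiness are becoming key decision criteria. And even as the market consolidates, independence and flexibility matter as much as ever.

This Magic Quadrant evaluation shows that Qlik is heading in the right direction. It recognizes a consistent body of work across CDC, large-scale data movement, connectors, governance, lakehouse, and AI-assisted integration.

What data leaders care about comes down to a few simple questions:

- Can you move data freely between clouds, data warehouses, and core systems when you need to, without starting over every time?

- Can you prove that your data is ready for AI and for regulators, with clear lineage, policy enforcement, and trust metrics?

- Are you running a single, unified data foundation, or a patchwork of separate tools? And how does that affect cost, risk, and speed?

Qlik Talend Cloud, the Qlik Open Lakehouse, and our AI-assisted integration approach are built to answer exactly these questions.

We are proud to have been named a Leader in data integration tools for ten consecutive years, but what really matters is that you experience the freedom and control the platform gives you when you actually use it.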

-

[New report] Qlik named a Leader in data integration tools for the 10th consecutive year!

Gartner has published the 2025 Gartner® Magic Quadrant for Data Integration Tools. Of the 20 data integration vendors Gartner evaluated, Qlik was named a Leader for the 10th consecutive year.

Data is moving faster and across more systems than ever, and it has become the linchpin of AI-driven decision-making. A trusted data integration foundation doesn't just support this shift; it has become critical.

How do you choose the right platform for your organization? Get the details in the report:

- Gartner's insights into the data integration market

- Why Qlik was named a Leader

- How each vendor in the data integration market was evaluated

-

Some of the best Qlik Sense extensions created so far

Since the release of Sense back in September 2014, a lot of good things have happened to the product. Looking back, it’s hard to believe we launched Sense just 6 months ago. Since then, our R&D guys and girls have added quite a lot of improvements and new functionality to the product, and more is coming.

Today I don’t want to focus on the company’s centralized development (we talk enough about ourselves here), but on the decentralized Sense development guerrilla out there. Since January 26, these individuals, who bring a fresh view to Qlik Sense (and to QlikView as well), have had a place to share their ideas and open source projects. It’s called Qlik Branch, and it’s open for everyone to join.

Of all the projects submitted to Qlik Branch so far, here are 3 of my favorites.

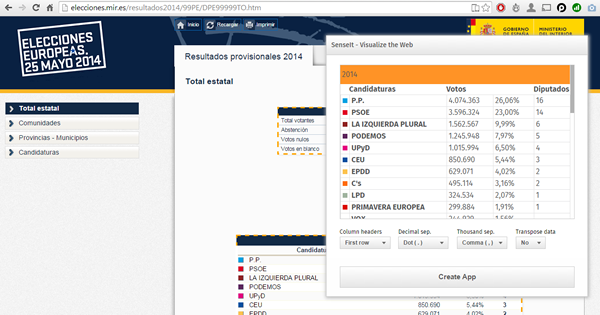

SenseIt by Alex Karlsson

It tops my personal list for a variety of reasons, but particularly because it opens a completely new and unexplored category for extensions. We are used to seeing extensions (or visualizations) within the product itself, but this is something completely different. SenseIt is a browser extension that lets you create a new app on the fly by capturing a table in Chrome and loading it as data into Qlik Sense Desktop. A truly amazing experience, and the name is cool too (isn’t it?).

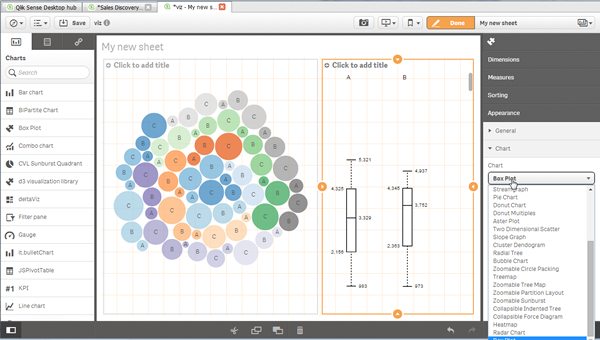

D3 Visualization Library by Speros Kokenes

As the visualization junkie I am, I love D3.js and some of the beautiful, smart visualizations built around this popular JavaScript library. I have seen (and ported) some of those charts to Sense one by one, so you end up with a packed chart library in your Sense Desktop. Speros has gone a step further by turning the visualization object into a true D3.js library where you can pick your favorite D3.js visualization; very entertaining. Future releases might add control over chart colors and other cool features that would make this extension superb. Remember, you can contribute and make it even better.

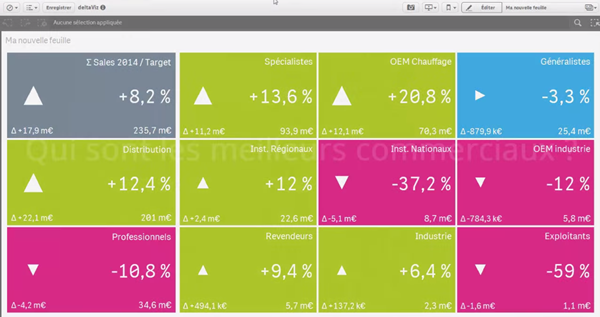

deltaViz self-service dashboard by Yves Blake

For those of us who love QlikView, any addition to the dashboard world in Sense is always very much appreciated. When it is as well designed and executed as deltaViz, there’s no reason not to try it. deltaViz is a complete solution for comparison-focused dashboards and is well implemented to take advantage of the Qlik Sense grid system. If you still have doubts about this visualization, you can see it live here: https://www.youtube.com/watch?v=4s30AEf4qJc

These are my top 3 favorite extensions/visualizations created so far, but what are yours?

AMZ

-

Celebrating a Year of Transformative Learning: Wrapping Up 2025 with the Qlik Ac...

Throughout 2025, the Qlik Academic Program has continued to empower students and educators worldwide by providing free access to our industry-leading data analytics tools. From Qlik Sense to advanced data visualization techniques, we’ve helped thousands of learners gain the skills they need to excel in a data-driven world. Our mission remains the same: to transform education by making analytics accessible, engaging, and practical.

This year, we’ve seen particularly exciting developments in Germany. We welcomed several new universities into the Qlik family, expanding our reach and helping even more students harness the power of data in their coursework. These new partnerships are just one example of how the program is making a difference, bringing data literacy to more classrooms and inspiring the next generation of data-savvy professionals.

For those who haven’t yet taken advantage of the Qlik Academic Program, now is the perfect time to start. The program offers a wealth of resources, qualifications, and free access to Qlik Sense, all designed to help you integrate powerful analytics into your teaching and learning. Visit https://qlik.com/academicprogram to learn more about how to get started and join us on this exciting journey!

As we wrap up the year, I want to extend a heartfelt thank you to all our educators, students, and partners who have made 2025 such a memorable and impactful year. We’re proud of what we’ve accomplished together and excited for the new possibilities that 2026 will bring.

Stay tuned for more stories, more learning, and more opportunities as we continue to grow the Qlik Academic Program.

Here’s to a great year behind us and an even brighter one ahead!

-

[On demand] The AI Reality Tour Tokyo 2025 Digest

At The AI Reality Tour Tokyo 2025, held on Tuesday, October 28, we shared the latest insights to help your AI strategy succeed: a keynote introducing Qlik's newest products, which unify predictive AI, generative AI, and agentic AI; forward-looking customer stories from Qlik users; the latest technologies and solutions from Qlik partners; exhibition booths; and more.

Selected sessions from the day are now available on demand, along with technology sessions available only on demand. Don't miss this chance to watch!

* This webinar is a recording of selected sessions from The AI Reality Tour Tokyo 2025, held on Tuesday, October 28, 2025.

* Free to watch. You can watch from anywhere on a PC, tablet, or smartphone.

Watch now

-

Wrapping Up the Semester & Looking Ahead With Qlik

As the fall semester comes to a close, now is the perfect time to pause, enjoy family and friends, and fully embrace the holiday season. Take the time to relax, regroup, and recharge—you’ve earned it.

When you return refreshed for a new year and a new semester, Qlik has you covered. We’re here to support you with everything you need to bring data analytics to life in your classroom.

For Professors: We Make Next Semester Easy

We provide ready-to-use academic resources, including:

- Course content

- Training materials

- Syllabi

- Exams

- Hands-on learning tools

And the best part? You’re not doing it alone.

What I Can Offer You

I’m available to Zoom into your class to introduce Qlik, explain who we are, and walk through the opportunities available through our academic program.

Once your students are signed up, we offer a student workshop designed to build confidence with Qlik from day one. We cover the fundamentals so that when you begin your lessons, students already feel comfortable navigating and using the platform.

Let’s Get You on the Schedule

We’d love to partner with you next semester—and spots will fill up quickly.

Please reach out to me directly to reserve your session or ask any questions.

brittany.fournier@qlik.com

During the Break

Make sure to sign up and spend a little time exploring the Qlik portal. It’s a great chance to see all the tools and resources we have to offer! Sign up here!

Enjoy the holidays, rest well, and we look forward to supporting you and your students in the new year!

-

Update to the Automations Custom Code block versions beginning January 31st, 2026

We plan to update the Custom Code block in Qlik Automate to upgrade related software versions.

When will this change go into effect?

The update is expected to begin rolling out on January 31st, 2026. We urge developers and automation users to review their Custom Code blocks for any possible compatibility issues before this date and to update them where necessary.

What exactly is changed?

The following software versions will be updated:

- PHP 8.3.20 upgraded to PHP 8.4 (Visit PHP docs)

- NodeJS 22 upgraded to NodeJS 24 (Visit NodeJS docs)

- Python 3.12 upgraded to Python 3.14 (Visit Python docs)
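When reviewing a JavaScript Custom Code block, it can help to confirm which Node.js runtime it is actually running on before and after the rollout. The sketch below is a minimal example, assuming the Custom Code block exposes the standard Node.js `process` global (this post does not confirm that); `majorVersion` and `describeRuntime` are hypothetical helper names, and the target of 24 simply mirrors the upgrade listed above.

```javascript
// Hypothetical helpers for checking which Node.js runtime a Custom Code block uses.
// Assumes the standard Node.js `process` global is available in the block.

// Extract the major version number from a string like "24.1.0" or "v22.12.0".
function majorVersion(version) {
  const match = /^v?(\d+)/.exec(String(version));
  if (!match) {
    throw new Error(`Unrecognized version string: ${version}`);
  }
  return Number(match[1]);
}

// Describe the running Node.js version relative to the planned target (NodeJS 24).
function describeRuntime(version, targetMajor = 24) {
  const major = majorVersion(version);
  if (major >= targetMajor) {
    return `Node ${version}: already on the upgraded runtime`;
  }
  return `Node ${version}: still on the pre-upgrade runtime (target: ${targetMajor})`;
}

// process.versions.node holds the bare version string, e.g. "22.12.0".
console.log(describeRuntime(process.versions.node));
```

Logging this once before and once after January 31st, 2026 makes it easy to tell whether a behavior change coincides with the runtime switch.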

If you have any questions, we're happy to assist. Reply to this blog post or take similar queries to the Qlik Automate forum.

Thank you for choosing Qlik,

Qlik Support -

Qlik Open Lakehouse Gains Streaming, Trust, Openness, and AI Readiness (Qlik Blog Translation)

This post is a translation of "Supercharging Qlik Open Lakehouse: Now Streaming, Trusted, Open, and AI-Ready."

Author: Vijay Raja

At this year's AWS re:Invent 2025, we were thrilled to announce the next generation of Qlik Open Lakehouse capabilities. This is a major leap forward, delivering long-awaited features including real-time streaming ingestion, on-the-fly transformation, built-in data quality and governance, and expanded ecosystem integrations.

With this release, Qlik Open Lakehouse evolves into a complete foundation for AI, analytics, and operational intelligence, bringing together openness, performance, and trust like never before.

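Streaming ingestion: real-time data straight into Iceberg

In the AI-driven era, real-time data is no longer optional; it is foundational.

With Qlik Open Lakehouse, you can already ingest real-time CDC data and batch data from hundreds of sources, including databases, SaaS applications, SAP, and mainframes, directly into Iceberg tables in just a few clicks.

Today we are announcing high-throughput streaming ingestion for Apache Iceberg. It lets organizations ingest millions of events per second from streaming sources such as Apache Kafka, Amazon Kinesis, and Amazon S3 directly into Iceberg tables through Qlik Open Lakehouse, without going through a data warehouse.

This makes it possible to continuously collect and query petabyte-scale real-time data from web and mobile apps, IoT devices, logs, and more, enabling use cases such as cybersecurity analytics, IoT monitoring, predictive maintenance, and live AI model training, all on an open, flexible, interoperable platform.

Qlik Open Lakehouse is also cost-efficient by design. It uses cost-effective Amazon EC2 Spot Instances with automatic recovery to cut ingestion costs by up to 70-90%, which can translate into savings of millions of dollars. See our latest benchmark comparing the ingestion cost and performance of Qlik Open Lakehouse.

More importantly, Qlik Open Lakehouse automatically handles schema evolution, file optimization, and recovery from failures, so users don't have to intervene manually. It adapts to evolving schemas, including nested schemas, and ensures consistent, reliable processing of real-time events.

Here is a short excerpt from our latest demo showing streaming ingestion from Amazon Kinesis into Iceberg.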

https://videos.qlik.com/watch/G2MpJC2Bp3ZZXWivJhHVkS?

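Streaming transformation: shape data as it flows

Real-time ingestion is only the beginning. With streaming transformations, you can now cleanse, join, and reshape data as it flows into the lakehouse, without waiting on complex manual batch jobs or first loading it into a data warehouse.

Through a visual, no-code interface, data teams can define transformations such as cleaning, filtering, standardization, unnesting, flattening, and masking. All of them are applied in-stream, and schema evolution is handled automatically.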
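As a conceptual illustration only (Qlik configures these transformations through its no-code interface, and this is not its implementation), the sketch below applies three of the named operations, filtering, flattening, and masking, to a single nested event. The event shape and field names (`type`, `deviceId`, `user.email`, `metrics.temp`) are hypothetical.

```javascript
// Conceptual sketch of per-event stream transformations: filter, flatten, mask.
// Not Qlik's implementation; the event fields are hypothetical.

// Flatten nested objects into dot-separated keys: { a: { b: 1 } } -> { "a.b": 1 }.
function flatten(obj, prefix = "", out = {}) {
  for (const [key, value] of Object.entries(obj)) {
    const path = prefix ? `${prefix}.${key}` : key;
    if (value !== null && typeof value === "object" && !Array.isArray(value)) {
      flatten(value, path, out);
    } else {
      out[path] = value;
    }
  }
  return out;
}

// Mask the local part of an email address, keeping only its first character.
function maskEmail(email) {
  const at = email.indexOf("@");
  if (at <= 0) return "***";
  return email[0] + "***" + email.slice(at);
}

// Transform one event; returning null drops it from the stream (filter).
function transformEvent(event) {
  if (event.type !== "reading") return null; // filter: keep sensor readings only
  const flat = flatten(event); // flatten: unnest nested objects
  if (typeof flat["user.email"] === "string") {
    flat["user.email"] = maskEmail(flat["user.email"]); // mask: hide PII
  }
  return flat;
}

const sample = {
  type: "reading",
  deviceId: "d-42",
  user: { email: "alice@example.com" },
  metrics: { temp: 21.5 },
};
console.log(transformEvent(sample));
```

Because each event is handled independently, this kind of logic can run record by record as data arrives, which is what makes in-stream transformation possible.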

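The result: data ready for AI and analytics in minutes, not hours or days.

Teams can resolve data quality and freshness issues in real time, close to the source, and curate and shape data without affecting end users.

And with Qlik's cost-optimized compute engine (based on EC2 Spot Instances), real-time transformations run at one-third the cost of traditional approaches, with autoscaling, failover, and reliability built in.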

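Expanding the Iceberg ecosystem with interoperability by design

Qlik Open Lakehouse is built for openness, and this release expands its interoperability even further.

Here are three key ecosystem integrations that make the open lakehouse architecture even more powerful:

- Snowflake Open Catalog support: In addition to the currently available AWS Glue Catalog, we are adding support for Snowflake Open Catalog. This gives customers more choice of Iceberg catalog and enables seamless data discovery and governance across environments. The integration significantly strengthens interoperability with the growing Iceberg ecosystem, giving customers the freedom to build a modern lakehouse whether they work with AWS-native services or in Snowflake's cloud-native environment.

- Zero-copy mirroring to Databricks and Amazon Redshift: Qlik already supports zero-copy mirroring to Snowflake, letting users create external tables in Snowflake and run queries and downstream transformations without duplicating data, which reduces cost while preserving performance. We are now extending this capability to Databricks and Amazon Redshift, so teams can query and transform Iceberg data across multiple platforms without duplicating data or adding cost.

- Enhanced Apache Spark integration: Expanded Apache Spark support lets any Spark-based engine access the latest Iceberg data directly, enabling large-scale analytics, AI, and machine learning workloads.

Together, these integrations deliver a truly open, flexible, multi-engine lakehouse environment. Data teams can break down silos, minimize data duplication, and shorten time to insight.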

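End-to-end data quality and governance

Open doesn't have to mean ungoverned. Qlik Open Lakehouse brings Qlik Talend's trusted data quality and governance capabilities directly into Open Lakehouse and Iceberg environments.

Key Qlik capabilities such as end-to-end data lineage, validation rules, semantic types, and the Qlik Trust Score are now available, helping ensure that every dataset in the open lakehouse is accurate, traceable, and AI-ready. Users can monitor how data flows and is transformed across Iceberg tables and mirrored Snowflake environments, gaining full visibility into, and confidence in, their data pipelines.

At scale (in the Qlik-managed lakehouse environment or inside the data warehouse), data quality validation rules can run flexibly, providing assurance where it matters most. Semantic types establish consistent meaning and context across diverse datasets, standardizing how information is interpreted across the enterprise.

In addition, Qlik Trust Score and Qlik Trust Score for AI quantify how ready data is for analytics and machine learning, even for data in Iceberg tables, so you can be confident in the insights you act on.

Qlik Open Lakehouse lets you balance openness with governance and innovate on data you can trust.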

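New benchmarks for Qlik Open Lakehouse

Of course, none of this matters unless it translates into real cost savings and meaningful business outcomes for customers.

To validate this, we ran a benchmark study that simulated real customer scenarios and compared the data ingestion cost and performance of Qlik Open Lakehouse against cloud data warehouses.

Compared with warehouse-managed Iceberg solutions and equivalently configured native data warehouses, Qlik Open Lakehouse with Iceberg reduced cost and compute consumption by roughly 77-89% and improved data freshness by 2.5-5x, delivering step-change value to customers. Details and results of the benchmark tests are available in Benchmarking Ingestion Costs and Performance of Qlik Open Lakehouse Vs a Data Warehouse.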

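Why this matters

Modern enterprises are building foundations for AI and real-time insight on flexible, open architectures. Getting there, however, requires rethinking data pipelines, data quality, governance, and cost efficiency.

Qlik Open Lakehouse provides a unified, open, and governed foundation that enables:

- Streaming, transforming, and optimizing data in real time

- Seamless interoperability across ecosystems such as AWS, Snowflake, and Databricks

- Up to 70-90% lower infrastructure and compute costs for data ingestion

- Governed, trusted, analytics-ready data at scale

This is the next evolution of the open lakehouse, built for the future of AI and data analytics. Qlik Open Lakehouse delivers a future-ready data environment that is always accessible and highly interoperable, with no lock-in and no compromises.

Build once, use anywhere: that is the true value of an open lakehouse architecture.

Get started

Qlik Open Lakehouse is already generally available. The key new capabilities announced here will become generally available in Q1 2026, with further enhancements rolling out through the first half of 2026.

Learn more about how Qlik is redefining what's possible for the modern enterprise with open, real-time, trusted data.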

-

Introducing: Write Table now available in Qlik Cloud Analytics

Make your analytics apps interactive.

Edit data, add comments, and watch your updates sync across sessions instantly. With Write Table’s instant syncing and change tracking, teams can collaborate in real time and export updates through Qlik Automate.

Find it in the Chart Library when editing an app and get started!

Learn more here:

- Write Table | help.qlik.com

- Qlik Write Table FAQ

- SaaS in 60

- Write Table Product Tour

- Write Table now available in Qlik Cloud Analytics | Qlik Product Innovation Blog

Thank you for choosing Qlik,

Qlik Support -

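Enter and edit data directly on your dashboard! Qlik Cloud's new "Write Table" feature

With traditional BI tools, acting on what you found in a dashboard meant entering it into another system, or correcting data in Excel, reloading it, and checking it again. When analysis screens aren't connected to day-to-day work, insights often take a long time to be acted on, and team members can end up with different understandings of the situation.

To address these challenges, Qlik Cloud has added a new feature, "Write table." It is an editable table that you can place on an analysis sheet, where users can type directly into cells or update values using drop-down lists. It supports simultaneous editing by multiple users, and because locking is applied per row, edits do not conflict. Edits are saved to a "change store" and, through APIs, can be used to integrate with external systems or to trigger automation flows.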
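The post does not describe how Write table implements its row-level locking. As a hedged, conceptual sketch of the idea (only one editor holds a given row at a time, so concurrent edits to the same row cannot collide, while edits to different rows proceed freely), an in-memory lock table might look like this. The class and method names are hypothetical, and a real multi-user service would keep this state on a shared server rather than in a single process.

```javascript
// Conceptual sketch of row-level locking for a shared editable table.
// Hypothetical names; not Qlik's implementation.
class RowLockTable {
  constructor() {
    this.locks = new Map(); // rowId -> userId currently holding the lock
    this.rows = new Map(); // rowId -> latest committed values
  }

  // Try to lock a row for editing; succeeds if it is free or already held by this user.
  acquire(rowId, userId) {
    const holder = this.locks.get(rowId);
    if (holder !== undefined && holder !== userId) return false;
    this.locks.set(rowId, userId);
    return true;
  }

  // Commit an edit only if the caller holds the row lock, then release the lock.
  commit(rowId, userId, values) {
    if (this.locks.get(rowId) !== userId) {
      throw new Error(`User ${userId} does not hold the lock on row ${rowId}`);
    }
    this.rows.set(rowId, { ...this.rows.get(rowId), ...values });
    this.locks.delete(rowId);
  }
}

const table = new RowLockTable();
table.acquire("order-1", "ana"); // true: the row was free
table.acquire("order-1", "ben"); // false: ana is editing this row
table.acquire("order-2", "ben"); // true: a different row, no conflict
table.commit("order-1", "ana", { status: "Shipped" });
```

Locking per row rather than per table is what lets several people work in the same table at once: contention only arises when two users target the same row.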

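The value of this feature is that it brings analysis and day-to-day operations closer together than ever. Tasks that previously happened in separate systems, such as correcting inventory counts, updating delivery statuses, tracking the progress of sales opportunities, or writing internal comments, can now be completed inside a Qlik app. For business teams in particular, being able to act on what frontline staff notice immediately, on the spot, is a major strength.

Combined with external system integration and automation (Qlik Automate), you can also automate notifications or external system updates based on the values entered, creating a seamless "analyze → edit → act" loop. For companies that want BI to evolve from a tool for viewing data into a platform that supports decisions and action, Write table is a big step forward.

By eliminating duplicated data-correction work and naturally connecting dashboards with frontline tasks, this experience can significantly improve the quality of business processes. Give it a try.

Write table is available with a Qlik Cloud Analytics Premium or higher subscription, or with Qlik Sense Enterprise SaaS.

Qlik TECH TALK seminar: Direct data editing and real-time information sharing in analytics apps! Introducing Write Table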

-

[On Demand] For Manufacturers: Maximize the Value of Your Own Products!

Competitiveness in manufacturing increasingly depends on whether a product can put data to work. This web seminar introduces a new value-creation model made possible by bundling Qlik Cloud Analytics with your own products. Using concrete use cases, it explains the many benefits: visualizing sensor data and operational logs to enable predictive maintenance and remote diagnostics, collecting usage data that drives product improvement, monetizing after-sales services, and more. Move from one-time sales to continuous value delivery. Discover practical ways to strengthen your products' competitiveness and raise customer satisfaction.

*Watch from anywhere on a PC, tablet, or smartphone.

Watch now -

Qlik Trail: an extension to record, replay, and share your selection journeys

When I’m exploring a Qlik app, I often want to show exactly how I reached a view, without typing instructions or jumping on a call.

Qlik Trail is a small extension I created that does just that: Click Record, make your selections, and you’ll get a tidy list of steps that you can replay from start to finish, or jump to a specific step. Then, when you’re happy with the journey, you can export the trail as JSON and hand it to someone else so they can import and run the same sequence on their side.

Why not just use bookmarks?

Bookmarks are great for destinations (one final selection state). Qlik Trail is more about the path:

- Multiple steps in a defined order (A → B → C)

- Replay from start for narration, or replay a specific step

- Export/import a whole journey, not a bunch of one-offs

You can use bookmarks to save a point in time, and use Qlik Trail when the sequence matters more.

What can you do with the Qlik Trail extension?

- Record steps manually, or toggle Auto to capture each change

- Name, duplicate, reorder, delete, or ungroup steps

- Organize steps into Groups (Executive Story, Sales Ops, Training, …)

- Replay to here (apply one step) or Replay from start (play the group)

- Export / Import trails as JSON
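The difference between Replay to here and Replay from start can be modeled on a plain array of steps. This is a minimal sketch, not the extension's code: it assumes each recorded step stores a complete selection snapshot (as the snapshot format in the tech notes below suggests), so replaying to a step only means applying that one snapshot. `replayTo`, `replayFromStart`, the pause length, and the `applyStep` callback (a stand-in for whatever actually applies the selections) are hypothetical.

```javascript
// Minimal model of trail replay. Each step is assumed to hold a full selection
// snapshot, so "Replay to here" only needs to apply one step. Hypothetical names.

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Replay to here: apply exactly one step's snapshot.
async function replayTo(steps, index, applyStep) {
  if (index < 0 || index >= steps.length) {
    throw new RangeError(`No step at index ${index}`);
  }
  await applyStep(steps[index]);
}

// Replay from start: apply every step in order, pausing between steps
// so an audience can follow the narration.
async function replayFromStart(steps, applyStep, pauseMs = 1500) {
  for (let i = 0; i < steps.length; i++) {
    await applyStep(steps[i]);
    if (i < steps.length - 1) await sleep(pauseMs);
  }
}

// Usage with step names borrowed from the Executive Story demo and a
// stand-in applyStep that only logs each step.
const exec = [
  { name: "US overview: top categories" },
  { name: "Northeast · Fresh Vegetables" },
  { name: "Northeast · Cheese (A/B)" },
];
replayFromStart(exec, async (s) => console.log("apply", s.name), 10);
```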

Demo

Before we go into how the extension was built, let's see a demo of how it can be used.

I’ll share two practical trails, Executive Story and Sales Ops, with selections you can record and replay on your end. For this demo, I’ll use the Consumer Goods Sales app (which you can get here: https://explore.qlik.com/details/consumer-goods-sales).

Setup

- Upload the extension .zip file in the Management Console, then add Qlik Trail (custom object) to a sheet.

- Optional: enable Auto-record in the object’s properties if you want every change captured while you explore.

- Toolbar (left to right): Record, Replay from start, Delete, Delete all, Auto, Group filter, New group, Export, Import.

Trail 1: Executive Story

Create Group: Executive Story

Step 0: “US overview: top categories”

Selections: (none)

Step 1: “Northeast · Fresh Vegetables”

Selections: Region = Northeast, Product Group = Fresh Vegetables

Step 2: “Northeast · Cheese (A/B)”

Selections: Region = Northeast, Product Group = Cheese

A quick A/B comparison within the same region.

Step 3: “West vs Northeast · Fresh Fruit”

Selections: Region = West, Northeast, Product Group = Fresh Fruit

The same category across two regions, to see the differences.

Step 4: “Northeast focus: PA · Fresh Fruit”

Selections: Region = Northeast, State = Pennsylvania, Product Group = Fresh Fruit

Drill down to a particular state.

Presenting: select the group, then Replay from start. If questions land on Step 4, use Replay to here.

Trail 2: Sales Ops Story

Create Group: Sales Ops Story

Step 1: “South · Ice Cream & Juice (summer basket)”

Selections: Region = South, Product Group = Ice Cream, Juice

Step 2: “Central · Hot Dogs (promo check-in)”

Selections: Region = Central, Product Group = Hot Dogs

Step 3: “West · Cheese (margin look)”

Selections: Region = West, Product Group = Cheese

Tips when building trails

- One idea per step: (Region + Category) or (Region + a couple of States)

- Duplicate, then tweak, for fast A/B comparisons

- Group by audience: Exec, Ops, Training

- Ungrouped steps = scratchpad. Move them into a group when they’re ready

- After recording, drag to reorder so the replay tells a clean story
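Reordering rests on the simple integer `seq` per step that the tech notes below mention, with trails kept in localStorage per app. The sketch below shows what a drag-and-drop move might do under those assumptions; `moveStep`, `saveTrail`, and the `qliktrail:<appId>` storage key are hypothetical, not the extension's actual code. The storage object is passed in so the same code works with `window.localStorage` in the browser or a stub elsewhere.

```javascript
// Sketch of seq-based ordering: after a drag-and-drop move, reassign seq
// so it matches the new list order. Hypothetical helper names.
function moveStep(steps, fromIndex, toIndex) {
  const ordered = [...steps].sort((a, b) => a.seq - b.seq);
  const [moved] = ordered.splice(fromIndex, 1);
  ordered.splice(toIndex, 0, moved);
  // Reassign seq 1..n in the new order; return new objects, leave the input untouched.
  return ordered.map((step, i) => ({ ...step, seq: i + 1 }));
}

// Persist the trail per app under an assumed key format "qliktrail:<appId>".
function saveTrail(storage, appId, steps) {
  storage.setItem(`qliktrail:${appId}`, JSON.stringify(steps));
}

const steps = [
  { id: "a", name: "Step A", seq: 1 },
  { id: "b", name: "Step B", seq: 2 },
  { id: "c", name: "Step C", seq: 3 },
];
// Drag the last step to the top.
console.log(moveStep(steps, 2, 0).map((s) => `${s.seq}:${s.id}`).join(" "));
// prints "1:c 2:a 3:b"
```

Renumbering the whole list after each move keeps `seq` dense and gap-free, so a replay can simply sort by it.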

Sharing your trails

Click Export to download qliktrail_export_YYYY-MM-DD_HHMM.json.
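Generating that file name and triggering the download takes only a few lines in the browser. This is a hedged sketch: the post shows the file-name pattern but not the extension's code, so `exportFileName` and `downloadTrail` are hypothetical names, and using local time for the timestamp is an assumption.

```javascript
// Build an export file name in the pattern qliktrail_export_YYYY-MM-DD_HHMM.json.
// Assumption: the timestamp uses local time; the extension may differ.
function exportFileName(date = new Date()) {
  const pad = (n) => String(n).padStart(2, "0");
  const day = `${date.getFullYear()}-${pad(date.getMonth() + 1)}-${pad(date.getDate())}`;
  const time = `${pad(date.getHours())}${pad(date.getMinutes())}`;
  return `qliktrail_export_${day}_${time}.json`;
}

// Serialize a trail and trigger a browser download (browser-only).
function downloadTrail(trail) {
  const blob = new Blob([JSON.stringify(trail, null, 2)], { type: "application/json" });
  const url = URL.createObjectURL(blob);
  const a = document.createElement("a");
  a.href = url;
  a.download = exportFileName();
  a.click();
  // Release the object URL once the click has been dispatched.
  setTimeout(() => URL.revokeObjectURL(url), 0);
}
```

For example, `exportFileName(new Date(2025, 2, 10, 14, 30))` returns `"qliktrail_export_2025-03-10_1430.json"` (JavaScript months are zero-based, so `2` is March).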

Your teammate can Import, pick a group, and hit Replay from start. Same steps, same order—no instructions needed.
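Before replaying an imported file, it is worth checking that each step’s snapshot has the shape documented in the tech notes: a `ts` timestamp, then `states`, each with a `state` name and per-field `text`/`num` arrays. A minimal validation sketch, assuming that snapshot format; the post does not show how an exported file wraps steps and groups, so `isValidSnapshot` (a hypothetical helper) checks a single snapshot only.

```javascript
// Validate one step snapshot in the format shown in the tech notes:
// { ts, states: [ { state, fields: { [name]: { text: [], num: [] } } } ] }
function isValidSnapshot(snap) {
  if (!snap || typeof snap !== "object") return false;
  if (typeof snap.ts !== "string" || Number.isNaN(Date.parse(snap.ts))) return false;
  if (!Array.isArray(snap.states)) return false;
  return snap.states.every((s) => {
    if (!s || typeof s.state !== "string") return false;
    if (!s.fields || typeof s.fields !== "object") return false;
    // Every field must carry a text array and an array of finite numbers.
    return Object.values(s.fields).every(
      (pack) =>
        pack &&
        Array.isArray(pack.text) &&
        Array.isArray(pack.num) &&
        pack.num.every((n) => typeof n === "number" && Number.isFinite(n))
    );
  });
}

const imported = JSON.parse(
  '{ "ts": "2025-03-10T14:30:00Z", "states": [ { "state": "$", "fields": { "Region": { "text": ["Northeast"], "num": [] } } } ] }'
);
console.log(isValidSnapshot(imported)); // true
```

Rejecting malformed snapshots up front keeps a bad import from clearing the user’s selections and then failing halfway through a replay.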

Tech notes

1) Module & CSS injection

Load CSS once at runtime so the object stays self-contained:

```javascript
define(['qlik', 'jquery', './properties', 'text!./style.css'], function (qlik, $, props, css) {
  // Inject the stylesheet only once, even if several Qlik Trail objects are on the sheet
  if (!document.getElementById('qliktrail-style')) {
    const st = document.createElement('style');
    st.id = 'qliktrail-style';
    st.textContent = css;
    document.head.appendChild(st);
  }
  // …
});
```

2) Capturing selections per state

Read SelectionObject, group by qStateName, then read a lightweight list object per field and keep only the rows in a selected state. Store both text and numeric values so replay stays resilient for numeric fields:

```javascript
app.getList('SelectionObject', function (m) {
  // build grouped { state -> [fields] }
});
// ...
app.createList({
  qStateName: stateName || '$',
  qDef: { qFieldDefs: [fieldName] },
  qInitialDataFetch: [{ qTop: 0, qLeft: 0, qWidth: 1, qHeight: 10000 }]
}).then(obj => obj.getLayout() /* collect S/L/XS states */);
```

Snapshot (what we export/import):

```json
{
  "ts": "2025-03-10T14:30:00Z",
  "states": [
    {
      "state": "$",
      "fields": {
        "Region": { "text": ["Northeast"], "num": [] },
        "Product Group": { "text": ["Fresh Vegetables"], "num": [] }
      }
    }
  ]
}
```

3) Replaying selections

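Clear the target state, then select values. Prefer numbers when available; fall back to text:

```javascript
app.clearAll(false, state);
// ...
if (pack.num.length) {
  // Numeric match: qIsNumeric must be true for the engine to compare on qNumber
  field.selectValues(pack.num.map(n => ({ qNumber: n, qIsNumeric: true })), false, false);
} else {
  // Fall back to qText
  field.selectValues(pack.text.map(t => ({ qText: String(t) })), false, false);
}
```

4) Auto-record with a quiet window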

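Avoid recording during replays to prevent ghost steps:

```javascript
// When a replay starts: open a quiet window (1200 ms unless configured)
ui.replayQuietUntil = Date.now() + (layout.props.replayQuietMs || 1200);

// On each selection change: auto-record only once the quiet window has passed
if (Date.now() >= ui.replayQuietUntil && ui.autoOn) record();
```

5) Groups, ordering & persistence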

Trails live in localStorage per app key. Groups look like { id, name, members: [stepId, …] }. Ordering is a simple seq integer; drag-and-drop reassigns seq inside the open list.

That’s Qlik Trail in a nutshell. It lets you hit record, name a few steps, and replay the story without hand-holding anyone through filters. Use Bookmarks to keep states; use Trail to keep track of the sequence.

P.S.: This extension is a work-in-progress experiment and not production-ready. I'm planning to add more features and make it more stable in the future.

🖇 Link to download the extension: https://github.com/olim-dev/qlik-trail -

-

Qlik Cloud Analytics Enhancements

As we enter the last month of the year, let’s review some recent enhancements in Qlik Cloud Analytics visualizations and apps. Features are continuously being added to improve usability, development, and appearance. Let’s look at a few of them.

Straight Table

Let’s begin with the straight table. Now, when you create a straight table in an app, you will have access to column header actions, enabled by default. Users can quickly sort any field by clicking on the column header. The sort order (ascending or descending) will be indicated by the arrow. Users can also perform a search in a column by clicking the magnifying glass.

When the magnifying glass icon is clicked, the search menu is displayed as seen below.

If a cyclic dimension is being used in the straight table, users can cycle through the dimensions using the cyclic icon that is now visible in the column heading (see below).

When you have an existing straight table in an app, these new features will not be visible by default but can easily be enabled in the properties panel by going to Presentation > Accessibility and unchecking Increase accessibility.

Bar Chart

The bar chart now has a new feature that allows the developer to set a custom bar width when in continuous mode. Just last week, when I put the bar chart below in continuous mode, the bars became very thin.

But now there is a Period drop-down that lets developers specify the unit of the data values.

If I select Auto to detect the period automatically, the chart looks much better.

Combo Chart

In a combo chart, a line can now be styled using area versus just a line, as displayed below.

Sheet Thumbnails

One of the coolest enhancements is the ability to auto-generate sheet thumbnails. What a time saver. From the sheet properties, simply click on the Generate thumbnail icon and the thumbnail will be automatically created. No more creating the sheet thumbnails manually by taking screenshots and uploading them and assigning them to the appropriate sheet. If you would like to use another image, that option is still available in the sheet properties.

From this

To this in one click

Try out these new enhancements to make development and analysis faster and more efficient.

Thanks,

Jennell