
Recent Documents

-

Enhancing Analytics with Qlik Predict

This Techspert Talks session covers: how ML experiments work, real-world predictive use cases, and time series. Chapters: 01:33 - Machine Learning Mo...

Qlik AutoML: This prediction failed to run successfully due to schema errors

Introduction

"This prediction failed to run successfully due to schema errors" can occur during the prediction phase if there is a mismatch in the table schema between the training and prediction datasets. In this example, we will show how a column in the prediction dataset was profiled as categorical rather than numeric because it contained dashes ('-') for empty values.

Steps

1. Upload test_dash_training.csv and test_dash_prediction.csv to Qlik Cloud. Both files are attached to the article if you would like to download them.

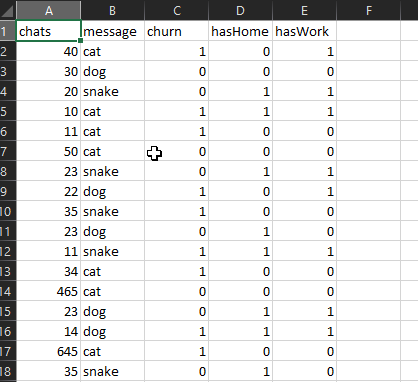

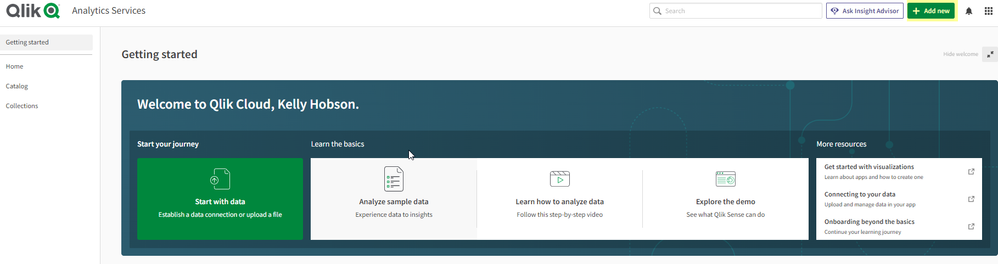

Training dataset:

Prediction sample:

2. Create a new ML experiment, choose test_dash_training.csv, and click 'Run Experiment'

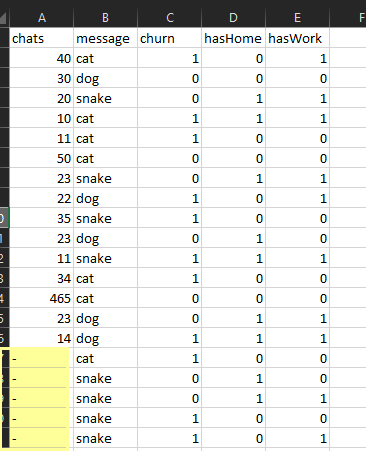

3. Deploy the top model

5. Create a new prediction and select test_dash_prediction.csv as the apply dataset

You will encounter a warning message, "Feature type does not match the required model schema."

If you continue, you will encounter another message after clicking 'Save and predict now'.

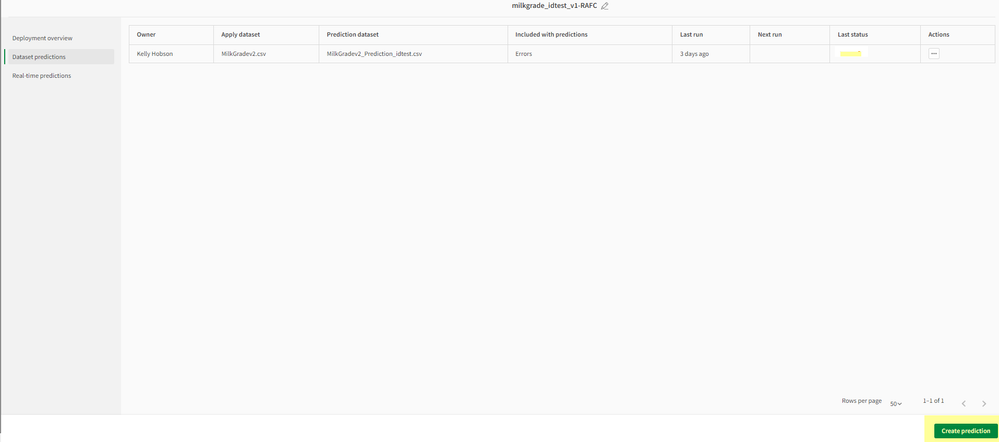

6. If you click 'Save configuration', the prediction will attempt to run but will not produce a prediction dataset and will display an error.

Resolution

Remove the dashes from the 'chats' column in test_dash_prediction.csv. Either delete them from the prediction dataset or replace them with a numeric value such as zero.
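The cleanup described above can also be done before upload. The following is a minimal Python sketch, not part of the original article: it assumes the file is a plain comma-separated CSV, that '-' is the only placeholder used for empty values, and that zero is an acceptable replacement. The helper names and the 'id' column in the usage note are hypothetical.

```python
import csv
import io


def clean_dashes(rows, column, fill="0"):
    """Return copies of the rows with '-' placeholders in `column` replaced by `fill`."""
    cleaned = []
    for row in rows:
        new_row = dict(row)
        value = new_row.get(column) or ""
        if value.strip() == "-":
            new_row[column] = fill
        cleaned.append(new_row)
    return cleaned


def clean_csv_text(text, column, fill="0"):
    """Read CSV text, clean one column, and return the cleaned CSV text."""
    reader = csv.DictReader(io.StringIO(text))
    rows = clean_dashes(list(reader), column, fill)
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=reader.fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return out.getvalue()
```

For example, `clean_csv_text(open("test_dash_prediction.csv").read(), "chats")` would return the file contents with the dashes in 'chats' replaced by zero, ready to be saved and uploaded again.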

Environment

The information in this article is provided as-is and to be used at own discretion. Depending on tool(s) used, customization(s), and/or other factors ongoing support on the solution below may not be provided by Qlik Support.

-

Using AutoML in Qlik Sense

This Techspert Talks session addresses:

- How to get started

- Making API calls

- Using Predictive Analysis

- 00:56 - What is AutoML?

- 01:31 - Iris demo intro

- 02:46 - Demo working AutoML Iris app

- 04:40 - How to setup data for AutoML

- 10:06 - ML Model Management

- 11:18 - How to add AutoML to a Sense App

- 13:56 - Prediction Analysis in a KPI object

- 15:19 - Batch predictions

- 16:35 - HR Promotion Analysis

- 18:00 - Additional Resources

- 19:00 - Q&A: Can the experiment data set be updated?

- 19:58 - Q&A: Is AutoML free?

- 20:36 - Q&A: What is the data size limit?

- 21:17 - Q&A: Can I choose my own index?

- 22:05 - Q&A: Is AutoML on for Qlik Cloud?

- 22:20 - Q&A: What data sources can be used?

- 22:52 - Q&A: Does AutoML work with Snowflake?

- 23:59 - Q&A: How to troubleshoot a connection error?

- 24:20 - Q&A: How do you schedule the predictions?

- 24:43 - Q&A: Where can we find more documentation?

Q&A:

Q: I understand that I can invoke a production model through an API from Python. Are there any limitations to doing this?

A: We provide the APIs to invoke a model for real-time predictions. These can be called from any platform or language, like all of Qlik’s APIs. In the case of Python, the customer would call this using a library such as “requests”. This functionality is only available to customers on a paid tier. More details can be found here.

Q: Is there a maximum amount of data sets which can be used for ML experiments?

A: Each ML experiment uses one dataset. There is no set limit on the number of datasets made available in Qlik Catalog, but there are limitations on the size of datasets.

Regarding dataset size, there are 2 limits in play:

1. The dataset size limit as set in the tier model.

a. Included: 100K cells

b. StartUp: 1M cells

c. ScaleUp: 10M cells

d. Premier: 100M cells

2. The data must be profiled by the catalog, which currently has a file size limit of 1GB. By using QVD or Parquet data files you can process more data.
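As a rough illustration of the tier limits listed above, the Python sketch below finds the smallest tier that would accommodate a dataset. It assumes that the number of cells is rows multiplied by columns and that each limit is inclusive; neither assumption is confirmed here, and the function name is hypothetical.

```python
# Cell limits per tier, as listed in the answer above.
TIER_CELL_LIMITS = [
    ("Included", 100_000),
    ("StartUp", 1_000_000),
    ("ScaleUp", 10_000_000),
    ("Premier", 100_000_000),
]


def smallest_tier(rows, columns):
    """Return the first tier whose cell limit fits rows * columns, or None if none fits."""
    cells = rows * columns  # assumption: a "cell" is one row/column intersection
    for name, limit in TIER_CELL_LIMITS:
        if cells <= limit:
            return name
    return None
```

For instance, a dataset with 100,000 rows and 20 columns has 2,000,000 cells under this assumption, which exceeds the StartUp limit and would need ScaleUp.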

Resources:

How To Get Started with Qlik AutoML

Qlik AutoML Demonstration Overview

Introduction to Qlik AutoML (Learning.Qlik.com)

Machine learning with Qlik AutoML (Help.Qlik.com)

Qlik AutoML on Qlik Cloud - sample data

Qlik Continuous Classroom AutoML courses

-

Qlik AutoML: How to upload, model, deploy, and predict on Qlik Cloud platform

This article provides step-by-step guidelines for uploading a dataset (a csv from a local machine), creating a model, deploying a model, and generating predictions with Qlik AutoML in the Qlik Cloud environment.

Steps

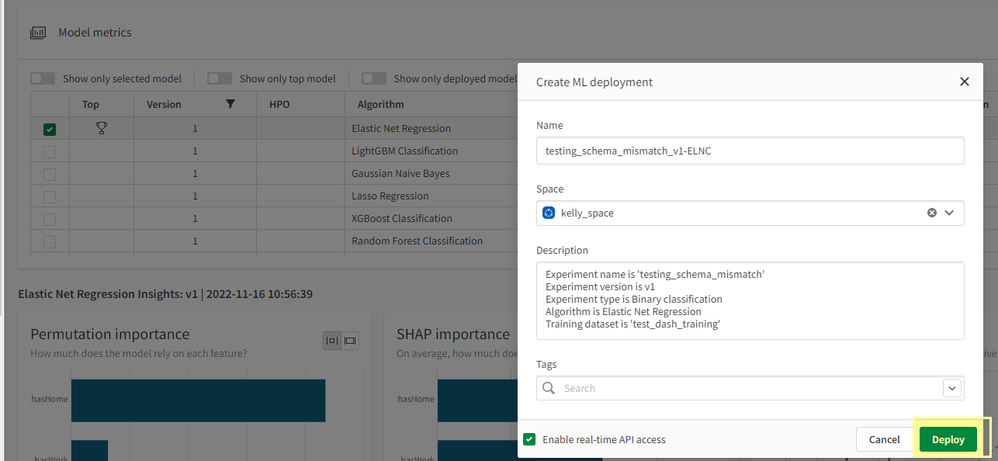

- Log into Qlik Cloud

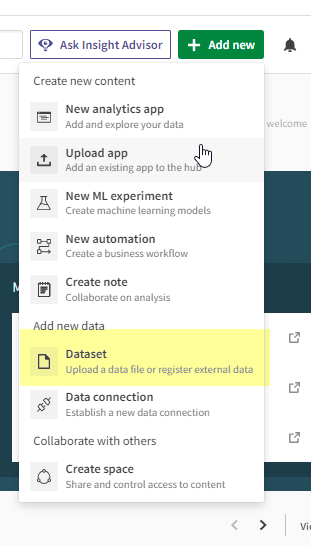

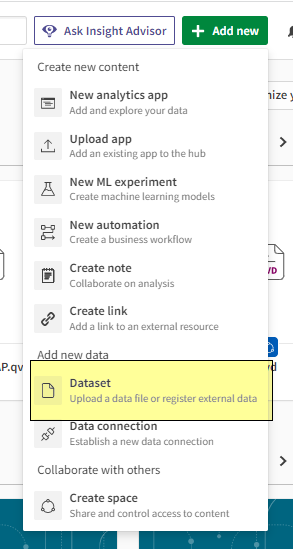

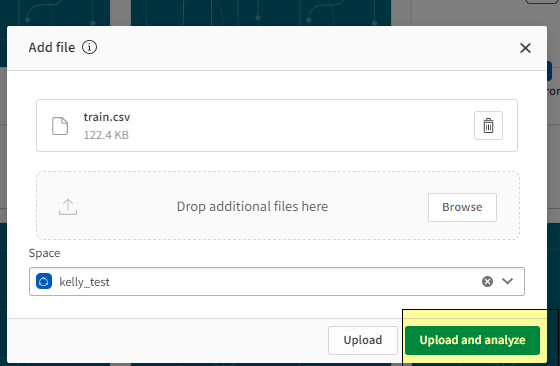

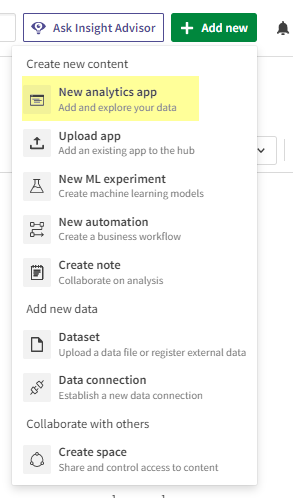

- Click +Add new and select "Dataset"

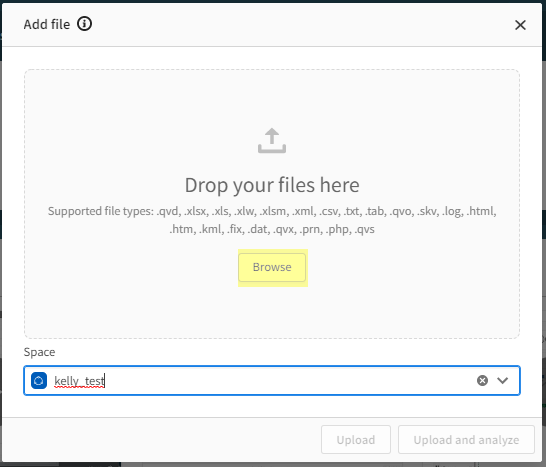

- Select "Upload data file", select Browse, navigate to your file, then click 'Upload'

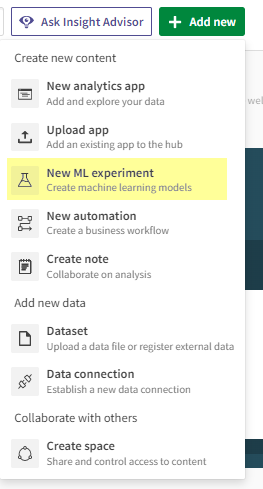

- Once uploaded, click +Add New -> 'New ML Experiment'

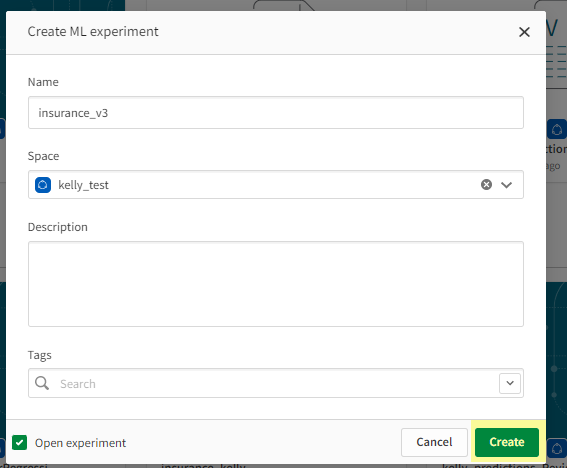

- Give the ML experiment a meaningful name and assign it to a space, then click 'Create'

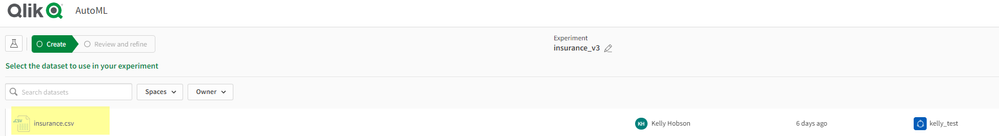

- Select the dataset you uploaded

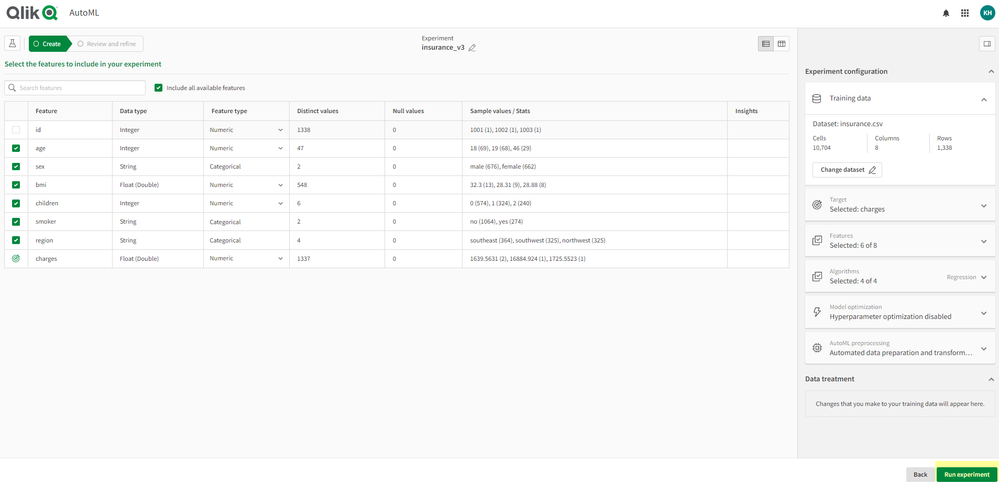

- Select the target variable and features to include in the model. On the right-hand side you have additional options to select algorithms, hyperparameter optimization, and data prep steps. We are sticking with the defaults. Click 'Run Experiment' on the bottom right side of the screen.

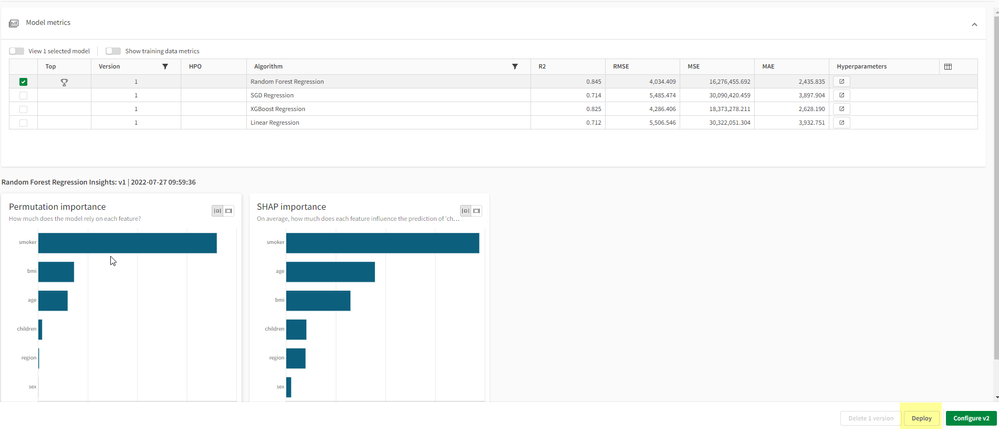

- Model metrics and insights are displayed on the page. If you would like to refine the model, you can select 'Configure V2' on the bottom right. If you are ready to deploy the model, click 'Deploy'.

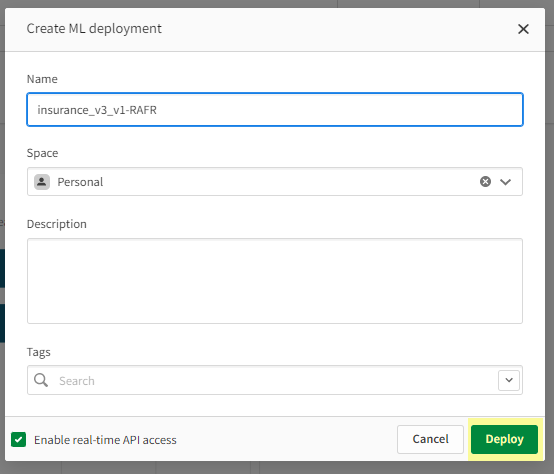

- Give the model a meaningful name and then click 'Deploy'

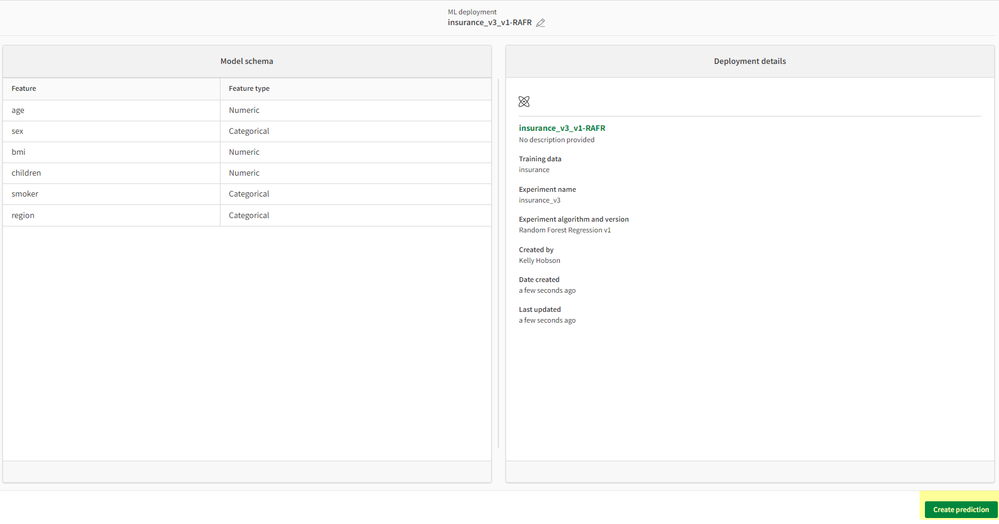

- At the top of the page you will get a notification, click this to open the deployed model. If you 'miss' the notification, you can always navigate to Home -> Catalog -> and search for type 'ML Deployments'. Once opened, you will see a page with metadata about the deployed model. At the bottom right, you will see an option to 'Create Prediction'

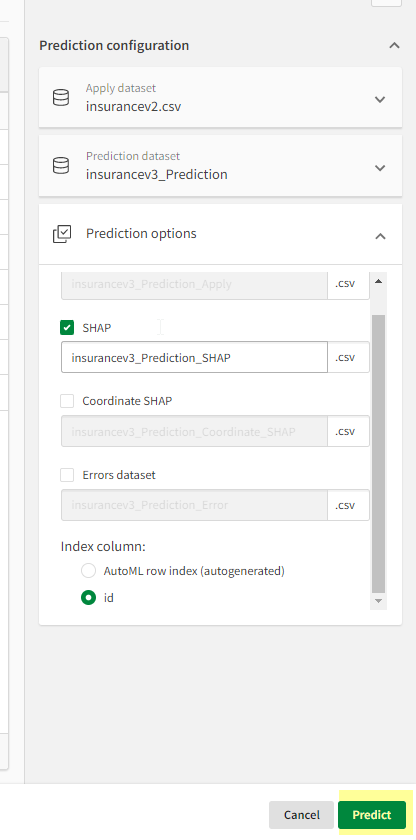

- Select an apply dataset. In this example, I am using the same dataset I used to train the model. (Not advisable but easy for learning purposes).

- Name your prediction dataset something meaningful and add to working space. Click 'Confirm'

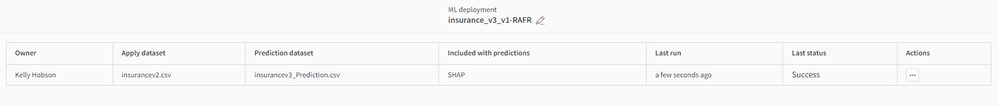

For this particular example, we have an ID column, which I will use as the index column (and later for joining).

- Congratulations! You have now generated predictions using your deployed model.

If you navigate to 'Home' -> 'Catalog' -> 'Data' you will see your predicted outputs.

Related Content:

Qlik AutoML: Overview of SHAP values


-

How To Get Started with Qlik AutoML

- Introduction

- System Requirements

- Pricing and Packaging

- Software updates

- Types of Models Supported

- Getting Started with AutoML

- Data Connections

- Data Preparation abilities

- Using realtime-prediction API

- Integration with Qlik Sense

- Contacting Support

- Additional resources

- Environment

This is a guide to get you started working with Qlik AutoML.

Introduction

AutoML is an automated machine learning tool in a code-free environment. Users can quickly generate models for classification and regression problems with business data.

System Requirements

Qlik AutoML is available to customers with the following subscription products:

Qlik Sense Enterprise SaaS

Qlik Sense Enterprise SaaS Add-On to Client-Managed

Qlik Sense Enterprise SaaS - Government (US) and Qlik Sense Business do not support Qlik AutoML.

Pricing and Packaging

For subscription tier information, please reach out to your sales or account team for exact information on pricing. The metered pricing depends on how many models you would like to deploy, dataset size, API rate, number of concurrent tasks, and advanced features.

Software updates

Qlik AutoML is a part of the Qlik Cloud SaaS ecosystem. Code changes for the software including upgrades, enhancements and bug fixes are handled internally and reflected in the service automatically.

Types of Models Supported

AutoML supports Classification and Regression problems.

Binary Classification: used for models with a Target of only two unique values. Examples: payment default, customer churn.

Customer Churn.csv (see downloads at top of the article)

Multiclass Classification: used for models with a Target of more than two unique values. Examples: grading (gold, platinum, silver), milk grade.

MilkGrade.csv (see downloads at top of the article)

Regression: used for models with a Target that is a number. Examples: how much a customer will purchase, predicting housing prices.

AmesHousing.csv (see downloads at top of the article)
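The three problem types above can be told apart by looking at the target column. The Python sketch below is a simple heuristic written for illustration only; it is not how Qlik AutoML itself decides the model type, and the function name is hypothetical. Note that it would label a numeric target with more than two distinct values (for example a grade coded 1, 2, 3) as regression, which may not be what you intend.

```python
def infer_problem_type(target_values):
    """Guess the problem type from the distinct values of the target column."""
    unique = {value for value in target_values if value is not None}
    if len(unique) < 2:
        raise ValueError("target needs at least two distinct values")
    if len(unique) == 2:
        # Exactly two unique values, e.g. churned yes/no.
        return "binary classification"
    if all(isinstance(v, (int, float)) and not isinstance(v, bool) for v in unique):
        # Numeric target with many values, e.g. a sale price.
        return "regression"
    # More than two non-numeric values, e.g. a milk grade.
    return "multiclass classification"
```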

Getting Started with AutoML

What is AutoML (14 min)

Exploratory Data Analysis (11 min)

Model Scoring Basics (14 min)

Prediction Influencers (10 min)

Qlik AutoML Complete Walk Through with Qlik Sense (24 min)

Non video:

How to upload data, training, deploying and predicting a model

Data Connections

Data for modeling can be uploaded from a local source or via data connections available in Qlik Cloud.

You can add a dataset or data connection with the green 'Add new' button in Qlik Cloud.

There are a variety of data source connections available in Qlik Cloud.

Once data is loaded and available in Qlik Catalog then it can be selected to create ML experiments.

Data Preparation abilities

AutoML uses a variety of data science pre-processing techniques such as Null Handling, Cardinality, Encoding, and Feature Scaling. Additional reference here.

Using realtime-prediction API

Please reference these articles to get started using the realtime-prediction API

Integration with Qlik Sense

By leveraging Qlik Cloud, predicted results can be surfaced in Qlik Sense to visualize and draw additional conclusions from the data.

How to join predicted output with original dataset

Contacting Support

If you need additional help please reach out to the Support group.

It is helpful if you have tenant id and subscription info which can be found with these steps.

Additional resources

Please check out our articles in the AutoML Knowledge Base.

Or post questions and comments to our AutoML Forum.

Environment

The information in this article is provided as-is and to be used at own discretion. Depending on tool(s) used, customization(s), and/or other factors ongoing support on the solution below may not be provided by Qlik Support.

-

Qlik AutoML: How to schedule predictions?

Introduction

The scheduling feature is now available in Qlik AutoML to run a prediction on a daily, weekly, or monthly cadence.

Steps

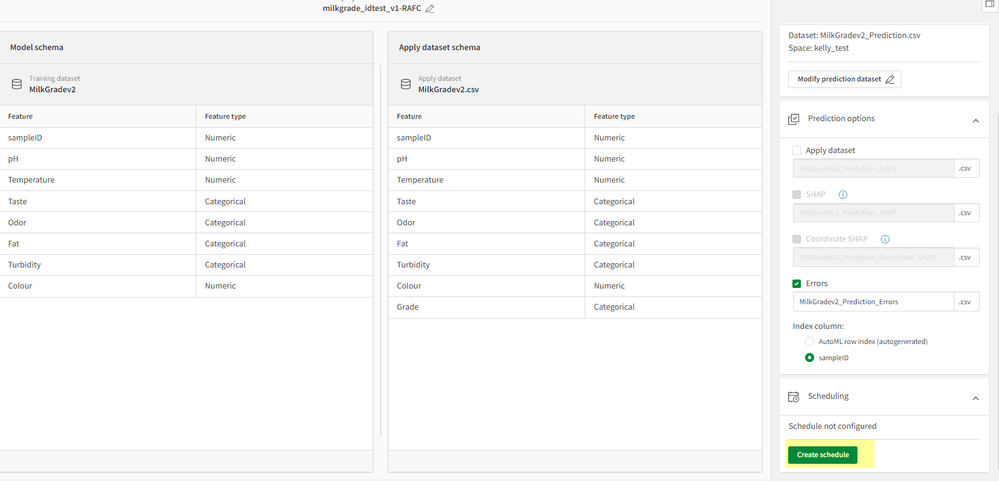

1. Open a deployed model from Qlik Catalog

2. Navigate to 'Dataset predictions' and click on 'Create prediction' on bottom right

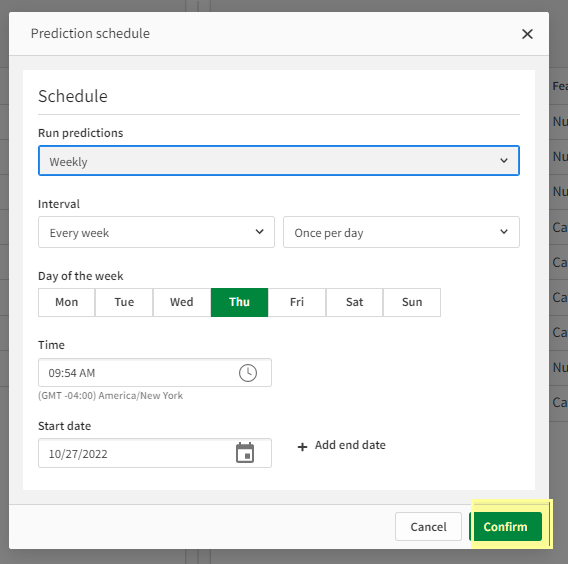

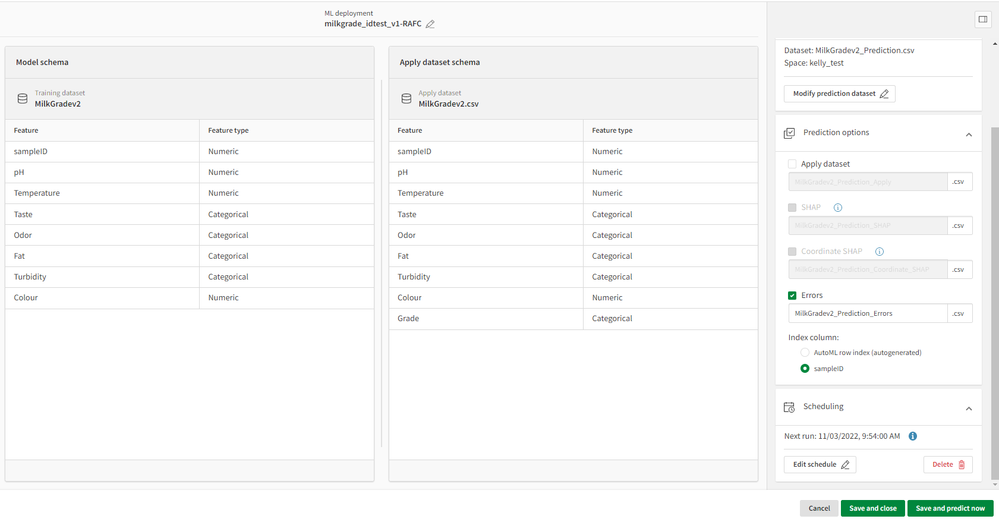

3. Select the apply dataset, name the prediction dataset, select your options, then click on 'Create Schedule'

4. Set the schedule options you would like to follow, then click 'Confirm'

5. Your options now are 'Save and close' (this will not run a prediction until the next scheduled run) or 'Save and predict now' (this will run a prediction now in addition to the schedule)

Note: Users need to ensure the predicted dataset is updated and refreshed ahead of the prediction schedule.


-

Qlik AutoML: How to save a csv table upload as a QVD?

Introduction

I recently needed to test an ML experiment with a QVD instead of a csv file.

Below are the steps I followed to create a QVD file, which was then available in Catalog.

Steps

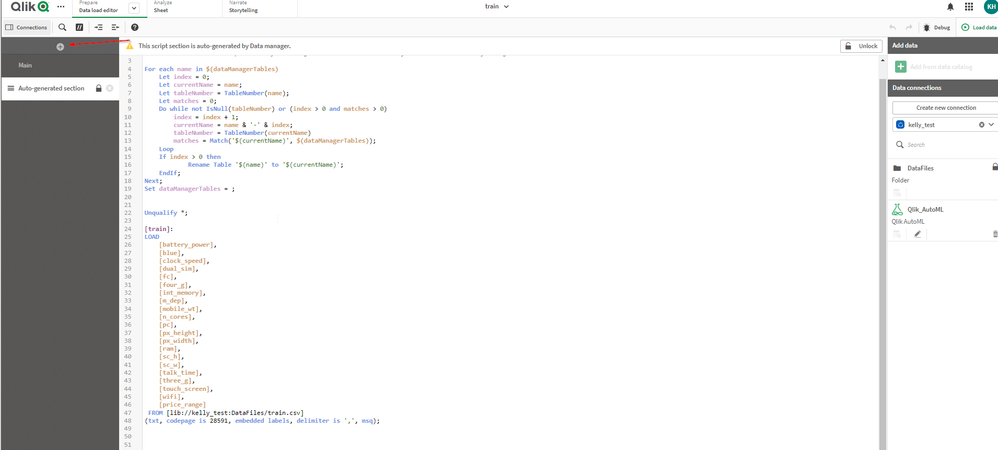

1. Upload the local csv dataset (or xlsx, etc.) and analyze it, which will create an analytics app

2. Open up the app and navigate to "Data Load Editor"

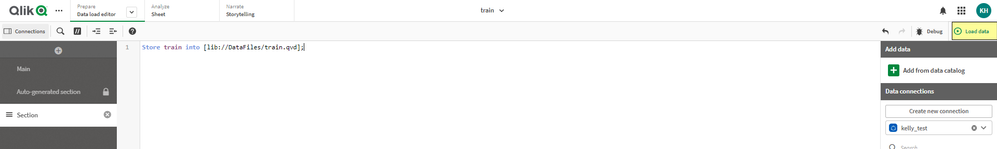

3. Add a new section under the Auto-generated section (with the + symbol marked with a red arrow above). Note that this section must run after the Auto-generated section; otherwise it will error because the data is not yet loaded.

Add the following statement:

Store train into [lib://DataFiles/train.qvd];

or

Store tablename into [lib://DataFiles/tablename.qvd];

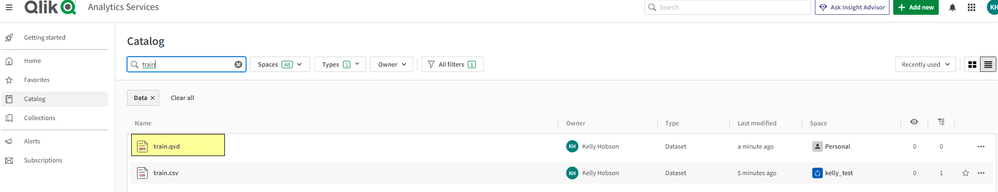

4. Run "Load data"

5. Check Catalog for recently created QVD


-

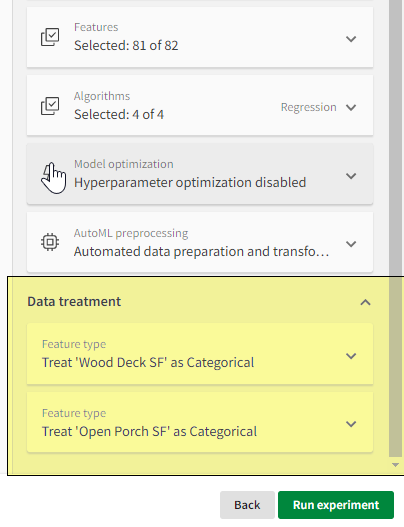

Qlik AutoML: What is the Data Treatment option in ML experiments?

Introduction

During the 'Create' phase of an AutoML experiment, there is a section in the right-hand pane called 'Data Treatment'.

This section tracks any Feature Type changes you make to your training dataset.

Example

I started the process of creating a ML experiment for the Ames housing dataset.

Then I changed 'Wood Deck SF' and 'Open porch SF' to Categorical instead of Numeric type under the 'Feature Type' column.

As a result, these changes are now tracked in the Data Treatment section.

-

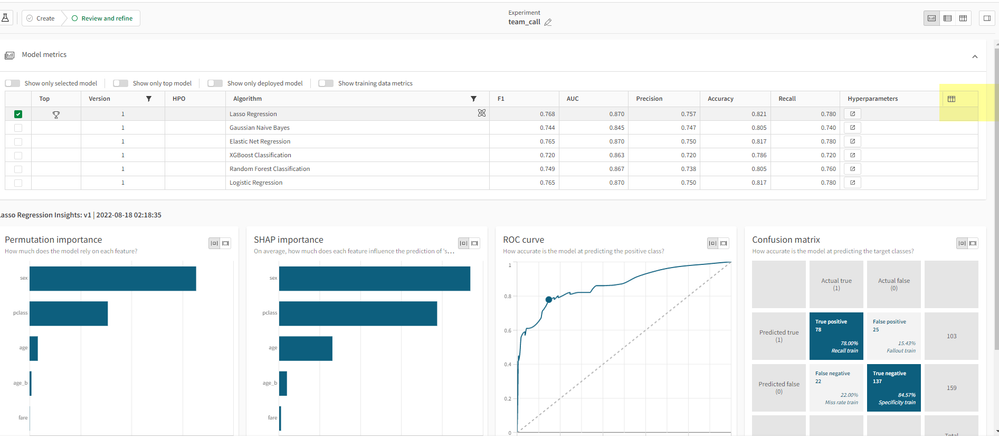

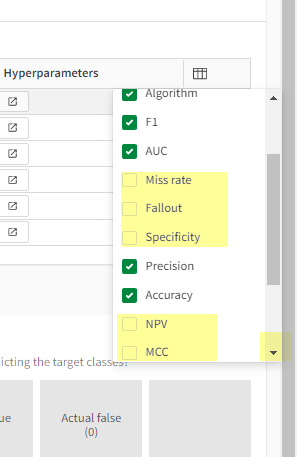

Qlik AutoML: How to list additional model metrics for binary target

For models with binary targets, there are extra model metrics which can be selected from the drop down in addition to the default metrics.

To access, follow these steps from the Model Experiment output.

The additional metrics are:

Miss rate

Fallout

Specificity

NPV

MCC

Threshold

Log Loss
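For readers who want to sanity-check these metrics, the Python sketch below computes several of them from a binary confusion matrix using their standard textbook definitions. It is not taken from Qlik AutoML, and the function name is hypothetical. Threshold and Log Loss are left out because they depend on predicted probabilities rather than on the four counts alone.

```python
import math


def extra_binary_metrics(tp, fp, tn, fn):
    """Compute miss rate, fallout, specificity, NPV and MCC from confusion-matrix counts."""

    def ratio(numerator, denominator):
        # Return NaN instead of raising when a denominator is zero.
        return numerator / denominator if denominator else float("nan")

    mcc_denominator = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {
        "miss_rate": ratio(fn, fn + tp),      # false negative rate
        "fallout": ratio(fp, fp + tn),        # false positive rate
        "specificity": ratio(tn, tn + fp),    # true negative rate
        "npv": ratio(tn, tn + fn),            # negative predictive value
        "mcc": ratio(tp * tn - fp * fn, mcc_denominator),
    }
```

Note that fallout and specificity always add up to 1, which is a quick way to check values read from the experiment output.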

-

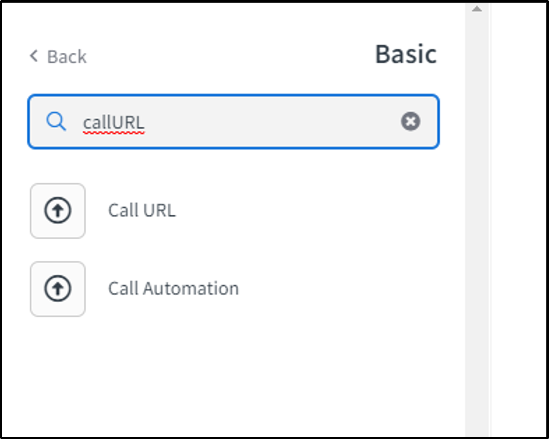

How to run Qlik AutoML prediction using Call URL block in Qlik Application Autom...

Qlik Application Automation has a "Call URL" block that allows you to call a URL when the automation needs data from a URL or when it needs to send data to a URL. This article explains how this block can be used to run a Qlik AutoML prediction right from the Qlik Application Automation.

The Call URL block calls a URL, typically from an API.

Configuring a call URL block to run a Qlik AutoML prediction

Things we need from the Qlik AutoML environment

- The base Url to use

Ex: https://{enter_your_automl_env_here}/api/v1/automl/graphql

- API key (you can get it from Profile Settings -> API key in your tenant).

- Deployment Id: You can get it from the Url of “ML Deployment” from the hub.

Ex: ML Deployment Url:

https://{automl_environment_url}/automl/deployed-model/5048076e-bea6-xxxx-b79e-a8a45b316b08

Deployment Id: 5048076e-xxxx-43fa-b79e-a8a45b316b08

Inputs for the block

- URL: You can get it from the Qlik AutoML environment as mentioned above.

- Method: POST

- Headers: You need to add two headers as a key-value pair.

- Authorization: Bearer {Enter API Key Here}

- Content-Type: application/json

How this works

Running a Prediction Dataset makes use of 2 API calls:

- Step1: we call the “List Predictions” Api which takes in “deploymentId” as input and returns the “predictionIDs”.

- Step2: We use this predictionID in the next API call to run the prediction. This process is explained in two steps below.

Please see below for details

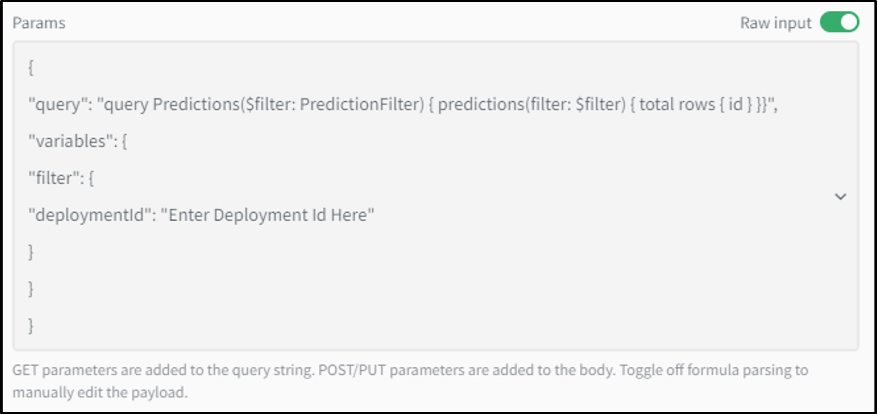

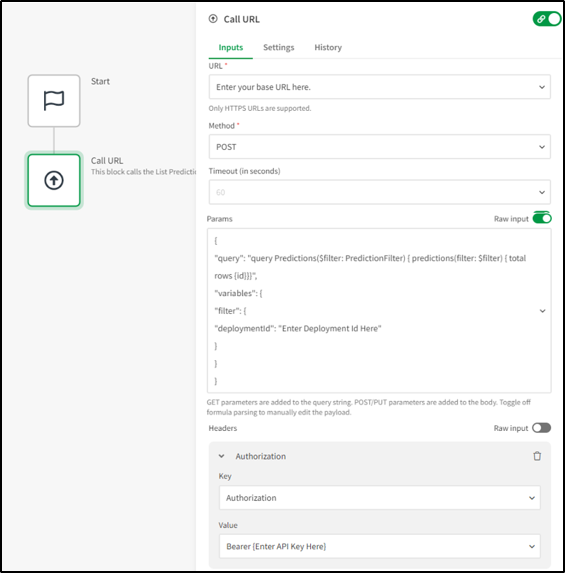

Step 1 (List Predictions): First we need to call the API to list the prediction datasets from a deployed model. For this, we need to add the proper “query” and “variables” params in the Call URL block.

You can use the ‘Raw Input’ option to enter these parameters.

Important note: Your ML Deployment must have at least one dataset prediction to run.

Copy the following JSON, replace the deploymentId with the deploymentId you got from the ML Deployment Url, and paste it into the automation after enabling the raw input option of ‘Params’.

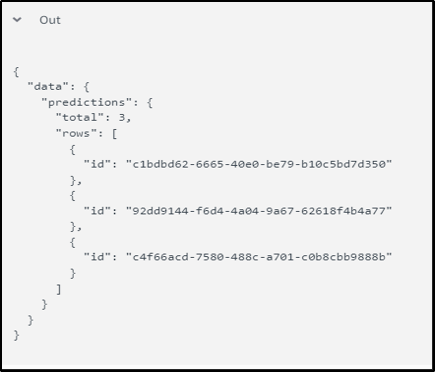

{ "query": "query Predictions($filter: PredictionFilter) {\r\n predictions(filter: $filter) { total rows { id } }}", "variables": { "filter": { "deploymentId": "5048076e-xxxx-43fa-xxxx-a8a45b316b08" } }}

The output from this Call URL block will contain the list of Ids of the prediction datasets from your selected ML Deployment.

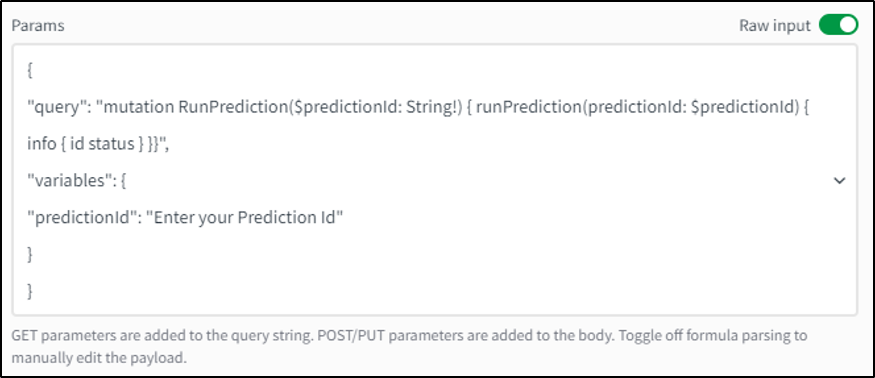

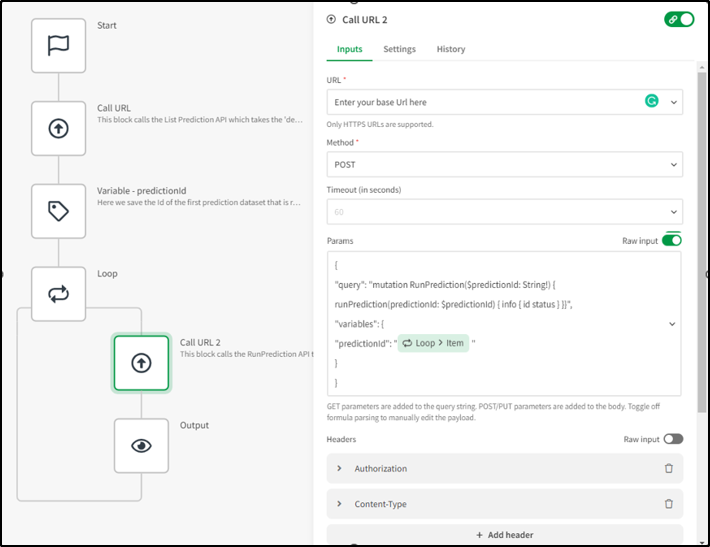

Step 2 (Run Prediction): Now we need to call the RunPrediction API with the prediction dataset Id we obtained in the previous step as the input. For this, we need to add the proper “query” and “variables” params in the Call URL block.

You can use the ‘Raw Input’ option to enter these params.

Copy the following JSON, replace the predictionID with the predictionID you got in the output of the previous step, and paste it into the automation after enabling the raw input option of ‘Params’.

{ "query": "mutation RunPrediction($predictionId: String!) { runPrediction(predictionId: $predictionId) { info { id status } }}", "variables": { "predictionId": "c1bdbd62-xxxx-40e0-xxxx-b10c5bd7d350" } }

Running this will run the prediction dataset whose Id you provided.

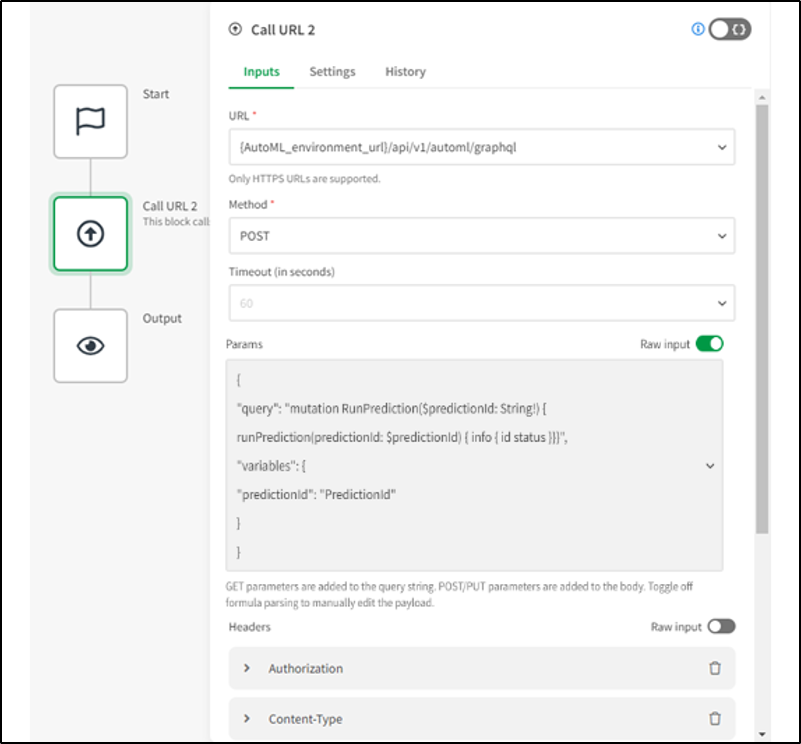

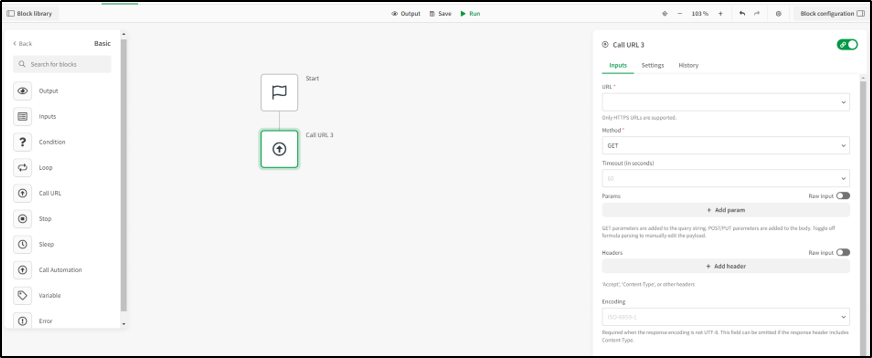
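The same two calls can be prepared outside the automation, for example from Python. The sketch below only builds the headers and request bodies described above and parses a response; it does not send anything. The query strings are copied from the JSON in this article, while the helper names and the response shape used in `extract_prediction_ids` (a standard GraphQL envelope with a top-level "data" key) are assumptions that should be checked against the real output of the Call URL block.

```python
import json

# Query and mutation text taken from the JSON shown in this article.
LIST_PREDICTIONS_QUERY = (
    "query Predictions($filter: PredictionFilter) { "
    "predictions(filter: $filter) { total rows { id } } }"
)
RUN_PREDICTION_MUTATION = (
    "mutation RunPrediction($predictionId: String!) { "
    "runPrediction(predictionId: $predictionId) { info { id status } } }"
)


def build_headers(api_key):
    """The two headers the Call URL block needs."""
    return {"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"}


def list_predictions_body(deployment_id):
    """JSON body for Step 1 (List Predictions)."""
    variables = {"filter": {"deploymentId": deployment_id}}
    return json.dumps({"query": LIST_PREDICTIONS_QUERY, "variables": variables})


def run_prediction_body(prediction_id):
    """JSON body for Step 2 (Run Prediction)."""
    variables = {"predictionId": prediction_id}
    return json.dumps({"query": RUN_PREDICTION_MUTATION, "variables": variables})


def extract_prediction_ids(response_json):
    """Collect prediction Ids; assumes {"data": {"predictions": {"rows": [{"id": ...}]}}}."""
    rows = response_json.get("data", {}).get("predictions", {}).get("rows", [])
    return [row["id"] for row in rows]
```

With the "requests" library, each body could then be sent as `requests.post(url, headers=build_headers(api_key), data=body)`, where `url` is the base Url described earlier.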

Steps to create an automation that will run on a schedule

- Create new automation and drag a Call URL block from the left panel onto the canvas.

- In the settings of the start block, set the run mode to ‘Scheduled’ and set ‘Scheduled Every’ to the desired option to make the automation run regularly.

- Now set up the Call Url block as explained above to call the “List Predictions” API. Enter your deployment Id in the parameters.

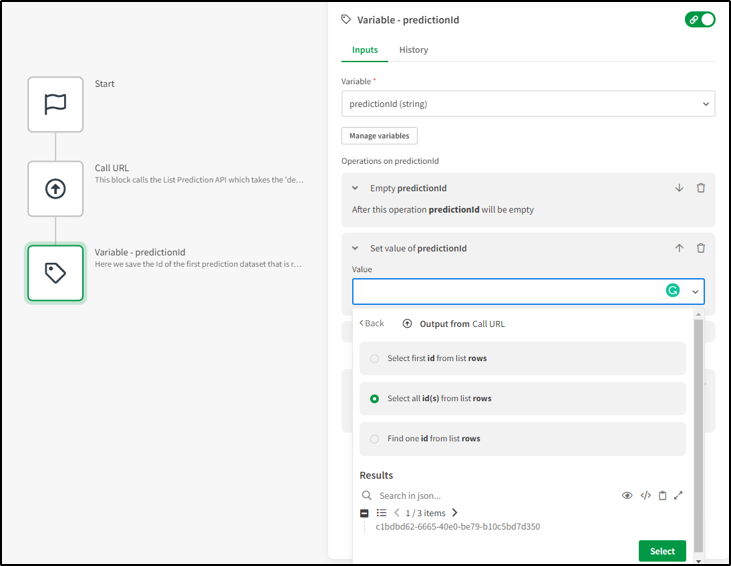

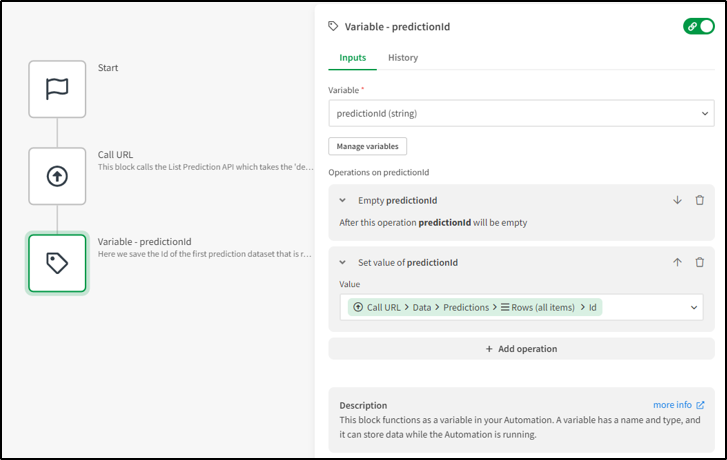

- Take a variable block from the left panel, add a string variable, and set the value to ‘id’ from the result of the above Call Url block by selecting the ‘Select all id(s) from list rows’ option.

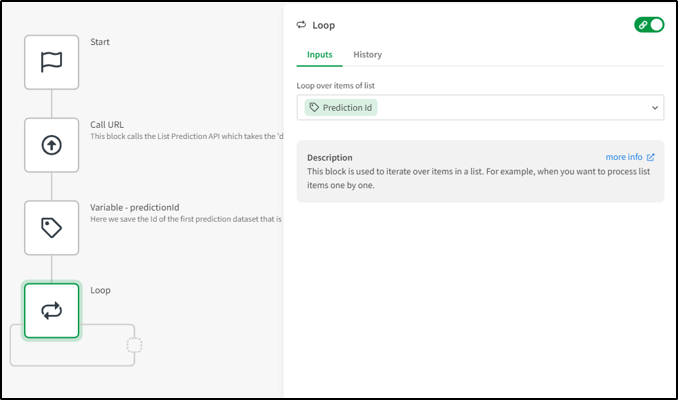

- Now take a loop block from the left panel and set it to loop through the values in the ‘PredictionId’ variable.

- Take another Call Url block from the left panel and set it to call the ‘Run Prediction’ API as explained above. Set the predictionID value to the ‘item in loop’ in the params. Add this block inside the loop.

- Add an output block and set it to get the output from the second Call Url block.

We have attached an example template showcasing the above automation as a JSON file. You can upload (import) this file into your application automation.

You can find the process to import the file into automation here: How-to-import-and-export-automations


-

Qlik AutoML: How to generate API Keys

Introduction

This article outlines the steps for generating API keys for AutoML in the Qlik Cloud environment. API keys can be used for real time prediction pipelines.

Steps

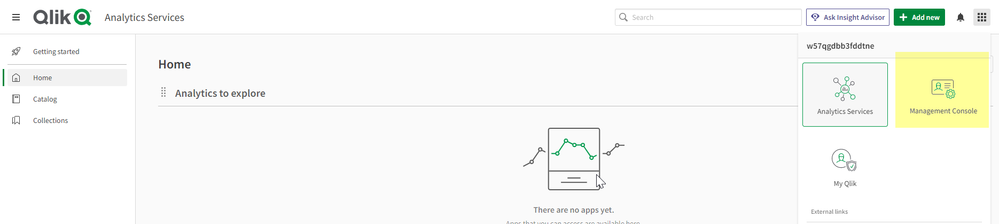

1. Log into your Qlik Cloud Environment

2. Navigate to Management Console

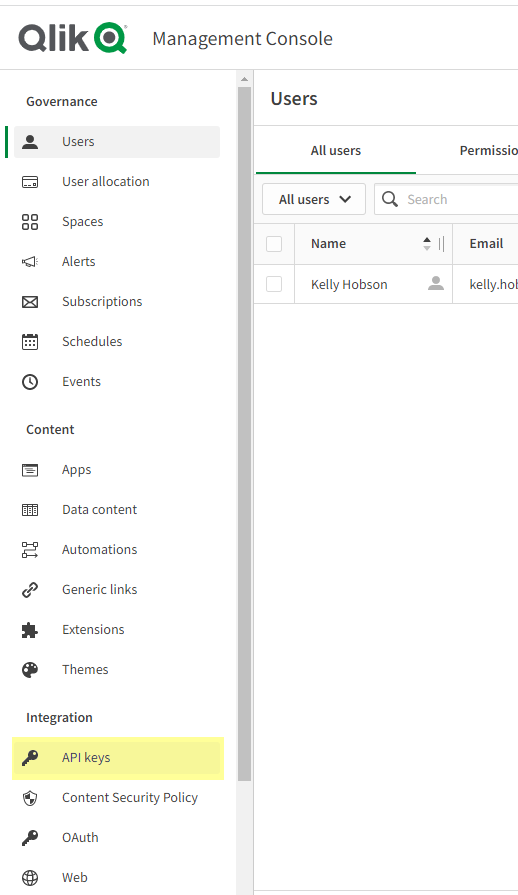

3. On the left-hand side, scroll down to Integration -> API Keys

4. Click to open this page. On the right-hand side, click 'Generate Keys'

An API key is generated.

Copy the API key and store it in a safe place.

Note: You need the developer role on your tenant to generate API keys.

-

Qlik AutoML: How to join predicted output to original trained dataset

After generating model predictions in Qlik AutoML on Qlik Cloud, you can view the data in a Qlik Sense App. This way the data is hosted in the Cloud and you can view your output with Qlik Sense while it remains staged in the Catalog.

Viewing predictions helps to understand the model output and is also useful in generating visualizations.

For this example, we pick up from the previous article (Qlik AutoML: How to upload, model, deploy, and predict on the Qlik Cloud platform) after we have generated the predictions.

Note: in this example, there is an 'ID' column linking all tables together in a 1-to-1 relationship.

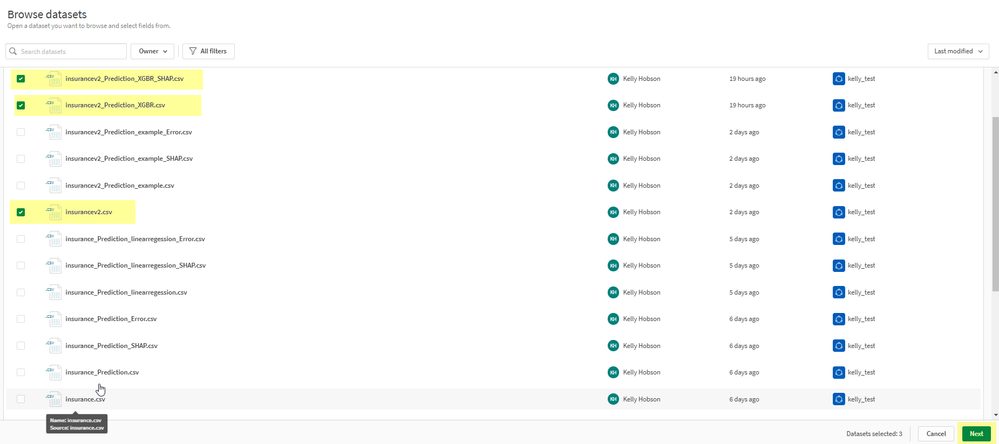

- Navigate to Qlik Cloud Console and Catalog. In this example, I've generated predictions for a Gradient Boost model (_XGBR)

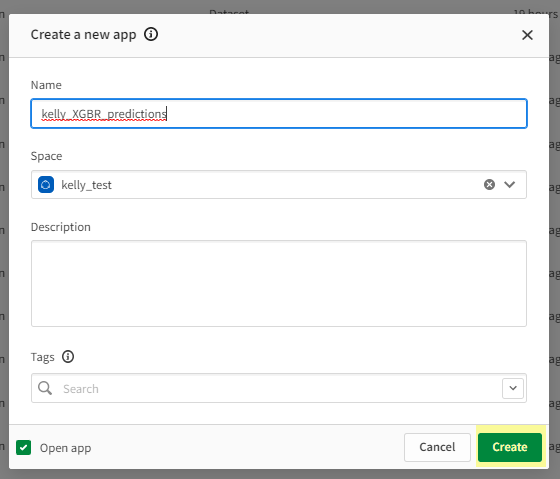

- Go to Add new -> "New Analytics App"

- Give it a meaningful name and click "Create"

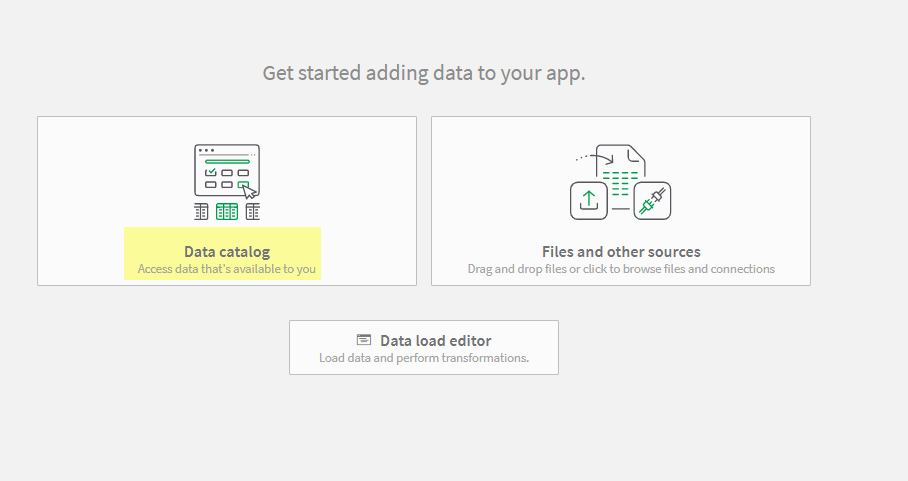

- In this example, we will generate from files in Data Catalog

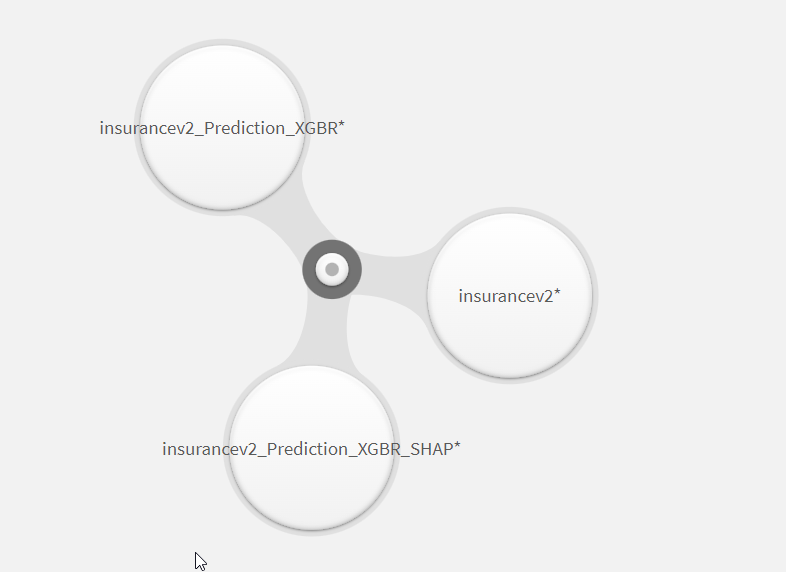

- Select ….Prediction_XGBR_SHAP, …Prediction_XGBR and the original dataset insurancev2.csv, then click 'Next' on the bottom right, then 'Load into App'

- This will display the 'Data Manager' page. Click 'Apply' on the recommended associations on the right hand side of the page

- Now the tables will be linked by the common 'ID' value between all tables

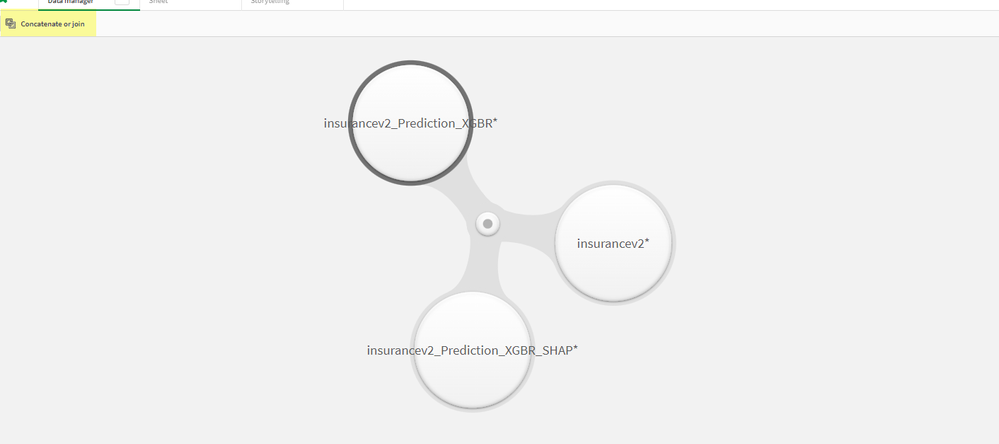

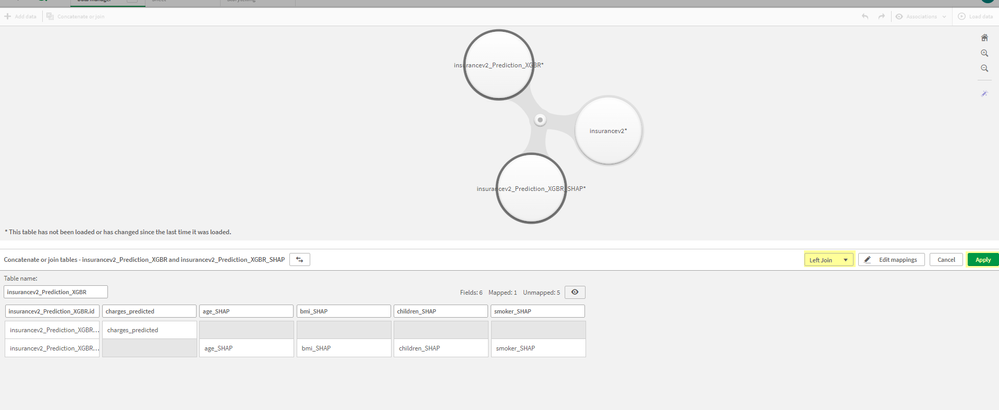

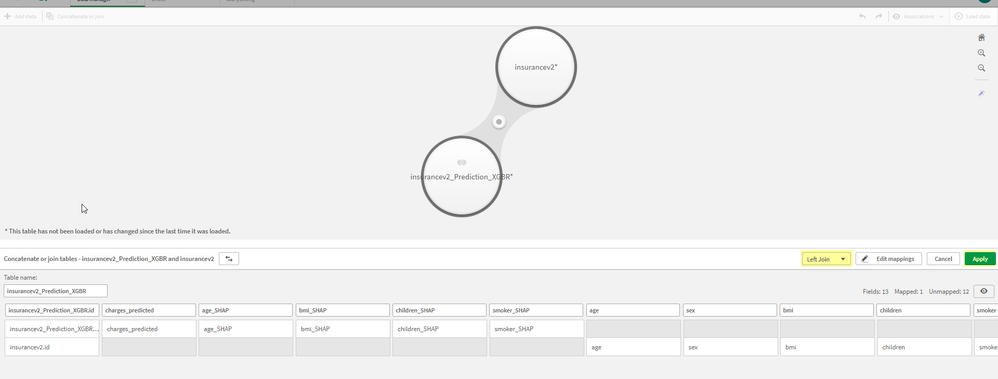

- In order to combine the datasets into 1 table for additional analysis, we will do a simple left join on ID. Select 1 of the tables and then click 'Concatenate or Join'

Will produce:

Select Left Join then Apply

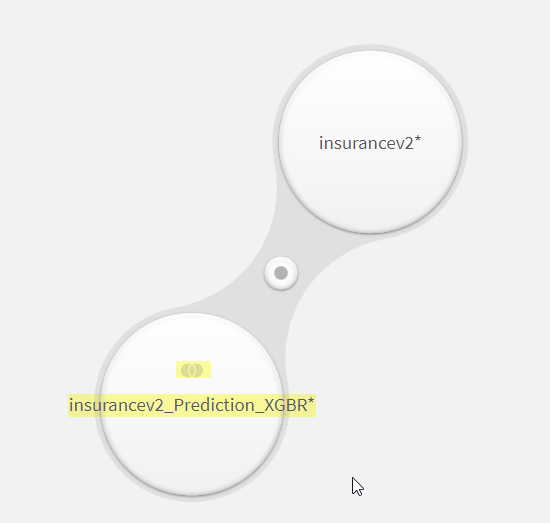

Produces a combined table:

- Now follow the same steps to combine insurancev2_Prediction_XGBR with insurancev2. Select insurancev2_Prediction_XGBR and click 'Concatenate or join'. Select left join and apply.

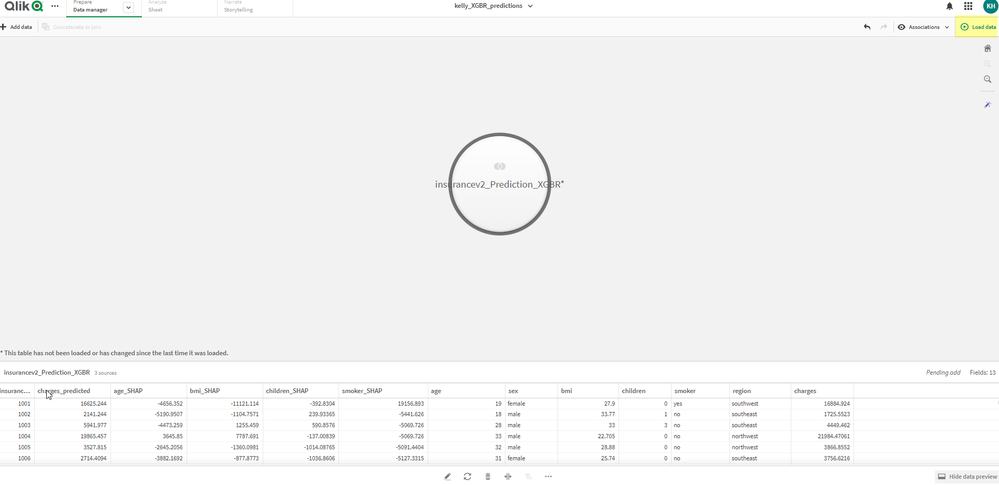

- Now, we have 1 table with columns from all three tables which were joined by 'ID'. Click on 'Load Data' in top right corner to load the data into the app and begin making visualizations or exploring SHAP values.
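To make the effect of the two left joins concrete, here is a minimal Python sketch of the same idea using plain dictionaries. It is an illustration only, not what the Data Manager runs internally. It assumes a 1-to-1 relationship on 'ID' as noted above; the function name is hypothetical, and any sample values you pass in are your own.

```python
def left_join(left_rows, right_rows, key="ID"):
    """Left join two lists of dict rows on `key`, assuming at most one match per key."""
    lookup = {row[key]: row for row in right_rows}
    joined = []
    for row in left_rows:
        merged = dict(row)
        # Rows with no match keep only their original columns.
        for column, value in lookup.get(row[key], {}).items():
            if column != key:
                merged[column] = value
        joined.append(merged)
    return joined


def combine_three(original, predictions, shap_values, key="ID"):
    """Join the original data with the prediction table, then with the SHAP table."""
    return left_join(left_join(original, predictions, key), shap_values, key)
```

Calling `combine_three` with the rows of insurancev2, the prediction table and the SHAP table would give one row per ID carrying the columns of all three tables.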

After clicking 'Load Data':

More info to come about Visualizations you can create in Qlik Sense!


-

Qlik AutoML: Overview of SHAP values

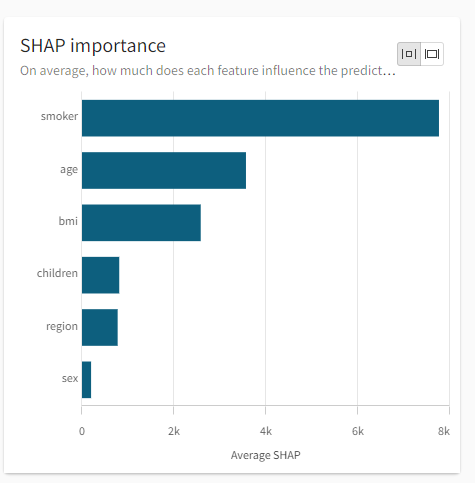

The goal of this article is to give an overview of SHAP values which are generated from Qlik AutoML model predictions. SHAP values serve as a way to measure variable importance and how much they influence the predicted value of the model.

SHAP Importance explained

SHAP Importance represents how a feature influences the prediction of a single row relative to the other features in that row and to the average outcome in the dataset.

The goal of SHAP is to explain the prediction of an instance x by computing the contribution of each feature to the prediction. The SHAP explanation method computes Shapley values from coalitional game theory. The feature values of a data instance act as players in a coalition. Shapley values tell us how to fairly distribute the "payout" (the prediction) among the features. A player can be an individual feature value or a group of feature values.

For more information and mathy fun please reference this chapter from Interpretable Machine Learning:

https://christophm.github.io/interpretable-ml-book/shap.html

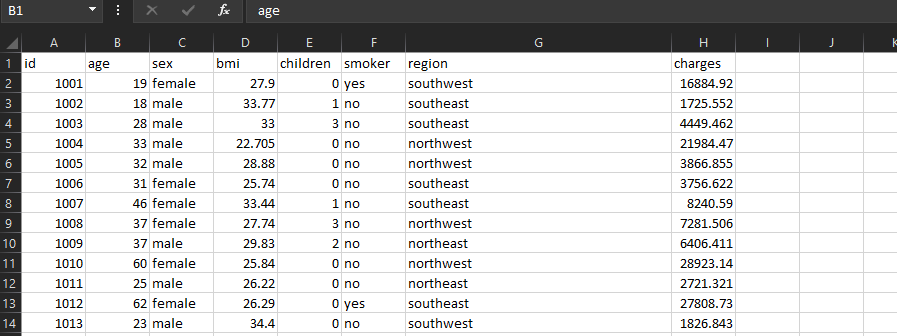

Example

Medical Cost Personal dataset: https://www.kaggle.com/datasets/mirichoi0218/insurance

Note: I added an ID column but did not include it as a feature.

Features:

age, sex, bmi, children (number of), smoker, region

Target:

charges

I uploaded this dataset into Qlik Cloud and generated 4 models. Random Forest Regression was the champion model.

From the UI, we see the SHAP Importance visualization. This shows that smoker, age, and bmi are the top 3 prediction influencers, meaning their values have the most effect on the predicted charges.

Understanding how the values are calculated

I deployed the model and generated predictions from the Qlik Cloud interface. At this point you can open the data as a Qlik Sense app and combine the predicted output table with the original dataset (see Qlik AutoML: How to join predicted output to original trained dataset).

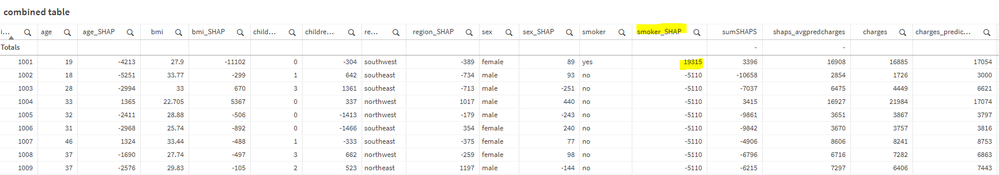

This is an example of the original table combined with the SHAP values by record.

Click the image below to enlarge.

Example interpretation of record 1001:

Smoker_SHAP value is 19315, which represents the following:

How much does Smoker=Yes affect the amount of charges, given that the account holder is female, 19 years old, has a bmi of 27.9, has no children, and is in the Southwest region?

The sum of the Shapley values for each row is how much that row's prediction differs from the average.

Average Predicted Charges (across all records) = 13511.5

Sum of SHAP values = 3396

Predicted charges manual SHAP calculation = 16908

Where:

sumSHAPS is a calculated column of the sum of the SHAP values in the record.

f(x) = age_SHAP+sex_SHAP+bmi_SHAP+smoker_SHAP+children_SHAP+region_SHAP

shaps_avgpredcharges is sumSHAPS+average(predicted_charges)

f(x) = sumSHAPS+13511.5

Charges is from the original dataset

Charges_predicted is the model predicted value
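The calculation defined above can be reproduced with a few lines of Python. This is a small sketch using only the figures quoted in this article (a SHAP sum of 3396 and an average predicted charge of 13511.5); the function names mirror the calculated columns, and the column naming pattern `<feature>_SHAP` follows the article.

```python
FEATURES = ["age", "sex", "bmi", "smoker", "children", "region"]


def sum_shaps(record):
    """Sum the per-feature SHAP columns of one record (the sumSHAPS column)."""
    return sum(record[f"{name}_SHAP"] for name in FEATURES)


def shaps_avgpredcharges(sum_shaps_value, average_predicted_charges):
    """SHAP-based estimate of the prediction: sumSHAPS plus the average prediction."""
    return sum_shaps_value + average_predicted_charges


# Figures for record 1001 as quoted in the article.
estimate = shaps_avgpredcharges(3396, 13511.5)  # 16907.5, shown as 16908 after rounding
```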

Value of generated SHAP values

The _SHAP values can be used in visualizations and further analysis to understand which features are driving the model predictions. For record 1001, smoking increased the predicted charges, while for non-smokers the smoker feature reduced them.

Notes

- I rounded the numeric values in the combined table to nearest whole number for readability in the article.

Ex: f(x) = round(bmi_SHAP,1)

- Qlik AutoML Random Forest uses approximate Shapley values. This is why, in our example, shaps_avgpredcharges does not equal charges_predicted but is fairly close.

- Average Predicted Charges, f(x) = average(charges_predicted)


-

AutoML Kraken: Project Sharing best practice

Before accepting a Project share from another user (by clicking either the link or the button in the share invite email) you need to be logged in to the AutoML platform.

If you are prompted to login when accepting the share, the share will not be successful; accepting the share when you're already logged in will result in a successful share.

Environment

- Qlik AutoML

- #Kraken


-

AutoML Kraken: Snowflake naming best practices

Special characters in Snowflake column names may prevent the dataset from being processed correctly. Avoid using < > [ ] { } characters in your column names in Snowflake tables.
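A quick pre-check for this guideline could look like the Python sketch below. It only flags the six characters named above; the function name and the sample column names in the usage note are hypothetical, and other characters may also cause problems.

```python
# The characters the guideline says to avoid in Snowflake column names.
FORBIDDEN_CHARACTERS = set("<>[]{}")


def invalid_columns(column_names):
    """Return the column names that contain any of the forbidden characters."""
    return [name for name in column_names if FORBIDDEN_CHARACTERS & set(name)]
```

For example, `invalid_columns(["ID", "price[usd]", "region"])` would flag only "price[usd]".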

Environment

- Qlik AutoML

- #Kraken


-

AutoML Kraken: Domo naming best practices

Below are guidelines when using Domo as a source data provider.

1. Domo datasets with a forward slash ("/") in the dataset name may result in the ingestion of two datasets, each named on either side of the slash. Avoid using a slash in the dataset name.

2. If your dataset contains column names with spaces (e.g. "Annual Revenue") your dataset may fail to be processed correctly. Avoid spaces in column names.
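The two guidelines above can be checked before ingestion with a short Python sketch such as the following. The function names are hypothetical, and replacing the offending characters with underscores is just one possible convention, not a Qlik or Domo recommendation.

```python
def check_domo_naming(dataset_name, column_names):
    """Return a list of human-readable problems with the dataset and column names."""
    problems = []
    if "/" in dataset_name:
        problems.append(f"dataset name contains a slash: {dataset_name!r}")
    for name in column_names:
        if " " in name:
            problems.append(f"column name contains a space: {name!r}")
    return problems


def suggest_name(name):
    """Suggest a safer name by replacing slashes and spaces with underscores."""
    return name.replace("/", "_").replace(" ", "_")
```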

Environment

- Qlik AutoML

- #Kraken


-

AutoML Kraken: avoid carriage returns or other non-standard new line characters

To avoid unexpected errors, ensure that your datasets - both training and apply - do not contain carriage returns and other non-standard "new line" characters.
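One way to look for such characters before uploading is sketched below in Python. Which characters count as non-standard is an assumption here: the sketch flags carriage return, vertical tab, form feed, NEL, and the Unicode line and paragraph separators. The function names are hypothetical.

```python
# Assumption: these are the characters treated as non-standard line breaks.
NON_STANDARD_BREAKS = "\r\x0b\x0c\x85\u2028\u2029"


def find_line_break_cells(rows):
    """Return (row_index, column) pairs for cells containing a flagged character."""
    hits = []
    for index, row in enumerate(rows):
        for column, value in row.items():
            if isinstance(value, str) and any(ch in value for ch in NON_STANDARD_BREAKS):
                hits.append((index, column))
    return hits


def strip_line_breaks(value, replacement=" "):
    """Replace every flagged character in a string with `replacement`."""
    for ch in NON_STANDARD_BREAKS:
        value = value.replace(ch, replacement)
    return value
```

Both the training dataset and the apply dataset should be checked, since the guideline applies to each.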

Environment

- Qlik AutoML

- #Kraken


-

AutoML Kraken: Error requesting stats. sql: no rows in result set

When viewing or refreshing the list of available datasets, you may see one or more "ghost" datasets that have been deleted from your data provider and are no longer available for analysis or predictions.

When you select one of these datasets, a yellow caution icon is displayed on the right-hand side of the screen, with the hover message "Error requesting stats. sql: no rows in result set".

Because the dataset is not actually available, you will be unable to proceed with using the dataset for analysis or predictions. This situation will be resolved in a future update, so that datasets that are no longer available will not be displayed in the dataset list.

This affects Qlik AutoML on the Kraken platform.

Environment

- Qlik AutoML

- #Kraken


-

How to predict customer churn risk using Qlik Predict and Qlik Automate

The automation built in this article won't be a perfect fit for every churn problem since every company has different customers and different data. The main goal of this article is to provide the right pointers and tips to build your own churn solution and to show what's possible when it comes to machine learning and automations.

Content

- Prerequisites

- 1. Customer records

- 2. Qlik Predict model

- 3. Prepare CRM

- 4. MySQL database

- 5. Qlik Sense App

- 6. Marketing campaign

- Automation

- Automation run mode

This article explains how to build an automation that uses Qlik Predict to predict the churn risk for customers. In this example, the following tools and systems are used:

- Qlik Automate

- Qlik Sense: to analyze churn risk in an app

- Qlik Predict: for training and deploying machine learning models

- MySQL database: to feed customer data (inc. churn risk) to the Qlik Sense app

- Salesforce CRM: as a source for customer records (Accounts)

- Email: to alert stakeholders when churn risk is too high

- Marketo: to auto-assign customers to marketing campaigns if their churn risk is too high

The following image provides an overview of how these systems are tied together by the automation:

Prerequisites

Before the automation can be built, several steps must be taken to ensure all systems can work together effectively.

1. Customer records

Export your CRM customer records to a CSV file. This should include both customers who have churned and existing customers who have not (yet) churned.

2. Qlik Predict model

Unfamiliar with Qlik Predict? See:

Use the dataset from the previous step to train a model in Qlik Predict. Once the model is trained, deploy it so it can be used in automation.

3. Prepare CRM

Not every CRM contains fields to store churn risk (0% - 100%) or a churn prediction (yes/no). If you plan on writing this information back to your CRM, add these fields to the customer object.

4. MySQL database

Create a new MySQL database to store customer information together with the churn risk. This database will be used to import records to your Qlik Sense App.

You can use a different type of database or directly load the customers by creating a new connection in the Load Script.

5. Qlik Sense App

Build a new Qlik Sense App that you'll use to analyze the customer records and their churn risk.

Do not forget to feed the app with data from the previous step.

Need an example? See the Churn analysis demo app

6. Marketing campaign

We'll automatically assign customers whose churn risk is too high to a marketing campaign that focuses on reducing churn. In this example, we'll be using Marketo, but this can be changed to any marketing campaign solution you use in your organization.

Our Marketo instance is set up to assign leads (customers) who are added to a certain list to a marketing campaign that's connected to that list.

Automation

Once the prerequisites are completed, the automation can be built. Go to your Qlik Sense tenant and create a new automation.

- Search for the List New Updated And Deleted Accounts Incrementally block in the Salesforce connector and add it to the automation. When this block is executed for the first time, it will retrieve all account records from Salesforce. It will also set a pointer with the DateTime of its last execution. On subsequent executions, the block will only retrieve accounts that were created or updated after the pointer, and it will then update the pointer.

- We have no interest in predicting the churn risk for deleted customers so add a Filter List block and set the condition to only include records where IsDeleted is 'false'.

- Add a Condition block to verify if any new or updated records were received. If the output of the Filter List block is empty, a Stop block should be executed. This block will stop the automation and requires no further configuration.

- Add a Transform List block to transform the field names of the objects returned by the Filter List block into names that correspond with the fields in your Qlik Predict model. You can skip this step if you used the raw output from your CRM to train the model and didn't apply any transformations before training.

- The list of accounts is now ready for predictions. Search for the Qlik Predict connector and add the Generate Multiple Realtime Prediction Results block to the automation. Specify your model's Deployment ID in the block's Inputs tab. Set the Primary Key in the Inputs tab to the name of the field your CRM system uses for the customer object's id. For Salesforce this is the Id field, but we renamed it to RECORDID in the Transform List block. Specifying this Primary Key will make it easier to map the right predictions to the right customer later.

- Add a Merge Lists block to merge the predictions from Qlik Predict back into the customer records from the CRM that are output by the Filter List block. The Primary Key specified in the previous step is what links each prediction to its customer record.

- Now that we have a list containing both the customer records and the predictions, we can write them back to the CRM. Add an Update Account block from the Salesforce connector to the loop created by the Merge Lists block. Update the fields that contain the churn risk and churn prediction. In Salesforce, these need to be created as custom fields.

- Do the same for the MySQL database. Add the Upsert Record block of the MySQL connector after the Update Account block and configure it to send all relevant fields to the database.

- Add a Condition block in the loop that verifies if the predicted churn risk is greater than a certain threshold (we're using 0.75, which corresponds to a churn risk of 75%). Add a Send Mail block to the Yes part of the Condition block so that an email is sent every time a customer exceeds the churn risk threshold. Make sure to configure the Send Mail block so it contains an informative message. Feel free to add alerts to other systems, such as Microsoft Teams.

- Add the blocks that interact with the Marketo marketing campaigns.

- Add a Create Or Update Lead block from the Marketo connector right before the Condition block. Configure this block with the customer data from the CRM so it has the necessary information to create or update leads.

- Add an Add Lead To List block after the Send Mail block in the Yes part of the Condition block. A churn-focused Marketo marketing campaign is configured to monitor this list and import any newly added leads.

- Add a Remove Lead From List block to the No part of the Condition block. This will make sure that customers without a high churn risk do not receive wrongly targeted content from the marketing campaign.

- Add a Do Reload block from the Qlik Cloud connector after the loop from the Merge Lists block to reload your Qlik Sense app that analyzes customer churn risk. Make sure to configure the app's load script so it imports the customer records from the MySQL database.
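The data flow of the blocks above (filter out deleted accounts, merge predictions by primary key, branch on the threshold) can be sketched in plain Python. This is not the Qlik Automate or Qlik Predict API. The function names, the `churn_risk` field name, and the sample records are hypothetical; only `IsDeleted`, `RECORDID`, and the 0.75 threshold come from the article.

```python
CHURN_THRESHOLD = 0.75  # corresponds to a churn risk of 75%


def filter_not_deleted(accounts):
    """Mimic the Filter List step: keep records where IsDeleted is false."""
    return [a for a in accounts if not a.get("IsDeleted", False)]


def merge_predictions(accounts, predictions, key="RECORDID"):
    """Mimic the Merge Lists step: join predictions to accounts on the primary key."""
    by_key = {p[key]: p for p in predictions}
    merged = []
    for account in accounts:
        prediction = by_key.get(account[key])
        if prediction is not None:
            merged.append({**account, **prediction})
    return merged


def route_by_risk(records, threshold=CHURN_THRESHOLD, key="RECORDID"):
    """Mimic the Condition step: split record ids into Yes and No branches."""
    add_to_list, remove_from_list = [], []
    for record in records:
        if record["churn_risk"] > threshold:
            add_to_list.append(record[key])       # Yes: send mail, add lead to list
        else:
            remove_from_list.append(record[key])  # No: remove lead from list
    return add_to_list, remove_from_list


# Hypothetical records after the Transform List step (Id renamed to RECORDID).
accounts = [
    {"RECORDID": "001", "Name": "Acme", "IsDeleted": False},
    {"RECORDID": "002", "Name": "Globex", "IsDeleted": False},
    {"RECORDID": "003", "Name": "Initech", "IsDeleted": True},
]
predictions = [
    {"RECORDID": "001", "churn_risk": 0.82},
    {"RECORDID": "002", "churn_risk": 0.31},
]

merged = merge_predictions(filter_not_deleted(accounts), predictions)
add_to_list, remove_from_list = route_by_risk(merged)
print(add_to_list, remove_from_list)  # ['001'] ['002']
```

Joining on a single primary key keeps the merge independent of the order in which predictions are returned.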

Automation run mode

Since this automation only processes new and updated records from the CRM, it's best to configure its run mode to Scheduled to make sure the automation is executed every x minutes. In this example, we've used 15 minutes but this will depend on your use case and type of customers.

For information about automation run modes, see Working with run modes.

Attached to this article, you'll find an exported version of the above automation as 'Predict Customer Churn Risk automation.json'. See the How to import and export automations article to learn how to import exported automations.


-

Qlik Cloud - How to enable predictive analytics (Qlik AutoML)

Description

Qlik AutoML empowers users with automated machine learning capabilities to build and deploy machine learning models with native connectors to leading enterprise data warehouses and business intelligence systems.

Prerequisites:

- Tenant admin role

Step by step:

Open the management console and go to "Settings". Right below the "Feature control" section, enable the "Machine learning endpoints" option.

Once the "Machine learning endpoints" option has been activated, the connector will appear for all users.

Environment

- Qlik Cloud Data Services

Related Content