Recent Blog Posts


Qlik Talend Job Server and Talend Runtime - New Security Patches Available Now

Hello Qlik Users,

A critical security issue in the Talend JobServer and Talend Runtime has been identified. This issue was resolved in later patches, which are already available. Details can be found in the Security Bulletin Critical Security fix for the Qlik Talend JobServer and ESB Runtime (CVE-2026-XXXX).

Affected Software

- All versions of Talend JobServer before TPS-6017 (8.0) or TPS-6018 (7.3).

- All versions of Talend Runtime before 8.0.1.R2026-01-RT or 7.3.1-R2026-01

Recommendation

Upgrade at the earliest opportunity. The following table lists the patch versions addressing the vulnerability (CVE-2026-pending).

Always update to the latest version. Before you upgrade, check if a more recent release is available.

| Product | Patch | Release Date |
| --- | --- | --- |
| Talend JobServer 8.0 | TPS-6017 | January 16, 2026 |
| Talend JobServer 7.3 | TPS-6018 | January 16, 2026 |
| Talend Runtime 8.0 | 8.0.1.R2026-01-RT | January 24, 2026 |
| Talend Runtime 7.3 | 7.3.1-R2026-01 | January 24, 2026 |

The Remote Engine and Dynamic Engine are not impacted by this vulnerability. However, we will update the embedded JobServer in version 2.14.1 of the Remote Engine, available in February 2026.
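As a rough aid for comparing a Talend Runtime version string against the fixed releases listed in this post, the sketch below compares the monthly release tag. It assumes the version string carries a tag of the form R<year>-<month> (as in 8.0.1.R2026-01-RT). The function names are hypothetical, and JobServer patches (TPS numbers) are not covered.

```python
import re

# Both fixed Talend Runtime releases named in the post carry the R2026-01 tag.
FIXED_RELEASE = (2026, 1)


def release_month(version: str) -> tuple[int, int]:
    """Extract (year, month) from a tag such as 'R2026-01' inside a version string."""
    match = re.search(r"R(\d{4})-(\d{2})", version)
    if match is None:
        raise ValueError(f"no R<year>-<month> tag found in {version!r}")
    return int(match.group(1)), int(match.group(2))


def runtime_is_patched(version: str) -> bool:
    """True if the monthly release tag is at or after the fixed release."""
    return release_month(version) >= FIXED_RELEASE


if __name__ == "__main__":
    # The second string is a made-up older version, used only for illustration.
    for version in ("8.0.1.R2026-01-RT", "7.3.1-R2025-11"):
        status = "patched" if runtime_is_patched(version) else "needs upgrade"
        print(version, status)
```

This only compares the tag; it does not replace checking the Security Bulletin for the exact affected builds.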

Thank you for choosing Qlik,

Qlik Support

Upgrade advisory for Qlik Sense on-premise November 2024 through November 2025: ...

Edit January 21st 2026: Updated the title and content to include the November 2024 release

During recent testing, Qlik has identified an issue that can occur after upgrading Qlik Sense on-premise to specific releases. While the upgrade completes successfully, some environments may experience problems with ODBC-based connectors after the upgrade.

The issue is upgrade path dependent and relates to connector components that are included as part of the Qlik Sense client-managed installation.

The advisory applies to the following releases:

- Qlik Sense Enterprise on Windows November 2024

- Qlik Sense Enterprise on Windows May 2025

- Qlik Sense Enterprise on Windows November 2025

Recommendation: After upgrading Qlik Sense on-premise, verify your connector functionality as part of your post-upgrade checks.

How can I identify if I am affected?

The issue can typically be identified by files that are missing after the upgrade. In this example, the Athena connector is not working, and the following file is missing:

C:\Program Files\Common Files\Qlik\Custom Data\QvOdbcConnectorPackage\athena\lib\AthenaODBC_sb64.dll

In this example, all ODBC connectors stopped working:

C:\Program Files\Common Files\Qlik\Custom Data\QvOdbcConnectorPackage\QvxLibrary.dll

With the QvxLibrary.dll missing, both existing and newly created ODBC connections will fail.
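As part of a post-upgrade check, a small script can confirm whether the files mentioned above are present. This is a minimal sketch, not an official Qlik tool: it checks only the two paths named in this post, and the function name is hypothetical. Other connectors may depend on files that are not listed here.

```python
from pathlib import Path

# The two files named in the advisory; this list is not exhaustive.
EXPECTED_FILES = [
    r"C:\Program Files\Common Files\Qlik\Custom Data\QvOdbcConnectorPackage\athena\lib\AthenaODBC_sb64.dll",
    r"C:\Program Files\Common Files\Qlik\Custom Data\QvOdbcConnectorPackage\QvxLibrary.dll",
]


def find_missing(paths):
    """Return the paths that do not exist as regular files."""
    return [p for p in paths if not Path(p).is_file()]


if __name__ == "__main__":
    missing = find_missing(EXPECTED_FILES)
    if missing:
        print("Missing connector files:")
        for path in missing:
            print("  " + path)
    else:
        print("All checked connector files are present.")
```

Run it on the Qlik Sense server after the upgrade. A missing file suggests the environment is affected, but a clean result does not prove that every connector works.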

How do I resolve the issue?

A fix will be delivered in upcoming patches. Stay up to date with the most recent version by reviewing our Release Notes.

Workaround

If your connectors have been impacted by this upgrade, roll back your ODBC connector package to the previously working version based on a pre-update backup. See How to manually upgrade or downgrade the Qlik Sense Enterprise on Windows ODBC Connector Packages for details.

The workaround is intended to be temporary. Apply the fixed Qlik Sense Enterprise on Windows patch for your respective version as soon as it becomes available.

If you are unable to perform a rollback, please contact Support.

If you have any questions, we're happy to assist. Reply to this blog post or take your queries to our Support Chat.

Thank you for choosing Qlik,

Qlik Support

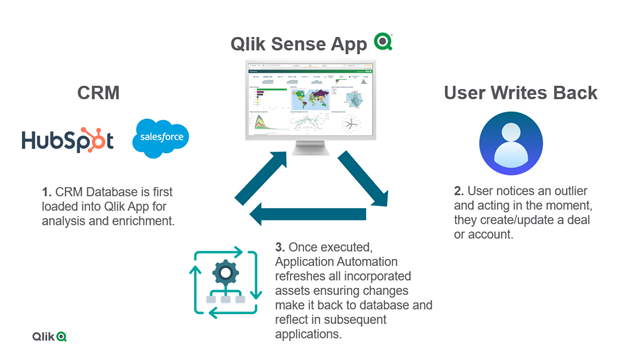

Creating a write back solution with Qlik Cloud is now possible!

Static, read-only dashboards are a thing of the past compared to what's possible now in Qlik Cloud.

‘Write back’ solutions offer the ability to input data or update dimensions in source systems, such as databases or CRMs, all while staying within a Qlik Sense app.

The solution incorporates both Qlik Cloud and Application Automation to enable users to input data from a dashboard or application and run the appropriate data refresh across the source system as well as the analytics.

Example Use Cases:

- Ticket/Incident Creation

  - Create a ticket or incident in JIRA or ServiceNow.

- Data Changes

  - Update Deals/Accounts in a CRM like HubSpot or Salesforce.

- Data Annotations

  - Add a comment to one or more records in a source system.

This new feature is possible with all of the connectors located in Application Automation, including:

- CRMs like HubSpot or Salesforce

- Databases like Snowflake, Databricks, Google BigQuery

- SharePoint

- and more!

Below you can see a technical diagram based on using Application Automation for a write back solution.

The ability to write back in Qlik Cloud is a game changer for customers who want to operationalize their existing Qlik Sense applications to enhance decision making right inside an app where the analytics live. This not only streamlines business processes across an ever-growing data landscape, but it also enables users to act in the moment. With Application Automation powering the write back executions, customers can unlock more value across their data and analytics environment.

For a more hands-on tutorial, please see the video here.


Qlik DataTransfer Deprecation Notice for Q1 2026

Qlik DataTransfer will be officially End-of-Life by the end of Q1 2026.

It will be removed from the Product Downloads site later this year and will no longer be available for new installations or upgrades. Qlik will provide support until April 30, 2026.

To ensure a smooth transition, we recommend you begin utilizing Qlik Data Gateway – Direct Access, the supported alternative.

Note that the initial release of Qlik DataTransfer (November 2024, version 10.4.0) will not work after June 24th, 2025. If you still need to use Qlik DataTransfer beyond June, upgrade to the Service Release version 10.4.4.

How do I get started with Qlik Data Gateway - Direct Access?

We have compiled a list of resources to assist you in adopting Qlik Data Gateway - Direct Access:

- Setup Guide: Qlik Data Gateway – Direct Access

- Walkthrough Video: Qlik Data Gateway – Direct Access

- Troubleshooting Video: STT - Troubleshooting Qlik Data Gateway - Direct Access

Additionally, for those needing feature parity with Qlik DataTransfer, we recommend pairing with the File Connector via Direct Access, REST Connector via Direct Access, and the generic ODBC Connector.

Further Resources:

- Connector Factory Blog: Connector Factory January and February 2025 Releases

- SaaS in 60: File Connector via Direct Access Data Gateway - SaaS in 60

We will share an update later this year with the exact deprecation and end-of-support dates.

For assistance, please contact Qlik Support. Questions on how to contact Qlik Support.

Thank you for choosing Qlik,

Qlik Support

Improving your Live Chat experience with Qlik Support: scheduled maintenance Feb...

We're improving your Live Chat experience!

The maintenance window was moved from the 5th to the 12th.

When will the maintenance take place?

It will begin on the 12th of February, 2026, at 9 AM EST and is expected to last for three hours.

How will I be impacted?

No interruption is expected for the maintenance on the 12th.

In the event of unplanned complications, you will still be able to chat with the bot during this time to get answers to most of your questions, but we will be unable to connect you to a live agent. If you require assistance beyond what the bot can provide during this time, please log a support ticket directly on the customer portal.

We will update this blog post once the maintenance has been completed.

Thank you for choosing Qlik,

Qlik Support

Qlik Talend Studio 2026-06 will move from Java 17 to Java 21

To all Talend customers,

This is an advance notice that, to enhance both your security and experience, Java 21 will be required to launch Talend Studio starting with the 2026-06 release.

This change only concerns the Talend Studio desktop application. It has no impact on the Jobs and Services you build with it, which will continue being compliant with Java 17 runtime environments (and Java 8 for Big Data Jobs).

You have multiple options to make a smooth transition:

- The Talend Studio embedded upgrade mechanism (Feature Manager) will offer to handle the JDK version upgrade

- Should you prefer, a new installer of Talend Studio 2026-06 with JDK 21 embedded will also be provided

- You will also be free to manually configure Talend Studio to start with JDK 21, provided you are on Talend Studio 2025-09 or higher
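If you plan to configure the JDK manually, it can help to confirm which Java major version a given `java` command reports. The sketch below parses the output of `java -version`; the function names are hypothetical. Talend Studio may be configured to start with a different JDK than the one on your PATH, so treat the result as a hint only.

```python
import re
import subprocess

REQUIRED_MAJOR = 21  # requirement stated in this notice for Talend Studio 2026-06


def java_major_version(version_output: str) -> int:
    """Parse the major version from `java -version` output.

    Handles the modern scheme ("21.0.2") and the legacy one ("1.8.0_292").
    """
    match = re.search(r'version "(\d+)(?:\.(\d+))?', version_output)
    if match is None:
        raise ValueError("could not find a version string")
    major = int(match.group(1))
    if major == 1 and match.group(2) is not None:
        # Legacy numbering: "1.8" means Java 8.
        return int(match.group(2))
    return major


def installed_java_major(java_cmd: str = "java") -> int:
    """Run `java -version` and return the major version it reports."""
    result = subprocess.run(
        [java_cmd, "-version"], capture_output=True, text=True, check=True
    )
    # `java -version` normally writes to stderr.
    return java_major_version(result.stderr or result.stdout)
```

For example, `installed_java_major() >= REQUIRED_MAJOR` indicates whether the default `java` command meets the requirement for launching Talend Studio 2026-06.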

Additional details:

Along with this change, the June release will come with many additions from a Java version perspective:

- Talend Studio will allow building Jobs and Services for Java 21 runtimes, in addition to Java 17 runtimes. Java 17 will remain the default setup for runtimes for the time being

- Remote Engines, Cloud Engines, Dynamic Engines, Job Server, and Talend Runtime will support running Jobs and Services in Java 21, in addition to the Java versions they already support

- Talend Administration Center (TAC), Data Preparation (client-managed), Data Stewardship (client-managed), Talend SAP RFC Server, Talend Semantic Dictionary, and Talend Identity and Access Management will also support running with Java 21 in addition to Java 17

For further questions, please start a chat with us to contact Qlik Support and subscribe to the Support Blog for future updates.

Thank you for choosing Qlik,

Qlik Support

Operations Execution Dashboard

COFCO

The operations team uses it to control the payment requests (PRs) created over time and to see which countries have the most PRs created and how they are distributed.

Discoveries

Allows the team to see where we can grow our business and where we already have a big impact.

Impact

Shows how the business is progressing and where we can grow.

Audience

Used by operations team manager.

Data and advanced analytics

Has helped the operations team to manage how the payment requests are being impacted over time.


Techspert Talks - Building Streaming Data Pipelines with Qlik Open Lakehouse

Hi everyone,

Want to stay a step ahead of important Qlik support issues? Then sign up for our monthly webinar series where you can get first-hand insights from Qlik experts.

Next Thursday, February 12, Qlik will host another Techspert Talks session, and this time we are looking at Building Streaming Data Pipelines with Qlik Open Lakehouse.

But wait, what is it exactly?

Techspert Talks is a free webinar held on a monthly basis, where you can hear directly from Qlik Techsperts on topics that are relevant to Customers and Partners today.

In this session we will cover:

- Streaming live data via Amazon Kinesis

- End-to-End architecture

- Embedded Analytics

Choose the webinar time that's best for you

The webinar is hosted using ON24 in English and will last 30 minutes plus time for Q&A.

Hope to see you there!!

Qlik Add-in now Available for Microsoft Word and PowerPoint

Qlik Cloud users can now create Word and PowerPoint reports in Qlik Cloud.

What does this mean?

Add-ins can be thought of as a sort of extension. In this case, we’re connecting our Qlik Cloud tenant to Microsoft Word and PowerPoint to make generating reports easier. So now, users can connect their tenants and import data from their apps directly into a Word document or a PowerPoint presentation. Word documents can be exported in either .docx or .PDF format, and PowerPoint presentations in either .pptx or .PDF format.

For more information on this, please see the post in What’s New in Qlik Cloud here.

How can I set this up?

To begin setting up these add-ins, we first need to create a new OAuth client. Start by accessing your tenant, then open the waffle menu in the top-left corner and select ‘Administration’. In the ‘Administration’ section, select ‘OAuth’, then click ‘Create new’. Set the ‘Client type’ to ‘single page application’. Name your client accordingly, and then select the ‘user_default’ scope. Other scopes may be selected as well, but ‘user_default’ must be selected or the installation will not work.

For ‘Add redirect URLs’, enter your tenant plus ‘office-add-ins/OAuthLoginSuccess.html’, so it should look like tenant.us.qlikcloud.com/office-add-ins/OAuthLoginSuccess.html. For the ‘Add allowed origins’ field, just add your tenant URL. Then select Create.
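To avoid typos when filling in these two fields, the values can be derived from the tenant hostname. The sketch below is illustrative only: the helper name is hypothetical, and the `https://` scheme is an assumption, since the post shows the URL without a scheme.

```python
# Path taken from this post; everything else is derived from the tenant hostname.
REDIRECT_PATH = "office-add-ins/OAuthLoginSuccess.html"


def oauth_client_urls(tenant_host: str) -> dict:
    """Build the redirect URL and allowed origin for a tenant hostname.

    Accepts either a bare hostname or one prefixed with https://.
    """
    host = tenant_host.strip().removeprefix("https://").rstrip("/")
    origin = f"https://{host}"  # assumed scheme
    return {
        "redirect_url": f"{origin}/{REDIRECT_PATH}",
        "allowed_origin": origin,
    }


if __name__ == "__main__":
    for name, value in oauth_client_urls("tenant.us.qlikcloud.com").items():
        print(f"{name}: {value}")
```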

Now we’ll download our manifest to add to Microsoft. This manifest is basically a handshake between your Qlik Cloud tenant and Word or PowerPoint, allowing Word and PowerPoint access to the tenant. To create this manifest, click the waffle menu again and select ‘Settings’. From Settings, select ‘Email and reports’, then go down to ‘Sharing and reports’. Here you’ll see a dropdown; use it to select the name of your OAuth client and click ‘Download’. This will download a file named ‘manifest.xml’.
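Before uploading, you can check that the downloaded file is well-formed XML. The sketch below parses the file and returns its root element name; the function name is hypothetical. Office add-in XML manifests normally use an `OfficeApp` root element, but the exact content of the Qlik-generated file is not shown in this post, so treat that expectation as an assumption.

```python
import xml.etree.ElementTree as ET


def manifest_root_tag(path) -> str:
    """Parse the manifest file and return its root element name without the namespace.

    Raises xml.etree.ElementTree.ParseError if the file is not well-formed XML.
    """
    root = ET.parse(path).getroot()
    # ElementTree reports namespaced tags as "{namespace}name".
    return root.tag.rsplit("}", 1)[-1]
```

For example, `manifest_root_tag("manifest.xml")` returning `OfficeApp` suggests the file downloaded intact. A `ParseError` points to a truncated or corrupted download.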

Now open either Microsoft PowerPoint or Word. Open a blank document and select ‘Add-ins’, an orange box at the top right-hand corner of your screen. Once that opens, select ‘Advanced’, then ‘Upload My Add-in’. Once that box opens, browse to the location of your manifest and select it. This will finish installing your Add-in.

If you have issues uploading your manifest, help can be found here.

Once your manifest is uploaded, if you receive the error below, this article may assist you.

So now that we have our Add-in set up in our Microsoft app, how can we use them?

Now we can click our Add-in and we’re greeted with this screen:

Here we’re asked to select the space, and the app we’re going to be pulling our information from. Once we’ve finalized where our information is coming from, we’re given this screen:

Our selections from left to right are:

Home: This option allows us to change what the source app is from our tenant and allow us to change our tenant.

With the Table option, I can display the data from any of my visualizations in a chart format. Please note that when assembling this report, the data is not imported into your Word document immediately; it will not show until the report is generated.

With the Charts option, users can import any of the Charts from their app into their report.

The next option, Variables and expressions, allows users to change what is seen in the Word Document Report. Variables are very useful when creating a report template, for example, displaying the correct year when generating the report.

The Levels feature allows users to show a certain level of their data. For example, I could want to show the data for each product group. I could use the sales, margins and cost of each ‘Product Group’ in my application. I could sandwich those metrics between my ‘Product Group’ levels and when the report is generated it would show me those metrics for each ‘Product Group’.
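Conceptually, a level behaves like a loop over the values of a field, with the enclosed metrics calculated once per value. The sketch below illustrates that idea only; it is not how the add-in is implemented. The rows, field names, and the definition of margin as sales minus cost are all hypothetical.

```python
from collections import defaultdict

# Hypothetical rows standing in for app data; field names are illustrative only.
ROWS = [
    {"product_group": "Beverages", "sales": 120.0, "cost": 80.0},
    {"product_group": "Beverages", "sales": 30.0, "cost": 20.0},
    {"product_group": "Snacks", "sales": 50.0, "cost": 35.0},
]


def metrics_by_level(rows, level_field):
    """Total sales and cost per value of level_field and derive a margin."""
    totals = defaultdict(lambda: {"sales": 0.0, "cost": 0.0})
    for row in rows:
        group = totals[row[level_field]]
        group["sales"] += row["sales"]
        group["cost"] += row["cost"]
    # Margin is assumed to be sales minus cost for this illustration.
    return {
        name: {**t, "margin": t["sales"] - t["cost"]}
        for name, t in totals.items()
    }


if __name__ == "__main__":
    for group, metrics in metrics_by_level(ROWS, "product_group").items():
        print(group, metrics)
```

Each entry of the result corresponds to one repetition of the content placed between the level tags in the report template.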

Thank you for taking the time to read this post. If you would like to learn more about using the features of the Qlik Add-in for Word and PowerPoint, a great video series for the Excel version can be found here. How do you think you could use these Add-ins in your work life? Drop your ideas in a comment below.


Connector Factory: October - December 2025 releases

Qlik Analytics

New version of Direct Access gateway

The Qlik Data Gateway - Direct Access allows Qlik Sense SaaS applications to securely access data behind the firewall over a strictly outbound, encrypted, and mutually authenticated connection.

We recently released Direct Access gateway 1.7.8 and 1.7.9 which, in addition to bug fixes, introduced the following enhancements:

- Support for the updated Databricks OAuth authentication options (mentioned in next section)

- Override the default starting port set by process isolation

- Support for the Application Default Credentials OAuth mechanism with Google BigQuery

- Support for metadata commands with process isolation

- Support for Brazil (br) and France (fr) regions

- New metrics collector offers basic logging and monitoring of resource utilization by Direct Access gateway, connectors, and the Operating System.

More authentication options for Databricks connector

Two additional authentication mechanisms are now available with the Databricks connector: Databricks OAuth Service Principal and Databricks OAuth User Account. In addition, authentication with Personal Access Token is now an explicit option and basic authentication (with username) is no longer available as it is no longer supported by Databricks.

New Connector: SAP OData

SAP has restricted which technologies its customers can use to source and extract data from SAP applications. While Qlik will continue to support all existing source endpoints, we have introduced an “SAP compliant” endpoint for customers. This new SAP OData source connector will use a secure web service connection to extract data from SAP applications.

API to enable and disable access to data sources

Qlik Cloud tenant administrators will now be able to update the access to a data source by enabling or disabling the related connectors. Disabling a connector will not only prevent access to the data source but will also hide it within the Qlik Cloud user interface (e.g., Analytics activity center, Data manager, and Data load editor).

This capability is also available in Qlik Cloud Government and Qlik Cloud Government - DoD.

Qlik Automate (Formerly Qlik Application Automation)

Updated Connectors

- Qlik Cloud Services and Qlik Platform Operations

- New automation connection blocks

- Dedicated blocks for the changes-store (write table) API. Learn more

- Microsoft Outlook: Send emails with attachments. This blog describes how you can migrate automations from the Mail connector to this connector.

- Qlik Reporting: Generate HTML reports. Learn more

- Qlik Talend Data Integration: New “List Runtime Dataset States” block

Qlik Talend

New Qlik Talend Cloud connectors

We’re continuing to expand the connectivity of Qlik Talend Cloud with new connectors for FormKeep (form endpoints management) and Zoom (videotelephony). Being able to quickly use these two applications as a data source helps companies eliminate the lengthy development time associated with custom connectors.


Revenue_Reports

Clay_Tech_System

This Qlik app is designed to provide a comprehensive overview of sales performance across multiple dimensions, including region, city, product category, and time. It consolidates key metrics such as total sales, total customers, number of sub-categories, and average unit price, while allowing users to dynamically filter the data by category and region. The visualizations include region-wise sales, quantity and sales by sub-category, city-wise total sales, product-wise units sold, quarterly sales trends, and state-wise sales contributions, offering a clear and interactive view of the business performance. It is primarily used by sales managers, marketing teams, and business analysts to monitor trends, identify top-performing products, and assess regional and city-level performance. By providing actionable insights through an interactive and easily navigable interface, the app enables stakeholders to make informed, data-driven decisions that improve sales strategies, optimize inventory, and enhance overall business performance.

Discoveries

The Qlik app reveals clear differences in sales performance across regions, cities, and product categories, with certain regions and states contributing a significantly higher share of total sales. Major cities emerge as key revenue drivers, while other locations show potential for growth. Product-level analysis indicates that a limited number of high-demand items account for a large portion of total units sold, particularly within electronics and fashion categories. Sales trends show steady growth over recent years, with minor fluctuations in the most recent period that may be influenced by seasonality or market conditions. Overall, the app highlights stable pricing, strong regional concentration, and identifiable top-performing products, enabling stakeholders to focus on high-impact areas while addressing underperforming segments to improve overall business performance.

Impact

This report enables stakeholders to quickly understand sales performance across regions, products, and time through an interactive and unified view. It supports data-driven decisions by identifying high-performing areas, trends, and improvement opportunities. As a result, it helps optimize sales strategies, inventory planning, and overall business efficiency.

Audience

This app is used by sales managers, marketing teams, business analysts, and senior leadership to track and evaluate sales performance across regions, cities, and product categories. It is primarily accessed through Qlik Sense dashboards during daily and weekly performance reviews and is a critical tool for monitoring business health, identifying trends, and supporting strategic decision-making.

Data and advanced analytics

The app has improved the business’s ability to analyze sales data by providing interactive, real-time insights across multiple dimensions. It enables faster identification of high-performing areas and underperforming segments, leading to more effective sales strategies and improved operational efficiency.


Watch! Q&A with Qlik: AI in Qlik Talend Data Integration

Don't miss our previous Q&A with Qlik! Pull up a chair and chat with our panel of experts to help you get the most out of your Qlik experience.


Community Updates & What to Explore Next

Hi Qlik Community,

We hope you’re having a great start to the year so far! As we move further into 2026, we wanted to share a few recent updates, profile enhancements, and learning opportunities now available across the Qlik Community.

Here’s what’s worth checking out.

Refresh Your Community Profile

We’ve rolled out a refreshed set of Community avatars that were designed by Qlik! This will give you more options to personalize your profile.

To explore them, head to Community Settings and navigate to Avatars. You’ll find the updated designs available in the dropdown under “Qlik.”

We’ve also introduced an optional Company Name field under Community Settings → Personal → Personal Information. Adding this can help our Community team better understand which organization you’re aligned with when reviewing activity across the Community. This information is only visible to Community admins and moderators.

Suzy’s Tip: Finding and Updating Your Username

Our latest Suzy’s Tip walks through how to find and change your Community username. You can watch it in the Watercooler forum, and we encourage you to subscribe to the Suzy’s Tip label to stay up to date as more videos are released.

If there’s a question or topic you’d like to see covered in a future Suzy’s Tip, let us know. Your feedback helps shape what we create next.

On-Demand and Upcoming Sessions

Whether you’re catching up on content you may have missed or looking ahead to what’s coming up, here’s a snapshot of the latest on-demand resources and upcoming live sessions you won’t want to miss:

Qlik Data & Analytics Trends 2026: On Demand

If you missed the live experience, the full 2026 Data & Analytics Trends content is now available on demand. Dive into the insights shaping the future of data, analytics, and AI, and explore expert perspectives at your own pace. Check it out here!

Qlik Insider: 2026 Product Roadmap

In this special Qlik Insider webinar, we’ll share a first look at Qlik’s 2026 product roadmaps, highlighting the strategic direction guiding innovation across analytics and data integration.

Join us live:

- AMER: February 18, 2026 - 1:00 PM EST / 10:00 AM PST

- EMEA: February 18, 2026 - 11:00 AM CET / 10:00 AM UK

- APAC: February 24, 2026 - 9:30 AM IST / 12:00 PM SGT / 3:00 PM AEDT

Register for the webinar here!

Q&A with Qlik: AI in Qlik Talend Data Integration

On February 3 at 10:00 AM ET, join Qlik experts for a live Q&A focused on AI in Qlik Talend Cloud Data Integration. Bring your questions and hear creative approaches to common data integration challenges. Join us here!

Building Smart Solutions with Qlik (On Demand)

Explore how Qlik helps teams build smarter, more impactful solutions across analytics and data integration in this on-demand session. Read the on-demand briefing!

Qlik Cloud Kickstart: Start with Qlik Cloud Analytics Effortlessly

On February 6, join this 90-minute session to learn how to quickly find the data you need, save time, and make smarter decisions with Qlik Cloud Analytics. Register here!

Qlik Connect 2026

Time is running out to save on Qlik Connect 2026. Register by January 31 to receive $300 off your pass.

Thank you, as always, for being an active part of the Qlik Community. We appreciate your engagement and look forward to sharing more updates with you soon!

Your Qlik Community Managers,

Sue, Jamie, Caleb, and Brett

@Sue_Macaluso @Jamie_Gregory @Brett_Cunningham

Qlik and Talend Subprocessors - Version 6.9 - 28th January 2026

Version 6.9

Current as of: 28th January 2026

Qlik and Talend, a Qlik company, may from time to time use the following Qlik and Talend group companies and/or third parties (collectively, “Subprocessors”) to process personal data on customers’ behalf (“Customer Personal Data”) for purposes of providing Qlik and/or Talend Cloud, Support Services and/or Consulting Services.

Qlik and Talend have relevant data transfer agreements in place with the Subprocessors (including group companies) to enable the lawful and secure transfer of Customer Personal Data.

You can receive updates to this Subprocessor list by subscribing to this blog or by enabling RSS feed notifications.

Third party subprocessors for Qlik Cloud

| Third Party | Location of processing (e.g., tenant location) | Service Provided/Details of processing | Address of contracting party | Contact |
| --- | --- | --- | --- | --- |
| Amazon Web Services (AWS) | See Qlik Cloud locations at: https://www.qlik.com/us/regions | Qlik Cloud is hosted through AWS | Amazon Web Services, Inc., 410 Terry Avenue North, Seattle, WA 98109-5210, U.S.A. | |
| MongoDB | See Qlik Cloud locations at: https://www.qlik.com/us/regions | Any data inputted into the Notes feature in Qlik Cloud | Mongo DB, Inc., 229 West 43rd St., New York, NY 10036, USA | |

Third party subprocessors for Qlik mobile device apps

| Third Party | Location of processing (e.g., tenant location) | Service Provided/Details of processing | Address of contracting party | Contact |
| --- | --- | --- | --- | --- |
| Google Firebase | United States | Push notifications | Google LLC, 1600 Amphitheatre Parkway, Mountain View, California, United States | |

Third party subprocessors for Talend Cloud

| Third Party | Location of processing (e.g., tenant location) | Service Provided/Details of Processing | Address of contracting party | Contact |
| --- | --- | --- | --- | --- |
| Amazon Web Services (AWS) | See Talend Cloud locations at: https://www.qlik.com/us/regions | These Talend Cloud locations are hosted through AWS | Amazon Web Services, Inc., 410 Terry Avenue North, Seattle, WA 98109-5210, U.S.A. | |
| Microsoft Azure | See Talend Cloud locations at: https://www.qlik.com/us/regions | These Talend Cloud locations are hosted through Microsoft Azure | Microsoft Corporation, 1 Microsoft Way, Redmond, WA 98052, USA | Microsoft Enterprise Service Privacy, Microsoft Corporation, 1 Microsoft Way, Redmond, Washington 98052 USA |
| MongoDB | See Talend Cloud locations at: https://www.qlik.com/us/regions | Any data inputted into the DataPrep and Stewardship modules of Talend Cloud. Hosted in the same region as the customer’s Talend Cloud environment on AWS or Microsoft Azure, as selected by the customer. | Mongo DB, Inc., 229 West 43rd St., New York, NY 10036, USA | |

Third party subprocessors for Qlik and Talend Support Services and/or Consulting Services

The vast majority of Qlik’s support data that it processes on behalf of customers is stored in Germany (AWS). However, in order to resolve and facilitate the support case, such support data may also temporarily reside on the other systems/tools below.

Third Party

Location of processing (e.g., tenant location)

Service Provided/Details of processing

Address of contracting party

Contact

Amazon Web Services

(AWS)

Germany

Support case management tools

Amazon Web Services, Inc.

410 Terry Avenue North, Seattle, WA 98109-5210, U.S.A.

Salesforce

UK

Support case management tools

Salesforce UK Limited

Village 9

Floor 26 Salesforce Tower

110 Bishopsgate

London, UK

EC2N 4AYMicrosoft

United States

Customer may send data through Office 365

Microsoft Corporation

One Microsoft Way,

Redmond, WA

98052

USA

Chief Privacy Officer

One Microsoft Way,

Redmond, WA

98052

USA

Ada

Germany

Support Chatbot

Ada Support

371 Front St W,

Unit 314

Toronto, Ontario

M5V 3S8

CANADA

Persistent

India

R&D Support Services

2055 Laurelwood Road

Suite 201

Santa Clara, California 95054

USA

Atlassian

(Jira Cloud)

Germany, Ireland (Back-up)

R&D support management tool

350 Bush Street

Floor 13

San Francisco, CA 94104

United States

Stretch Qonnect ApS

Denmark

2nd line support for the Qlik Analytics Migration Tool

Kompagnistræde 21

1208 Copenhagen

Denmark

Affiliate Subprocessors for Qlik and Talend

Affiliate Subprocessors

These affiliates may provide services, such as Consulting or Support, depending on your location and agreement(s) with us. Our Support Services are predominantly performed in the customer’s region:

EMEA – France, Sweden, Spain, Israel; Americas – USA; APAC – Japan, Australia, India.

Subsidiary Affiliate

Location of processing (e.g., tenant location)

Service Provided/Details of Processing

Address of contracting party

Contact

QlikTech International AB

Sweden

These affiliates may provide services, such as Consulting or Support, depending on your location and agreement(s) with us. Our Support Services are predominantly performed in the customer’s region: EMEA – France, Sweden, Spain, Israel; Americas – USA; APAC – Japan, Australia, India.

Scheelevägen 26

223 63 Lund

Sweden

QlikTech Nordic AB

Sweden

QlikTech Latam AB

Sweden

QlikTech Denmark ApS

Denmark

Dampfaergevej 27-29, 5th Floor 2100 København Ø Denmark

QlikTech Finland OY

Finland

Simonkatu 6 B 5th Floor FI-00100 Helsingfors Finland

QlikTech France SARL, Talend SAS

France

93 Ave Charles de Gaulle 92200 Neuilly Sur Seine France

QlikTech Iberica SL (Spain)

Spain

"Blue Building", 3rd Floor Avinguda Litoral nº 12-14 08005 Barcelona Spain

QlikTech Iberica SL (Portugal liaison office), Talend Sucursal Em Portugal

Portugal

QlikTech GmbH

Germany

Joseph-Wild-Str. 23 81829 München Germany

QlikTech GmbH (Austria branch)

Austria

Am Euro Platz 2, Gebäude G A-1120, Wien, Austria

QlikTech GmbH (Swiss branch)

Switzerland

c/o Küchler Treuhand

Brünigstrasse 25, CH-6055 Alpnach Dorf

Switzerland

QlikTech Italy S.r.l.

Italy

Piazzale Luigi Cadorna 4 20123 Milano (MI)

QlikTech Netherlands BV

Netherlands

Evert van de Beekstraat 1-122

Building B, 6th Floor

1118 CL Schiphol

QlikTech Netherlands BV (Belgian branch)

Belgium

Culliganlaan 2D

1831 Diegem

Blendr NV

Belgium

Bellevue Tower Bellevue 5, 4th Floor, Ledeberg 9050 Ghent Belgium

QlikTech UK Limited

United Kingdom

1020 Eskdale Road, Winnersh, Wokingham, RG41 5TS United Kingdom

Qlik Analytics (ISR) Ltd.

Israel

1 Atir Yeda St, Building 2 7th floor 4464301, Kfar Saba Israel

QlikTech International Markets AB (DMCC Branch)

United Arab Emirates

AB (DMCC Branch)

JBC 3 Building, Cluster Y

3rd Floor, Office 301

P.O. Box 120115

Jumeirah Lake Towers Dubai

Qlik Business Solutions Company Kingdom of Saudi Arabia

6629 King Abdul Aziz, District King Salman, Riyadh, Kingdom of Saudi Arabia

QlikTech Inc.

United States

211 South Gulph Road Suite 500 King of Prussia, Pennsylvania 19406

QlikTech Corporation (Canada)

Canada

1133 Melville Street Suite 3500, The Stack Vancouver, BC V6E 4E5 Canada

QlikTech México S. de R.L. de C.V.

Mexico

c/o IT&CS International Tax and Consulting Service San Borja 1208 Int. 8 Col. Narvarte Poniente, Alc Benito Juarez 03020 Ciudad de Mexico Mexico

QlikTech Brasil Comercialização de Software Ltda.

Brazil

51 – 2o andar - conjunto 201 Vila Olímpia – São Paulo – SP Brazil

QlikTech Japan K.K.

Japan

105-0001 Tokyo Toranomon Global Square 13F, 1-3-1. Toranomon, Minato-ku, Tokyo, Japan

QlikTech Singapore Pte. Ltd.

Singapore

9 Temasek Boulevard Suntec Tower Two Unit 27-01/03 Singapore 038989

QlikTech Hong Kong Limited

Hong Kong

Unit 19 E Neich Tower 128 Gloucester Road Wanchai, Hong Kong

Qlik Technology (Beijing) Limited Liability Company, Talend China Beijing Technology Co. Ltd.

China

51-52, 26F, Fortune Financial Center, No. 5 Dongsan Huanzhong Road, Chaoyang district, Pekin / Beijing, 100020 China

QlikTech India Private Limited, Talend Data Integration Services Private Limited

India

“Kalyani Solitaire” Ground Floor & First Floor 165/2 Krishna Raju Layout Doraisanipalya Off Bannerghatta Road, JP Nagar, Bangalore 560076

QlikTech Australia Pty Ltd

Australia

McBurney & Partners Level 10 68 Pitt Street Sydney NSW 2000 Australia

QlikTech New Zealand Limited

New Zealand

Kensington Swan 40 Bowen Street Wellington 6011 New Zealand

In addition to the above, other professional service providers may be engaged to provide you with professional services related to the implementation of your particular Qlik and/or Talend offerings; please contact your Qlik account manager or refer to your SOW on whether these apply to your engagement.

Qlik and Talend reserve the right to amend their products and services from time to time. For more information, please see www.qlik.com/us/trust/privacy and/or https://www.talend.com/privacy/.

-

Write Table now available in Qlik Cloud Analytics

Turn insights into action... right inside your analytics!

As we wrap up the year and before everyone starts tying bows on projects, dashboards and wish lists, we wanted to add one more gift to the pile. Something that helps you move from insights to action faster, collaborate more easily, and keep your workflows flowing straight into the new year.

Unwrap Write Table, now available in Qlik Cloud Analytics.

Write Table is included with Premium, Enterprise, and Enterprise SaaS subscriptions. No add-ons or extra licensing required. You can find it in the Chart Library now.

A More Interactive Analytics Experience

Write Table introduces a new editable table chart that lets you update data, add context, validate decisions, and collaborate directly within your analytics apps. No reloads. No switching systems.

Just click, update, and watch your changes sync instantly across active sessions.

And because it’s built natively into Qlik Cloud Analytics, it seamlessly integrates into your existing workflows, transforming your apps into actionable workspaces.

"Probably the most awaited feature for customers who want to turn insights into action. Write table closes the gap between analytics and transaction applications, offering a compelling solution for those who always want to take it a step further. "

— Henri Rufin, Head of Data & Analytics, Radial

From Decisions to Automation

Now you can capture decisions and act on them.

With real-time syncing and change tracking, every update you make in a Write Table is stored in a Qlik-managed database called a “change store” that can be exported and connected into Qlik Automate workflows. That means approvals, changes, or comments made in an app can instantly trigger downstream processes.

From updating operational records... to routing approvals... to notifying teams... to pushing changes into your operational systems....

Write Table helps you move smoothly from insight -> decision -> action in one place.

“The new Write Table is a game-changing addition to Qlik's data and analytics solution. It allows our users to interact with the data, add context to their insights and collaborate effortlessly within the same trusted end-to-end platform. We're going to use it for everything, from updating data easily to seamlessly integrating with third-party tools using Qlik Automate workflows.”

— Sebastian Björkqvist, Solution Lead, Fellowmind

Key Highlights

- Editable table chart for updating values, adding comments, and capturing decisions in the Chart Library

- Real-time sync across active sessions, without reloads

- Qlik-managed change store for secure update handling

- Integration with Qlik Automate to trigger downstream workflows and orchestrate actions

- Native to Qlik Cloud Analytics, included for Premium, Enterprise and Enterprise SaaS subscriptions

Learn more

- Write Table Product Tour

- What’s New in Qlik Cloud | Qlik Help

- Write Table | Qlik Help

- Write Table - SaaS in 60 | Video

- Write Table FAQ | Support Article

-

Qlik Sense November 2025 (Client-Managed) now available!

Key Highlights

- Refreshed App Settings Design

The app-settings area has been refurbished with a more modern layout and tabbed navigation between categories of settings.

Why this matters:

- Saves time when configuring apps

- Helps with onboarding and reducing configuration errors

- Improvements to the Sheet Editing Experience

Several usability upgrades have landed in the sheet-edit experience:

- The source table viewer and filters now appear directly from the sheet edit view, so you can see data tables and fields in-context.

- Filters have been added to the properties panel, making building and applying filters to visualizations faster.

Why this matters:

- Makes authoring apps more intuitive and reduces back-and-forth between data manager and sheet view

- Helps streamline development, speeding time to insight

- New Straight Table — Now the Default

The new straight table object has graduated and is now included under the chart section as the default table visual. The older table object remains accessible in the asset panel for now and its eventual deprecation will be announced well in advance.

Why this matters:

- The new straight table has enhanced usability (such as improved selection, sorting, performance)

- Encourages migration to the newer, better-supported table, ahead of eventual deprecation

- New Visual Enhancements & Chart Capabilities

Some powerful visual upgrades arrived in this release:

- Indent row setting in the new pivot table: For the new pivot table object, you can now choose “indent row dimensions” for a more compact view (ideal for text-heavy tables)

- Pivot table indicators: The new pivot table also supports icons and colors based on thresholds, enabling quick visual cues on measures.

- Shapes in bar and combo charts: Building on the success of shapes in line charts, you can now add shapes to bar and combo charts, enriching context and visual data literacy.

- Line chart shapes improved: For line charts, labels and symbols (size, color, placement) are now available in the shape options.

- Org chart now supports images & new styling: The org-chart object can now include an image via URL and enhanced styling settings making hierarchy charts look sharper.

- Map chart stability enhancements: Map charts now use a local webmap rather than one from Qlik’s servers resulting in faster load times and improved stability.

Why this matters:

- These visual enhancements offer more expressive, compelling dashboards and reduce friction for authors

- Stability enhancements (e.g., for maps) reduce risk of user-experience issues in production

- Removal of Deprecated Objects

With this release, we are updating our announcement from our Qlik Sense May 2025 release regarding the roadmap for removing deprecated visualization objects. The following deprecated charts are now scheduled to be removed from the Qlik Analytics distribution in May 2027.

- Bar & area

- Bullet chart (old one)

- Heatmap chart

- Button for navigation

- Share button

- Show/hide container

- Container (old one)

In Closing

The November 2025 release for Qlik Sense Enterprise on Windows delivers meaningful improvements across usability, visualizations, and stability. Whether you're an analytics author, a business user, or an administrator/architect, there are advantages to be gained with this release.

As always, we recommend reviewing the full “What’s New” documentation and aligning your upgrade and adoption strategy accordingly.

-

Qlik Replicate and an update on Salesforce “Use Any API Client” Permission Depre...

Dear Qlik Replicate customers,

Salesforce announced (October 31st, 2025) that it is postponing the deprecation of the Use Any API Client user permission. See Deprecating "Use Any API Client" User Permission for details.

Qlik will keep the OAuth plans on the roadmap and deliver them in line with Salesforce's updated timeline.

Salesforce has announced the deprecation of the Use Any API Client user permission. For details, see Deprecating "Use Any API Client" User Permission | help.salesforce.com.

We understand that this is a security-related change, and Qlik is actively addressing it by developing Qlik Replicate support for OAuth Authentication. This work is a top priority for our team at present.

If you are affected by this change and have activated access policies relying on this permission, we recommend reaching out to Salesforce to request an extension. We are aware that some customers have successfully obtained an additional month of access.

By the end of this extension period, we expect to have an alternative solution in place using OAuth.

Customers using the Qlik Replicate tool to read data from the Salesforce source should be aware of this change.

Thank you for your understanding and cooperation as we work to ensure a smooth transition.

If you have any questions, we're happy to assist. Reply to this blog post or take your queries to our Support Chat.

Thank you for choosing Qlik,

Qlik Support

-

Announcing the 2026 class of Qlik Academic Program Educator Ambassadors!

The Qlik Academic Program is proud to announce our 2026 class of Educator Ambassadors.

Academic Program Ambassadors are educators who champion the Qlik Academic Program at their universities and beyond, with a passion for preparing students for the data-driven workplace. These individuals are some of the most active participants in the Qlik Academic Program, and they make full use of the free software, training resources and qualifications that we provide to university students and educators. The members of our 2026 class are:

Marcin Stawarz

Blerim Emruli

Dr. Javier Leon

Dr. K. Kalaiselvi

Katherine Taylor Pearson

Angelika Klidas

Dr. Terrence Perera

Chee-wai Ho

Dr Ravi Aavula

Alexander Flaig

Gabriel Navassi

Daniel O'Leary

Angel Monjarás

Marisa Sánchez

Priscila de Jesus Papazissis Paolinelli

Manikant Roy

Meet the Qlik Academic Program Professor Ambassadors for 2026

We are thrilled to be recognizing the efforts of these individuals to help the Qlik Academic Program to achieve its mission - to create a data literate world, one student at a time. Each ambassador has been selected through a self-nominated application process, where they were required to answer various questions covering their motivations for becoming an ambassador, and to evidence their passion for upskilling their students in analytics over the past 12 months. This year, we are excited to select another 16 ambassadors, 3 new ones and 13 returning ambassadors whose efforts continued to impress us. By way of thanks for their efforts our ambassadors will receive exclusive benefits such as webinars and discussion groups with Qlik leaders, opportunities to showcase their experience with the Qlik Academic Program and the chance to grow their network with other educators across various fields and geographies.

Throughout 2026 our ambassadors will continue their advocacy for the Qlik Academic Program and help us to reach even more students and educators with our free resources. Stay tuned over the coming months for more in-depth profiles on each of our ambassadors, and get to know who they are, what they teach and why they are so passionate about bridging the data literacy skills gap!

Learn more about the program and how to apply for future classes.

-

Decommissioning the legacy support portal (support.qlik.com) January 23rd, 2026

The legacy Qlik Support portal (previously known as support.qlik.com) was decommissioned on the 23rd of January, 2026.

How will this affect me?

You will be largely unaffected. Access to our existing and up-to-date portal is not impacted by this change, meaning chat, knowledge, and your support cases remain exactly where you expect them to.

Did you know? We're launching additional My Account features for customerportal.qlik.com. Read more here in Your Qlik Customer Portal My Account features.

I used to access my licenses on the old portal. Where do I go now?

If you previously accessed your legacy (perpetual) Qlik licenses and control numbers on support.qlik.com, your next stop is our Support Chat. A live agent will share the license, control number, and LEF with you on request.

Thank you for choosing Qlik,

Qlik Support

-

How Qlik Academic Program is shaping careers in Analytics

In today’s job market, data literacy and analytics skills are among the most sought-after capabilities employers look for. To help prepare students for meaningful careers in analytics, business intelligence, and data science, Qlik’s Academic Program is making waves in universities across India and the Asia Pacific, providing students with hands-on experience, real tools, and industry-recognized qualifications that boost employability and spark innovation.

The Qlik Academic Program gives students and educators free access to Qlik’s powerful analytics platform, Qlik Sense, along with a suite of learning resources, training modules, and qualifications — all at no cost. This means students can learn real analytics skills long before entering the workforce.

Whether you’re studying business, computer science, engineering, or humanities, you can use Qlik to:

- Analyze real data and build interactive visualizations

- Understand patterns and trends that drive decision-making

- Apply analytics to real business challenges, just like professionals do

This practical, hands-on experience helps students not just learn theory, but do analytics. Instead of just reading about data visualization or BI dashboards, they build them. That’s a major differentiator when employers review resumes.

One of the most powerful aspects of the program is the ability for students to earn qualifications and digital badges, such as Qlik Sense Business Analyst and Qlik Sense Data Architect, that they can display on their CVs and LinkedIn profiles. These credentials signal to recruiters that the student can effectively use analytics tools that many corporations rely on.

Case studies from institutions in India show how Qlik has enhanced employability. For example, at Christ University, analytics graduates with Qlik skills have seen high job placement rates and report that their hands-on Qlik experience helped them stand out in interviews and secure roles in analytics and BI.

The reach of the Qlik Academic Program continues to expand. In India alone, more than 1,100 universities and institutions are now part of the initiative, including leading IITs, IIMs, and top MBA programs.

Institutions like Kristu Jayanti University have embedded Qlik into their core curriculum, allowing students to earn academic credit while mastering analytics skills — a huge step toward integrating practical analytics into mainstream education.

It’s not just students who benefit. Educators receive ready-to-teach content, exercises, sample datasets, and guided teaching materials that make it easier to bring analytics into the classroom. This support helps universities continuously upgrade their curricula to reflect evolving industry needs.

As industries increasingly rely on data-driven decision-making, businesses everywhere — from startups to global corporations — seek professionals who can interpret and act on data insights. With programs like Qlik’s Academic initiative, students are graduating not just with academic knowledge, but with practical analytics skills that employers value.

Whether students want to become data analysts, BI specialists, or business leaders with strong analytical acumen, tools like Qlik provide the foundation they need to launch and grow their careers in the competitive world of data analytics.

If you wish to know more about the Qlik Academic Program and how it could benefit you, please visit: https://qlik.com/academicprogram

-