Search our knowledge base, curated by global Support, for answers ranging from account questions to troubleshooting error messages.

Featured Content

-

How to contact Qlik Support

Qlik offers a wide range of channels to assist you in troubleshooting, answering frequently asked questions, and getting in touch with our technical experts. In this article, we guide you through all available avenues to secure your best possible experience.

For details on our terms and conditions, review the Qlik Support Policy.

Index:

- Support and Professional Services; who to contact when.

- Qlik Support: How to access the support you need

- 1. Qlik Community, Forums & Knowledge Base

- The Knowledge Base

- Blogs

- Our Support programs:

- The Qlik Forums

- Ideation

- How to create a Qlik ID

- 2. Chat

- 3. Qlik Support Case Portal

- Escalate a Support Case

- Phone Numbers

- Resources

Support and Professional Services; who to contact when.

We're happy to help! Here's a breakdown of resources for each type of need.

Support: Reactively fixes technical issues as well as answers narrowly defined specific questions. Handles administrative issues to keep the product up-to-date and functioning.

- Error messages

- Task crashes

- Latency issues (due to errors or 1-1 mode)

- Performance degradation without config changes

- Specific questions

- Licensing requests

- Bug Report / Hotfixes

- Not functioning as designed or documented

- Software regression

Professional Services (*): Proactively accelerates projects, reduces risk, and achieves optimal configurations. Delivers expert help for training, planning, implementation, and performance improvement.

- Deployment Implementation

- Setting up new endpoints

- Performance Tuning

- Architecture design or optimization

- Automation

- Customization

- Environment Migration

- Health Check

- New functionality walkthrough

- Realtime upgrade assistance

(*) reach out to your Account Manager or Customer Success Manager

Qlik Support: How to access the support you need

1. Qlik Community, Forums & Knowledge Base

Your first line of support: https://community.qlik.com/

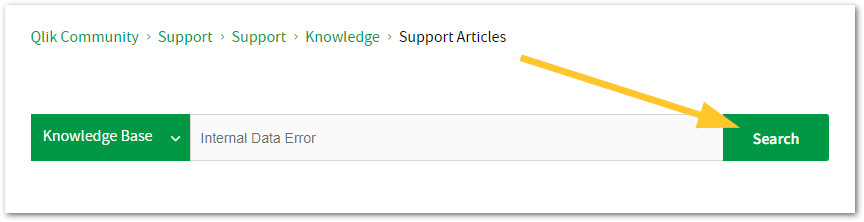

Looking for content? Type your question into our global search bar:

The Knowledge Base

Leverage the enhanced and continuously updated Knowledge Base to find solutions to your questions and best practice guides. Bookmark this page for quick access!

- Go to the Official Support Articles Knowledge base

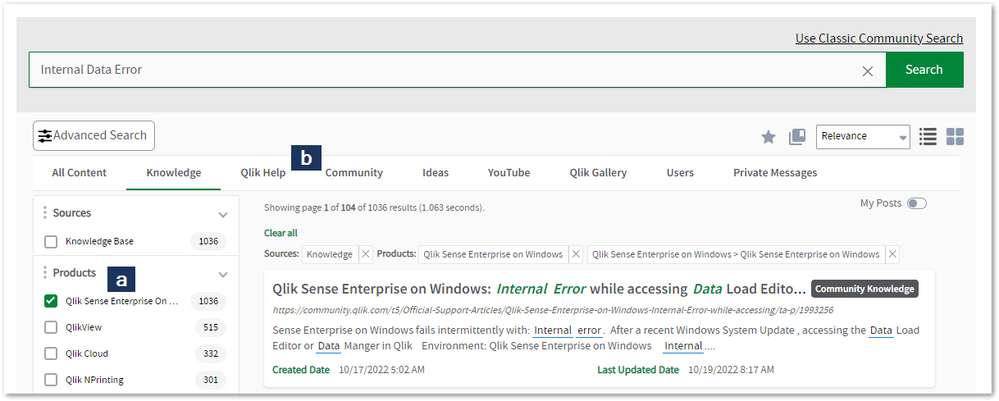

- Type your question into our Search Engine

- Need more filters?

- Filter by Product

- Or switch tabs to browse content in the global community, on our Help Site, or even on our Youtube channel

Blogs

Subscribe to maximize your Qlik experience!

The Support Updates Blog

The Support Updates blog delivers important and useful Qlik Support information about end-of-product support, new service releases, and general support topics.

The Qlik Design Blog

The Design blog is all about product and Qlik solutions, such as scripting, data modelling, visual design, extensions, best practices, and more!

The Product Innovation Blog

By reading the Product Innovation blog, you will learn about what's new across all of the products in our growing Qlik product portfolio.

Our Support programs:

Q&A with Qlik

Live sessions with Qlik Experts in which we focus on your questions.

Techspert Talks

Techspert Talks is a free monthly webinar that facilitates knowledge sharing.

Technical Adoption Workshops

Our in-depth, hands-on workshops allow new Qlik Cloud Admins to build alongside Qlik Experts.

Qlik Fix

Qlik Fix is a series of short videos with helpful solutions for Qlik customers and partners.

The Qlik Forums

- Quick, convenient, 24/7 availability

- Monitored by Qlik Experts

- New releases publicly announced within Qlik Community forums

- Local language groups available

Ideation

Suggest an idea, and influence the next generation of Qlik features!

Search & Submit Ideas

Ideation Guidelines

How to create a Qlik ID

Get the full value of the community.

Register a Qlik ID:

- Go to: qlikid.qlik.com/register

- You must enter your company name exactly as it appears on your license or there will be significant delays in getting access.

- You will receive a system-generated email with an activation link for your new account. NOTE, this link will expire after 24 hours.

If you need additional details, see: Additional guidance on registering for a Qlik account

If you encounter problems with your Qlik ID, contact us through Live Chat!

2. Chat

Incidents are supported through our Chat, by clicking Chat Now on any Support Page across Qlik Community.

To raise a new issue, all you need to do is chat with us. With this, we can:

- Answer common questions instantly through our chatbot

- Have a live agent troubleshoot in real time

- Create a case on your behalf, using step-by-step intake questions, for items that need further investigation

3. Qlik Support Case Portal

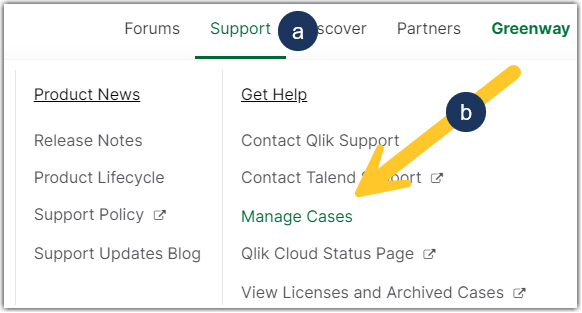

Log in to manage and track your active cases in Manage Cases.

Please note: to create a new case, it is easiest to do so via our chat (see above). Our chat will log your case through a series of guided intake questions.

Your advantages:

- Self-service access to all incidents so that you can track progress

- Option to upload documentation and troubleshooting files

- Option to include additional stakeholders and watchers to view active cases

- Follow-up conversations

When creating a case, you will be prompted to enter problem type and issue level. Definitions shared below:

Problem Type

Select Account Related for issues with your account, licenses, downloads, or payment.

Select Product Related for technical issues with Qlik products and platforms.

Priority

If your issue is account related, you will be asked to select a Priority level:

Select Medium/Low if the system is accessible, but there are some functional limitations that are not critical in the daily operation.

Select High if there are significant impacts on normal work or performance.

Select Urgent if there are major impacts on business-critical work or performance.

Severity

If your issue is product related, you will be asked to select a Severity level:

Severity 1: Qlik production software is down or not available, but not because of scheduled maintenance and/or upgrades.

Severity 2: Major functionality is not working in accordance with the technical specifications in documentation or significant performance degradation is experienced so that critical business operations cannot be performed.

Severity 3: Any error that is not a Severity 1 or Severity 2 error.

For more information, visit our Qlik Support Policy.
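The severity definitions above amount to a short decision rule. The following Python sketch shows one possible reading of them. The `Incident` class and its field names are hypothetical and are not part of any Qlik tool; the Support Policy remains the authoritative definition.

```python
from dataclasses import dataclass


@dataclass
class Incident:
    """Facts about a product-related issue (hypothetical field names)."""
    production_down: bool = False
    scheduled_maintenance: bool = False
    major_function_broken: bool = False
    critical_operations_blocked: bool = False


def severity(incident: Incident) -> int:
    """Return 1, 2, or 3 following one reading of the definitions above."""
    # Severity 1: production is down, and not because of planned
    # maintenance or upgrades.
    if incident.production_down and not incident.scheduled_maintenance:
        return 1
    # Severity 2: major functionality deviates from the documentation, or
    # performance is degraded so far that critical work cannot be done.
    if incident.major_function_broken or incident.critical_operations_blocked:
        return 2
    # Severity 3: everything that is neither Severity 1 nor Severity 2.
    return 3
```

For example, `severity(Incident(production_down=True))` evaluates to 1 under these assumptions, while an incident with no flags set falls through to 3.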

Escalate a Support Case

If you require a support case escalation, you have two options:

- Request to escalate within the case, mentioning the business reasons.

To escalate a support incident successfully, mention your intention to escalate in the open support case. This will begin the escalation process.

- Contact your Regional Support Manager

If more attention is required, contact your regional support manager. You can find a full list of regional support managers in the How to escalate a support case article.

Phone Numbers

When other Support Channels are down for maintenance, please contact us via phone for high severity production-down concerns.

- Qlik Data Analytics: 877-754-5843

- Qlik Data Integration: 781-730-4060

- Talend AMER Region: 1-800-810-3065

- Talend UK Region: 44 800-098-8473

- Talend APAC Region: 65-3163-2072

Resources

A collection of useful links.

Qlik Cloud Status Page

Keep up to date with Qlik Cloud's status.

Support Policy

Review our Service Level Agreements and License Agreements.

Live Chat and Case Portal

Your one stop to contact us.

Recent Documents

-

How to BackUp and Restore Qlik NPrinting Repository Database from End to End

! Note: Do NOT modify the NPrinting Database for any reason using PG Admin, Postgres queries, or any other execution tools, as this will damage your NPrinting Deployment and prevent successful NPrinting Database backup and restore operations.

! Note: Do NOT restore an older version of an NPrinting Database to a newer version of NPrinting Server, or restore a newer version of the NPrinting Database to an older version of NPrinting Server.

Examples:

- NP 21.0.0.0 to NP 21.0.0.0: OK

- NP 20.29.7.0 to NP 20.39.6.0: NOT OK. This will compromise your deployment of NPrinting

- NP 20.39.6.0 to NP 20.29.7.0: NOT OK. This will compromise your deployment of NPrinting

These rules apply to general releases and service releases: The point version of the NPrinting Database being restored must match the point version of NPrinting Server being restored to (see Backup and restore Qlik NPrinting).
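The matching rule above can be expressed as a simple equality check on the dotted version numbers. This is a minimal Python sketch; the function names are hypothetical, and how the two version strings are obtained is left to the reader.

```python
from typing import Tuple


def parse_version(text: str) -> Tuple[int, ...]:
    """Turn a dotted version such as '21.0.0.0' into a tuple of integers."""
    return tuple(int(part) for part in text.strip().split("."))


def restore_allowed(database_version: str, server_version: str) -> bool:
    """Allow a restore only when both versions are identical."""
    return parse_version(database_version) == parse_version(server_version)
```

With the examples listed above, `restore_allowed("21.0.0.0", "21.0.0.0")` is true, while both combinations of 20.29.7.0 and 20.39.6.0 are rejected.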

- How to Identify an incorrectly/unnecessarily restored database: Identify NPrinting Server restored with incorrect NPrinting database version

! Note: From NPrinting February 2020 and later versions, it is NOT necessary to enter a superuser database password

! Note: If you are making a backup for the Qlik Support team, please add the following NPrinting user information so that we can log on to the NPrinting Web Console following the local restore of the database. Also ensure that NPrinting Authentication is enabled: go to Admin > Settings > Authentication.

user: npadmin@qlik.com

password: npadmin@qlik.com

NP Role: Administrator

This procedure is meant to back up and restore the following items (partial backup and restore of these individual items is not possible*):

NP Web Console Items:

- NPrinting apps

- Tasks

- Connections

- Filters

- Schedules

- *Reports (these can be exported and imported separately, but only between identical versions of NPrinting. See the Related Content section link below)

- Conditions and most settings applied to an existing system

NP Backup zip File Contents (do NOT open and modify the contents of this file):

- A dump of the NPrinting database repository

- All files used by report templates

- All files related to reports published on the NewsStand

- All files related to On Demand reports

- The backup file size can be 'roughly' estimated by enumerating the size of the folder "c:\programdata\nprinting\apps"
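The rough size estimate mentioned in the last item can be obtained by summing the sizes of all files under the apps folder. A minimal Python sketch follows; the function name is hypothetical, and the result is only an approximation of the final backup size.

```python
import os


def folder_size_bytes(root: str) -> int:
    """Sum the sizes of all files below root, in bytes."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                total += os.path.getsize(path)
            except OSError:
                # Skip files that vanish or cannot be read while scanning.
                pass
    return total
```

On the NPrinting server, this could be called as `folder_size_bytes(r"c:\programdata\nprinting\apps")`. A folder that does not exist simply yields 0.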

NOTE: Settings that are not backed up or restored are documented below.

Resolution:

NOTE:

- Do not unzip the backup file (np_backup.zip), simply copy it to

c:\nprintingbackups

Before proceeding: Log on as the NPrinting service account used to run the NPrinting Web Engine and Scheduler services.

- The video below makes no mention of the Qlik NPrinting License Service which was included on newer versions. It also needs to be stopped as documented below.

- If restoring to a different NPrinting server environment, see step 7 below for additional consideration not covered in the video below.

1. Stop only the following Qlik NPrinting services (do NOT stop any other services)

Open the Windows Service Manager (services.msc), and stop the following services (by right-clicking them, and then clicking Stop). This will ensure any manual or scheduled NPrinting Publish Tasks are not executed during the backup or restore process:

- Qlik NPrinting Engine Service (NOTE: Stop all NP Engines connected to your NP server before proceeding)

- Qlik NPrinting Scheduler Service

- Qlik NPrinting WebEngine Service

2. Create a folder for the backup in:

C:\NPrintingBackups

3. Open Command prompt and change directory path

Do NOT modify any syntax or add any additional unnecessary spaces

Open the command prompt, making sure to run cmd.exe as Administrator, and change directory as follows:

cd C:\Program Files\NPrintingServer\Tools\Manager

4. Backup

Copy and paste the following backup syntax to the command prompt console. Replace YourSuperuserDBpasswordHere with the superuser database password that you set when first installing NPrinting.

Qlik.Nprinting.Manager.exe backup -f C:\NPrintingBackups\NP_Backup.zip -p "C:\Program Files\NPrintingServer\pgsql\bin" --pg-password YourSuperuserDBpasswordHere

or, with currently supported versions of NPrinting (no password required):

Qlik.Nprinting.Manager.exe backup -f C:\NPrintingBackups\NP_Backup.zip -p "C:\Program Files\NPrintingServer\pgsql\bin"

5. Restore

Copy and paste the following restore syntax to the command prompt console. Replace YourSuperuserDBpasswordHere with the superuser database password that you set when first installing NPrinting.

Qlik.Nprinting.Manager.exe restore -f C:\NPrintingBackups\NP_Backup.zip -p "C:\Program Files\NPrintingServer\pgsql\bin" --pg-password YourSuperuserDBpasswordHere

or, with currently supported versions of NPrinting (no password required):

Qlik.Nprinting.Manager.exe restore -f C:\NPrintingBackups\NP_Backup.zip -p "C:\Program Files\NPrintingServer\pgsql\bin"

Keep in mind: You may safely ignore restore 'error' messages of the following kind found in the nprinting_manager.log file:

File C:\Users\domainuser\AppData\Local\Temp\2\nprintingrestore_20201203082300\files\xxxxxxxxxxxxxxxxxxxxxxxxxxxx does not exist in the source backup package.

- These are references to NPrinting Apps/Connections/Reports that once existed and have been subsequently deleted from the NPrinting Web Console.

- For example, A report/connection/NP App may have been deemed retired, then manually deleted by a user with appropriate NP role permissions since it no longer served any specific purpose.
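When reviewing nprinting_manager.log after a restore, it can help to separate the ignorable messages described above from everything else. The Python sketch below matches only on the message text quoted in this article. The function names are hypothetical, and the overall log line format is not assumed.

```python
from typing import Iterable

# Message text quoted in this article for references to deleted items.
IGNORABLE_TEXT = "does not exist in the source backup package"


def is_ignorable_restore_message(line: str) -> bool:
    """True when the line carries the 'missing file' message quoted above."""
    return IGNORABLE_TEXT in line


def count_ignorable(lines: Iterable[str]) -> int:
    """Count the ignorable messages in a sequence of log lines."""
    return sum(1 for line in lines if is_ignorable_restore_message(line))
```

Any line for which `is_ignorable_restore_message` returns false still deserves a normal review.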

6. Start all NPrinting server services that were stopped earlier.
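For environments where the backup or restore in steps 4 and 5 is scripted, the command line can be assembled programmatically so that the syntax is never retyped by hand. This is a minimal Python sketch that uses only the sub-commands and options shown above. The function name is hypothetical, and the paths are the default locations quoted in this article, which may differ on your server.

```python
from typing import List, Optional

# Default locations taken from the steps above; adjust if installed elsewhere.
MANAGER_EXE = r"C:\Program Files\NPrintingServer\Tools\Manager\Qlik.Nprinting.Manager.exe"
PG_BIN = r"C:\Program Files\NPrintingServer\pgsql\bin"


def manager_args(action: str,
                 backup_zip: str,
                 pg_bin: str = PG_BIN,
                 pg_password: Optional[str] = None) -> List[str]:
    """Build the argument list for a 'backup' or 'restore' run."""
    if action not in ("backup", "restore"):
        raise ValueError("action must be 'backup' or 'restore'")
    args = [MANAGER_EXE, action, "-f", backup_zip, "-p", pg_bin]
    # Only older versions need the superuser database password.
    if pg_password is not None:
        args += ["--pg-password", pg_password]
    return args
```

The resulting list can be passed to `subprocess.run(args, check=True)` from an elevated session on the NPrinting server, after the services listed in step 1 have been stopped. Passing the arguments as a list avoids quoting problems with the spaces in the paths.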

Restoring to a Different Environment

! Note: If re-installing on existing or restoring to a different NPrinting server environment, ensure that the destination NPrinting server license is enabled/activated before restoring the NP database.

If restoring to a different NPrinting server environment (with the same NP database version as the NP server version that you are restoring to), note that once the restore to the target server is complete, you will need to update the following applicable settings in the NP Web Console on the target server:

NPrinting Engine:

- Sept 2019 and earlier NPrinting versions: Update the NP Engine in the Engine Manager via the NPrinting Web Console. Add the name of the new NP Engine Computer name here and disable or delete the original NP Engine entry.

- November 2019 and later NPrinting versions: Follow the Resend Certificate section of the article New Engine Certificate Installation Process and Resolving NP Engine Offline to resend the NPrinting Engine certificates.

NP Connections:

- QlikView NP Connection paths if local QVW or QVP connection paths have changed

- Sense connections: if the Sense IDs, Proxy address and NP Identity (NP service account used on the target NP server) have changed at the time of the NP database restore

Qlik Sense Certificates (if using NPrinting Qlik Sense connections)

- April 2019 and earlier NPrinting versions: you will need to re-import the Qlik Sense certificates to the new NPrinting server

- June 2019 and later NPrinting versions: you may simply copy the previously exported client.pfx file to the following folder and restart all NPrinting server services

C:\Program Files\NPrintingServer\Settings\SenseCertificates

- How to Connect NPrinting to Single or Multiple Qlik Sense servers (NP June 2019 and later versions only)

- NPrinting May 2023 and Newer: If working exclusively with May 2023 and later NPrinting versions, use this certificates help page: https://help.qlik.com/en-US/nprinting/Content/NPrinting/DeployingQVNprinting/NPrinting-with-Sense.htm

Related Content:

Other helpful information about the NP Backup and Restore tool and process:

- NPrinting manual backups create the following (this is also true for the programmatic backups performed during the NPrinting upgrade process):

- a temp file and folder backup that uses up C: drive space

- This temp backup folder is removed once the manual backup is completed

- Note: The temp folder backup location cannot be modified

- NPrinting performs three automated backups of the NPrinting Database during the upgrade process only. The C: drive where NPrinting Server is installed must have sufficient space to allow the upgrade process to proceed without failure. The upgrade process creates:

- a pre-upgrade backup of the NP database

- a post upgrade backup (upgraded version of the NP database).

- a temp file and folder backup (which is removed once the upgrade is completed)

- The pre and post upgrade backup files can be found here:

Note: The pre and post upgrade backup files are appended with the NP version number and backup date

C:\ProgramData\NPrinting

- Backup and restore log history is found here:

C:\ProgramData\nprinting\logs\nprinting_manager.log

- The automated NP backup contains the same file and folder structure as the manual NP backup process (these files and folders must NOT be modified).

- The NP backup/restore tools and process cannot be used to backup and/or restore individual NPrinting reports.

- To back up/export or restore/import individual reports, please visit Moving reports between environments

*NOTE:

- This article applies to Supported Versions of NPrinting only

Online Help Tutorial Reference Sources (choose the applicable version):

- NPrinting Server: Disk space optimization

- Qlik NPrinting Upgrade Install from NPrinting 17.3.x or higher

- Exporting and installing Qlik Sense certificates

- Backup and restore Qlik NPrinting

- Backing up the Qlik NPrinting audit trail

- Restoring Qlik NPrinting audit trail data from a backup

- Identify wrong NPrinting database version restored to NPrinting Server

-

Required Basic Information For Qlik Support Cases

Are you looking to contact Support? See How to contact Support for details.

All reported Support cases must include basic information and details to enable efficient analysis and investigation by Qlik Support. In most cases, the relevant details can be gathered by answering the Five Ws and How (a.k.a. Six Ws) below. Additionally, supporting material that matches the provided details will expedite problem-solving and help identify a solution in good time.

Each question should have a factual answer: the facts a report needs in order to be considered complete. Importantly, none of these questions can be answered with a simple "yes" or "no".

What is the issue about?

- Symptom

- Products

- Error message

Who is affected by the issue?

- The end-customer

- License

- Support entitlement

- Single end-user

- Group of users

Where have you seen the issue?

- Product(s)

- Qlik Service

- Client

- Device

- Environment

When did (or does) the issue occur?

- Last working

- Issue occurred

- Frequency

- Occurrence pattern

How did you try solving the issue?

Why does the issue occur?

- Root cause identified

- Known issue

- Workaround

Supporting Material

- Log files

- Screenshots

- Sample files

- Data sources

- Qlik applications

- Deployment overview
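The completeness rule above (every question answered with facts, never with a bare "yes" or "no") can be checked before a case is submitted. The Python sketch below illustrates this. The dictionary keys and the function name are hypothetical and do not correspond to fields in the Qlik case portal.

```python
from typing import Dict, List

# The six questions from this article, used here as hypothetical keys.
REQUIRED_QUESTIONS = ("what", "who", "where", "when", "how", "why")


def incomplete_answers(report: Dict[str, str]) -> List[str]:
    """Return the questions that are unanswered or answered with yes/no."""
    problems = []
    for question in REQUIRED_QUESTIONS:
        answer = report.get(question, "").strip()
        # A bare yes/no is not a factual answer.
        if not answer or answer.lower().rstrip(".!") in ("yes", "no"):
            problems.append(question)
    return problems
```

An empty result means each of the six questions has some factual text. It does not judge the quality of that text or the attached supporting material.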

Reference:

https://en.wikipedia.org/wiki/Five_Ws

-

How to Assign Users to Talend Academy Platform

Introduction

Assigning users to the Talend Academy Platform involves clear communication and necessary documentation. For Customer Domain Administ...

-

How to Change Admin User in Talend Academy

Introduction

Changing the Admin User in Talend Academy requires following specific steps and ensuring accurate details are provided.

For Customer Domain Administrators needing assistance, please refer to the templates provided below.

If encountering difficulties, use the support template to contact us for assistance.

Email Template for Contacting Customer Domain Administrators

Subject: Request to Change Admin User in Talend Academy

Dear Customer Domain Administrator,

I hope this message finds you well.

I am writing to request a change in the Admin User for our organization's Talend Academy account. Below are the necessary details:

- Current Admin User Email ID: [Insert Current Admin User Email]

- New Admin User Email ID: [Insert New Admin User Email]

- Reason for Change: [Briefly explain your request]

- Attached Document: Please refer to the attached document for detailed instructions.

Note: Please refer to the document attached to check if you have the authority to make the requested changes. If you are unable to proceed, kindly contact Talend Support for further assistance.

Your prompt assistance in changing the Admin User would be greatly appreciated.

Thank you for your support and consideration.

Best regards,

[Your Name]

Email Template for Contacting Support

Subject: Request to Change Admin User in Talend Academy

Dear Academy Team,

I hope this message finds you well.

I am writing to request a change in the Admin User for our organization's Talend Academy account. Below are the necessary details:

- Current Admin User Email ID: [Insert Current Admin User Email]

- New Admin User Email ID: [Insert New Admin User Email]

- Reason for Change: [Briefly explain your request]

- Attached Document: Please refer to the attached document for detailed instructions.

Your prompt assistance in changing the Admin User would be greatly appreciated.

Thank you for your support and consideration.

Best regards,

[Your Name]

Important Notes

- Ensure all details provided are accurate and complete before sending your request.

- Use the appropriate email address: customercare@qlik.com.

- Adhere to Qlik community guidelines when submitting requests.

- If you are a Customer Domain Administrator and encounter difficulties, please refer to the attached document for detailed instructions on changing the Admin User in Talend Academy.

-

How to Request Access to the Talend Support Portal

Introduction

If you need to request access to the Talend Support Portal, follow these steps to ensure a smooth process and provide the necessary ...

-

How to Assign a Talend Course to a User (Talend Academy)

Introduction

Assigning a Talend course to a user involves specific steps to ensure the process is carried out accurately. For Customer Domain Administrators needing assistance, please refer to the templates provided below. If you encounter any challenges, utilize the support template to contact us for further assistance.

Template for Customer Domain Administrators

Subject: Request to Assign Talend Course to User

Dear Customer Domain Administrator,

I hope this message finds you well.

I am writing to request the assignment of a Talend course to a user within our organization. Below are the necessary details:

- User Email ID: [Insert User Email]

- Course Name: [Insert Course Name]

- Reason for Assignment: [Briefly explain your request]

- Attached Document: Please refer to the attached document for detailed instructions.

Note: Please refer to the document attached to verify if you have the authority to assign courses to users. If you encounter any difficulties, please contact Talend Support for further assistance.

Your prompt attention to this request would be greatly appreciated. Thank you for your support and consideration.

Best regards,

[Your Name]

Template for Support

Subject: Request to Assign Talend Course to User

Dear Academy Team,

I hope this message finds you well.

I am writing to request the assignment of a Talend course to a user within our organization. Below are the necessary details:

- User Email ID: [Insert User Email]

- Course Name: [Insert Course Name]

- Reason for Assignment: [Briefly explain your request]

- Attached Document: Please refer to the attached document for detailed instructions.

Your prompt assistance in assigning the Talend course to the specified user would be greatly appreciated. Thank you for your support and consideration.

Best regards,

[Your Name]

Important Notes

- Ensure all details provided are accurate and complete before sending your request.

- Use the appropriate email address: customercare@qlik.com.

- Adhere to Qlik community guidelines when submitting requests.

- If you are a Customer Domain Administrator and have any uncertainties regarding course assignment, please refer to the attached document for detailed instructions or contact Talend Support for assistance.

-

How to Request a Talend Migration Token

Introduction

Requesting a Talend migration token involves several specific steps to ensure a smooth transition from one version of Talend Studio to another.

If you encounter any challenges, utilize the support template to contact us for further assistance.

Template for Requesting a Talend Migration Token

Subject: Request for Talend Migration Token

Dear Support Team,

I hope this message finds you well.

I am writing to request a migration token for our organization's Talend Studio. Below are the necessary details:

- Source Talend Studio Version: [Insert Source Version]

- Target (Migration) Talend Studio Version: [Insert Target Version]

- Destination Studio License Key: [Attach Destination Studio License Key]

- Reason for Migration: [Briefly explain your request]

- Attached Document: Please refer to the attached document for detailed instructions.

Your prompt attention to this request would be greatly appreciated.

Thank you for your support and consideration.

Best regards,

[Your Name]

Example Request

Subject: Request for Talend Migration Token

Dear Support Team,

I hope this message finds you well.

I am writing to request a migration token for our organization's Talend Studio. Below are the necessary details:

- Source Talend Studio Version: Talend Data Integration Version 7.3.1

- Target (Migration) Talend Studio Version: Talend Data Integration Version 8.0.1

- Destination Studio License Key: [Attach Destination Studio License Key]

- Reason for Migration: Due to our project requirements, we need to migrate to the latest version of Talend Data Integration.

- Attached Document: Please refer to the attached document for detailed instructions.

Your prompt assistance in providing the migration token would be greatly appreciated.

Thank you for your support and consideration.

Best regards,

John Doe

Important Notes

- Ensure all details provided are accurate and complete before sending your request.

- Use the appropriate email address: customercare@qlik.com.

- Adhere to Qlik community guidelines when submitting requests.

- Attach the destination studio license key when requesting a migration token.

-

Qlik Replicate - MySQL source defect and fix (2023.11)

After an upgrade or fresh installation of Qlik Replicate 2023.11 (builds GA, PR01, and PR02), Qlik Replicate reports errors for MySQL or MariaDB source endpoints. The task repeatedly attempts to start the source capture process but fails. Resuming the task or starting it from a timestamp leads to the same result:

[SOURCE_CAPTURE ]T: Read next binary log event failed; mariadb_rpl_fetch error 0 () [1020403] (mysql_endpoint_capture.c:1060)

[SOURCE_CAPTURE ]T: Error reading binary log. [1020414] (mysql_endpoint_capture.c:3998)
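To check whether a task log contains the messages quoted above, the log lines can be scanned for the two message texts. The Python sketch below shows this. The function name is hypothetical, and a match only suggests this defect, since the same messages could in principle have other causes.

```python
import re
from typing import Iterable

# Message fragments taken from the two log lines quoted above.
DEFECT_PATTERN = re.compile(r"mariadb_rpl_fetch error|Error reading binary log")


def shows_binlog_read_failure(log_lines: Iterable[str]) -> bool:
    """True when a SOURCE_CAPTURE line carries one of the quoted messages."""
    return any(
        "SOURCE_CAPTURE" in line and DEFECT_PATTERN.search(line) is not None
        for line in log_lines
    )
```

A positive result is a prompt to compare the installed build against the affected builds listed in this article.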

Environment:

- Replicate 2023.11 (GA, PR01, PR02)

- MySQL source database, any version

- MariaDB source database, any version

Fix Version & Resolution:

Upgrade to Replicate 2023.11 PR03 (expires 8/31/2024).

The fix is included in Replicate 2024.05 GA.

Workaround:

If you are running 2022.11, keep running it.

No workaround for 2023.11 (GA, PR01, or PR02).
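The affected and fixed builds listed above can be captured in a small lookup, which may be handy when auditing several Replicate servers. This is a minimal Python sketch. The function name is hypothetical, and how the version and build strings are collected from each server is left open.

```python
# Builds of 2023.11 that this article lists as affected.
AFFECTED_BUILDS = {"GA", "PR01", "PR02"}


def is_affected(version: str, build: str) -> bool:
    """True for Replicate 2023.11 GA, PR01, or PR02; false otherwise."""
    return version.strip() == "2023.11" and build.strip().upper() in AFFECTED_BUILDS
```

Under these assumptions, `is_affected("2023.11", "PR02")` is true, while 2023.11 PR03 and 2024.05 GA are reported as not affected by this particular defect.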

Cause & Internal Investigation ID(s):

Jira: RECOB-8090, Description: MySQL source fails after upgrade from 2022.11 to 2023.11

There is a bug in the MariaDB library version 3.3.5 that we started using in Replicate in 2023.11.

The bug was fixed in MariaDB library version 3.3.8, which is shipped with Qlik Replicate 2023.11 PR03 and later versions.

Related Content:

support case #00139940, #00156611

Replicate - MySQL source defect and fix (2022.5 & 2022.11)

-

How to request a control number and LEF

The instructions apply to obtaining your control number and LEF. Signed License Keys (SLK) are sent by email and need to be requested from Qlik Support.

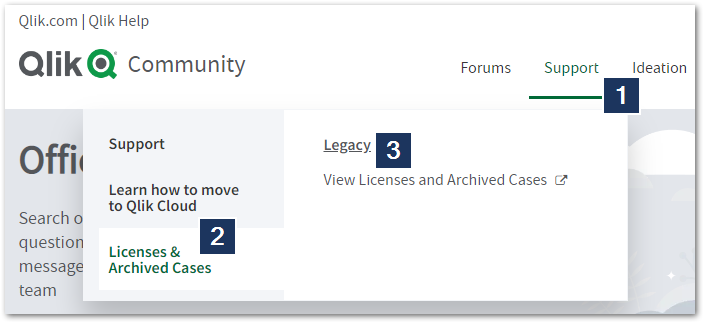

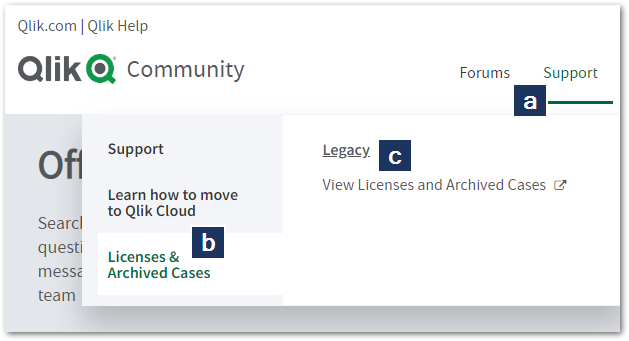

- Login to the Qlik Community and click Support.

*If you do not have a Qlik account (username and password), please see the article How to Register for a Qlik Account

- Click Licenses & Archived Cases

- Click View Licenses and Archived Cases

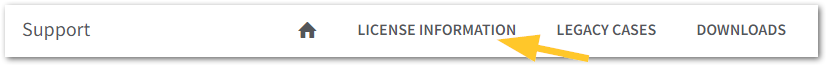

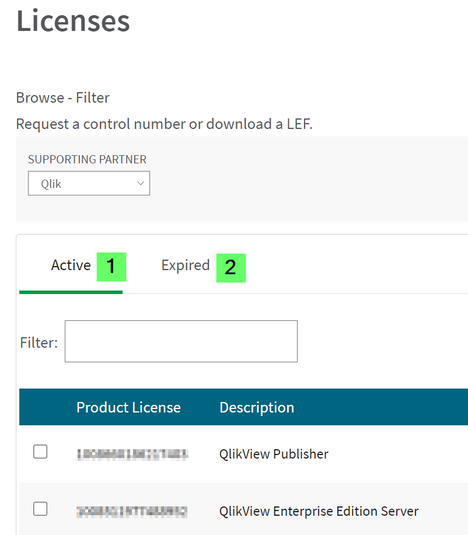

- Click License Information

- Choose the appropriate license/s.

- Click "Request Control Number" or "Download LEF"

For LEF downloads, note that pop-ups must be enabled in your browser to allow the above page to be shown.

Clicking Download LEF provides the LEF immediately.

Request Control Number triggers an email that will arrive in a few minutes.

Transcript

This video will demonstrate how to view a license Enabler File or LEF and Control Number.

If you are looking for a Signed License Key,

this has been sent via email.

If you can’t find your Signed License Key, contact Support.

First visit Qliksupport.force.com/QS_Logininfo and login with your QlikID email address and password.

Click on License Information at the top of the page

and verify the account.

You will be shown a list of Active and Expired Licenses.

You can use the filter to search for a specific license.

Click the checkbox next to the license.

Then click on "Request Control Number" or "Download LEF"

Clicking Download LEF provides the LEF immediately.

Make sure that no pop up blockers are enabled that prevent the pop up from showing.

Request Control Number triggers an email that will arrive in a few minutes.

I hope this helped.

If you'd like more information

Take advantage of the expertise of peers, product experts, Community MVPs and technical support engineers

by asking a question in a Qlik Product Forum.

Hiding in plain sight is perhaps the most powerful feature on the Community:

the Search tool.

This engine allows you to search Qlik Knowledge Base Articles,

Or across the Qlik Community,

Help dot Qlik dot com, Qlik Gallery,

multiple Qlik YouTube channels and more, all from one place.

There’s also the Support space.

We recommend you subscribe to the Support Updates Blog,

And learn directly from Qlik experts via a Support webinar, like Techspert Talks or Q&A with Qlik.

Nailed it.

-

How to view cases in Support Portal

This article explains how to view your own and your colleague's cases in the Support Case Portal.

Steps:

- Login to the Qlik Community

- Click on Support in the top navigational ribbon

- Click Manage Cases (or use the direct link: Case Portal)

- You will then have access to your cases.

- From there, choose between OPEN and RESOLVED cases. The default view is your cases only.

Cases with "Solution Proposed" status are listed in the "RESOLVED" tab.

- Click the 3 dots, then choose 'My Organization's Cases' to view your colleagues' cases

If you do not have this option, see How To View Other User Cases Within Your Organization.

Related Content:

How to create a case and contact Qlik Support

-

Qlik Support Policy and SLAs

This video explains what is covered by Qlik Support, how the severity of a case is defined, and how that affects the service level agreement (SLA) in accordance with the Qlik Support Policy.

If you are looking for Qlik's Support Policy, see Product Terms for the most recent agreement.

Support Policy

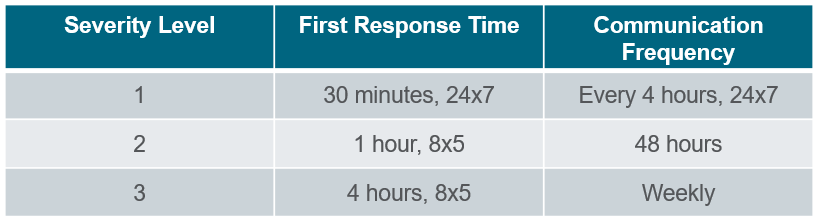

SLA Response Times - Enterprise Support Coverage

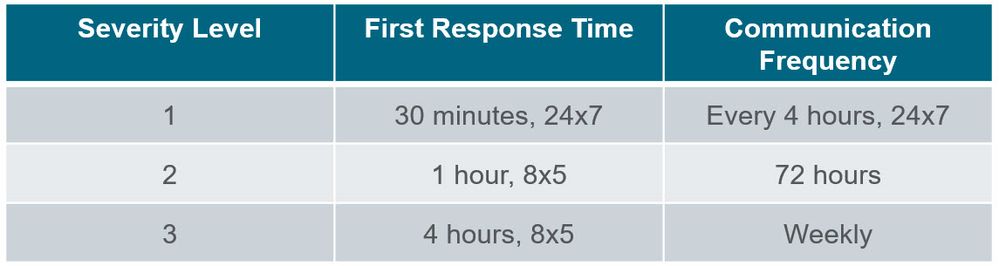

SLA Response Times - OEM / MSP Support Coverage

Related Content

Legal Product Terms and Documentation

How and When to Contact the Consulting Team?

How to raise a technical support case

Transcript

At Qlik, we want to help you succeed.

How we can best help you depends on the challenge you’re facing.

This video will explain what is covered by Qlik Support,

how the severity of a case is defined,

and how that affects the service license agreement.

Qlik Support is an important part of Customer Success.

Our most successful customers are engaged along this entire journey

with our customer onboarding, Education and Professional services.

To help clarify the difference between these offerings,

Qlik Support is there to help when your Qlik product is not performing as expected;

certainly with errors, task failures, or possible bug reports in a break-fix fashion.

And we will work with you to investigate performance degradation or latency issues.

Professional services are available in a more proactive way to offer best practices, upgrades and performance tuning

to help you get the most from your Qlik implementation.

Your environment and data is unique, and professional services can work with you to develop a strategy to be the most successful.

Before creating a case with Qlik Support,

try to find a quick solution with the search tool on Qlik Community.

It searches across our Knowledge base, Qlik Help, and Qlik YouTube channels, and more all from one place.

If you can’t find the help you need in Qlik Community, you can raise a support case with us.

To help us understand the severity of the issue, here is how case severity is defined:

Severity one errors are when the software is inoperable,

or your production environment is inaccessible,

These will be worked 24 - 7 until service is restored.

Severity 2 issues can be a significant performance impact,

An issue that is preventing a future launch,

Or an error that has a temporary work around, but requires significant manual intervention.

Severity 3 issues are when data is delayed,

Results are not matching expectations,

Reporting defects,

Misleading or incomplete documentation,

Or general disruptions in production.

Having an appropriate Severity set on the case is important because it directly determines how quickly the case will receive a response and the frequency of communication during the investigation of the case.

Especially with high severity cases, the severity is expected to change during the life of an investigation.

The initial response time of a Severity one case is within 30 minutes of completing the registration of the case.

After that, the support team member will follow up with you at least every 4 hours, or until access to the production environment has been restored

and the severity of the case can be lowered.

For severity 2 cases,

a first response will be made within the first 60 minutes of case creation, during normal business hours, Monday through Friday.

And follow-ups every 2 days after that.

For severity 3 cases, the initial response will be received within 4 hours, and weekly communication until the issue is resolved.

For the best results with your support case,

set the appropriate severity,

Enter a meaningful and detailed description of the issue

Preemptively provide all the necessary logs and technical details,

and maintain effective activity on the case.

I hope this has helped clarify some details of the Qlik Support policy, and demonstrate how Qlik Support is here to partner with your success.

Thanks for watching.

-

Connect NPrinting Server to One or More Qlik Sense servers

How to Connect NPrinting to Single or Multiple Qlik Sense servers:

- You may use this article to connect NPrinting to a single or multiple QS servers

- In some cases you may wish to connect your NPrinting server to multiple Qlik Sense server environments. This article describes how to enable this feature and how to manage possible errors encountered.

- Note that all additional QS servers must be in the same domain as the original QS and NP servers.

- If having issues connecting to multiple Qlik Sense servers, see Qlik NPrinting will not read Qlik Sense certificates

Environments:

- This feature is ONLY available in NPrinting June 2019 release and newer versions.

- NOTE: This feature is not supported in NPrinting April 2019 and earlier versions.

Implement the solution:

- Go to Qlik Sense QMC>Start>Certificates

- Export the Qlik Sense server certificates from each additional Qlik Sense server.

- Use the NPrinting server computer name or the Friendly URL alias of the NPrinting Server as the "Machine Name", whichever address is used to log in to the NPrinting Web Console.

- Export a certificate for both if both addresses below are used to access the NP web console.

- Computer name: nprintingserverPROD1

- Friendly URL/Alias address: Internal.nprintingserver.domain.com

- Select Include secret key and do NOT include a password when exporting the certificates. See Exporting certificates through the QMC on the Qlik Sense Online Help for details.

- Navigate to the exported certificates location on the QS server and rename the exported file 'client.pfx' with a suitable name. For example, if your Qlik Sense server name is QS1, prepend it to the client certificate file name as follows: QS1client.pfx. (The name should ideally reflect the Qlik Sense server that the file was exported from, but you may use any name that you wish.)

- Copy this file to the NPrinting server path:

"C:\Program Files\NPrintingServer\Settings\SenseCertificates"

- Restart all NPrinting services

- If newly installing NPrinting May 2023 IR or later, please check this article if you are having NPrinting Qlik Sense connection failures: NPrinting Qlik Sense Connections Fail with Fresh Install of May 2023 NPrinting and Higher Version

NOTE: Reminder that the NPrinting Engine service domain user account MUST be ROOTADMIN on each Qlik Sense server which NPrinting is connecting to.
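The rename-and-copy steps above can be sketched in Python. This is a minimal illustration only: `stage_sense_certificate` and its parameters are hypothetical names, not part of any Qlik tooling, and the default folder is simply the path quoted in this article. The exported certificate is assumed to have been copied to a location the script can read.

```python
import shutil
from pathlib import Path

# Folder quoted in the article; adjust if NPrinting is installed elsewhere.
SENSE_CERT_DIR = Path(r"C:\Program Files\NPrintingServer\Settings\SenseCertificates")


def stage_sense_certificate(exported_pfx, server_name, target_dir=SENSE_CERT_DIR):
    """Copy an exported client.pfx into target_dir as '<server_name>client.pfx'.

    Hypothetical helper for illustration; it does not restart any services.
    """
    exported_pfx = Path(exported_pfx)
    target_dir = Path(target_dir)
    if not exported_pfx.is_file():
        raise FileNotFoundError(f"Exported certificate not found: {exported_pfx}")
    if not target_dir.is_dir():
        raise NotADirectoryError(f"Target folder not found: {target_dir}")

    # Prepend the Qlik Sense server name, e.g. QS1 + client.pfx -> QS1client.pfx
    destination = target_dir / f"{server_name}{exported_pfx.name}"
    if destination.exists():
        # Refuse to overwrite so an existing certificate is never replaced silently.
        raise FileExistsError(f"Certificate already present: {destination}")

    shutil.copy2(exported_pfx, destination)
    return destination
```

After copying, the NPrinting services still need to be restarted as described in the steps.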

Test access to the additional Qlik Sense server.

- Open the NPrinting Web Console

- Create a new NP App, for example "NP_App_QSserver-2"

- Create a new NP connection and use the Virtual Proxy Address for the new target Qlik Sense server

- Verify your connection

- Save your connection to load the metadata for the first time.

- Create a test report and preview

- If having issues connecting to multiple Qlik Sense servers, see Qlik NPrinting will not read Qlik Sense certificates

Notes regarding this feature:

- Connecting additional Qlik Sense servers will have an impact on NPrinting server system resources. Carefully monitor NPrinting Server/NPrinting Engine RAM and CPU usage and increase each as needed to ensure normal NPrinting server/engine system operation.

- You may publish Qlik NPrinting reports to a single Qlik Sense Hub only, i.e. the QS hub defined in the NPrinting Web Console under 'Destinations\Hub' while logged on as an NPrinting administrator.

- Publishing to multiple Qlik Sense Hubs is not supported

The Qlik NPrinting server target folder for exported Qlik Sense certificates

"C:\Program Files\NPrintingServer\Settings\SenseCertificates"

- Is retained when Qlik NPrinting is upgraded

- However, this folder is deleted when you uninstall Qlik NPrinting.

- Therefore you need to re-add the exported Qlik Sense certificate to this folder after installing NPrinting again

- Ensure that NO older Qlik Sense server certificates are kept in the Sense certificates folder nor in any sub-folders of this folder
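One way to check for leftover certificates is to list every `.pfx` file in the folder and its sub-folders and compare the result with the file names you expect. The sketch below assumes certificates are stored as `.pfx` files, as in the export steps of this article; `list_certificates` and `unexpected_certificates` are hypothetical helper names.

```python
from pathlib import Path


def list_certificates(cert_dir):
    """Return every .pfx file under cert_dir, including sub-folders, sorted by path."""
    cert_dir = Path(cert_dir)
    return sorted(
        p for p in cert_dir.rglob("*")
        if p.is_file() and p.suffix.lower() == ".pfx"
    )


def unexpected_certificates(cert_dir, expected_names):
    """Return certificates that sit in a sub-folder or whose name is not expected."""
    cert_dir = Path(cert_dir)
    expected = set(expected_names)
    return [
        p for p in list_certificates(cert_dir)
        if p.parent != cert_dir or p.name not in expected
    ]
```

Anything returned by `unexpected_certificates` is only a candidate for review; whether a file is actually outdated still has to be decided by an administrator.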

The information in this article is provided as-is and to be used at own discretion. Depending on tool(s) used, customization(s), and/or other factors ongoing support on the solution below may not be provided by Qlik Support.

Related Content

- NPrinting Qlik Sense Connections Fail with Fresh Install of May 2023 NPrinting and Higher Version

- https://help.qlik.com/en-US/nprinting/Content/NPrinting/DeployingQVNprinting/NPrinting-with-Sense.htm

- https://help.qlik.com/en-US/nprinting/February2021/Content/NPrinting/DeployingQVNprinting/NPrinting-with-Sense.htm#anchor-1

-

Replicate - Oracle source: Long Object Names exceeding 30 Bytes

In the Replicate Oracle source endpoint there was a limitation:

Object names exceeding 30 characters are not supported. Consequently, tables with names exceeding 30 characters or tables containing column names exceeding 30 characters will not be replicated.

Cause

The limitation comes from the behavior of older Oracle versions. However, since Oracle 12.2, Oracle supports object names of up to 128 bytes, and long object names are now in common use. The User Guide limitation "Object names exceeding 30 characters are not supported" can now be overcome.

There are two major types of long identifier name in Oracle, 1- long table name, and 2- long column name.

Resolution

1- Error messages of long table name

[METADATA_MANAGE ]W: Table 'SCOTT.VERYVERYVERYLONGLONGLONGTABLETABLETABLENAMENAMENAME' cannot be captured because the name contains 51 bytes (more than 30 bytes)
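The byte count in the warning above can be reproduced with a short Python sketch, which may help when screening a list of table or column names before running a task. It assumes names are measured in UTF-8 bytes; the actual byte length depends on the database character set. The helper names are hypothetical.

```python
# Limit quoted in the Replicate warning message.
LEGACY_LIMIT_BYTES = 30


def identifier_bytes(name, encoding="utf-8"):
    """Length of an identifier in bytes (UTF-8 is an assumption)."""
    return len(name.encode(encoding))


def names_over_limit(names, limit=LEGACY_LIMIT_BYTES):
    """Map each name longer than `limit` bytes to its byte length."""
    return {
        name: identifier_bytes(name)
        for name in names
        if identifier_bytes(name) > limit
    }


# The table name from the warning above measures 51 bytes.
print(identifier_bytes("VERYVERYVERYLONGLONGLONGTABLETABLETABLENAMENAMENAME"))
```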

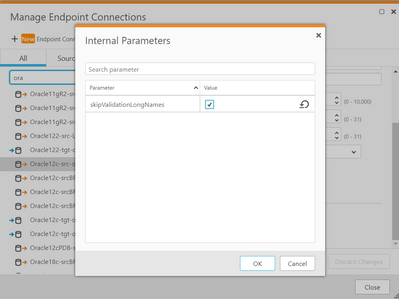

Add an internal parameter skipValidationLongNames to the Oracle source endpoint, set its value to true (default is false), then re-run the task.

2- Error messages of long column name

There are different messages if the column name exceeds 30 characters

[METADATA_MANAGE ]W: Table 'SCOTT.TEST1' cannot be captured because it contains column with too long name (more than 30 bytes)

Or

[SOURCE_CAPTURE ]E: Key segment 'CASE_LINEITEM_SEQ_NO' value of the table 'SCOTT.MY_IMPORT_ORDERS_APPLY_LINEITEM32' was not found in the bookmark

Or (incomplete WHERE clause)

[TARGET_APPLY ]E: Failed to build update statement, statement 'UPDATE "SCOTT"."MY_IMPORT_ORDERS_APPLY_LINEITEM32"

SET "COMMENTS"='This is final status' WHERE ', stream position '0000008e.64121e70.00000001.0000.02.0000:1529.17048.16']

There are 2 steps to solve the above errors for long column names:

(1) Add the internal parameter skipValidationLongNames (see above) to the endpoint

(2) Enable the parameter "enable_goldengate_replication" in Oracle. This can only be done by the end user and their DBA:

alter system set ENABLE_GOLDENGATE_REPLICATION=true;

Note that this is supported only when the user has a GoldenGate license, and Oracle routinely audits licenses. Consult with the user's DBA before altering the system settings.

Environment

- Oracle (version 12.2 and up) source endpoint for Qlik Replicate

- Qlik Replicate versions 2021.5/2021.11/2022.5 and up

Related Content

Internal support case ID: # 00045265.

-

Replicate - DB2 LUW ODBC Client error Specified driver could not be loaded due t...

While working with the DB2 LUW endpoint, Replicate reports an error after the 64-bit IBM DB2 Data Server Client 11.5 installation:

SYS-E-HTTPFAIL, Cannot connect to DB2 LUW Server.

SYS,GENERAL_EXCEPTION,Cannot connect to DB2 LUW Server,RetCode: SQL_ERROR SqlState: IM003 NativeError: 160 Message: Specified driver could not be loaded due to system error 1114: A dynamic link library (DLL) initialization routine failed. (IBM DB2 ODBC DRIVER, C:\Program Files\IBM\SQLLIB\BIN\DB2CLIO.DLL).

Resolution

Install the 64-bit IBM DB2 Data Server Client 11.5.4 (for example 11.5.4.1449) rather than 11.5.0 (actual version is 11.5.0.1077).
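If you track client versions across several servers, the comparison above can be sketched in Python. This is only an illustration: the helper names are hypothetical, and treating every build at or above 11.5.4 as acceptable is an assumption, since the article confirms 11.5.4 only.

```python
# Minimum client level named in the resolution above.
MINIMUM_CLIENT = (11, 5, 4)


def parse_version(text):
    """Turn a dotted version string such as '11.5.0.1077' into a tuple of ints."""
    return tuple(int(part) for part in text.split("."))


def client_meets_minimum(version_text, minimum=MINIMUM_CLIENT):
    """Compare only the leading components covered by `minimum`."""
    return parse_version(version_text)[: len(minimum)] >= minimum
```

For the two builds mentioned in this article, `client_meets_minimum("11.5.0.1077")` is false and `client_meets_minimum("11.5.4.1449")` is true.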

Environment

Qlik Replicate : all versions

Replicate Server platform: Windows Server 2019

DB2 Data Server Client : version 11.5.0.xxxx

Internal Investigation ID(s):

Support cases, #00076295

-

Qlik NPrinting Training and Information Resources- Step by Step Help Pages, Vid...

If you are new to the Qlik NPrinting 2020 platform (and higher versions) or just want to brush up on your NPrinting report development skills, we have good news for you.

You can find a wealth of Installation, Design and Distribution tutorial videos at the following links:

- https://help.qlik.com/en-US/videos

- https://learning.qlik.com/mod/page/view.php?id=24708&Category=How%20do%20I%20Videos

- Select Qlik NPrinting from the product/program drop down selector

- Note that you must have site access to enroll in either Complimentary or Paid Courses

Once you arrive on this page, just click the "Qlik NPrinting" link under "Filter on products" to access the tutorial videos. You may also choose any of the other Qlik product tutorial video links as needed.

If needed, you can also filter on product version in case you are running an older version of NPrinting.

NPrinting Design tutorials here:

Note: It is very important for normal, unimpeded NPrinting report design to keep in mind that the NPrinting designer must be the same version as the NPrinting Server and should not be upgraded independently. You will need to contact your NPrinting Administrator to request an entire NPrinting system upgrade if you need a newer version of the NPrinting Designer.

Report Distribution Help Information:

NPrinting 16 to 19 + Migration Tool FAQ (If you are currently operating an NPrinting 16 environment):

Additionally, you will find Qlik Product training resources here:

- https://qcc.qlik.com/

- https://www.qlik.com/us/services/training

- http://inter.viewcentral.com/events/uploads/qlik/QlikEducationServices-CourseDiagram.pdf (page 17)

If you prefer an on site engagement, please visit the following to request an engagement as needed:

For Self Service NPrinting Product installation information, please visit:

- Getting started

- Deploying

- NPrinting, QlikView and Qlik Sense need to be installed in the same domain and on separate computers to ensure the greatest level of success and robust performance. See: Supported and unsupported configurations

- NPrinting installation and upgrades: https://community.qlik.com/t5/Support-Knowledge-Base/Qlik-NPrinting-Upgrade-Install-from-NPrinting-17-3-x-or-higher/ta-p/1713304

Keep an eye out for unsupported items

- https://help.qlik.com/en-US/nprinting/Content/NPrinting/GettingStarted/HowCreateConnections/Connect-to-QlikView-docs.htm

- https://help.qlik.com/en-US/nprinting/Content/NPrinting/GettingStarted/HowCreateConnections/Connect-to-QlikSense-apps.htm

NOTE: If you have an issue regarding your NP designer or NPrinting server, you may begin the troubleshooting process by typing your query into the Qlik Community Search. Often times you will find the same question as well as answers already mentioned in the community.

Also feel free to start a new community discussion thread or contact the Qlik Support Desk or your Qlik Support Partner if you are unable to get the answers you need within this community forum.

-

Qlik Replicate Oracle Source Redo Log with Sequence not found error when DEST_ID...

Replicate tries to retrieve the archived REDO log with sequence 2056016, thread 1, but fails with a REDO log sequence not found error. The Archived Redo Log has the Primary Oracle DB as DEST_ID 1 and the Standby as DEST_ID 32, pointing to the correct location.

Environment

Qlik Replicate 2022.11 and previous versions

Resolution

Replicate 2022.11 and earlier releases cannot use a DEST_ID greater than 31 for Primary or Standby Oracle Redo Log locations. Replicate 2023.5 SR03 supports DEST_ID 32.

Cause

Qlik Replicate 2022.11 only supported DEST_ID 0 through 31.

Related Content

2023.5 SR03

https://files.qlik.com/url/QR_2023_5_PR03

Internal Investigation ID(s)

JIRA RECOB-7509

-

Replicate - MySQL source defect and fix (2022.5 & 2022.11)

Replicate reported errors when resuming a task if the source MySQL was running on Windows (no problem occurred when MySQL was running on Linux):

[SOURCE_CAPTURE ]I: Stream positioning at context '$.000034:3506:-1:3506:0'

[SOURCE_CAPTURE ]T: Read next binary log event failed; mariadb_rpl_fetch error 1236 (Could not find first log file name in binary log index file)

Replicate also sometimes reported the following at MySQL source endpoints, regardless of the MySQL source platform:

[SOURCE_CAPTURE ]W: The given Source Change Position points inside a transaction. Replicate will ignore this transaction and will capture events from the next BEGIN or DDL events.

Environment:

- Replicate 2022.5 PR4 (2022.5.0.815)

- Replicate 2022.11 PR1 (2022.11.0.289)

- MySQL source database , any version

Fix Version & Resolution:

Upgrade to Replicate 2022.11 PR2 (2022.11.0.394, released already) or higher, or Replicate 2022.5 PR5 (coming soon)

Workaround:

If you are running 2022.5 PR3 (or lower), keep running it, or upgrade to PR5 (or higher).

No workaround for 2022.11 (GA or PR01).

Cause & Internal Investigation ID(s):

Jira: RECOB-6526 , Description: It would not be possible to resume a task if MySQL Server was on Windows

Jira: RECOB-6499 , Description: Resuming a task from a CTI event, would sometimes result in missing events or/and a redundant warning message

Related Content:

support case #00066196

support case #00063985 (#00049357)

Qlik Replicate - MySQL source defect and fix (2023.11)

-

Qlik Replicate: Support JSONB datatype for PostgreSQL ODBC data source

While working with a PostgreSQL ODBC DSN as the source endpoint, the ODBC driver interprets the JSONB datatype as VARCHAR(255) by default. This truncates the JSONB column values no matter how the LOB size or data type length in the target table is defined.

In general the task reports a warning such as:

2022-12-22T21:28:49:491989 [SOURCE_UNLOAD ]W: Truncation of a column occurred while fetching a value from array (for more details please use verbose logs)

Resolution

There are several options to solve the problem (any single one is good enough):

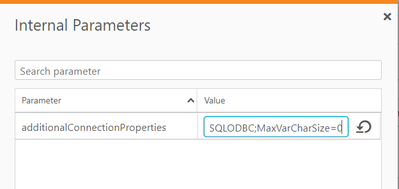

I) Change PostgreSQL ODBC source endpoint connection string

- Open PostgreSQL ODBC source endpoint

- Go to the Advanced tab

- Open Internal Parameters

- Add a new parameter named additionalConnectionProperties

- Press <Enter> and set the parameter's value to:

MaxVarCharSize=0

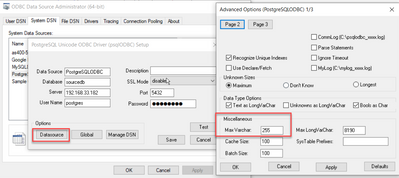

II) Or on Windows/Linux Replicate Server, add one line to "odbc.ini" in the DSN definition:

MaxVarCharSize=0

III) Or on Windows, set "Max Varchar" to 0 from the default value 255 in the ODBC Manager GUI (64-bit).
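For option II, the "odbc.ini" change can also be scripted. The sketch below uses Python's standard `configparser` module and is only a rough illustration: `set_max_varchar_size` and the DSN name passed to it are hypothetical, the file is assumed to be a plain INI file without duplicate keys, and `configparser` drops comments when it rewrites the file, so take a backup first.

```python
import configparser


def set_max_varchar_size(ini_path, dsn_name, value="0"):
    """Set MaxVarCharSize in the [dsn_name] section of an odbc.ini file."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # keep key case, e.g. MaxVarCharSize
    parser.read(ini_path)
    if not parser.has_section(dsn_name):
        raise KeyError(f"DSN section not found in {ini_path}: {dsn_name}")

    parser.set(dsn_name, "MaxVarCharSize", value)
    with open(ini_path, "w") as handle:
        # Write key=value without spaces around '=' to match the line shown above.
        parser.write(handle, space_around_delimiters=False)
```

A call such as `set_max_varchar_size("/etc/odbc.ini", "PostgreSQL_Source")` would add the line to the `[PostgreSQL_Source]` section; both the path and the DSN name here are placeholders.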

Environment

Qlik Replicate all versions

PostgreSQL all versions

Internal Investigation ID(s):

Support cases, #00062911

Ideation article, Support JSONB

-

How To Get Started with Qlik AutoML

- Introduction

- System Requirements

- Pricing and Packaging

- Software updates

- Types of Models Supported

- Getting Started with AutoML

- Data Connections

- Data Preparation abilities

- Using realtime-prediction API

- Integration with Qlik Sense

- Contacting Support

- Additional resources

- Environment

This is a guide to get you started working with Qlik AutoML.

Introduction

AutoML is an automated machine learning tool in a code free environment. Users can quickly generate models for classification and regression problems with business data.

System Requirements

Qlik AutoML is available to customers with the following subscription products:

Qlik Sense Enterprise SaaS

Qlik Sense Enterprise SaaS Add-On to Client-Managed

Qlik Sense Enterprise SaaS - Government (US) and Qlik Sense Business do not support Qlik AutoML

Pricing and Packaging

For subscription tier and exact pricing information, please reach out to your sales or account team. The metered pricing depends on how many models you would like to deploy, dataset size, API rate, number of concurrent tasks, and advanced features.

Software updates

Qlik AutoML is a part of the Qlik Cloud SaaS ecosystem. Code changes for the software including upgrades, enhancements and bug fixes are handled internally and reflected in the service automatically.

Types of Models Supported

AutoML supports Classification and Regression problems.

Binary Classification: used for models with a Target of only two unique values. Examples: payment default, customer churn.

Customer Churn.csv (see downloads at top of the article)

Multiclass Classification: used for models with a Target of more than two unique values. Examples: grading gold, platinum/silver, milk grade.

MilkGrade.csv (see downloads at top of the article)

Regression: used for models with a Target that is a number. Examples: how much a customer will purchase, predicting housing prices.

AmesHousing.csv (see downloads at top of the article)
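To get a feel for which problem type a target column suggests before uploading data, the three definitions above can be sketched in Python. This is not how Qlik AutoML itself decides; it is a rough illustration with one stated assumption about precedence: a target with exactly two unique values is treated as binary even when numeric, and a numeric target with more than two values is treated as regression. `suggest_problem_type` is a hypothetical name.

```python
from numbers import Number


def suggest_problem_type(target_values):
    """Suggest a problem type from the values of a target column."""
    values = [v for v in target_values if v is not None]  # ignore missing values
    unique = set(values)
    if len(unique) < 2:
        raise ValueError("Target needs at least two distinct values")
    if len(unique) == 2:
        return "binary classification"
    # bool is a Number subclass in Python, so exclude it explicitly.
    if all(isinstance(v, Number) and not isinstance(v, bool) for v in values):
        return "regression"
    return "multiclass classification"
```

For example, a churn column holding only "yes" and "no" suggests binary classification, a grade column with three labels suggests multiclass classification, and a column of sale prices suggests regression.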

Getting Started with AutoML

What is AutoML (14 min)

Exploratory Data Analysis (11 min)

Model Scoring Basics (14 min)

Prediction Influencers (10 min)

Qlik AutoML Complete Walk Through with Qlik Sense (24 min)

Non video:

How to upload data, training, deploying and predicting a model

Data Connections

Data for modeling can be uploaded from a local source or via the data connections available in Qlik Cloud.

You can add a dataset or data connection with the 'Add new' green button in Qlik Cloud.

There are a variety of data source connections available in Qlik Cloud.

Once data is loaded and available in Qlik Catalog then it can be selected to create ML experiments.

Data Preparation abilities

AutoML uses a variety of data science pre-processing techniques such as Null Handling, Cardinality, Encoding, and Feature Scaling. Additional reference here.

Using realtime-prediction API

Please reference these articles to get started using the realtime-prediction API

Integration with Qlik Sense

By leveraging Qlik Cloud, predicted results can be surfaced in Qlik Sense to visualize and draw additional conclusions from the data.

How to join predicted output with original dataset

Contacting Support

If you need additional help please reach out to the Support group.

It is helpful to have your tenant ID and subscription info, which can be found with these steps.

Additional resources

Please check out our articles in the AutoML Knowledge Base.

Or post questions and comments to our AutoML Forum.

Environment

The information in this article is provided as-is and to be used at own discretion. Depending on tool(s) used, customization(s), and/or other factors ongoing support on the solution below may not be provided by Qlik Support.

-

How to view Active & Expired Licenses in Support Portal

Users can view all licenses by navigating between the Active and Expired tabs.

- Login to the legacy Support Portal by following this link or

- Login to Community (https://community.qlik.com) and click Support in the navigational ribbon

- Then click Licenses & Archived Cases

- And choose View Licenses and Archived Cases to be redirected

- On the Legacy Portal, click on View License Information or Archived Cases at the top of the page and select View My Licenses.

- You will be redirected to the License List page or Legacy Cases list.

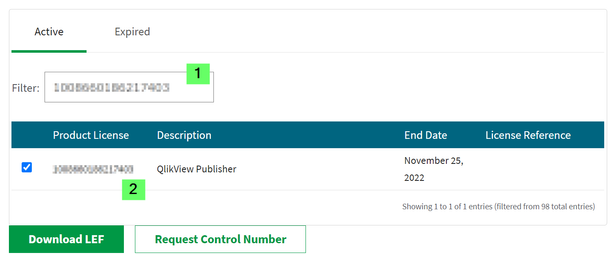

Select Active (1) to view Active License.

Select Expired (2) to view Expired Maintenance Licenses

- If you would like to see a particular license, you can filter the list with the license key.

Note: Evaluation Licenses have a "TimeLimit Value" in the LEF, and licenses with a "TimeLimit Value" are issued for evaluation purposes only. When the TimeLimit value on a license expires, the license stops working, but it might still be listed as active in the support portal because its "End Date" has not yet passed. Once the End Date has passed, the license will no longer be visible under the Active tab in the support portal.

Also note that the legacy license portal only holds perpetual licenses. New subscription licenses are not updated in the legacy license portal; if you need the details of a subscription-based license, contact Support via chat.

If there is any discrepancy with Licenses, please contact Qlik Customer Support via Qlik Support Portal

- Login to the legacy Support Portal by following this link or