

Featured Content


How to contact Qlik Support

Qlik offers a wide range of channels to assist you in troubleshooting, answering frequently asked questions, and getting in touch with our technical experts. In this article, we guide you through all available avenues to secure your best possible experience.

For details on our terms and conditions, review the Qlik Support Policy.

Index:

- Support and Professional Services; who to contact when.

- Qlik Support: How to access the support you need

- 1. Qlik Community, Forums & Knowledge Base

- The Knowledge Base

- Blogs

- Our Support programs:

- The Qlik Forums

- Ideation

- How to create a Qlik ID

- 2. Chat

- 3. Qlik Support Case Portal

- Escalate a Support Case

- Phone Numbers

- Resources

Support and Professional Services; who to contact when.

We're happy to help! Here's a breakdown of resources for each type of need.

Support: Reactively fixes technical issues and answers narrowly defined, specific questions. Handles administrative issues to keep the product up to date and functioning.

- Error messages

- Task crashes

- Latency issues (due to errors or 1-1 mode)

- Performance degradation without config changes

- Specific questions

- Licensing requests

- Bug Report / Hotfixes

- Not functioning as designed or documented

- Software regression

Professional Services (*): Proactively accelerates projects, reduces risk, and achieves optimal configurations. Delivers expert help for training, planning, implementation, and performance improvement.

- Deployment Implementation

- Setting up new endpoints

- Performance Tuning

- Architecture design or optimization

- Automation

- Customization

- Environment Migration

- Health Check

- New functionality walkthrough

- Realtime upgrade assistance

(*) For Professional Services, reach out to your Account Manager or Customer Success Manager.

Qlik Support: How to access the support you need

1. Qlik Community, Forums & Knowledge Base

Your first line of support: https://community.qlik.com/

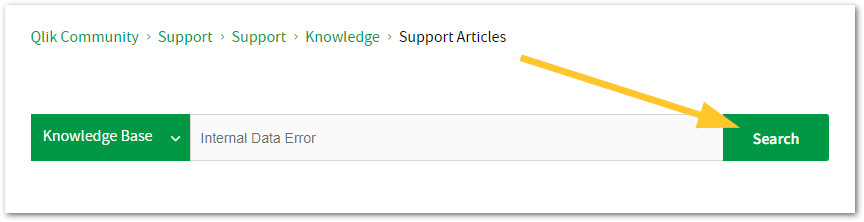

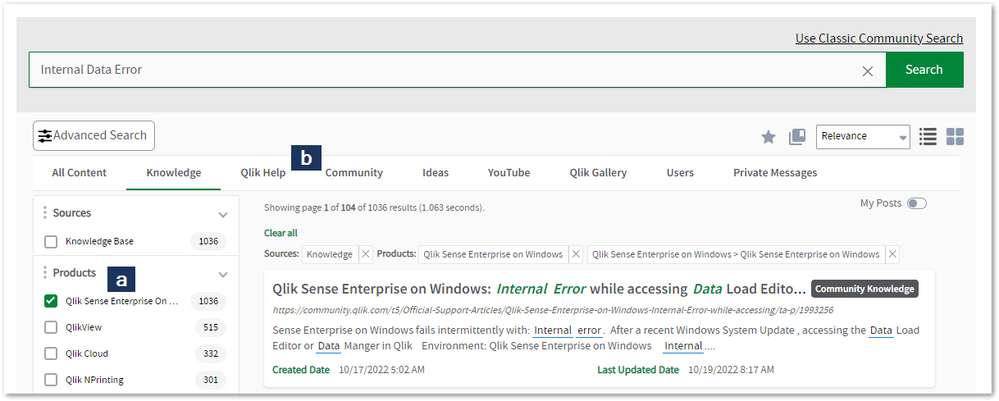

Looking for content? Type your question into our global search bar:

The Knowledge Base

Leverage the enhanced and continuously updated Knowledge Base to find solutions to your questions and best practice guides. Bookmark this page for quick access!

- Go to the Official Support Articles Knowledge base

- Type your question into our Search Engine

- Need more filters?

- Filter by Product

- Or switch tabs to browse content in the global community, on our Help Site, or even on our YouTube channel

Blogs

Subscribe to maximize your Qlik experience!

The Support Updates Blog

The Support Updates blog delivers important and useful Qlik Support information about end-of-product support, new service releases, and general support topics.

The Qlik Design Blog

The Design blog is all about product and Qlik solutions, such as scripting, data modelling, visual design, extensions, best practices, and more!

The Product Innovation Blog

By reading the Product Innovation blog, you will learn about what's new across all of the products in our growing Qlik product portfolio.

Our Support programs:

Q&A with Qlik

Live sessions with Qlik Experts in which we focus on your questions.

Techspert Talks

Techspert Talks is a free monthly webinar to facilitate knowledge sharing.

Technical Adoption Workshops

Our in-depth, hands-on workshops allow new Qlik Cloud Admins to build alongside Qlik Experts.

Qlik Fix

Qlik Fix is a series of short videos with helpful solutions for Qlik customers and partners.

The Qlik Forums

- Quick, convenient, 24/7 availability

- Monitored by Qlik Experts

- New releases publicly announced within Qlik Community forums

- Local language groups available

Ideation

Suggest an idea, and influence the next generation of Qlik features!

Search & Submit Ideas

Ideation Guidelines

How to create a Qlik ID

Get the full value of the community.

Register a Qlik ID:

- Go to: qlikid.qlik.com/register

- You must enter your company name exactly as it appears on your license or there will be significant delays in getting access.

- You will receive a system-generated email with an activation link for your new account. Note: this link expires after 24 hours.

If you need additional details, see: Additional guidance on registering for a Qlik account

If you encounter problems with your Qlik ID, contact us through Live Chat!

2. Chat

Incidents are supported through our chat: click Chat Now on any Support page across Qlik Community.

To raise a new issue, all you need to do is chat with us. With this, we can:

- Answer common questions instantly through our chatbot

- Have a live agent troubleshoot in real time

- For items that require further investigation, we will create a case on your behalf with step-by-step intake questions.

3. Qlik Support Case Portal

Log in to manage and track your active cases in Manage Cases.

Please note: to create a new case, it is easiest to do so via our chat (see above). Our chat will log your case through a series of guided intake questions.

Your advantages:

- Self-service access to all incidents so that you can track progress

- Option to upload documentation and troubleshooting files

- Option to include additional stakeholders and watchers to view active cases

- Follow-up conversations

When creating a case, you will be prompted to enter problem type and issue level. Definitions shared below:

Problem Type

Select Account Related for issues with your account, licenses, downloads, or payment.

Select Product Related for technical issues with Qlik products and platforms.

Priority

If your issue is account related, you will be asked to select a Priority level:

Select Medium/Low if the system is accessible, but there are some functional limitations that are not critical in the daily operation.

Select High if there are significant impacts on normal work or performance.

Select Urgent if there are major impacts on business-critical work or performance.

Severity

If your issue is product related, you will be asked to select a Severity level:

Severity 1: Qlik production software is down or not available, but not because of scheduled maintenance and/or upgrades.

Severity 2: Major functionality is not working in accordance with the technical specifications in documentation or significant performance degradation is experienced so that critical business operations cannot be performed.

Severity 3: Any error that is not a Severity 1 or Severity 2 issue. For more information, visit our Qlik Support Policy.

Escalate a Support Case

If you require a support case escalation, you have two options:

- Request to escalate within the case, mentioning the business reasons.

To escalate a support incident successfully, mention your intention to escalate in the open support case. This will begin the escalation process.

- Contact your Regional Support Manager

If more attention is required, contact your regional support manager. You can find a full list of regional support managers in the How to escalate a support case article.

Phone Numbers

When other Support Channels are down for maintenance, please contact us via phone for high severity production-down concerns.

- Qlik Data Analytics: 877-754-5843

- Qlik Data Integration: 781-730-4060

- Talend AMER Region: 1-800-810-3065

- Talend UK Region: 44 800-098-8473

- Talend APAC Region: 65-3163-2072

Resources

A collection of useful links.

Qlik Cloud Status Page

Keep up to date with Qlik Cloud's status.

Support Policy

Review our Service Level Agreements and License Agreements.

Live Chat and Case Portal

Your one-stop shop to contact us.

Recent Documents


How to Move the Data Directory to a Different Replicate Server

The basic idea behind this procedure is that, by default, the Replicate data repository is encrypted with a machine key that is unique to each machine.

This means you cannot simply move the data repository to another Replicate server, because the target server uses its own machine key, which is different.

To allow the move, you need to re-encrypt the source data repository with a predefined password that is not tied to the machine. This password can then be used on the target machine to access the repository after it is migrated.

Environment

The information in this article is provided as-is and is to be used at your own discretion. Depending on the tool(s) used, customization(s), and/or other factors, ongoing support on the solution below may not be provided by Qlik Support.

Note: Re-encrypting the data repository requires you to re-enter the endpoint passwords. Therefore, we strongly recommend encrypting the repository with a predefined key right after installation, before you set up endpoints and tasks on the machine.

Since the Server and the UI use two different keys, both keys must be updated using the following procedure:

- Assuming both servers are members of the same domain, make sure you can open the Replicate console as a domain user. If you cannot, go to:

Server -> User Permissions

Add a domain user, grant it the "Admin" access level, and save the new settings.

Reopen the console and make sure you can access it with the domain user's credentials.

This step is needed because otherwise you won't have a valid user to open the console on the target machine.

- To set the server repository master key password on the source server, open the "Attunity Replicate Command line" as Administrator.

Important: For all the repctl commands below, if the data directory is not in the default location, you must specify its full path with the "-d" switch immediately after "repctl", followed by the rest of the command:

repctl -d "YOUR_DATA_DIRECTORY_PATH"

Run the following command to set the Replicate server key:

C:\Program Files\Attunity\Replicate\bin>repctl setmasterkey master_key_scope=1 master_key=Server_Master_key

[setmasterkey command] Succeeded

- The UI master key password must contain 32 varying characters. You can generate one using the following command:

C:\Program Files\Attunity\Replicate\bin>RepUiCtl.exe utils genpassword

miBqZuBFgOJevgCt9myBqiWYjZKAdnEn

Note: you must save this password in a safe place for future use.

Now set the above password using the following command:

C:\Program Files\Attunity\Replicate\bin>RepUiCtl.exe masterukey set -p miBqZuBFgOJevgCt9myBqiWYjZKAdnEn

The master user password has been changed. The change will only take effect after the service is restarted.

Replicate Control Program completed successfully.

- Restart both services:

AttunityReplicateServer

AttunityReplicateConsole

- Open the console. Since the master key password has been changed, you'll need to re-enter the password for each endpoint. Do so, and make sure the connection test succeeds for each endpoint.

- On the target machine, stop both Replicate services and rename the Data directory.

- Copy the data directory from the source machine to the target machine.

- In the target Data directory, delete the file:

ServiceConfiguration.xml

This file refers to the source machine name and is not valid on the target machine. Starting the services on the target machine recreates the file from scratch, matching localhost.

- On the target machine, run the same password set command for the UI:

C:\Program Files\Attunity\Replicate\bin>RepUiCtl.exe masterukey set -p miBqZuBFgOJevgCt9myBqiWYjZKAdnEn

- Start both Replicate services, open the console, and make sure tasks can be resumed properly.
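If you prefer to script parts of this procedure, the Python sketch below covers two of the steps: generating a 32-character UI master key (as an alternative to RepUiCtl.exe utils genpassword) and staging the copied Data directory on the target machine (rename the existing Data directory, copy the source one, delete ServiceConfiguration.xml). It is a minimal sketch, not a Qlik-provided tool. The function names, the "_old" backup suffix, and the letters-and-digits character set are our own assumptions; it assumes both Replicate services on the target are already stopped and the source Data directory is reachable as a local or UNC path. Setting the master keys still requires repctl and RepUiCtl.exe as described above.

```python
import secrets
import shutil
import string
from pathlib import Path
from typing import Optional

# Assumption: letters and digits are acceptable key characters; the sample key
# generated by "RepUiCtl.exe utils genpassword" in this article uses only these.
ALPHABET = string.ascii_letters + string.digits


def generate_ui_master_key(length: int = 32) -> str:
    """Hypothetical helper: random key of `length` not-all-identical characters."""
    while True:
        key = "".join(secrets.choice(ALPHABET) for _ in range(length))
        if len(set(key)) > 1:  # "varying characters": reject a degenerate key
            return key


def stage_data_directory(source_data: Path, target_data: Path) -> Optional[Path]:
    """Hypothetical helper for the file steps on the target machine.

    Assumes both Replicate services on the target are already stopped.
    Returns the path of the renamed original Data directory, if one existed.
    """
    backup = None
    if target_data.exists():
        # Rename rather than delete, so the original can be restored.
        backup = target_data.with_name(target_data.name + "_old")
        if backup.exists():
            raise FileExistsError(backup)
        target_data.rename(backup)
    shutil.copytree(source_data, target_data)
    # ServiceConfiguration.xml refers to the source machine name; the services
    # recreate it when they start on the target machine.
    (target_data / "ServiceConfiguration.xml").unlink(missing_ok=True)
    return backup
```

Renaming instead of deleting the target's original Data directory is a deliberate choice: if the migrated repository does not work, you can stop the services and rename the directories back. Remember to save the generated key in a safe place, as noted above.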


How to Automatically be Notified of New Releases and Qlik Support Updates

Qlik Support communicates Product Releases in its Release Notes board, and information on Product alerts and Support related activities (Webinars and Q&As) on the Qlik Support Updates blog.

To subscribe to the release notes:

- Go to the Release Notes

- Log in to the Community

- Click Subscribe

This will alert you about activities such as:

- New product releases

- Feature releases, such as patches and service releases

To subscribe to the blog:

- Go to the Support Updates Blog

- Log in to the Community

- Click Subscribe

This will alert you about activities such as:

- Security Updates

- Techspert Talks

- Q&A Sessions

- Support related updates

- Product deprecation


Qlik Replicate and DB2i source endpoint: source capture "Error parsing"

The IBM DB2 for iSeries source endpoint occasionally encounters an error during the CDC stage. This issue appears to be linked to the presence of the IBM i Access ODBC Driver versions 7.1.26 and 7.1.27.

The error message in the task log file:

[SOURCE_CAPTURE ]E: Error parsing [1020109] (db2i_endpoint_capture.c:652)

The issue specifically arises during the CDC stage; however, the Full Load stage operates smoothly without any complications.

Resolution:

As a workaround, downgrade the IBM i Access ODBC client from version '07.01.027' or '07.01.026' to '07.01.025'.

At the time of writing, the most recent version of the IBM i Access ODBC client is '07.01.027'. For compatibility reasons, revert to version '07.01.025' rather than '07.01.026', as '07.01.026' exhibits the same issue.
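To check whether an installed client is one of the builds named here, a small Python sketch can compare its version string against this article's list. The function names are hypothetical, and the sketch assumes you have already read the driver version (for example, from the ODBC Data Source Administrator) as a dotted string such as '07.01.027'. It only encodes the versions mentioned in this article; a version it does not list is not thereby known to be safe.

```python
from typing import Tuple

# IBM i Access ODBC client versions named in this article.
AFFECTED = {(7, 1, 26), (7, 1, 27)}
WORKAROUND = (7, 1, 25)


def parse_version(text: str) -> Tuple[int, ...]:
    """Turn '07.01.027' (or '7.1.27') into (7, 1, 27)."""
    return tuple(int(part) for part in text.strip().split("."))


def check_db2i_odbc_client(version: str) -> str:
    """Classify a client version using only the facts in this article."""
    parsed = parse_version(version)
    if parsed in AFFECTED:
        return "affected: downgrade to 07.01.025"
    if parsed == WORKAROUND:
        return "workaround version"
    return "not listed in this article"
```

Comparing parsed integer tuples rather than raw strings means '07.01.027' and '7.1.27' are treated as the same version.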

Various factors can contribute to encountering the 'Error parsing' message, including:

- DB2i ODBC version '07.01.027' or '07.01.026' (as described in this article)

- More than 300 captured tables in a single task

- A source table created by DDS

- Garbage data in the table

- Special characters in a table object identifier (table name or column name)

If you continue to encounter the error after switching to '07.01.025', please reach out to Qlik Support for further assistance.

Cause:

The behavior of IBM DB2i ODBC versions '07.01.026' and '07.01.027' differs slightly from that of '07.01.025'. In certain scenarios, they may return incorrect column lengths.

Internal Investigation ID(s):

#00158029, #00160002, QB-26413

Environment:

- Qlik Replicate All versions

- IBM DB2i All versions

- IBM DB2i Client versions '07.01.026' and '07.01.027'


Qlik Replicate - MySQL source defect and fix (2023.11)

After an upgrade or fresh installation of Qlik Replicate 2023.11 (including builds GA, PR01, and PR02), Qlik Replicate reports errors for MySQL or MariaDB source endpoints. The task repeatedly retries the source capture process but fails; resuming or starting from a timestamp leads to the same result:

[SOURCE_CAPTURE ]T: Read next binary log event failed; mariadb_rpl_fetch error 0 () [1020403] (mysql_endpoint_capture.c:1060)

[SOURCE_CAPTURE ]T: Error reading binary log. [1020414] (mysql_endpoint_capture.c:3998)

Environment:

- Replicate 2023.11 (GA, PR01, PR02)

- MySQL source database, any version

- MariaDB source database, any version

Fix Version & Resolution:

Upgrade to Replicate 2023.11 PR03 (expires 8/31/2024).

The fix is included in Replicate 2024.05 GA.
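The fix status above can be summarized as a small lookup. The Python sketch below is an illustration only: the function name and the "GA"/"PR01"-style build labels are hypothetical conventions, and it encodes nothing beyond what this article states (2023.11 GA, PR01, and PR02 affected; 2023.11 PR03 and later PRs fixed; 2024.05 GA fixed). Anything else is reported as not covered.

```python
def mysql_source_fix_status(version: str, build: str) -> str:
    """Classify a Replicate release for RECOB-8090 using only this article.

    `build` is a label such as "GA", "PR01", or "PR03" (hypothetical convention).
    """
    build = build.strip().upper()
    if version == "2023.11":
        if build in {"GA", "PR01", "PR02"}:
            return "affected: upgrade to 2023.11 PR03 or 2024.05 GA"
        # The article states the fix ships in PR03 and later versions.
        if build.startswith("PR") and build[2:].isdigit() and int(build[2:]) >= 3:
            return "fixed"
    if version == "2024.05" and build == "GA":
        return "fixed"
    return "not covered by this article"
```

For example, mysql_source_fix_status("2023.11", "PR02") reports the build as affected, while "2022.11" falls outside this article (see the related 2022.5 and 2022.11 article).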

Workaround:

If you are running 2022.11, continue running it.

There is no workaround for 2023.11 (GA, PR01, or PR02).

Cause & Internal Investigation ID(s):

Jira: RECOB-8090, Description: MySQL source fails after upgrade from 2022.11 to 2023.11

There is a bug in MariaDB library version 3.3.5, which Replicate started using in 2023.11.

The bug is fixed in MariaDB library version 3.3.8, which ships with Qlik Replicate 2023.11 PR03 and later versions.

Related Content:

support case #00139940, #00156611

Replicate - MySQL source defect and fix (2022.5 & 2022.11)


How to disable Task Log Data Encryption in Qlik Replicate

Environment

- Qlik Replicate May 2023 (Version 2023.5 and later)

What is Replicate log file encryption?

Starting from version "November 2021" (Version 2021.11), Qlik Replicate introduced support for log data encryption to keep customer data snippets from being exposed in verbose task log files. However, this enhancement also brought its own challenges: it made the logs very difficult to read and complicated troubleshooting, because the steps of Decrypting Qlik Replicate Verbose Task Log Files take time.

How to disable Task Log Data Encryption?

In response to customer feedback and feature requests, we implemented a new feature allowing users the flexibility to disable log encryption in Qlik Replicate. This enhancement was rolled out with the release of Qlik Replicate version 2023.5 GA. This article serves as a guide on how to effectively disable Task Log Data Encryption.

Steps

- Stop the Replicate Server services.

- Open <REPLICATE_INSTALL_DIR>\bin\repctl.cfg and add the line "disable_log_encryption": true, for example:

{ "port": 3552, "plugins_load_list": "repui", ... ... "enable_data_logging": true, "disable_log_encryption": true }

- Save the repctl.cfg file and start the Replicate Server services.

- The task log files now display database data. Sample lines:

00029680: 2024-03-01T18:30:26:105005 [TARGET_LOAD ]V: Column name: ID value: 44 1260d008490: 2600012C000000000000000000000000 | &..,............ 1260d0084a0: 000000 | ... (sqlserver_endpoint_imp.c:2787)

00029680: 2024-03-01T18:30:26:105005 [TARGET_LOAD ]V: Column name: NAME value: trx222 1260d0084a8: 747278323232 | trx222 (sqlserver_endpoint_imp.c:2787)

Here, the table's "ID" column value is "44" and the "NAME" column value is "trx222"; both appear in plain text as well as in hexadecimal format.
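The configuration change and the hex part of the sample log can both be illustrated in Python. This is a sketch under assumptions, not a supported tool: the function names are hypothetical, it assumes repctl.cfg parses as plain JSON (the snippet above suggests a JSON object) and that the Replicate Server services are stopped, and rewriting the file with json.dumps normalizes its whitespace. The decoder simply turns a hex dump such as 747278323232 back into ASCII text.

```python
import json
import shutil
from pathlib import Path


def disable_log_encryption(cfg_path: Path) -> None:
    """Set "disable_log_encryption": true in repctl.cfg, keeping the other keys.

    Assumes the file is plain JSON and the Replicate Server services are stopped.
    """
    shutil.copy2(cfg_path, cfg_path.with_name(cfg_path.name + ".bak"))  # back up first
    settings = json.loads(cfg_path.read_text(encoding="utf-8-sig"))  # tolerate a BOM
    settings["disable_log_encryption"] = True
    cfg_path.write_text(json.dumps(settings, indent=1), encoding="utf-8")


def decode_hex_dump(hex_text: str) -> str:
    """Decode the hex part of a verbose log entry back to ASCII text."""
    return bytes.fromhex(hex_text).decode("ascii")


print(decode_hex_dump("747278323232"))  # trx222
```

Keeping a .bak copy makes it easy to restore the original file and re-enable encryption once troubleshooting is done, which fits the caution below about sensitive data in verbose logs.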

Important Note:

Please exercise caution, as verbose task logs may contain sensitive end-user data and disabling encryption could lead to data leakage. Always take appropriate measures to protect sensitive information.

Related Content

How to Decrypt Qlik Replicate Verbose Task Log Files


Qlik Replicate: Join different tables in source database and filter records in F...

Sometimes we need to join different tables in the source database and filter records according to the values in another table. In this article, we use an Oracle source endpoint to demonstrate how to build such a task in Qlik Replicate.

In the sample task below, the table testfilter is replicated from an Oracle source database to a SQL Server target database. During replication, records whose status in the table testfiltercondition is INACTIVE are filtered out and ignored in both the Full Load and CDC stages. The two tables are joined on the same primary key column "id".

Detailed information:

- In the Oracle database, prepare two tables:

create table testfilter (id integer not null primary key, name char(20), notes char(200));

insert into testfilter values (2,'John','ACTIVE will pass the filter');

insert into testfilter values (3,'Sybase','INACTIVE will be ignored');

insert into testfilter values (5,'Hana','ACTIVE will pass the filter');

create table testfiltercondition (id integer not null primary key, status char(20));

insert into testfiltercondition values (2,'ACTIVE');

insert into testfiltercondition values (3,'INACTIVE');

insert into testfiltercondition values (5,'ACTIVE');

- In Qlik Replicate, include the table testfilter in a task with Full Load and Apply Changes enabled.

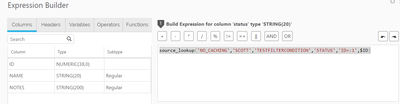

- In Table Settings --> Transformation, add a new column named "status" with the following computed expression:

source_lookup('NO_CACHING','SCOTT','TESTFILTERCONDITION','STATUS','ID=:1',$ID)

In the above expression, the source_lookup function joins the two tables testfilter and testfiltercondition on the PK column "id".

- In Table Settings --> Filter, add a filter on the column "status" so that only the value "ACTIVE" passes.

- In the target tables, only rows with "status" equal to "ACTIVE" are replicated; other rows are filtered out. The filter applies to both the Full Load and CDC stages.
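To make the join-then-filter result concrete, the Python sketch below models it on the sample rows inserted above. It models only the outcome, not how Replicate evaluates source_lookup internally: a dictionary keyed by ID stands in for the lookup against TESTFILTERCONDITION, the looked-up value becomes the computed "status" column, and the filter keeps rows whose status is ACTIVE. How Replicate treats a row with no matching lookup row is not covered in this article; in this model such a row gets None and is dropped.

```python
from typing import Dict, List, Optional, Tuple

Row = Tuple[int, str, str]

# Sample rows from this article: testfilter (id, name, notes)
TESTFILTER: List[Row] = [
    (2, "John", "ACTIVE will pass the filter"),
    (3, "Sybase", "INACTIVE will be ignored"),
    (5, "Hana", "ACTIVE will pass the filter"),
]
# testfiltercondition (id -> status)
TESTFILTERCONDITION: Dict[int, str] = {2: "ACTIVE", 3: "INACTIVE", 5: "ACTIVE"}


def lookup_status(row_id: int) -> Optional[str]:
    """Model of source_lookup(..., 'STATUS', 'ID=:1', $ID): status for the same id."""
    return TESTFILTERCONDITION.get(row_id)


def replicate_rows(rows: List[Row]) -> List[Tuple[int, str, str, Optional[str]]]:
    """Add the computed "status" column, then keep only rows whose status is ACTIVE."""
    result = []
    for row_id, name, notes in rows:
        status = lookup_status(row_id)
        if status == "ACTIVE":
            result.append((row_id, name, notes, status))
    return result


for row in replicate_rows(TESTFILTER):
    print(row)
```

Running it prints the rows for ids 2 and 5 only, matching the target-side result described above.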

Internal Investigation ID(s):

#00145952

Related Content

QnA with Qlik: Qlik Replicate Tips

Environment:

- Qlik Replicate All versions

- Oracle All versions


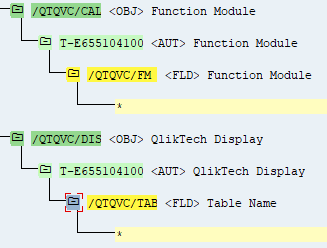

Qlik Replicate Extractor performance parameter

Qlik Replicate SAP Extractor Endpoint can be tuned to improve the performance of data being processed from an SAP environment. This is achieved by adding the internal parameter useSqlConnector. To confirm the performance improvement, first record the task duration before implementing the parameter, then compare it to subsequent runs.
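The before/after comparison is simple arithmetic. The Python sketch below averages several recorded task durations on each side and reports the relative reduction; averaging more than one run is our own suggestion to smooth out run-to-run variation, and the function name and sample durations are hypothetical, not measurements.

```python
from statistics import mean
from typing import Sequence


def duration_improvement_pct(before_runs: Sequence[float], after_runs: Sequence[float]) -> float:
    """Percentage reduction in average task duration (positive means faster)."""
    before, after = mean(before_runs), mean(after_runs)
    if before <= 0:
        raise ValueError("baseline duration must be positive")
    return (before - after) / before * 100.0


# Hypothetical durations in seconds, for illustration only.
print(f"{duration_improvement_pct([1180, 1220], [880, 920]):.1f}%")  # 25.0%
```

A negative result means the task got slower after the change.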

Requirements

Before the internal parameter can be added to the Qlik Replicate Extractor Endpoint, the below SAP Transports must be installed:

- sqlConnector Transport

- H6AK900114 - Data Extractor

- E66K900154 - User profile Extractor for the same client

Once the transports have been installed, follow the steps below to set the added permissions on the SAP Replicate user:

Add the following authorization objects and settings to the RFC user ID:

Do the following:

- Import the appropriate data extraction transport into the base client, usually 000. This is client-independent.

- Import the appropriate user profile transports into every client where they will be used. This is client-dependent.

For more information on how to import/upgrade the sqlConnector Transport, see Importing the SAP transports.

Configuration

Configure the SAP Extractor Endpoint by defining the useSqlConnector Internal Parameter.

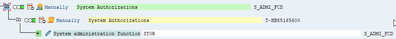

- Open the SAP Extractor Endpoint

- Switch to the Advanced tab

- Click Internal Parameters

- Enter '!' in the search bar to list available entries, then scroll to useSqlConnector.

Alternatively, enter the parameter directly.

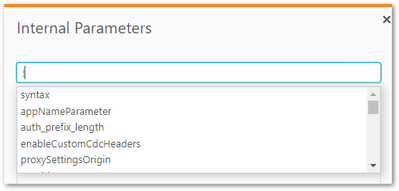

For more information about Internal Parameters, see Qlik Replicate: How to set Internal Parameters and what are they for?

- Set the Internal Parameter as:

Parameter: useSqlConnector

Value: true

- Save the Endpoint

Related Content

SAP Transports

Importing the SAP transports

Environment


Visualization Day

This Techspert Talks session covers:

- Future Visualization Updates

- New Layout Container

- Dynamic Linking with Bookmarks

Chapters:

- 01:59 - New Straight Table

- 02:45 - Background Images, Fine Grid, Font Styling

- 03:45 - Turn off Sheet Title and Tool Bar

- 04:06 - Multi-Language App

- 04:41 - New Filter Panes

- 04:58 - Line Object

- 05:34 - New Rich Text Object

- 06:20 - Layout Container

- 07:21 - Tip 1: Don't go off grid

- 07:43 - Tip 2: Best things are odd, example app

- 09:26 - Tip 3: Image is everything, example app

- 12:15 - Tip 4: Simplicity

- 12:58 - Tip 5: You can, you don't have to

- 15:03 - Setting Default Bookmarks

- 16:34 - Creating Dynamic Bookmarks

- 17:46 - Search Expression Best Practices

- 18:44 - Update Bookmark

- 20:00 - Adding Layout options to bookmarks

- 21:34 - Sharing Bookmarks

- 22:50 - Q&A: Why selection gone after using bookmark?

- 24:30 - Q&A: How can we do multi-page exports?

- 25:08 - Q&A: Can container go inside containers?

- 25:50 - Q&A: How to learn about new releases?

- 26:43 - Q&A: How to change the font of an app?

- 27:12 - Q&A: Do chart settings override the theme?

- 27:46 - Q&A: How to make a filter pane collapse?

- 28:30 - Q&A: Where demo apps are available?

- 29:25 - Q&A: When will the new Text Object be available?

- 29:49 - Q&A: Can you import an Excel chart in Sense?

- 30:36 - Q&A: Are values in a Rich Text Object selectable?

- 31:03 - Q&A: Does public bookmarks trigger a notification?

- 31:42 - Q&A: Where to find those Search Expressions?

- 32:26 - Q&A: How to send Bookmark links?

- 32:52 - Q&A: Are some of these add-on features?

- 33:12 - Q&A: How to apply a bookmark to a monitored chart?

Resources:

- Product Innovation Blog

- Color Brewer tool

- What's New - on Help.qlik.com

- Ideation - Technical Preview

- Demos at Qlik.com

- Visualization vocabulary app

- Search Expression Cheat Sheet 2.0

Q&A:

Q: Your multi-language apps are really critical to my business as we globalise. Where is there more content about how we can handle dimension name translation in line with the native Qlik language translations?

A: Dimension names can be renamed in the load script, but that may not be necessary; you can translate the dimension labels in the app instead.

Making a Multilingual Qlik Sense App

Q: Do the objects within the new Container have to be master visualizations?

A: No, you don't need to use Master visualizations. You can add new charts to the object or drag and drop existing charts from the sheet.

Q: When will the Layout Container be available?

A: Most likely later this year

Q: After an update, there is a problem with filtering a "toString" field. I can't open the application for 10 minutes. What is wrong with that field? (e.g. load * inline [toString test1];)

A: It is hard to tell without seeing the app and knowing what the field is. As a general rule, keep the cardinality of fields down. My guess is that toString in this case may stop the engine from optimizing the field. To learn more, read HIC's post:

Symbol Tables and Bit-Stuffed Pointers

Q: Will we be able to pin objects to certain locations on the grid? For example, it would be nice to pin a sheet menu built using the layout container to the top left corner, as shown.

A: In the first release, position and size will use percentages. So if you set the position to 0% on both axes, the object will be pinned to the corner.



Connect NPrinting Server to One or More Qlik Sense servers

How to Connect NPrinting to Single or Multiple Qlik Sense servers:

- Use this article to connect NPrinting to a single or multiple QS servers.

- In some cases, you may wish to connect your NPrinting server to multiple Qlik Sense server environments. This article describes how to enable this feature and how to manage possible errors.

- Note that all additional QS servers must be in the same domain as the original QS and NP servers.

- If having issues connecting to multiple Qlik Sense servers, see Qlik NPrinting will not read Qlik Sense certificates

Environments:

- This feature is ONLY available in NPrinting June 2019 release and newer versions.

- NOTE: This feature is not supported in NPrinting April 2019 and earlier versions.

Implement the solution:

- Go to Qlik Sense QMC>Start>Certificates

- Export the Qlik Sense server certificates from each additional Qlik Sense server.

- As the "Machine Name", use the NPrinting server computer name or the Friendly URL alias that is used to log in to the NPrinting Web Console.

- Export a certificate for both if both addresses below are used to access the NP web console.

- Computer name: nprintingserverPROD1

- Friendly URL/Alias address: Internal.nprintingserver.domain.com

- Select Include secret key and do NOT include a password when exporting the certificates. See Exporting certificates through the QMC on the Qlik Sense Online Help for details.

- Navigate to the exported certificates location on the QS server and rename the exported file 'client.pfx' to a suitable name. For example, if your Qlik Sense server name is QS1, prepend it to the file name: QS1client.pfx. The name should ideally reflect the Qlik Sense server the file was exported from, but you may use any name you wish.

- Copy this file to the NPrinting server path:

"C:\Program Files\NPrintingServer\Settings\SenseCertificates"

- Restart all NPrinting services

- If newly installing NPrinting May 2023 IR or later, please check this article if you are having NPrinting Qlik Sense connection failures: NPrinting Qlik Sense Connections Fail with Fresh Install of May 2023 NPrinting and Higher Version

NOTE: Reminder that the NPrinting Engine service domain user account MUST be ROOTADMIN on each Qlik Sense server which NPrinting is connecting to.
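The rename-and-copy steps can be scripted per Qlik Sense server. The Python sketch below is an illustration under assumptions: the function names are hypothetical, it assumes the exported client.pfx has already been brought to the NPrinting server, and it must run with rights to write to the SenseCertificates folder (C:\Program Files\NPrintingServer\Settings\SenseCertificates in this article). Restarting the NPrinting services is still a manual step. Because older certificates must not remain in the folder or its sub-folders, the second helper only lists other .pfx files for you to review; it deletes nothing.

```python
import shutil
from pathlib import Path
from typing import List


def install_sense_certificate(exported_pfx: Path, qs_server: str, cert_dir: Path) -> Path:
    """Copy an exported client.pfx into the SenseCertificates folder,
    prefixed with the Qlik Sense server name (e.g. QS1client.pfx)."""
    cert_dir.mkdir(parents=True, exist_ok=True)
    destination = cert_dir / f"{qs_server}{exported_pfx.name}"
    shutil.copy2(exported_pfx, destination)
    return destination


def other_certificates(cert_dir: Path, keep: List[Path]) -> List[Path]:
    """List .pfx files (including sub-folders) that are not in `keep`, for manual review."""
    keep_set = {p.resolve() for p in keep}
    return sorted(p for p in cert_dir.rglob("*.pfx") if p.resolve() not in keep_set)
```

Listing rather than deleting leaves the decision about stale certificates with the administrator, since removing the wrong file would break an existing connection.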

Test access to the additional Qlik Sense server.

- Open the NPrinting Web Console

- Create a new NP App, e.g. "NP_App_QSserver-2"

- Create a new NP connection and use the Virtual Proxy Address for the new target Qlik Sense server

- Verify your connection

- Save your connection to load the metadata for the first time.

- Create a test report and preview

- If having issues connecting to multiple Qlik Sense servers, see Qlik NPrinting will not read Qlik Sense certificates

Notes regarding this feature:

- Connecting additional Qlik Sense servers will have an impact on NPrinting server system resources. Carefully monitor NPrinting Server/NPrinting Engine RAM and CPU usage, and increase each as needed to ensure normal NPrinting server/engine operation.

- You may publish Qlik NPrinting reports to only a single Qlik Sense Hub, i.e. the QS hub defined in the NPrinting Web Console under 'Destinations\Hub' while logged on as an NPrinting administrator.

- Publishing to multiple Qlik Sense Hubs is not supported

The Qlik NPrinting server target folder for exported Qlik Sense certificates:

"C:\Program Files\NPrintingServer\Settings\SenseCertificates"

- Is retained when Qlik NPrinting is upgraded

- However, this folder is deleted when you uninstall Qlik NPrinting.

- Therefore, you need to re-add the exported Qlik Sense certificate to this folder after installing NPrinting again.

- Ensure that NO older Qlik Sense server certificates are kept in the Sense certificates folder nor in any sub-folders of this folder

The information in this article is provided as-is and is to be used at your own discretion. Depending on the tool(s) used, customization(s), and/or other factors, ongoing support on the solution below may not be provided by Qlik Support.

Related Content

- NPrinting Qlik Sense Connections Fail with Fresh Install of May 2023 NPrinting and Higher Version

- https://help.qlik.com/en-US/nprinting/Content/NPrinting/DeployingQVNprinting/NPrinting-with-Sense.htm

- https://help.qlik.com/en-US/nprinting/February2021/Content/NPrinting/DeployingQVNprinting/NPrinting-with-Sense.htm#anchor-1

Qlik Replicate and Kafka target: How to add the table name in Kafka message

When using Kafka as a target in a Qlik Replicate task, the source table's "Schema Name" and "Table Name" are not included in the Kafka message by default.

In some scenarios, you may want to add this kind of information to the Kafka messages.

This article summarizes the available options and weighs their pros and cons. The examples below use "Table Name":

- Table Settings → Transform

- Global Rules → Transformation

- Table Settings → Message Format

- Task Settings → Message Format

Resolution

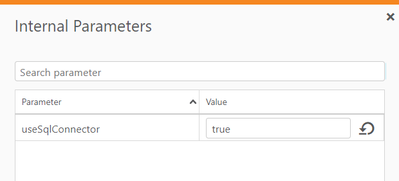

- Table Settings → Transform

You can add a column, for example "tableName", to the output columns in the table settings.

Cons:

-- No variable is available, so the value is a fixed string rather than a dynamic value. In our sample, the expression string is 'kit'.

-- Affects a single table only.

-- The table name appears in the message's data part (rather than the headers part).

    {
      "magic": "atMSG",
      "type": "DT",
      "headers": null,
      "messageSchemaId": null,
      "messageSchema": null,
      "message": {
        "data": {
          "ID": "2",
          "NAME": "test Kafka",
          "tableName": "kit"
        },
        "beforeData": {
          "ID": "2",
          "NAME": "ok",
          "tableName": "kit"
        },
        "headers": {
          "operation": "UPDATE",
          "changeSequence": "20230911032325000000000000000000005",
          "timestamp": "2023-09-11T03:23:25.000",
          "streamPosition": "00000000.00bb2531.00000001.0000.02.0000:154.6963.16",
          "transactionId": "00000000000000000000000000060008",
          "changeMask": "02",
          "columnMask": "07",
          "transactionEventCounter": 1,
          "transactionLastEvent": true
        }
      }
    }

- Global Rules → Transformation

Global Rules can be used to add a table name column to the messages of all tables.

Pros:

-- Affects all tables.

-- Variables are available. In our sample, the variable $AR_M_SOURCE_TABLE_NAME is used.

-- The table name can be customized by combining it with other transformations, for example by appending the suffix "__QA" in the expression.

Cons:

-- The table name appears in message's data part (rather than headers part)
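To preview what a suffixed table name looks like, the sketch below assumes that Replicate transformation expressions follow SQLite syntax (as the Replicate Expression Builder does), where `||` concatenates strings, and that the Global Rules expression is something like `$AR_M_SOURCE_TABLE_NAME || '__QA'`. That exact expression is an assumption for illustration. Python's built-in sqlite3 module evaluates the same concatenation, with the sample table name KIT substituted for the Replicate variable; `preview_suffix_expression` is a hypothetical helper.

```python
import sqlite3


def preview_suffix_expression(table_name: str, suffix: str = "__QA") -> str:
    """Evaluate a SQLite string concatenation (table name || suffix), mimicking an
    assumed Global Rules expression such as $AR_M_SOURCE_TABLE_NAME || '__QA'."""
    conn = sqlite3.connect(":memory:")
    try:
        # Bind the table name as a parameter in place of the Replicate variable.
        (value,) = conn.execute("SELECT ? || ?", (table_name, suffix)).fetchone()
    finally:
        conn.close()
    return value


print(preview_suffix_expression("KIT"))  # KIT__QA
```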

If both tables transform and global rules transformation are defined (and their values are different) then tables level transform overwrites the global transformation settings.{

"magic": "atMSG",

"type": "DT",

"headers": null,

"messageSchemaId": null,

"messageSchema": null,

"message": {

"data": {

"ID": "2",

"NAME": "test Kafka 2",

"tableName": "KIT"

},

"beforeData": {

"ID": "2",

"NAME": "test Kafka",

"tableName": "KIT"

},

"headers": {

"operation": "UPDATE",

"changeSequence": "20230911034827000000000000000000005",

"timestamp": "2023-09-11T03:48:27.000",

"streamPosition": "00000000.00bb28db.00000001.0000.02.0000:154.7632.16",

"transactionId": "00000000000000000000000000170006",

"changeMask": "02",

"columnMask": "07",

"transactionEventCounter": 1,

"transactionLastEvent": true

}

}

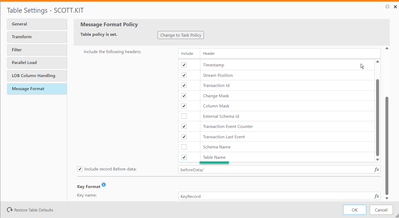

} - Table Settings → Message Format

Enabling the "Table Name" option includes the table name in the headers of the Kafka messages.

Cons:

-- Affects a single table only.

Pros:

-- This feature is available in Replicate 2023.5 and later.

-- The table name appears in message's headers part (rather than data part){

"magic": "atMSG",

"type": "DT",

"headers": null,

"messageSchemaId": null,

"messageSchema": null,

"message": {

"data": {

"ID": "2",

"NAME": "test Kafka 3"

},

"beforeData": {

"ID": "2",

"NAME": "test Kafka 2"

},

"headers": {

"operation": "UPDATE",

"changeSequence": "20230911041053000000000000000000005",

"timestamp": "2023-09-11T04:10:53.000",

"streamPosition": "00000000.00bb2c30.00000001.0000.02.0000:154.9378.16",

"transactionId": "00000000000000000000000000060005",

"changeMask": "02",

"columnMask": "03",

"transactionEventCounter": 1,

"transactionLastEvent": true,

"tableName": "KIT"

}

}

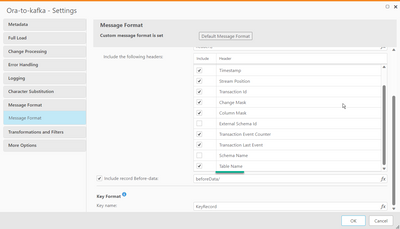

} - Task Settings → Message Format

Enabling the "Table Name" option includes the table name in the headers of the Kafka messages.

Pros:

-- Affects all tables.

-- This feature is available in Replicate 2023.5 and later.

-- The table name appears in the message's headers part (rather than the data part).

If both table-level and task-level "Message Format" settings are defined (and their values differ), the table-level settings override the task settings.

    {
      "magic": "atMSG",
      "type": "DT",
      "headers": null,
      "messageSchemaId": null,
      "messageSchema": null,
      "message": {
        "data": {
          "ID": "2",
          "NAME": "test Kafka 4"
        },
        "beforeData": {
          "ID": "2",
          "NAME": "test Kafka 3"
        },
        "headers": {
          "operation": "UPDATE",
          "changeSequence": "20230911042445000000000000000000005",
          "timestamp": "2023-09-11T04:24:45.000",
          "streamPosition": "00000000.00bb2e56.00000001.0000.02.0000:154.9799.16",
          "transactionId": "00000000000000000000000000080001",
          "changeMask": "02",
          "columnMask": "03",
          "transactionEventCounter": 1,
          "transactionLastEvent": true,
          "tableName": "KIT"
        }
      }
    }
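On the consumer side, where the table name ends up depends on the option chosen: in message.headers for the two "Message Format" options, or in message.data for the two transformation options. The following minimal Python sketch uses only the standard json module and assumes messages in the atMSG JSON layout shown in the samples. `extract_table_name` is a hypothetical helper, and the key "tableName" is the column name used in this article's samples.

```python
import json
from typing import Optional


def extract_table_name(raw_message: str) -> Optional[str]:
    """Return the table name carried by a Replicate Kafka message, or None.

    Looks in message.headers first (the "Message Format" options), then in
    message.data and message.beforeData (the transformation-column options).
    Adjust the key if your transformation column has a different name.
    """
    message = json.loads(raw_message).get("message") or {}
    for part in ("headers", "data", "beforeData"):
        section = message.get(part) or {}  # a part may be null or missing
        if "tableName" in section:
            return section["tableName"]
    return None


sample = '{"magic": "atMSG", "type": "DT", "message": {"data": {"ID": "2"}, "headers": {"operation": "UPDATE", "tableName": "KIT"}}}'
print(extract_table_name(sample))  # KIT
```

Checking the headers first means the sketch works unchanged if you later move from a transformation column to the "Message Format" option.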

Environment

Qlik Replicate (versions 2023.5 and above)

Kafka target

Related Content:

-

Qlik Replicate and Kafka target: How to rename topic name of attrep_apply_except...

When using Kafka as a target in a Qlik Replicate task, the "Control Table" names are created in lower case on the target. For example, the Apply Exceptions topic is named "attrep_apply_exceptions" (if auto.create.topics.enable=true is set in the Kafka broker's config/server.properties file). This is the default behavior.

In some scenarios, you may want to use a non-default topic name, for example an upper-case name, to match your organization's naming standards. This article describes how to rename the control table topics.

This article uses the topic "attrep_apply_exceptions" as an example. You can customize the following control topics using the same process:

- attrep_apply_exceptions

- attrep_status

- attrep_suspended_tables

- attrep_history

The same approach also works more generally, not only for the Kafka target endpoint. At this generic level, you can also rename other metadata, for example "target_schema".

Resolution

- Export the task's JSON.

- In the JSON file, locate the element "exception_table_settings" (it is usually empty, as below):

"exception_table_settings": {},

- Override the element to add the table_name attribute, and save the changes:

"exception_table_settings": {"table_name": "CUSTOM_NAME_ATTREP_APPLY_EXCEPTIONS_TABLE"},

In the sample above, the apply exceptions topic is renamed to CUSTOM_NAME_ATTREP_APPLY_EXCEPTIONS_TABLE (the name is case-sensitive; here it is in upper case).

Kafka topic names cannot exceed 255 characters (249 from Kafka 0.10) and can only contain the following characters:

a-z|A-Z|0-9|. (dot)|_(underscore)|-(minus)

More detailed information can be found at Limitations and considerations.

The safest maximum topic name length is 209 characters (rather than 255/249).

- Import the task's JSON.

After the import, the GUI still shows the default topic name; however, the new topic name takes effect. You can continue to edit the task freely in the GUI; to change the control topic names again, repeat the steps above.

- Run the task.
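The manual JSON edit and the naming rules above can be combined in a small script. The following is a minimal Python sketch, assuming an exported task JSON file in which "exception_table_settings" appears somewhere in the structure; because its exact position in the export is not described here, the sketch searches the whole document rather than relying on a fixed path. The helper names are hypothetical, and the length check uses the 209-character "safest" limit from this article.

```python
import json
import re
from pathlib import Path

# Allowed characters and safest length, as stated in this article.
TOPIC_NAME_PATTERN = re.compile(r"[A-Za-z0-9._-]+")
SAFE_MAX_LENGTH = 209  # Kafka itself allows 249 (255 before Kafka 0.10)


def validate_topic_name(name: str) -> None:
    if not TOPIC_NAME_PATTERN.fullmatch(name):
        raise ValueError(f"invalid characters in topic name: {name!r}")
    if len(name) > SAFE_MAX_LENGTH:
        raise ValueError(f"topic name longer than {SAFE_MAX_LENGTH} characters")


def set_exception_table_name(node, new_name: str) -> int:
    """Recursively set table_name on every 'exception_table_settings' object.
    Returns the number of objects updated."""
    count = 0
    if isinstance(node, dict):
        for key, value in node.items():
            if key == "exception_table_settings" and isinstance(value, dict):
                value["table_name"] = new_name
                count += 1
            else:
                count += set_exception_table_name(value, new_name)
    elif isinstance(node, list):
        for item in node:
            count += set_exception_table_name(item, new_name)
    return count


def patch_task_file(path: Path, new_name: str) -> None:
    validate_topic_name(new_name)
    task = json.loads(path.read_text(encoding="utf-8"))
    if set_exception_table_name(task, new_name) == 0:
        raise KeyError("no 'exception_table_settings' element found")
    path.write_text(json.dumps(task, indent=4), encoding="utf-8")
```

For example, `patch_task_file(Path("MyTask.json"), "CUSTOM_NAME_ATTREP_APPLY_EXCEPTIONS_TABLE")` (the file name is hypothetical) rewrites the export before you import it. The sketch reformats the whole file, so keep a copy of the original export.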

Environment

Qlik Replicate (versions 2022.11, 2023.5 and above)

Kafka target

Related Content:

case #00010983, #00108779

Generate a record to attrep_apply_exceptions topic for Kafka endpoint

-

Replicate - Oracle source: Long Object Names exceeding 30 Bytes

The Replicate Oracle source endpoint had the following limitation:

Object names exceeding 30 characters are not supported. Consequently, tables with names exceeding 30 characters or tables containing column names exceeding 30 characters will not be replicated.

Cause

The limitation comes from the behavior of older Oracle versions. Since Oracle 12.2, however, Oracle supports object names of up to 128 bytes, and long object names are now in common use. The User Guide limitation "Object names exceeding 30 characters are not supported" can now be overcome.

There are two major types of long identifier names in Oracle: (1) long table names and (2) long column names.
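Both limits are expressed in bytes, not characters, which matters for identifiers containing non-ASCII characters. The following minimal Python sketch, assuming the database character set is UTF-8 (for example AL32UTF8), measures an identifier's byte length and compares it with the 30-byte limit in the Replicate messages and the 128-byte Oracle 12.2 limit. The helper names are hypothetical.

```python
LEGACY_LIMIT_BYTES = 30         # limit reported in the Replicate warnings below
ORACLE_12_2_LIMIT_BYTES = 128   # identifier limit since Oracle 12.2


def identifier_bytes(name: str, encoding: str = "utf-8") -> int:
    """Length of an identifier in bytes under the given character encoding."""
    return len(name.encode(encoding))


def classify_identifier(name: str) -> str:
    size = identifier_bytes(name)
    if size > ORACLE_12_2_LIMIT_BYTES:
        return "over 128 bytes"
    if size > LEGACY_LIMIT_BYTES:
        return "over 30 bytes"
    return "ok"


print(identifier_bytes("VERYVERYVERYLONGLONGLONGTABLETABLETABLENAMENAMENAME"))  # 51
```

The 51 bytes match the count in the table-name warning shown below.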

Resolution

1- Error message for a long table name

[METADATA_MANAGE ]W: Table 'SCOTT.VERYVERYVERYLONGLONGLONGTABLETABLETABLENAMENAMENAME' cannot be captured because the name contains 51 bytes (more than 30 bytes)

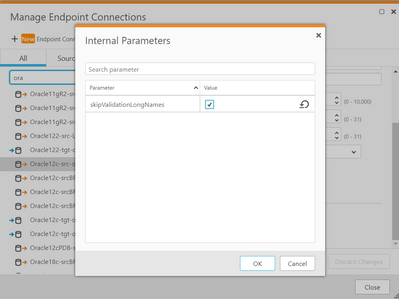

Add the internal parameter skipValidationLongNames to the Oracle source endpoint, set its value to true (the default is false), and then re-run the task:

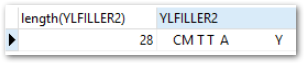

2- Error messages for long column names

Different messages can appear when a column name exceeds 30 characters:

[METADATA_MANAGE ]W: Table 'SCOTT.TEST1' cannot be captured because it contains column with too long name (more than 30 bytes)

Or

[SOURCE_CAPTURE ]E: Key segment 'CASE_LINEITEM_SEQ_NO' value of the table 'SCOTT.MY_IMPORT_ORDERS_APPLY_LINEITEM32' was not found in the bookmark

Or (incomplete WHERE clause)

[TARGET_APPLY ]E: Failed to build update statement, statement 'UPDATE "SCOTT"."MY_IMPORT_ORDERS_APPLY_LINEITEM32"

SET "COMMENTS"='This is final status' WHERE ', stream position '0000008e.64121e70.00000001.0000.02.0000:1529.17048.16']

Two steps are required to resolve the above errors for long column names:

(1) Add the internal parameter skipValidationLongNames (see above) to the endpoint.

(2) Enable the "enable_goldengate_replication" parameter in Oracle. Only the end user and their DBA can do this:

alter system set ENABLE_GOLDENGATE_REPLICATION=true;

Note that this is supported only when the user has a GoldenGate license, and Oracle routinely audits licenses. Consult the user's DBA before altering the system settings.

Environment

- Oracle (version 12.2 and up) source endpoint for Qlik Replicate

- Qlik Replicate versions 2021.5/2021.11/2022.5 and up

Related Content

Internal support case ID: # 00045265.

-

Qlik Replicate: keepCharTrailingSpaces in IBM DB2 for iSeries and IBM DB2 for z/...

Keeping trailing spaces in IBM DB2 for iSeries and IBM DB2 for z/OS source endpoints is supported by adding the internal parameter keepCharTrailingSpaces.

Source data type or length, which holds the trailing spaces.

Target data type length, from which the trailing spaces were removed.

Resolution

To add the internal parameter:

- Open the DB2 iSeries Source Endpoint

- Navigate to Advanced

- Open Internal Parameters

- Add the following internal parameter:

keepCharTrailingSpaces

Value: true

For additional information on how to set Internal Parameters, see Qlik Replicate: How to set Internal Parameters and what are they for?
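To confirm that the parameter took effect after reloading, you can compare CHAR values fetched from the source and the target. The following minimal Python sketch assumes the values have already been fetched as strings and paired up by key (how you fetch them is outside its scope). It flags pairs where the only difference is that the target lost the source's trailing spaces; the helper names are hypothetical.

```python
def lost_only_trailing_spaces(source_value: str, target_value: str) -> bool:
    """True when the target equals the source with its trailing spaces removed."""
    return source_value != target_value and source_value.rstrip(" ") == target_value


def find_trimmed_values(pairs: list[tuple[str, str]]) -> list[int]:
    """Return the indexes of (source, target) pairs whose trailing spaces were dropped."""
    return [i for i, (src, tgt) in enumerate(pairs) if lost_only_trailing_spaces(src, tgt)]


pairs = [("ABC  ", "ABC"), ("XYZ", "XYZ"), ("Q ", "Q ")]
print(find_trimmed_values(pairs))  # [0]
```

An empty result after reloading with keepCharTrailingSpaces set to true suggests that the trailing spaces are now preserved.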

Environment

-

Replicate - DB2 LUW ODBC Client error Specified driver could not be loaded due t...

While working with the DB2 LUW endpoint, Replicate reports the following error after the 64-bit IBM DB2 Data Server Client 11.5 is installed:

SYS-E-HTTPFAIL, Cannot connect to DB2 LUW Server.

SYS,GENERAL_EXCEPTION,Cannot connect to DB2 LUW Server,RetCode: SQL_ERROR SqlState: IM003 NativeError: 160 Message: Specified driver could not be loaded due to system error 1114: A dynamic link library (DLL) initialization routine failed. (IBM DB2 ODBC DRIVER, C:\Program Files\IBM\SQLLIB\BIN\DB2CLIO.DLL).

Resolution

Install the 64-bit IBM DB2 Data Server Client 11.5.4 (for example, 11.5.4.1449) rather than 11.5.0 (the actual version in this case was 11.5.0.1077).
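If you manage several Replicate servers, the installed client version (for example, as reported by the DB2 `db2level` command) can be checked against this guidance. The following is a minimal Python sketch: it compares the first three version components with 11.5.4 and encodes only this article's 11.5.0 versus 11.5.4 advice. `client_version_ok` is a hypothetical helper.

```python
MIN_CLIENT_VERSION = (11, 5, 4)  # 11.5.4 or later, per this article


def parse_version(version: str) -> tuple[int, ...]:
    """Turn '11.5.4.1449' into (11, 5, 4, 1449)."""
    return tuple(int(part) for part in version.strip().split("."))


def client_version_ok(version: str) -> bool:
    """Compare the first three components (major.minor.mod) with 11.5.4."""
    return parse_version(version)[:3] >= MIN_CLIENT_VERSION


print(client_version_ok("11.5.0.1077"))  # False
print(client_version_ok("11.5.4.1449"))  # True
```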

Environment

Qlik Replicate: all versions

Replicate Server platform: Windows Server 2019

DB2 Data Server Client: version 11.5.0.xxxx

Internal Investigation ID(s):

Support cases, #00076295

-

Replicate - MySQL source defect and fix (2022.5 & 2022.11)

Replicate reported errors when resuming a task if the source MySQL server was running on Windows (there was no problem when MySQL was running on Linux):

[SOURCE_CAPTURE ]I: Stream positioning at context '$.000034:3506:-1:3506:0'

[SOURCE_CAPTURE ]T: Read next binary log event failed; mariadb_rpl_fetch error 1236 (Could not find first log file name in binary log index file)

Replicate also sometimes reported the following warning at MySQL source endpoints, regardless of the MySQL source platform:

[SOURCE_CAPTURE ]W: The given Source Change Position points inside a transaction. Replicate will ignore this transaction and will capture events from the next BEGIN or DDL events.

Environment:

- Replicate 2022.5 PR4 (2022.5.0.815)

- Replicate 2022.11 PR1 (2022.11.0.289)

- MySQL source database, any version

Fix Version & Resolution:

Upgrade to Replicate 2022.11 PR2 (2022.11.0.394, already released) or higher, or to Replicate 2022.5 PR5 (coming soon).

Workaround:

If you are running 2022.5 PR3 (or lower), keep running it, or upgrade to PR5 (or higher).

There is no workaround for 2022.11 (GA or PR01).
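The build numbers listed above can be turned into a quick check. The following is a minimal Python sketch that classifies a Replicate build string using only the builds named in this article. It assumes that build numbers increase monotonically within a release, and it reports any other build (including 2022.5 builds other than PR4) as "unknown", because the article does not list their numbers. `mysql_resume_fix_status` is a hypothetical helper.

```python
# Builds named in this article; anything else is reported as "unknown".
FIRST_FIXED_BUILD = {"2022.11.0": 394}  # 2022.11 PR2 (2022.11.0.394) contains the fix
KNOWN_AFFECTED = {"2022.5.0.815"}       # 2022.5 PR4


def mysql_resume_fix_status(build: str) -> str:
    """Classify a Replicate build string such as '2022.11.0.289'."""
    if build in KNOWN_AFFECTED:
        return "affected"
    release, _, build_number = build.rpartition(".")
    if release in FIRST_FIXED_BUILD:
        # Assumes build numbers increase monotonically within a release.
        return "fixed" if int(build_number) >= FIRST_FIXED_BUILD[release] else "affected"
    return "unknown"


print(mysql_resume_fix_status("2022.11.0.289"))  # affected
```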

Cause & Internal Investigation ID(s):

Jira: RECOB-6526, Description: It would not be possible to resume a task if MySQL Server was on Windows

Jira: RECOB-6499, Description: Resuming a task from a CTI event would sometimes result in missing events and/or a redundant warning message

Related Content:

support case #00066196

support case #00063985 (#00049357)

Qlik Replicate - MySQL source defect and fix (2023.11)

-

Qlik Replicate: Support JSONB datatype for PostgreSQL ODBC data source

When a PostgreSQL ODBC DSN is used as the source endpoint, the ODBC driver interprets the JSONB data type as VARCHAR(255) by default. As a result, JSONB column values are truncated, regardless of how the LOB size or the data type length in the target table are defined.

Typically, the task reports a warning such as:

2022-12-22T21:28:49:491989 [SOURCE_UNLOAD ]W: Truncation of a column occurred while fetching a value from array (for more details please use verbose logs)

Resolution

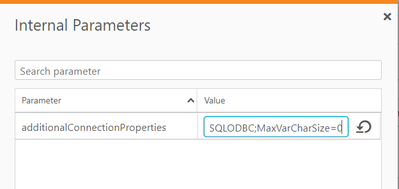

There are several options to solve the problem (any one of them is sufficient):

I) Change PostgreSQL ODBC source endpoint connection string

- Open PostgreSQL ODBC source endpoint

- Go to the Advanced tab

- Open Internal Parameters

- Add a new parameter named additionalConnectionProperties

- Press <Enter> and set the parameter's value to:

MaxVarCharSize=0

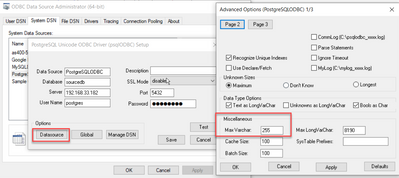

II) Or, on the Windows/Linux Replicate Server, add the following line to the DSN definition in "odbc.ini":

MaxVarCharSize=0

III) Or, on Windows, change "Max Varchar" from its default value of 255 to 0 in the ODBC Manager GUI (64-bit).
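For option II on a Linux Replicate Server, the odbc.ini edit can be scripted. The following minimal Python sketch uses the standard configparser module; the DSN name PG_SOURCE in the example is hypothetical. Note that configparser lower-cases keys unless `optionxform` is overridden, and that it drops comments when it rewrites a file, so back up odbc.ini first, or add the line by hand if the file contains comments you want to keep.

```python
import configparser
import io


def set_max_varchar(odbc_ini_text: str, dsn: str) -> str:
    """Return odbc.ini content with MaxVarCharSize=0 set in the given DSN section."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # preserve key case; configparser lower-cases keys by default
    parser.read_string(odbc_ini_text)
    if not parser.has_section(dsn):
        raise KeyError(f"DSN section [{dsn}] not found")
    parser[dsn]["MaxVarCharSize"] = "0"
    out = io.StringIO()
    parser.write(out, space_around_delimiters=False)  # write "Key=Value" lines
    return out.getvalue()


sample = "[PG_SOURCE]\nDriver=PostgreSQL Unicode\nServername=pg.example.com\n"
print(set_max_varchar(sample, "PG_SOURCE"))
```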

Environment

Qlik Replicate all versions

PostgreSQL all versions

Internal Investigation ID(s):

Support cases, #00062911

Ideation article, Support JSONB

-

How To Get Started with Qlik AutoML

- Introduction

- System Requirements

- Pricing and Packaging

- Software updates

- Types of Models Supported

- Getting Started with AutoML

- Data Connections

- Data Preparation abilities

- Using realtime-prediction API

- Integration with Qlik Sense

- Contacting Support

- Additional resources

- Environment

This is a guide to get you started working with Qlik AutoML.

Introduction

AutoML is an automated machine learning tool in a code-free environment. Users can quickly generate models for classification and regression problems with business data.

System Requirements

Qlik AutoML is available to customers with the following subscription products:

Qlik Sense Enterprise SaaS

Qlik Sense Enterprise SaaS Add-On to Client-Managed

Qlik Sense Enterprise SaaS - Government (US) and Qlik Sense Business do not support Qlik AutoML.

Pricing and Packaging

For subscription tier information and exact pricing, please reach out to your sales or account team. Metered pricing depends on the number of models you want to deploy, dataset size, API rate, number of concurrent tasks, and advanced features.

Software updates

Qlik AutoML is a part of the Qlik Cloud SaaS ecosystem. Code changes for the software including upgrades, enhancements and bug fixes are handled internally and reflected in the service automatically.

Types of Models Supported

AutoML supports Classification and Regression problems.

Binary Classification: used for models with a Target of only two unique values. Examples: payment default, customer churn.

Customer Churn.csv (see downloads at the top of the article)

Multiclass Classification: used for models with a Target of more than two unique values. Examples: grading (gold, platinum, silver), milk grade.

MilkGrade.csv (see downloads at the top of the article)

Regression: used for models with a Target that is a number. Examples: how much a customer will purchase, predicting housing prices.

AmesHousing.csv (see downloads at top of the article)
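The rules above can be summarized as a simple decision based on the target column. The following Python sketch is only an illustration of those rules, not how AutoML itself selects a model type (AutoML determines this on its own). It treats exactly two distinct values as binary, a numeric target with more than two values as regression, and anything else with more than two values as multiclass. `suggest_problem_type` is a hypothetical helper.

```python
from numbers import Number


def suggest_problem_type(target_values) -> str:
    """Simplified reading of the model-type rules above."""
    unique = set(target_values)
    if len(unique) in (0, 1):
        return "not enough distinct values"
    if len(unique) == 2:
        return "binary classification"
    # More than two distinct values: numbers suggest regression, labels suggest multiclass.
    if all(isinstance(v, Number) and not isinstance(v, bool) for v in unique):
        return "regression"
    return "multiclass classification"


print(suggest_problem_type(["gold", "silver", "platinum"]))  # multiclass classification
```

A numeric target with only a few distinct codes could reasonably be either multiclass or regression, which is one reason to let AutoML and your own judgment decide.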

Getting Started with AutoML

What is AutoML (14 min)

Exploratory Data Analysis (11 min)

Model Scoring Basics (14 min)

Prediction Influencers (10 min)

Qlik AutoML Complete Walk Through with Qlik Sense (24 min)

Non video:

How to upload data, training, deploying and predicting a model

Data Connections

Data for modeling can be uploaded from local source or via data connections available in Qlik Cloud.

You can add a dataset or data connection with the 'Add new' green button in Qlik Cloud.

There are a variety of data source connections available in Qlik Cloud.

Once data is loaded and available in Qlik Catalog then it can be selected to create ML experiments.

Data Preparation abilities

AutoML uses a variety of data science pre-processing techniques, such as null handling, cardinality, encoding, and feature scaling. Additional reference here.

Using realtime-prediction API

Please reference these articles to get started using the realtime-prediction API

Integration with Qlik Sense

By leveraging Qlik Cloud, predicted results can be surfaced in Qlik Sense to visualize and draw additional conclusions from the data.

How to join predicted output with original dataset

Contacting Support

If you need additional help, please reach out to the Support group.

It helps to have your tenant ID and subscription information ready, which can be found with these steps.

Additional resources

Please check out our articles in the AutoML Knowledge Base.

Or post questions and comments to our AutoML Forum.

Environment

The information in this article is provided as-is and to be used at own discretion. Depending on tool(s) used, customization(s), and/or other factors ongoing support on the solution below may not be provided by Qlik Support.

-

Replicate - Generate a log.key file manually

When working with Qlik Replicate, log.key files are used to decrypt verbose task log files (see Decrypt Qlik Replicate Verbose Task Log Files). If the log.key file is missing or deleted, it can be re-created by restarting the task or restarting the Replicate services. However, sometimes the file needs to be created before the task runs for the first time, for example:

(1) So that the DBA can set proper file protection manually.

(2) When moving tasks between environments, for example between UAT and PROD.

(3) In rare cases, when automatic creation of the file fails.

This article provides some methods to generate the "log.key" file manually.

Environment

- Qlik Replicate Version 2021.11 and later

Resolution

There are several methods to get a "log.key" file manually.

1. Copy an existing "log.key" file from a UAT/TEST task folder.

However, it is better to make sure each "log.key" is unique, so method 2 below is recommended:

2. Run the "openssl" command on Linux or Windows:

openssl rand -base64 32 > log.key

The command writes a random 44-character string (the last character is "=") to the "log.key" file. For example:

n1NJ7r2Ec+1zI7/USFY2H1j/loeSavQ/iUJPaiOAY9Y=

Related Content

Support cases, #00059433
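Where openssl is not available, a value of the same shape as the openssl command above (32 random bytes, Base64-encoded, with a trailing newline) can be produced with Python's standard library. This is a minimal sketch that assumes Replicate accepts a key file in exactly the format openssl writes; that assumption has not been verified here. The helper names are hypothetical. The sketch refuses to overwrite an existing file, because replacing a key could leave previously encrypted logs unreadable.

```python
import base64
import secrets
from pathlib import Path


def generate_log_key() -> str:
    """32 cryptographically random bytes, Base64-encoded: 44 characters ending in '='."""
    return base64.b64encode(secrets.token_bytes(32)).decode("ascii")


def write_log_key(path: Path) -> str:
    """Write a new key to path (like 'openssl rand -base64 32 > log.key') and return it."""
    if path.exists():
        raise FileExistsError(f"{path} already exists; not overwriting it")
    key = generate_log_key()
    path.write_text(key + "\n", encoding="ascii")  # openssl also ends its output with a newline
    return key
```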

-

Qlik Replicate: Diagnostic Package Analysis Script

Introduction

This is a handy tool to run against a diagnostic package downloaded from Replicate. The diagnostic package contains recent log files (less than 10MB) and task information which is helpful for troubleshooting issues.

The goal of the script is to search for common key words or phrases quickly without having to open and read each log manually.

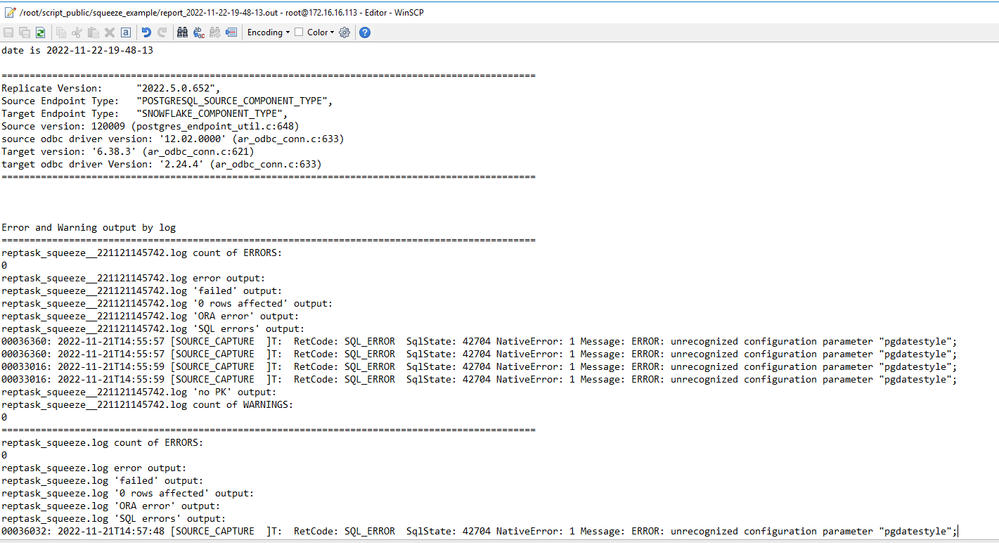

It is meant to be run in a Linux environment with bash or another shell available. In the steps below, I connect to a CentOS machine with MobaXterm, and then view the report with WinSCP after connecting to the same machine.

Files

runT.sh: shell script that sets up the instance folder and then triggers the health_check.sh script

health_check.sh: script that searches through task.json and the log files for information related to metadata, errors, and warnings, and then prints a report.

These files are included in health_check.zip which is attached to the article. When you unpack them make sure they are executable (chmod +x ..).
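To illustrate the kind of keyword search these scripts automate, here is a minimal Python sketch. It is not the attached health_check.sh and does not reproduce its report. It assumes the unzipped package contains log files with a .log extension and that log lines carry severity markers such as "[SOURCE_CAPTURE ]E:" and "[METADATA_MANAGE ]W:", as in the messages quoted elsewhere on this page. It counts error (E) and warning (W) lines per component; `summarize_logs` is a hypothetical helper.

```python
import re
import sys
from collections import Counter
from pathlib import Path

# Severity marker as it appears in Replicate log lines, e.g. "[SOURCE_CAPTURE ]E:".
SEVERITY = re.compile(r"\[(?P<component>[A-Z_]+)\s*\](?P<level>[EW]):")


def summarize_logs(folder: Path) -> Counter:
    """Count error (E) and warning (W) lines per component across *.log files."""
    counts = Counter()
    for log_file in sorted(folder.rglob("*.log")):
        with log_file.open(encoding="utf-8", errors="replace") as handle:
            for line in handle:
                match = SEVERITY.search(line)
                if match:
                    counts[(match["component"], match["level"])] += 1
    return counts


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python summarize_logs.py squeeze_example
    for (component, level), n in sorted(summarize_logs(Path(sys.argv[1])).items()):
        print(f"{level} {component}: {n}")
```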

Steps

1. Download both scripts and move them to an environment with bash/shell enabled.

2. In the same folder or directory location, upload the diagnostic package as a zipped file.

*Note: this must be the only zip file in the directory when you run ./runT.sh.

3. When you run the script, ./runT.sh, you must supply a folder name. I usually name the folder after the case, but it could also be the task name, etc.

Example:

./runT.sh squeeze_example

This will create a new folder in the directory called 'squeeze_example' with the unzipped contents of the diagnostic package and the report_date.out file.

Here is a sample of the report_date.out file.

Environment

The information in this article is provided as-is and to be used at own discretion. Depending on tool(s) used, customization(s), and/or other factors ongoing support on the solution below may not be provided by Qlik Support.

-

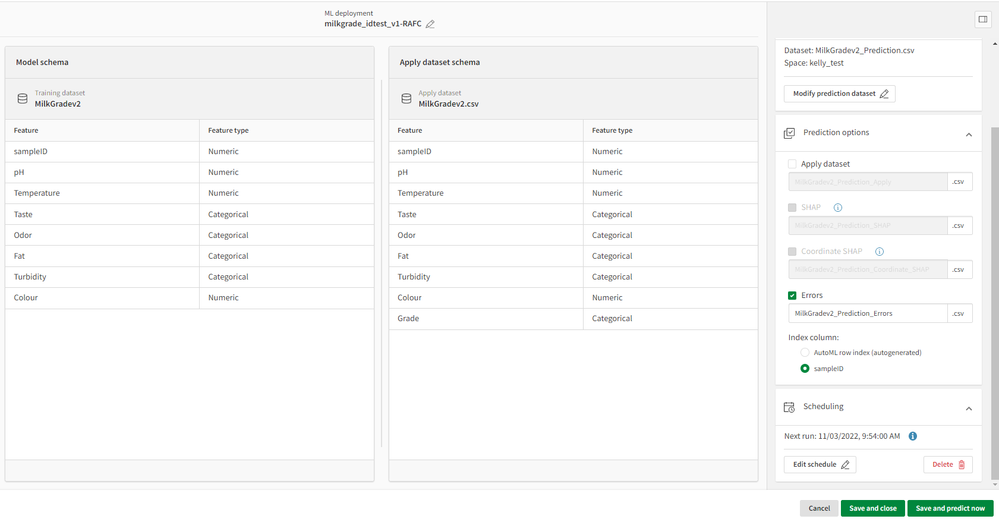

Qlik AutoML: How to schedule predictions?

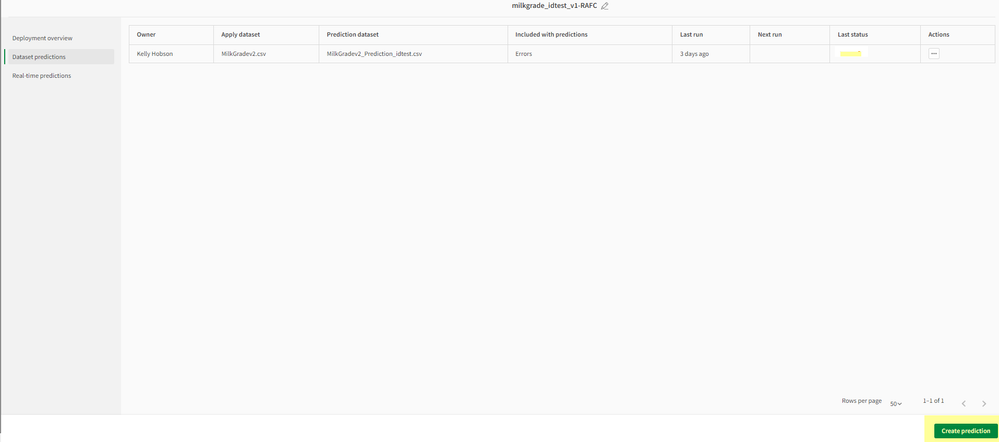

Introduction

The scheduling feature is now available in Qlik AutoML to run a prediction on a daily, weekly, or monthly cadence.

Steps

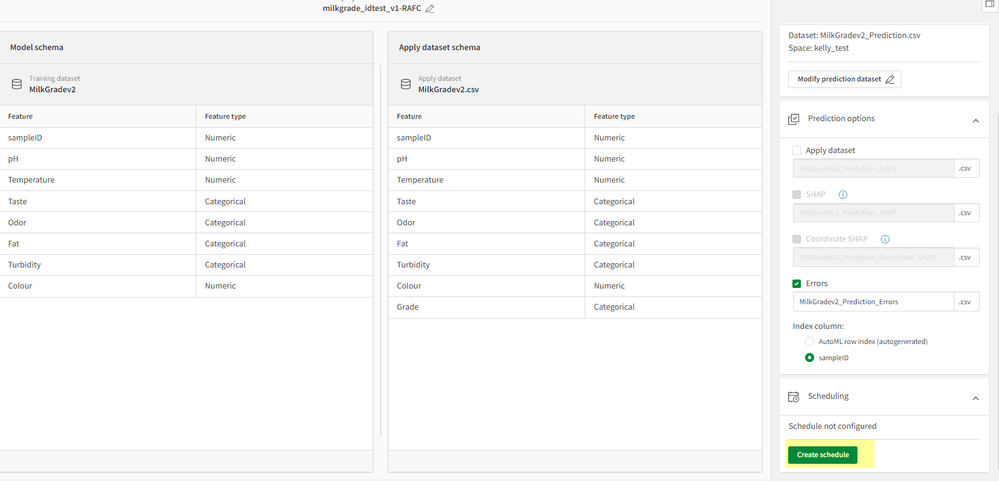

1. Open a deployed model from Qlik Catalog

2. Navigate to 'Dataset predictions' and click on 'Create prediction' on bottom right

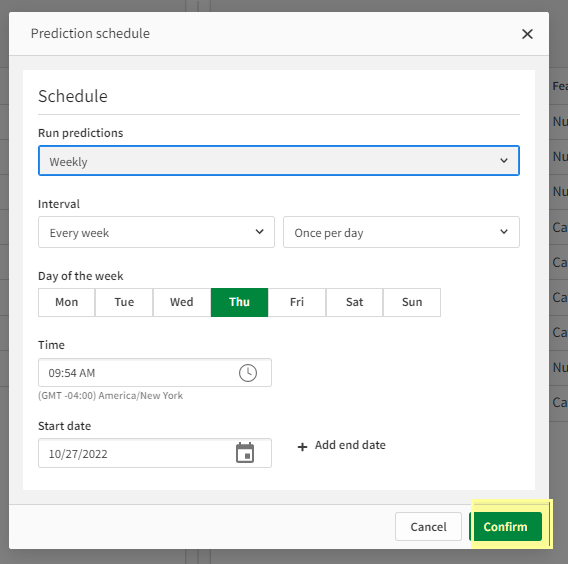

3. Select Apply Dataset, Name prediction datset, select your options, then click on 'Create Schedule'

4. Set your schedule options you would like to follow then click confirm

5. Your options now are to 'Save and close' (this will not run a prediction until the next scheduled) or 'Save and predict now' (this will run a prediction now in addition to the schedule)

Note: Users need to ensure the predicted dataset is updated and refreshed ahead of the prediction schedule.

Environment

The information in this article is provided as-is and to be used at own discretion. Depending on tool(s) used, customization(s), and/or other factors ongoing support on the solution below may not be provided by Qlik Support.